Sonification of an Eye

I have always been fascinated by eyes. The colors, shapes, and textures of a person’s iris are as unique to that individual as their fingerprints, and distinctive enough that biometric systems can reliably tell one person from another.

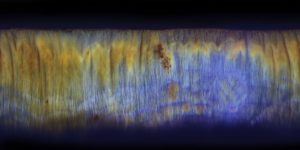

For my project, I set out to sonify the iris: to create an experimental composition based on the characteristics of my own eye. First, I needed to photograph my eye, which I did simply, using a macro lens attachment for my phone. With a base image to work with, I then converted the image of my eye from polar to rectangular coordinates. This “unwraps” the circular form of the eye into a linear landscape. These “unwrapped” eyes remind me somewhat of spectrograms, and that resemblance was one of the observations that inspired this project.
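
For readers curious about the unwrapping step, here is a minimal Python/NumPy sketch of a polar-to-rectangular resampling. It is an illustration, not the tool used for this project; it assumes the eye’s center `(cx, cy)` and the inner and outer radii of the iris are already known, and the function and parameter names are hypothetical. Each row of the output is one radius and each column one angle, so a full rotation around the eye becomes a single left-to-right sweep.

```python
import numpy as np

def unwrap_polar(img, center, r_min, r_max, n_angles=512, n_radii=128):
    """Resample a ring of `img` onto a rectangular (radius x angle) grid.

    Rows run from r_min (top) to r_max (bottom); columns run from angle 0
    to just under 2*pi, so one rotation around the eye is one horizontal
    sweep. Works for grayscale (H, W) or color (H, W, C) arrays.
    Uses nearest-neighbour sampling to keep the sketch short.
    """
    cx, cy = center
    radii = np.linspace(r_min, r_max, n_radii)
    angles = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    # Build a (n_radii, n_angles) grid of sample positions in polar form.
    r, theta = np.meshgrid(radii, angles, indexing="ij")
    xs = np.rint(cx + r * np.cos(theta)).astype(int)
    ys = np.rint(cy + r * np.sin(theta)).astype(int)
    # Clamp so samples near the image border stay in bounds.
    xs = np.clip(xs, 0, img.shape[1] - 1)
    ys = np.clip(ys, 0, img.shape[0] - 1)
    return img[ys, xs]
```

Nearest-neighbour sampling is the simplest choice; bilinear interpolation would give a smoother strip at the cost of a few more lines.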

Next, I needed to sonify this image. How could I read it like a score? I settled on Metasynth, which conveniently includes a whole system for reading images as sound. However, the unprocessed image produced little more than chaotic noise, so to develop the different “instruments” in my composition, I processed the original image heavily.

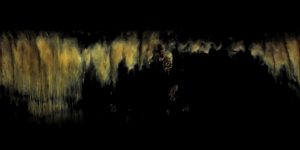

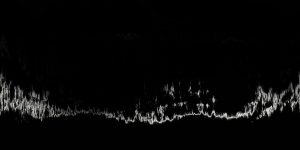

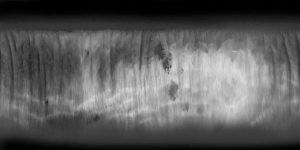

Using ImageJ, an image-processing program developed at the National Institutes of Health, I was able to identify and separate the various features of my eye into several simpler images, which were much easier to transform into sound. Separating the color channels of the image allowed me to create different drones; identifying certain edges, valleys, and fissures allowed me to develop the more “note-like” elements of the composition.
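
The two kinds of processing described above can be approximated outside ImageJ. The sketch below, in Python with NumPy, splits an RGB array into its three channels and builds a crude edge mask from the brightness gradient. The helper names and the threshold are hypothetical, and the gradient method is a generic stand-in, not a reproduction of any ImageJ command.

```python
import numpy as np

def split_channels(rgb):
    """Return the red, green, and blue planes of an (H, W, 3) image as 2-D arrays."""
    return rgb[..., 0], rgb[..., 1], rgb[..., 2]

def edge_mask(gray, threshold):
    """Mark pixels where the brightness gradient magnitude exceeds `threshold`.

    np.gradient uses central differences in the interior and one-sided
    differences at the borders. This is a generic edge detector chosen for
    illustration only.
    """
    gy, gx = np.gradient(gray.astype(float))
    magnitude = np.hypot(gx, gy)
    return magnitude > threshold
```

Each channel plane could then be treated as its own drone layer, and the edge mask as a sparse, note-like layer.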

These are just a few of the layers I created for my soundscape; there were seven in total. In Metasynth, I imported these images and read them like scores. Each layer used a different process, but the general premise was this: I would pass a wave or noise (I chose pink noise for most tracks) into a wavesynth, grainsynth, or sampler. I would then define the size of the image and choose a tone map, which divides the image’s vertical axis into frequencies according to pixel position. For instance, a micro-32 map would divide my 512-pixel-high image into 16 notes. I am still working out exactly how Metasynth works, but from this point the synthesizer can read the image like a score.

Through experimentation, I developed a loop from each of the seven images I had processed from the photograph of my eye. Each loop represented one rotation around the contours of the eye.
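
Metasynth’s internal algorithm is not documented here, but the general idea of reading an image as a score can be sketched with plain additive synthesis: columns are time, bands of rows are pitches, and brightness is loudness. In this hypothetical Python version, the tone map is simply equal-tempered semitones above 110 Hz (an assumption, not Metasynth’s micro-32 map), and with `n_notes=16` a 512-pixel-high image is split into bands of 32 rows, one band per note.

```python
import numpy as np

def band_frequencies(n_notes, base_hz=110.0):
    """Hypothetical tone map: n_notes equal-tempered semitones upward from base_hz."""
    return base_hz * 2.0 ** (np.arange(n_notes) / 12.0)

def image_to_audio(img, n_notes, col_seconds=0.05, sr=22050, base_hz=110.0):
    """Additively synthesize a grayscale (H, W) image read left to right.

    The height is split into n_notes equal bands of rows (bottom band =
    lowest note). Each column lasts col_seconds, and the mean brightness of
    a band in that column sets the amplitude of that band's sine tone.
    Returns a mono signal normalized to a peak of 1 (or all zeros).
    """
    h, w = img.shape
    rows_per_note = h // n_notes
    # Average each band of rows into an (n_notes, w) amplitude matrix,
    # then flip so index 0 is the bottom band (the lowest note).
    bands = img[: rows_per_note * n_notes].reshape(n_notes, rows_per_note, w)
    bands = bands.mean(axis=1)[::-1]
    freqs = band_frequencies(n_notes, base_hz)
    samples_per_col = int(round(col_seconds * sr))
    t = np.arange(w * samples_per_col) / sr
    # Hold each column's amplitudes for the duration of that column.
    amps = np.repeat(bands, samples_per_col, axis=1)
    out = (amps * np.sin(2 * np.pi * freqs[:, None] * t)).sum(axis=0)
    peak = np.max(np.abs(out))
    return out / peak if peak > 0 else out
```

Feeding it an unwrapped eye strip would produce one pass around the eye per call, which is the same “one loop, one rotation” structure described above.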

In Audacity, I mixed all of these loops into an experimental composition. As a visual aid to what is being played, I converted the images back to polar coordinates to produce the video I played in class. The arm sweeping across the image marks the location on the eye being sonified at that moment. However, since I was mixing creatively between layers in the composition (whereas the video simply fades from one image layer to the next), it wasn’t always easy to see the connection between image and sound. A further improvement to this project would be a video in which the opacity of each image layer reflects the exact mixing in the audio track.
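
The improvement suggested above could work roughly like this minimal Python sketch, assuming the per-layer mix levels have been recorded as linear gains for each video frame. Each layer’s opacity is set relative to the loudest layer, the layers are blended by those weights, and the sweeping arm’s angle is derived from the loop length, since each loop is one rotation around the eye. All names are hypothetical, and no particular Audacity export feature is assumed.

```python
import numpy as np

def opacities_from_gains(gains):
    """Convert per-layer linear gains at one video frame into opacities in [0, 1].

    Gains are scaled by the loudest layer, so the most prominent layer is
    fully opaque and silent layers are invisible.
    """
    gains = np.clip(np.asarray(gains, dtype=float), 0.0, None)
    peak = gains.max()
    return gains / peak if peak > 0 else gains

def composite_frame(layers, gains):
    """Blend image layers (each H x W, values in [0, 1]) weighted by their mix gains."""
    alpha = opacities_from_gains(gains)
    stack = np.stack(layers).astype(float)  # shape (n_layers, H, W)
    total = alpha.sum()
    if total == 0:
        return np.zeros(stack.shape[1:])
    # Weighted average: each layer's visibility tracks its loudness in the mix.
    return np.tensordot(alpha, stack, axes=1) / total

def arm_angle(t, loop_seconds):
    """Angle in radians of the sweeping arm at time t, one rotation per loop."""
    return 2.0 * np.pi * (t % loop_seconds) / loop_seconds
```

Rendering one composite per video frame, with the arm drawn at `arm_angle`, would keep the picture in step with both the position on the eye and the balance of layers in the mix.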