Unity 3D, OpenPose, Kinect for Windows v2, Speakers, Acrylic Plastic

Ambient Auditory Experience Emanating Sculpture

Installed at the Basement Stairwell of Hunt Library, Carnegie Mellon University

2019

Individual Project

Rong Kang Chew

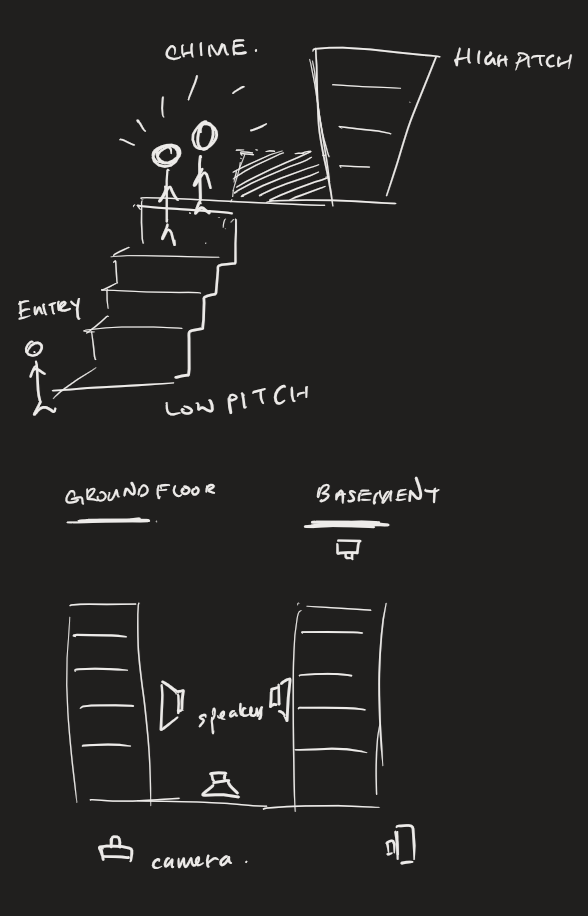

As Hunt Library guests enter the main stairwell, they are greeted with a quiet hum. Something has changed, but they can’t quite see what. The hum changes as they walk along the staircase; they are amused but still curious. The sound becomes lower in pitch as they walk down to the basement. Someone else enters the stairwell and notices the noise too. There is a brief moment of eye contact with another person on the staircase: did they hear that too?

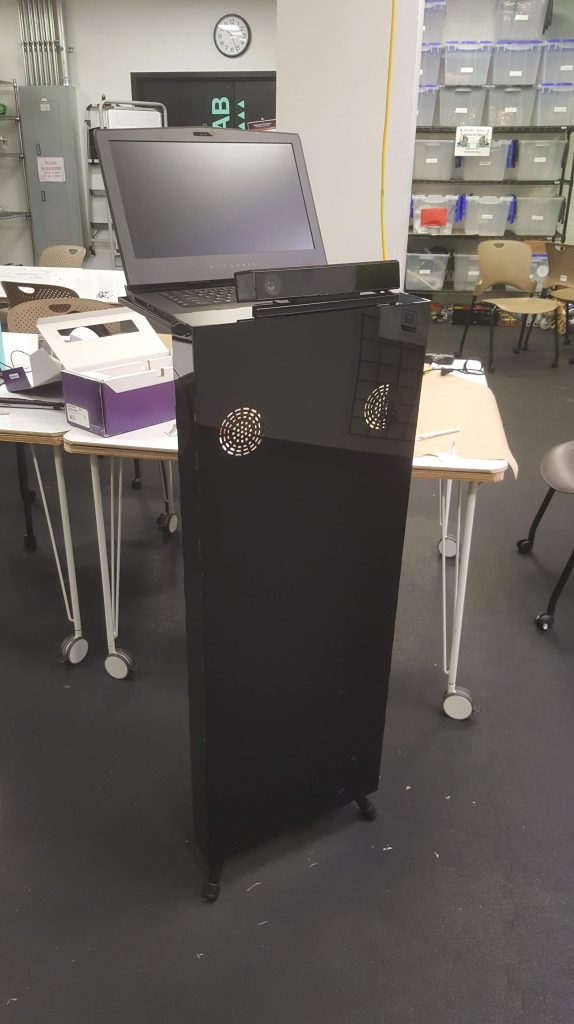

As they reach the bottom and approach the door, they hear another sound, a chime, as if they have reached their destination. Some of those not in a hurry notice a sleek machine, draped in smooth black plastic, next to the doorway. It is watching them, and seems to be the source of the sounds. Some stop to experiment with the machine; others carry on. Either way, the guests leave the staircase, only to return sometime soon.

Process

Staircases are usually shared, cramped spaces that can be uncomfortable: we have to squeeze past people, make uneasy eye contact, or sheepishly ask for help with the door. How can we become aware of our own state of mind, and that of other people, as we move through staircases, and could this awareness make being on a staircase a better experience?

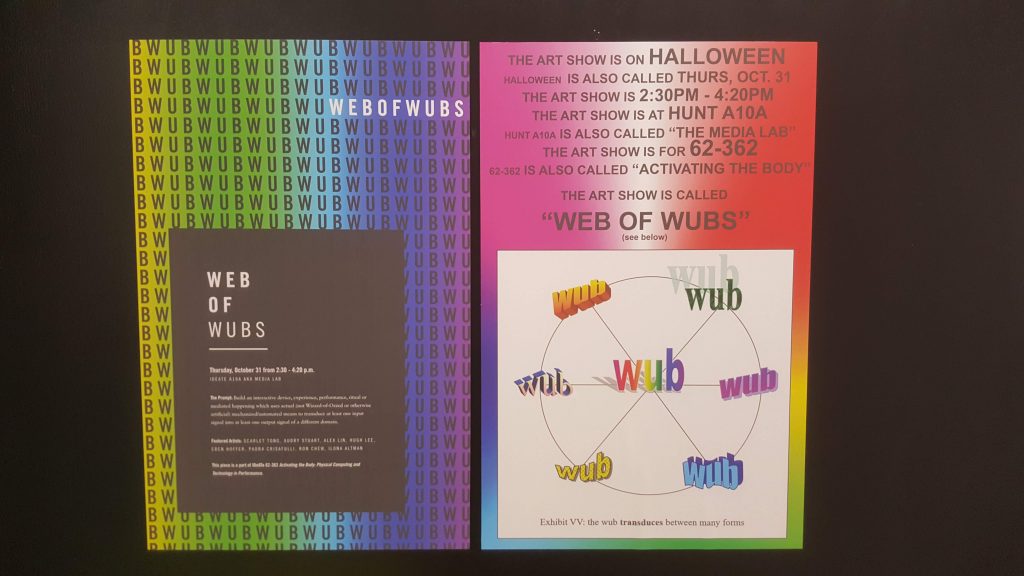

After learning about the underlying concept of the FLOW theme, transduction, or the change between various forms and states, I knew I wanted to apply that concept to an installation that occupied the space of a room. In this case, the room was the stairwell leading to the basement level of Hunt Library at CMU, which was close to the other installations in our show, WEB OF WUBS.

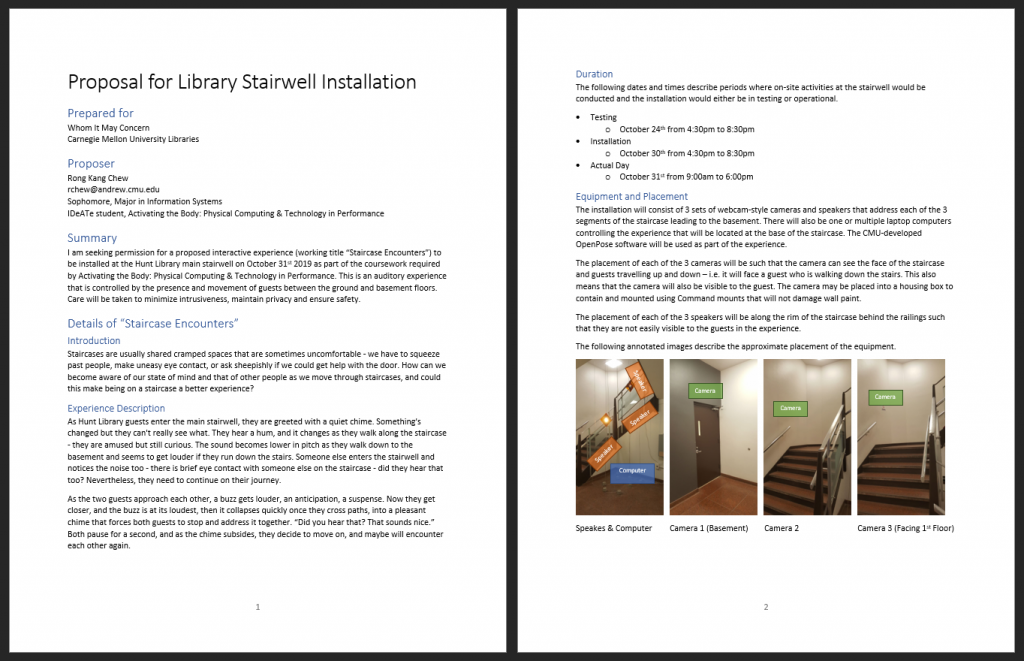

I had to seek permission from CMU Libraries to site my installation in the stairwell, so I wrote a proposal detailing my plans and installation date. Because of the nature and location of the installation, the proposal placed particular emphasis on safety and privacy. I would like to thank my instructor, Heidi, for helping me get the proposal to the right people.

Placement in the stairwell was tricky, as I had to ensure that the cabling and the positions of objects were safe and would not create tripping hazards. I iterated through various webcams and placements of cameras, computers and speakers to find out what worked well for the experience. Eventually, I settled on consolidating the entire installation into a single unit instead of trying to conceal its elements. Some of my earlier onsite testing showed that people didn’t really react to the sound when there was no visual element. This, along with Heidi’s advice, encouraged me to put the installation “out there” so that people could see it, interact with it and perhaps play with it.

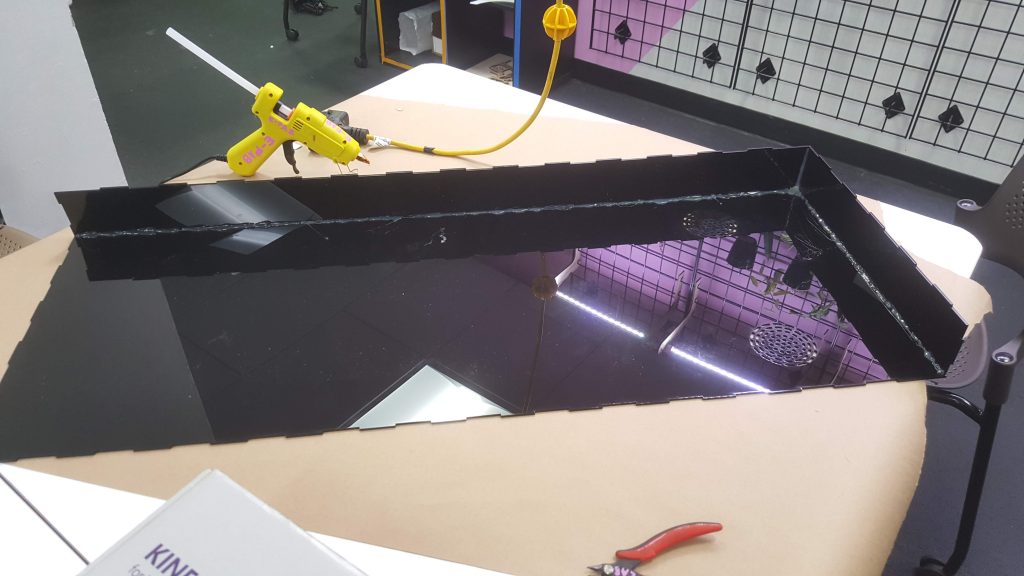

The final enclosure for the sculpture was laser-cut from 1/8″ black acrylic and glued together, with holes for the computer speakers. Unfortunately, I ran out of material, so I left the wiring exposed on the sides. The glue I used allows the enclosure to be taken apart, which leaves an opportunity to improve this in the future.

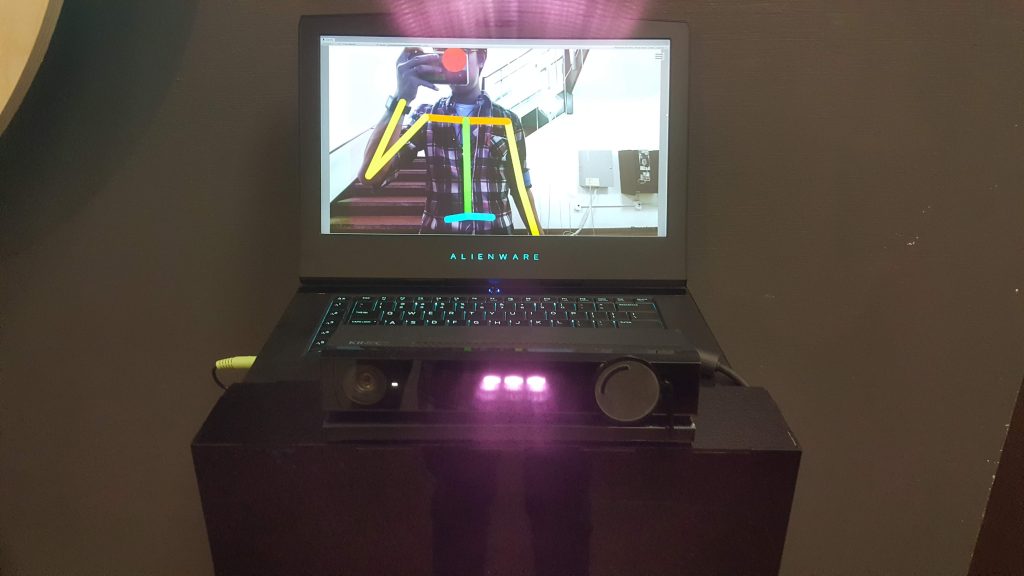

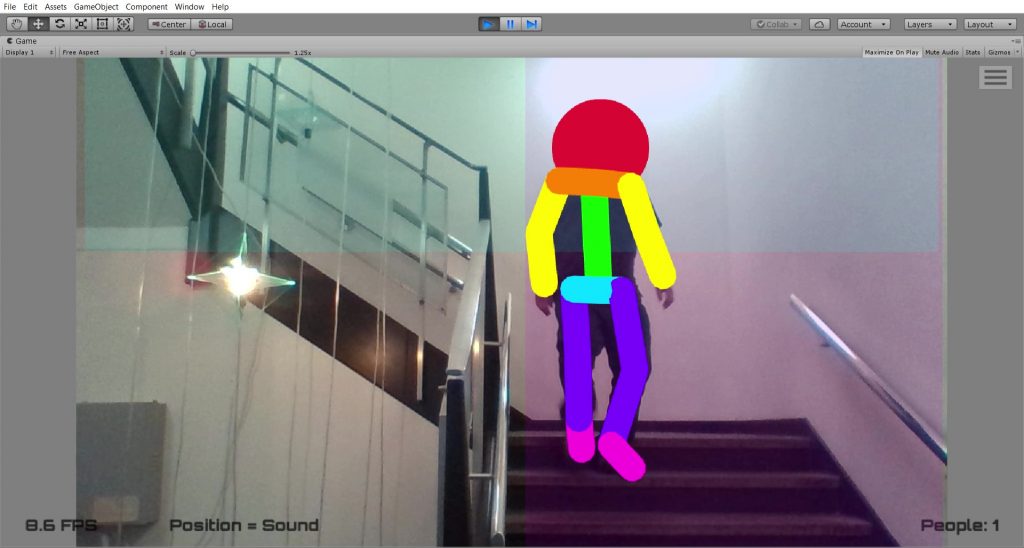

For the software, I used the OpenPose library from the CMU Perceptual Computing Lab, which let me find where people are in a scene. However, it only detects poses in 2D, so I was limited to working with the horizontal and vertical positions of people in the frame. I used the Unity 3D game engine to process this information, taking the average horizontal and vertical positions of people’s heads to adjust the pitch in two “zones” of the staircase (see the end of the post for some code).

X,Y position in zone <==> pitch of sounds for that zone
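As a rough illustration of that mapping, here is a sketch in plain C# (no UnityEngine) that averages one coordinate of every head inside a zone and maps it linearly to a pitch. The Head and ZoneBounds types, the rectangular containment test and the PitchFor helper are hypothetical; the installation itself relied on Unity trigger colliders. Only the Map formula and the default ranges come from the Zone script at the end of the post.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical 2D head position of one detected person, in scene units.
public readonly record struct Head(float X, float Y);

// Hypothetical axis-aligned zone on the staircase, in the same units as Head.
public readonly record struct ZoneBounds(float MinX, float MinY, float MaxX, float MaxY)
{
    public bool Contains(Head h) =>
        h.X >= MinX && h.X <= MaxX && h.Y >= MinY && h.Y <= MaxY;
}

public static class ZonePitch
{
    // Linear re-mapping from [inMin, inMax] to [outMin, outMax],
    // the same formula as map() in the Zone script.
    public static float Map(float x, float inMin, float inMax, float outMin, float outMax) =>
        (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;

    // Averages the chosen coordinate of every head inside the zone and maps it
    // to a pitch. Returns null when nobody is in the zone.
    public static float? PitchFor(IEnumerable<Head> heads, ZoneBounds zone, bool useX,
                                  float min, float max, float minPitch, float maxPitch)
    {
        var inside = heads.Where(h => zone.Contains(h)).ToList();
        if (inside.Count == 0) return null;
        float avg = inside.Average(h => useX ? h.X : h.Y);
        return Map(avg, min, max, minPitch, maxPitch);
    }
}
```

With the script's defaults (min = -60, max = 30, minPitch = 1.5, maxPitch = -0.5), heads at x = -60 and x = 30 average to -15, which maps to a pitch of 0.5. Positions near max map to a negative pitch, which Unity's AudioSource plays back in reverse.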

The sounds used in the experience included some from the Listen to Wikipedia experience by Hatnote, along with spoken phrases generated with Google Cloud Text-to-Speech.

Reflection & Improvements

Much of what I learned from this project came from testing on site, and even so, I don’t think the installation of Staircase Encounters arrived where I actually wanted it to be.

Hidden, Surreal |————————————X——| Explicit, Playful

The key issue was the one I mentioned earlier: how noticeable and interactive did I want my installation to be? In my first tests, it seemed like no one was paying attention to the sounds. By the end, however, I think I had perhaps made the installation too interactive. I received a lot of feedback from guests who expected the sounds to react more to their movements, especially since they could see all of their limbs being tracked.

Given more time, I could have added more parameters to how the music reacts to guests, e.g. speed of movement, “excitedness” of limbs, and encounters with other guests. As it was, the visual element led to engagement that was not followed up, which was a little disappointing, like a broken toy.
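One way speed of movement could feed into the sound is sketched here. It is hypothetical and was not part of the installation: it assumes one head position per frame in arbitrary scene units, estimates speed from consecutive positions, smooths it with an exponential moving average, and scales it to a volume between 0 and 1. SpeedToVolume, Alpha and MaxSpeed are invented names, and their defaults are placeholders that would need tuning on site.

```csharp
using System;

// Hypothetical extra parameter: turn how fast a tracked head moves into a volume.
public sealed class SpeedToVolume
{
    private float lastX, lastY;
    private bool hasLast;
    private float smoothedSpeed;

    // Smoothing factor of the exponential moving average (placeholder value).
    public float Alpha { get; init; } = 0.2f;

    // Speed, in units per second, at which the volume saturates (placeholder value).
    public float MaxSpeed { get; init; } = 200f;

    // Feed one head position and the seconds since the previous one;
    // returns a volume in [0, 1]. The first sample only primes the tracker.
    public float Update(float x, float y, float dt)
    {
        if (hasLast && dt > 0f)
        {
            float dx = x - lastX, dy = y - lastY;
            float speed = MathF.Sqrt(dx * dx + dy * dy) / dt;
            smoothedSpeed += Alpha * (speed - smoothedSpeed);
        }
        lastX = x;
        lastY = y;
        hasLast = true;
        return Math.Clamp(smoothedSpeed / MaxSpeed, 0f, 1f);
    }
}
```

In Unity, the returned value could be assigned to an AudioSource's volume each frame, passing Time.deltaTime as dt.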

My key learning from Staircase Encounters is to test and think clearly about the experience. It is easy to become fixated on building, but hard to stay objective, unemotional and measured about the experience and the end product, especially when the build is rushed.

Code

Here is the code for the pink and blue “zones”, which track people as they enter and move through them and update the sounds accordingly.

```csharp
using System.Collections.Generic;
using System.Linq;
using UnityEngine;

// A trigger zone (pink or blue) on the staircase. While tracked heads are inside it,
// their position along one axis is smoothed and mapped to the pitch of the zone's sound.
public class Zone : MonoBehaviour
{
    public enum Axis
    {
        X, Y
    }

    // Sound prefabs (each with an AudioSource); soundMode picks which one to spawn.
    public List<GameObject> soundsToSpawn;

    // Range of positions along the chosen axis...
    public float min = -60;
    public float max = 30;

    // ...and the pitch range it maps to (minPitch at min, maxPitch at max).
    public float minPitch = 1.5f;
    public float maxPitch = -0.5f;

    public Axis axisToUse = Axis.X;

    // Rolling window of recent positions, used to smooth out jitter in the pose estimates.
    private Queue<float> positions;
    private int queueSize = 20;

    private AudioSource sound;
    private float timeStarted = 0;
    private bool played;
    private int soundMode = 0;

    void Start()
    {
        positions = new Queue<float>();
    }

    void Update()
    {
        // Clean up a finished sound (at least 1 s old) so the next visitor triggers a fresh one.
        if (sound != null && played && !sound.isPlaying && Time.time - timeStarted > 1)
        {
            Destroy(sound.gameObject);
            played = false;
            sound = null;
            positions.Clear(); // don't carry the previous visitor's positions over
        }

        // Keys 0 and 1 switch between the two sets of sounds.
        if (Input.GetKeyDown("0"))
        {
            soundMode = 0;
        }
        if (Input.GetKeyDown("1"))
        {
            soundMode = 1;
        }
    }

    void OnTriggerEnter2D(Collider2D col)
    {
        //Debug.Log(gameObject.name + " entered: " + col.gameObject.name + " : " + Time.time);
        if (sound == null && soundMode < soundsToSpawn.Count)
        {
            timeStarted = Time.time;
            sound = Instantiate(soundsToSpawn[soundMode]).GetComponent<AudioSource>();
            sound.Play();
            played = true;
        }
    }

    void OnTriggerStay2D(Collider2D col)
    {
        if (sound == null)
        {
            return;
        }

        // RectTransform derives from Transform, so col.transform gives the same position
        // and also works for colliders without a RectTransform.
        Vector3 pos = col.transform.position;
        float point = axisToUse == Axis.X ? pos.x : pos.y;

        while (positions.Count >= queueSize)
        {
            positions.Dequeue();
        }
        positions.Enqueue(point);

        // Map the smoothed (averaged) position, not the raw one, to the pitch.
        float avgPoint = positions.Average();
        //Debug.Log("Avg value of " + this.gameObject.name + " to " + avgPoint + " : " + Time.time);
        sound.pitch = map(avgPoint, min, max, minPitch, maxPitch);
    }

    // Linearly re-maps x from [in_min, in_max] to [out_min, out_max].
    static float map(float x, float in_min, float in_max, float out_min, float out_max)
    {
        return (x - in_min) * (out_max - out_min) / (in_max - in_min) + out_min;
    }
}
```
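The smoothing step in OnTriggerStay2D, a queue of the last 20 positions and their average, does not depend on Unity, so it can be pulled out and checked on its own. This is a minimal sketch in plain C#; RollingAverage is a hypothetical name, and the default window of 20 mirrors queueSize in the script.

```csharp
using System.Collections.Generic;
using System.Linq;

// Keeps the last N samples and reports their mean, so a single noisy
// pose estimate cannot jerk the pitch around.
public sealed class RollingAverage
{
    private readonly Queue<float> values = new Queue<float>();
    private readonly int capacity;

    public RollingAverage(int capacity = 20)
    {
        if (capacity < 1) throw new System.ArgumentOutOfRangeException(nameof(capacity));
        this.capacity = capacity;
    }

    // Adds a sample, dropping the oldest ones once the window is full,
    // and returns the mean of the samples currently in the window.
    public float Add(float value)
    {
        while (values.Count >= capacity) values.Dequeue();
        values.Enqueue(value);
        return values.Average();
    }

    // Empties the window, e.g. between visitors.
    public void Clear() => values.Clear();
}
```

Keeping the window in a small class of its own also makes it simple to reset between visitors, for example when a zone's sound finishes playing.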
