Processing, Kinect for Windows v2

Wood Frame, Mylar

Digital Capture & Projection, Sculpture

Shown at Every Possible Utterance, MuseumLab, December 2019

2019

Individual Project

Ron Chew

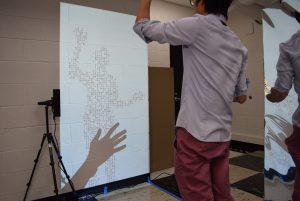

A large silver prism stands in the corner, almost as tall as a human. Light seems to emanate from it as it reflects your presence, and it projects something onto the facing wall. The projection, along with a set of digital footprints, prompts you to stand in front of it. The display reacts to you and starts to scan your body as it attempts to digitize and codify it. It seems to be applying something to your body: a story! The words appear within your silhouette. It is hard to make out the entire story, so you move around trying to read it. You leave wondering if the story was chosen for you to embody.

Project Statement

Humans are the most complex machines, and every effort has been made to understand, uncomplicate and replicate our brains. Turning our behavior and personality into something that other machines can begin to mimic has been the ultimate goal.

What if we were truly successful? What if we had a Human Codifier that understood every facet of us: our true cornerstones, motivations and beliefs? Could we use that to configure a Human Operating System that would perfectly recreate us within its properties and subroutines?

FutureTech Corporation, in collaboration with the Library of Babel, invites you to experience the personalities of Every Possible Utterance, as captured by the Human Codifier. In this experimental procedure, the codified experiences, thoughts and occasional ramblings of other humans will be grafted onto your digital shadows. Through this fully non-invasive installation of other humans onto yourself, you may experience euphoria, epiphanies and emotional changes.

This installation seeks to understand the abstraction of people and their personalities through stories and textual representations, by projecting these stories onto a captured silhouette of each guest. Is this how we might embody other people’s stories? How much of their original meaning do we apply to ourselves?

Concept

The prompt for this piece was ARROWS, which captured the complexity in computers where there is direction and indirection of meaning, from simple-looking code to complex code, and many disparate pieces coming together to create a whole system. One thing unique about this prompt was that our pieces would be shown live during a public show on the night of December 13, 2019.

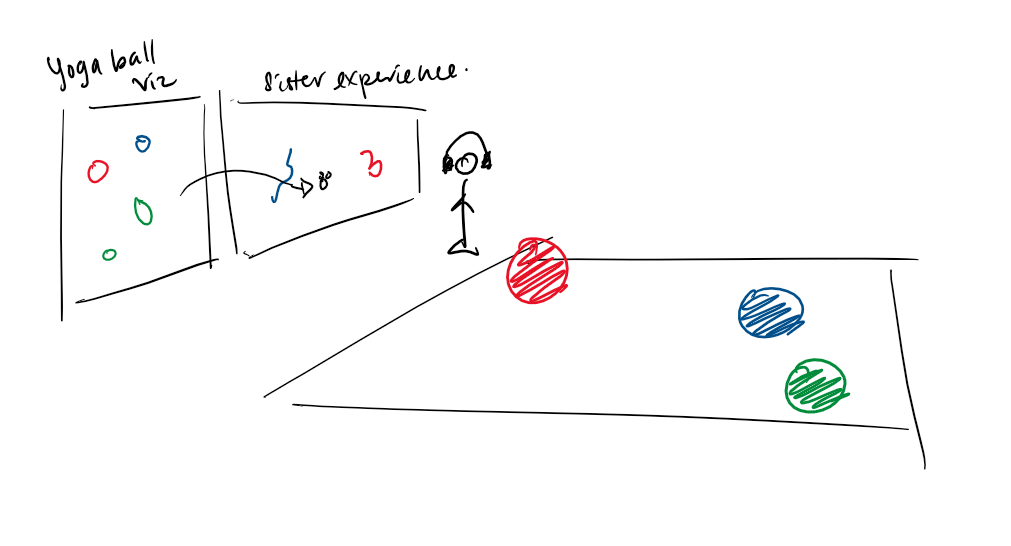

I initially had two ideas, one for each theme. For the first theme, the “Emergent Complexity” of a system of pieces, I considered working alongside another artist in the Exploded Ensemble (experimental music) class who wanted to translate the position of yoga balls in a large plaza into music. I would capture the positions of the balls and generate units in a system that would change over the course of the night.

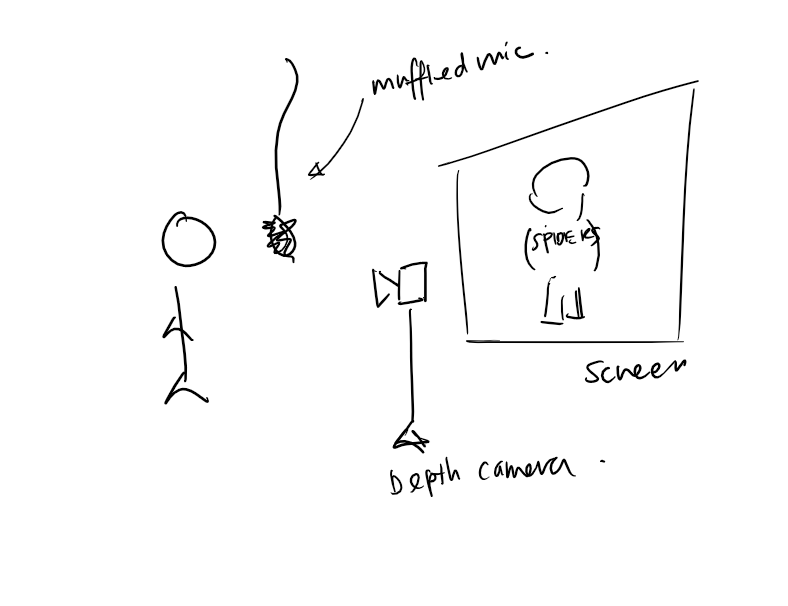

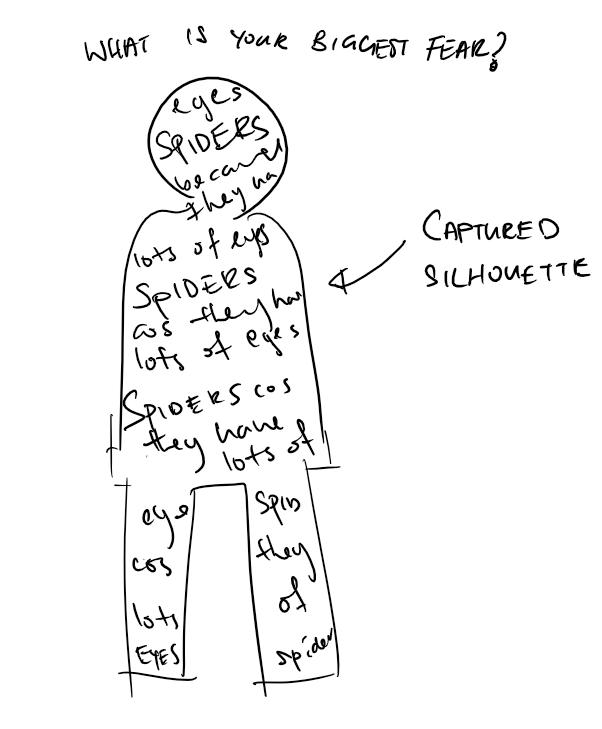

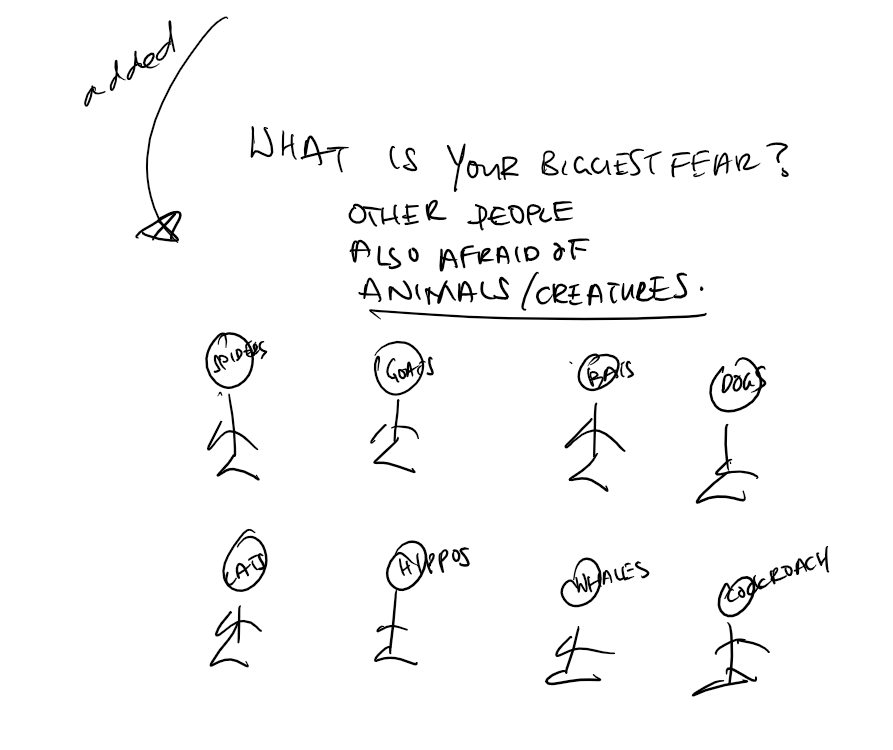

The other idea, about abstraction and complexity, revolved around abstracting humans into the stories and words we use to describe ourselves. I would capture the silhouette of each guest along with a story they told, and that story would fill their silhouette, making them “eat their words”. There would also be some comparison between what they said and what others said in response to the same prompt, e.g. showing who else responded “animals” when asked “What is your biggest fear?”

Due to tight timelines it was not feasible to work on a collaborative piece for the show – I decided to work on the latter idea of presenting an abstraction of a human being as words – simplifying the idea so that there was not a need to capture words live at the show, which would likely have reliability issues.

Building: Software

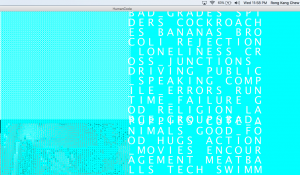

I decided to learn a new platform to build this experience: Processing. While it is relatively simple compared with other software platforms, it was still new to me, and it had a good repository of Kinect examples. I worked my way through different representations of the depth information: first thresholding the depth to a specific range to isolate the human, then converting the image to an ASCII representation, and finally filling the space with specific words.
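The first two steps of that progression (depth thresholding, then an ASCII representation) can be sketched roughly as follows. This is a minimal illustration in plain Java rather than the installation's Processing code: the class `DepthAscii`, its method names and the short density ramp are all hypothetical, and depth values are assumed to be in millimetres, as in a Kinect-style raw depth array.

```java
class DepthAscii {
    // Hypothetical density ramp, sparsest to densest glyph (not the ramp used in the piece).
    static final String RAMP = ".:-=+*#%@";

    /** Marks the pixels whose depth (assumed mm) lies within [minDepth, maxDepth]. */
    static boolean[] thresholdDepth(int[] rawDepth, int minDepth, int maxDepth) {
        boolean[] mask = new boolean[rawDepth.length];
        for (int i = 0; i < rawDepth.length; i++) {
            mask[i] = rawDepth[i] >= minDepth && rawDepth[i] <= maxDepth;
        }
        return mask;
    }

    /** Turns in-range pixels into ramp characters; nearer pixels get denser glyphs. */
    static String toAscii(int[] rawDepth, int width, int minDepth, int maxDepth) {
        boolean[] mask = thresholdDepth(rawDepth, minDepth, maxDepth);
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < rawDepth.length; i++) {
            if (mask[i]) {
                // Normalise depth to 0..1, where 1 means closest to the sensor.
                float nearness = 1f - (rawDepth[i] - minDepth) / (float) (maxDepth - minDepth);
                int idx = Math.round(nearness * (RAMP.length() - 1));
                out.append(RAMP.charAt(idx));
            } else {
                out.append(' '); // background: outside the depth window
            }
            if ((i + 1) % width == 0) out.append('\n'); // end of an image row
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // A tiny 3x2 "depth image" with made-up values.
        int[] depth = {0, 100, 950, 1800, 2500, 4000};
        System.out.print(toAscii(depth, 3, 100, 1800));
    }
}
```

The same mask could feed any later representation, so thresholding is kept as its own step here instead of being folded into the character mapping.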

One of the issues was the lack of skeletal tracking, which would have been useful for introducing more interesting interactions and for better isolating the body shape. Unfortunately, the library that provides it, SimpleOpenNI, required a Mac with full administrator privileges to build, which I did not have at the time. I decided to use the openkinect-processing library instead, which offered depth information but no skeletal tracking.

My first iteration of the display was simplistic and only showed single words according to a certain theme. It worked well enough, but the meaning of the piece could not be found in the relatively random words. For the second iteration, I formed actual stories within the body, but the small, constantly moving text was difficult to read.

For the final version of the software, a collection of texts from Cloud Atlas, Zima Blue, and additional quotes/statements from students in the IDeATe classes on show was displayed in a static format in front of the user. The display automatically rotated between different displays and texts – slowly abstracting the user from an image of themselves to the actual story. Additional text prompted users to stand in front of the display.
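One way to keep story text static while the silhouette moves is to give every cell of a character grid a fixed story character and reveal it only where the body mask is set. The sketch below illustrates that idea in plain Java. It is an assumption about how such a display could work, not the installation's actual drawing code, and the `StoryFill` class and `fill` method are hypothetical names.

```java
class StoryFill {
    /**
     * Lays the story out on a fixed grid of cells and shows a character only
     * where the silhouette mask is true, so the text itself never moves.
     */
    static String fill(boolean[] mask, int cols, String story) {
        StringBuilder out = new StringBuilder();
        if (story.isEmpty()) return out.toString(); // nothing to draw
        for (int i = 0; i < mask.length; i++) {
            // Each cell always owns the same story character (wrapping around).
            char c = story.charAt(i % story.length());
            out.append(mask[i] ? c : ' ');
            if ((i + 1) % cols == 0) out.append('\n'); // end of a grid row
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // A made-up 3x2 mask with the body occupying the right two columns.
        boolean[] mask = {false, true, true, false, true, true};
        System.out.print(fill(mask, 3, "HUMAN"));
    }
}
```

Because the character assigned to a cell depends only on the cell's position, a guest who shifts sideways uncovers different parts of the same text, which matches the experience of moving around to read the story.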

The following resources were helpful in my development:

Building: Sculpture

To hide the raised projector creating the display, I decided to build a simple sculpture that would conceal it while fitting the theme. I received a great suggestion from my instructors and classmates to use Mylar as a reflective surface, creating a sci-fi-like obelisk with a slightly imposing presence.

With the guidance of my instructor Zach, a wooden frame for the prism was built, onto which the Mylar was stapled and mounted. A wooden mount was also created for the projector so that it could be held vertically without obstructing its heat exhaust vents; blocked vents had caused an overheating issue during one of the critiques.

Overall, this construction went well: it effectively hid the short-throw projector and increased the focus and immersiveness of the display. With a physical presence to the installation, I decided to call it “The Human Codifier”, a machine that codified humans and applied stories to them.

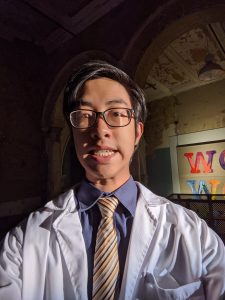

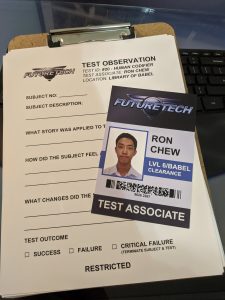

Performance Day

To provide some guidance to guests during the display, I decided to add a performative aspect to the installation in which I played a “Test Associate” from FutureTech Corporation, who was conducting testing in collaboration with the Library of Babel (the thematic location of the show). The character was complete with a lab coat, security badge and clipboard. As the test associate, I gave guests instructions if they were lost and conducted one-question interviews at the end of their interaction.

I felt that the character was useful in completing the piece with a connection to the real world, instead of leaving it as a fully self-guided experience. It was also great to be able to talk to guests and figure out how they felt about the installation.

The position I took up in the MuseumLab for the show was a small nook in the gallery space on the 2nd floor, which I found after scouting the space. It was cozy and perhaps private enough for a single person to experience. The only small tweak I would make about the showing would be that my spot ended up a little poorly lit and perhaps a little hard to get to in the space.

Reflection

Although the actual interaction of this piece was simple, I felt that the overall scale of the show and achieving a meaningful experience were the most challenging aspects of this project. Having the additional time and iterations did help me home in on the exact experience I wanted: a good handful of guests tried to read the stories and dissect their meaning. However, the content of the stories could have been refined further. As for the overall show, I felt that it was a great success, and it was a first for me to present to a public audience of 100+ people.

Last of all, I am most thankful to have been able to learn along this journey with my classmates Alex, Scarlett, Ilona, Padra, Hugh and Eben, and I appreciate all the guidance provided by my instructors Heidi and Zach. Having a close group of friends to work with, bounce ideas off and get critique from was immensely helpful, and I could not have done it without them.

Other mentions: moving day, after a day of setting up, tearing down 🙁

Additional thanks to Christina Brown for photography, Jacquelyn Johnson for videography, and my friends Brandon and Zoe for some photographs.

Code

Here is the Processing code that I used, alongside the openkinect-processing library as mentioned earlier.

```processing
import org.openkinect.processing.*;

Kinect2 kinect2;

// Depth image
PImage targetImg, inputImg, resizeImg;
int[] rawDepth;

// Which pixels do we care about?
int minDepth = 100;
int maxDepth = 1800; //1.8m

// What is the kinect's angle
float angle;

// Density characters for ascii art
String letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ!@#$%^&*()-=_+{}[];':,./<>?`~";
String asciiWeights=".`-_':,;^=+/\"|)\\<>)iv%xclrs{*}I?!][1taeo7zjLunT#JCwfy325Fp6mqSghVd4EgXPGZbYkOA&8U$@KHDBWNMR0Q";

String[] stories = {
  "To be is to be perceived, and so to know thyself is only possible through the eyes of the other. The nature of our immortal lives is in the consequences of our words and deeds, that go on and are pushing themselves throughout all time. Our lives are not our own. From womb to tomb, we are bound to others, past and present, and by each crime and every kindness, we birth our future."
  , "I understand now that boundaries between noise and sound are conventions. All boundaries are conventions, waiting to be transcended. One may transcend any convention if only one can first conceive of doing so. My life extends far beyond the limitations of me."
  , "I'll die? I'm going to die anyway, so what difference does it make? Sometimes, it is difficult even for me to understand what I've become. And harder still to remember what I once was. Life is precious. Infinitely so. Perhaps it takes a machine intelligence to appreciate that."
  , "I think I know what I'm passionate about. But is that really true? I don't want to be too much of one thing, in computer science or art, but yet I still want to be an expert at what I can be. They say that I should not be closing too many doors, but it takes so much energy to keep them open too."
  , "I would want the machine to capture the connection I share with others: my family, my friends, my mentors. Ideally, it would describe my flaws as well: my insecurities, my difficulty expressing emotions, my past mistakes. Perhaps if these aspects of me were permanently inscribed in the Library of Babel, a reader would come to understand what I value above all else and what imperfections I work to accept."
  , "Loneliness, the hidden greed I possess, and maybe what happens behind the many masks I have. to the point it might seem like I don’t know who I am."
  , "To know 'mono no aware' is to discern the power and essence, not just of the moon and the cherry blossoms, but of every single thing existing in this world, and to be stirred by each of them."
};

String[] titles = {
  "The Orison"
  , "Boundaries are Conventions"
  , "The Final Work"
  , "Balancing my Dreams"
  , "Inscribing Myself to the Machine"
  , "When Knowing = Not Knowing"
  , "The Book of Life"
};

String[] authors = {
  "Somni-451"
  , "Robert Frobisher"
  , "Zima Blue"
  , "Ron Chew"
  , "Alex Lin"
  , "Lightslayer"
  , "Motoori Norinaga"
};

String[] modeText = {
  "Scanning for:"
  , "Digitizing:"
  , "Codifying:"
  , "Applying Story:"
  , "Application Complete."
};

String[] targetText = {
  "HUMANS..."
  , "HUMAN_BODY..."
  , "DIGITAL_BODY..."
  , "STORY_HERE"
  , "STORY_HERE"
};

int drawMode = 0;
// 0 - full color with depth filter
// 1 - pixelize to squares
// 2 - decolorize to single color
// 3 - small text with depth
// 4 - story text without depth

color black = color(0, 0, 0);
color white = color(255, 255, 255);
color red = color(255, 0, 0);
color magenta = color(255, 0, 255);
color cyan = color(0, 255, 255);

color BACKGROUND_COLOR = black;
color FOREGROUND_COLOR = white;

int modeTimer = 0;
int indexTimer = 0;
int index = 0;
String currentText;

Boolean movingText = false;
int largerWidth = 1300;
int yPos = -200;

PFont font;

void setup() {
  size(3000, 1000);

  kinect2 = new Kinect2(this);
  kinect2.initVideo();
  kinect2.initDepth();
  kinect2.initIR();
  kinect2.initRegistered();
  kinect2.initDevice();

  // Blank image
  targetImg = new PImage(kinect2.depthWidth, kinect2.depthHeight);
  inputImg = new PImage(kinect2.depthWidth, kinect2.depthHeight);

  currentText = stories[0];
  drawMode = 0;
}

void draw() {
  background(BACKGROUND_COLOR);

  // text switcher: move to the next story every 60 seconds
  if (millis() - indexTimer >= 60000) {
    index++;
    if (index >= stories.length) {
      index = 0;
    }
    currentText = stories[index];
    indexTimer = millis();
  }

  // mode switcher: modes 0-3 advance every 5 seconds
  if (drawMode <= 3 && millis() - modeTimer >= 5000) {
    drawMode++;
    modeTimer = millis();
  }
  // mode 4 holds for 40 seconds, then the cycle restarts
  if (drawMode <= 4 && millis() - modeTimer >= 40000) {
    drawMode = 0;
    modeTimer = millis();
  }

  if (drawMode >= 3) {
    targetText[3] = titles[index] + " : " + authors[index] + "...";
    targetText[4] = titles[index] + " : " + authors[index];
  }

  // START DRAWING!
  if (drawMode < 3) {
    targetImg = kinect2.getRegisteredImage();
    rawDepth = kinect2.getRawDepth();
    for (int i=0; i < rawDepth.length; i++) {
      if (rawDepth[i] >= minDepth && rawDepth[i] <= maxDepth) {
        inputImg.pixels[i] = targetImg.pixels[i];
      } else {
        inputImg.pixels[i] = BACKGROUND_COLOR;
      }
    }
  } else {
    targetImg = kinect2.getDepthImage();
    rawDepth = kinect2.getRawDepth();
    for (int i=0; i < rawDepth.length; i++) {
      if (rawDepth[i] >= minDepth && rawDepth[i] <= maxDepth) {
        inputImg.pixels[i] = FOREGROUND_COLOR;
```

- </span><span class="pun">}</span><span class="pln"> </span><span class="kwd">else</span><span class="pln"> </span><span class="pun">{</span><span class="pln">

- inputImg</span><span class="pun">.</span><span class="pln">pixels</span><span class="pun">[</span><span class="pln">i</span><span class="pun">]</span><span class="pln"> </span><span class="pun">=</span><span class="pln"> BACKGROUND_COLOR</span><span class="pun">;</span><span class="pln">

- </span><span class="pun">}</span><span class="pln">

- </span><span class="pun">}</span><span class="pln">

- </span><span class="pun">}</span><span class="pln">

  inputImg.updatePixels();
  resizeImg = inputImg.get();
  resizeImg.resize(0, largerWidth);
  //image(resizeImg, kinect2.depthWidth, yPos);
  switch(drawMode) {
  case 0:
    image(resizeImg, kinect2.depthWidth, yPos);
    break;
  case 1:
    pixelateImage(resizeImg, 10, kinect2.depthWidth, yPos);
    break;
  case 2:
    ASCII_art(resizeImg, 20, 15, kinect2.depthWidth, yPos);
    break;
  case 3:
    rando_art(resizeImg, 25, 20, kinect2.depthWidth, yPos);
    break;
  case 4:
    Story_art(resizeImg, currentText, 30, 40, 17, FOREGROUND_COLOR, BACKGROUND_COLOR, kinect2.depthWidth, yPos);
    break;
  }

  // DEBUG TEXT
  fill(FOREGROUND_COLOR);
  //text("THRESHOLD: [" + minDepth + ", " + maxDepth + "]", 10, 36);
  // PRINT TEXT
  fill(FOREGROUND_COLOR);
  pushMatrix();
  translate(450, 700);
  rotate(-HALF_PI);
  textAlign(LEFT);
  // NOTE: loadFont() runs on every frame here; loading both fonts once in setup() would be cheaper
  font = loadFont("HelveticaNeue-Bold-48.vlw");
  textFont(font);
  text(modeText[drawMode], 0, 0);
  font = loadFont("HelveticaNeue-Bold-24.vlw");
  textFont(font);
  text(targetText[drawMode], 0, 50);
  popMatrix();
}

// Fill the silhouette with the characters of a story, one character per grid cell
void Story_art(PImage input, String output, int TextSize, int xSpace, int ySpace, color target, color bg, int startX, int startY) {
  textAlign(CENTER, CENTER);
  strokeWeight(50);
  textSize(TextSize);
  int textIndex = 0;
  // walk each column from bottom to top
  for (int x = 0; x < input.width; x += xSpace) {
    for (int y = input.height - 1; y > 0; y -= ySpace) {
      // get a grayscale color to determine color intensity
      color C = input.get(x, y);
      color greyscaleColor = int(0.299 * red(C) + 0.587 * green(C) + 0.114 * blue(C));
      // map grayscale color to intensity of target color
      // (currently unused: the fill(quant) call below is commented out)
      color quant = int(map(greyscaleColor, 0, 255, bg, target));
      //fill(quant);
      fill(input.get(x, y));
      // draw, but rotated
      pushMatrix();
      translate(x + startX, y + startY);
      rotate(-HALF_PI);
      text(output.charAt(textIndex), 0, 0);
      popMatrix();
      //text(output.charAt(textIndex),x + startX, y + startY);
      // advance through the story and wrap around at the end
      textIndex++;
      if (textIndex == output.length()) {
        textIndex = 0;
      }
    }
  }
}

void ASCII_art(PImage input, int textSize, int spacing, int startX, int startY) {
  textAlign(CENTER, CENTER);
  strokeWeight(50);
  textSize(textSize);
  //transformation from grayscale to ASCII art
  for (int y = 0; y < input.height; y += spacing) {
    for (int x = 0; x < input.width; x += spacing) {
      //remap the grayscale color to printable character
      color C = input.get(x, y);
      color greyscaleColor = int(0.299 * red(C) + 0.587 * green(C) + 0.114 * blue(C));
      int quant = int(map(greyscaleColor, 0, 255, 0, asciiWeights.length() - 1));
      fill(input.get(x, y));
      // draw, but rotated
      pushMatrix();
      translate(x + startX, y + startY);
      rotate(-HALF_PI);
      text(asciiWeights.charAt(quant), 0, 0);
      popMatrix();
    }
  }
}

void rando_art(PImage input, int textSize, int spacing, int startX, int startY) {
  textAlign(CENTER, CENTER);
  strokeWeight(50);
  textSize(textSize);
  //fill the image with random characters, colored by the source pixel
  for (int y = 0; y < input.height; y += spacing) {
    for (int x = 0; x < input.width; x += spacing) {
      //just get a random character
      fill(input.get(x, y));
      // draw, but rotated
      pushMatrix();
      translate(x + startX, y + startY);
      rotate(-HALF_PI);
      // index by the string's actual length instead of a hard-coded 55
      text(letters.charAt(int(random(letters.length()))), 0, 0);
      popMatrix();
    }
  }
}

// Adjust the depth threshold min and max, toggle moving text, and select the draw mode
void keyPressed() {
  if (key == 'a') {
    minDepth = constrain(minDepth + 100, 0, maxDepth);
  } else if (key == 's') {
    minDepth = constrain(minDepth - 100, 0, maxDepth);
  } else if (key == 'z') {
    maxDepth = constrain(maxDepth + 100, minDepth, 1165952918);
  } else if (key == 'x') {
    maxDepth = constrain(maxDepth - 100, minDepth, 1165952918);
  } else if (key == 'm') {
    movingText = !movingText;
  } else if (key >= '0' && key <= '9') {
    int mode = Character.getNumericValue(key);
    // ignore digits that have no matching entry in the mode label arrays
    if (mode < modeText.length && mode < targetText.length) {
      drawMode = mode;
    }
  }
}
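The brightness-to-character step inside ASCII_art can also be tried on its own, outside Processing. What follows is a minimal plain-Java sketch of that idea, not the sketch's actual code: the class name `AsciiRamp`, the `RAMP` string and the helper names are assumptions made for illustration (the real `asciiWeights` string is defined elsewhere in the sketch), and the luminance here is rounded rather than truncated so that pure white reliably lands on 255.

```java
class AsciiRamp {
    // Hypothetical ramp, darkest to brightest; stands in for the sketch's asciiWeights.
    static final String RAMP = " .:-=+*#%@";

    // Weighted grayscale using the same 0.299 / 0.587 / 0.114 factors as the sketch.
    // Assumption: rounds to the nearest int instead of truncating like int().
    static int luminance(int r, int g, int b) {
        return (int) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
    }

    // Linearly map 0..255 onto 0..ramp.length()-1 and truncate,
    // mirroring int(map(v, 0, 255, 0, n - 1)).
    static char glyphFor(int lum, String ramp) {
        int index = (int) (lum / 255.0 * (ramp.length() - 1));
        return ramp.charAt(index);
    }

    public static void main(String[] args) {
        // Print the glyph chosen for black, mid grey and white.
        System.out.println("[" + glyphFor(luminance(0, 0, 0), RAMP) + "]");
        System.out.println("[" + glyphFor(luminance(128, 128, 128), RAMP) + "]");
        System.out.println("[" + glyphFor(luminance(255, 255, 255), RAMP) + "]");
    }
}
```

Because the index is truncated, only a luminance of exactly 255 reaches the last character of the ramp; a longer ramp gives finer steps in between.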
