Team: Yu Jiang & Max Kornyev

Abstract

Our original goal for the maze device was to create an interactive experience for the user, mainly through a unique mechanical design and a nontrivial set of control methods. But at a time when we’re often apart from our friends, we expanded the project into a remote marble maze experience: players can use their maze locally, switch game modes, and then let their remote friends take control.

The work done in this project is a great example of how microcontrollers and the network can transform a game that is usually played alone into a truly novel social experience.

Objectives

Our project goals first involved prototyping a Fusion360 maze design, laser cutting, and then assembling the physical component. Then, through weekly iterations on the code and control schemes, we planned to develop a remote input mode for the Arduino and a local sonar-based controller.

Project Features:

- Laser-cut Mechanical Marble Maze – with roll & pitch movement driven by an Arduino microcontroller.

- Physical Input – a two-sonar-sensor setup for triangulating an object in 2D space and converting its position to a 2-axis maze movement.

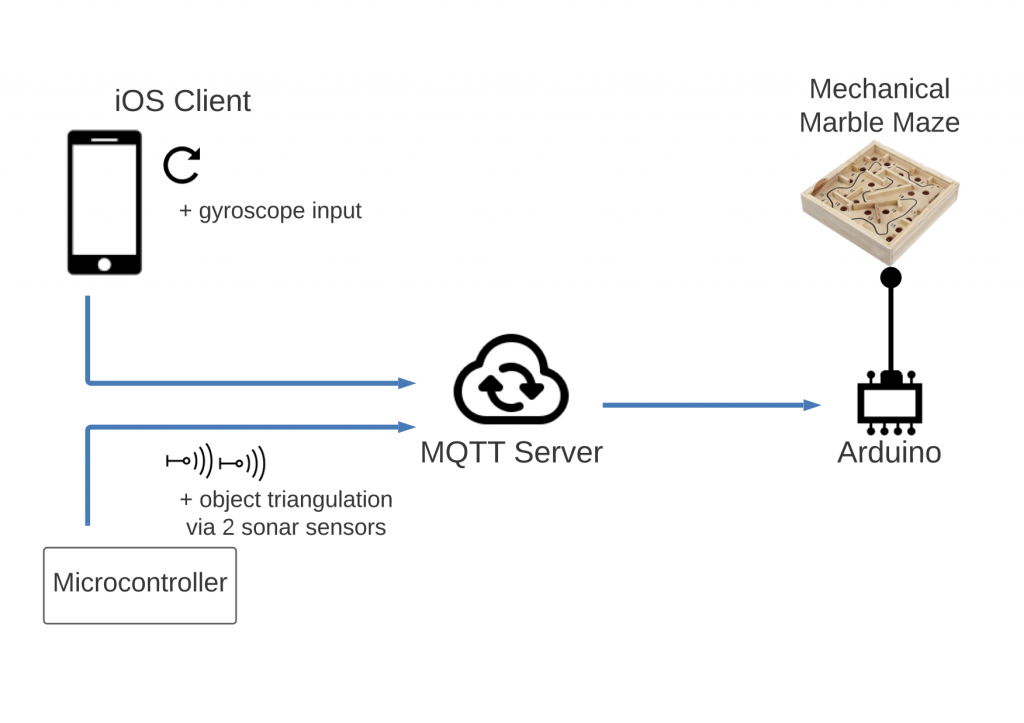

- Remote Input Mode – an Arduino mode for parsing MQTT server messages when connected to a network device.

- Software Input – an iOS application that forwards gyroscope data to the MQTT server.

Implementation

CAD Design

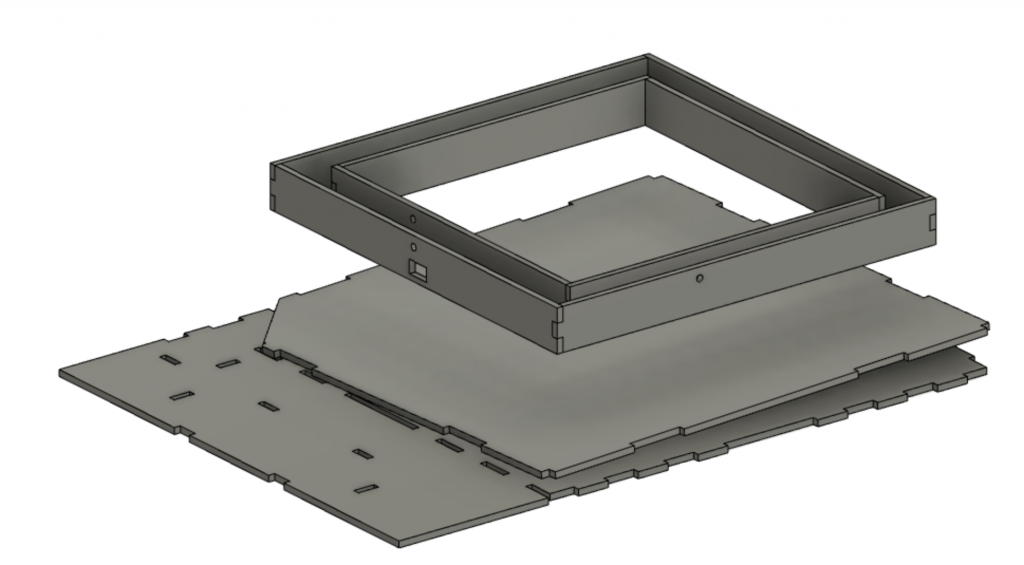

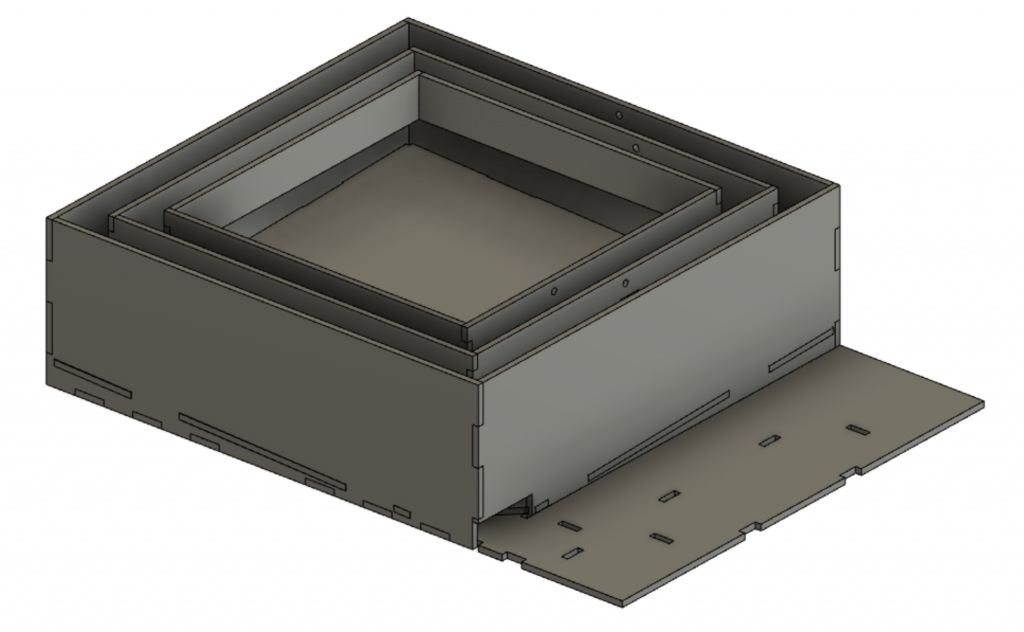

We produced a CAD design from which the parts were laser cut. It includes two narrow pivoting frames, the main maze board, a tilted marble retrieval board, and the main playground base and walls. The complete CAD design can be accessed below.

The structure was assembled from lightweight 6 mm laser-cut plywood, low-friction shoulder screws, and two hobby servo motors.

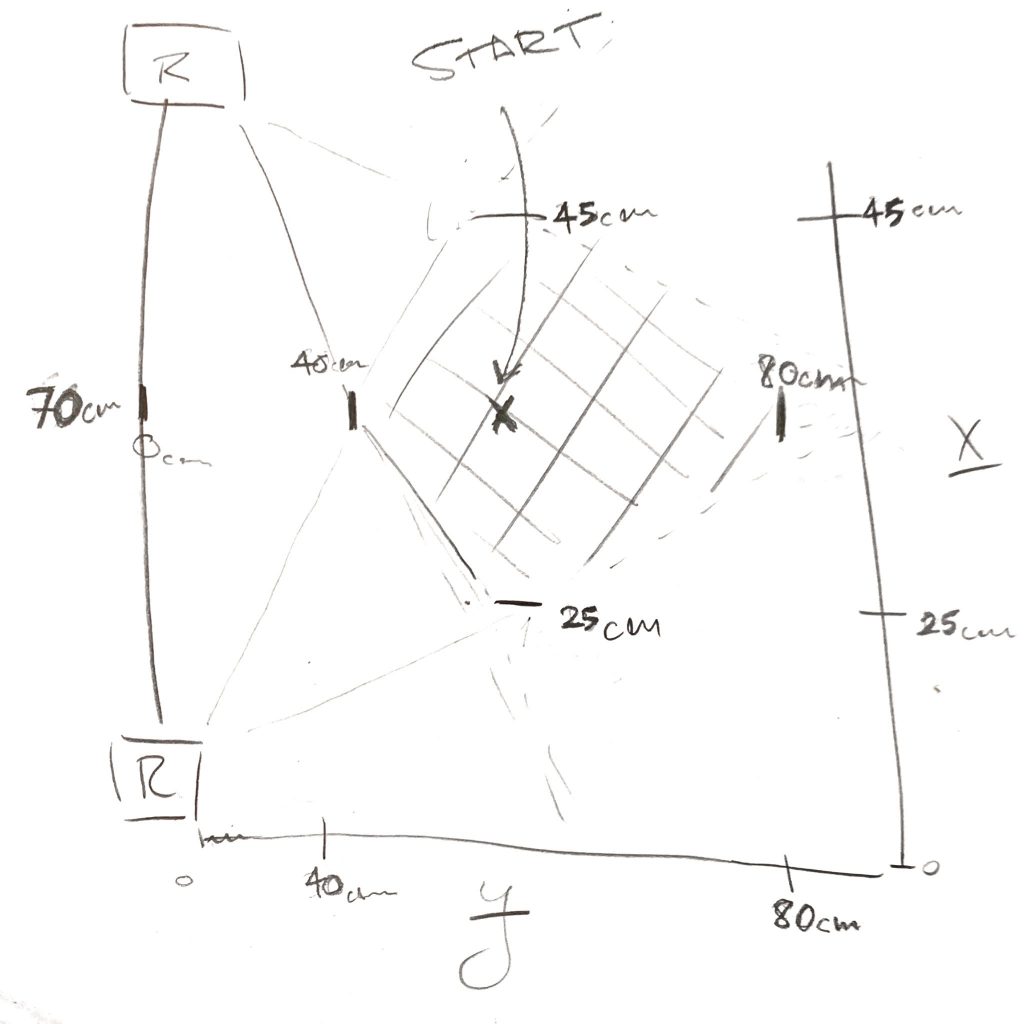

After extending our Arduino program with a remote input mode, we designed a control scheme based on sonar range sensors for the two maze axes. Operating the device with nothing but your hands was a feature we hoped would make the game more engaging to play.

We then iterated on our input scheme, and developed a triangulation setup that could convert the 2-dimensional movement of an object into movement on two servo axes.

Outcomes

Laser-cut Mechanical Marble Maze

By using a gimbal frame, we were able to design a sturdy yet mechanically revealing structure that resonated with curious players. This also offered us some vertical flexibility: the taller maze frame allowed us to implement a marble retrieval system that catches the marble below the gameboard and deposits it in front of the player.

Physical Input

We also successfully implemented a control scheme that users have probably never seen before. In the following video, we use Heron’s formula and a bit of trigonometry to control the remote maze by moving a water bottle within a confined space.
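As a rough illustration of the geometry, here is a minimal C++ sketch, which is not necessarily the code running on the Arduino. It assumes two sensors a known baseline apart, with each reading already converted to a straight-line distance to the object. The names `locate` and `Point2D` and the coordinate convention are hypothetical. Heron's formula gives the area of the triangle formed by the two sensors and the object, the height above the baseline follows from that area, and Pythagoras gives the offset along the baseline.

```cpp
#include <cassert>
#include <cmath>

struct Point2D { double x; double y; };

// Locate an object from its distances to two sensors.
// Assumed layout: sensor A at (0, 0), sensor B at (baseline, 0).
// Returns false when the three lengths cannot form a triangle
// (for example, after a noisy or missed echo).
bool locate(double baseline, double dA, double dB, Point2D &out) {
    if (baseline <= 0.0 || dA <= 0.0 || dB <= 0.0) return false;

    // Heron's formula: area^2 = s(s - a)(s - b)(s - c)
    double s = (baseline + dA + dB) / 2.0;
    double areaSq = s * (s - baseline) * (s - dA) * (s - dB);
    if (areaSq <= 0.0) return false;
    double area = std::sqrt(areaSq);

    // area = baseline * height / 2, so the height is the y coordinate
    out.y = 2.0 * area / baseline;

    // Pythagoras on the right triangle under sensor A gives |x|
    double xSq = dA * dA - out.y * out.y;
    out.x = std::sqrt(xSq > 0.0 ? xSq : 0.0);

    // If the angle at sensor A is obtuse, the object is on the far side of A
    if (dB * dB > dA * dA + baseline * baseline) out.x = -out.x;
    return true;
}
```

With a baseline of 6 units and both distances equal to 5, this places the object at (3, 4). The resulting x and y would then be scaled to the angle range of the two servos.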

Remote Mode & Software Input

Unfortunately, testing this feature from halfway around the world posed one serious issue: latency. The combination of sending serial output to an MQTT broker and across the world to another serial channel was too much for our MQTT server to handle, and caused input clumping on the receiving maze side.

To address this, we developed an iOS client that taps into your mobile device’s high-quality gyroscope and directly controls the tilting of a remote board. This gave us much more accurate sensor input and required minimal parsing on the Arduino side. In our experiments, transmitting at 4 messages/second, the application almost entirely eliminated input clumping on the receiving Arduino’s end.
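To show how little parsing such input needs, here is a hypothetical C++ sketch. It assumes each message arrives as text holding two integer tilt angles in degrees, such as `-12,30`, and that each servo is level at 90 degrees with a tilt limit of 20 degrees either way. The message format, the constants, and the names `parseTilt` and `ServoCommand` are assumptions made for illustration and may differ from the actual protocol.

```cpp
#include <cassert>
#include <cstdlib>

const int kServoCenter = 90;  // assumed servo angle at which the board is level
const int kMaxTilt = 20;      // assumed tilt limit in degrees, either direction

struct ServoCommand { int rollServo; int pitchServo; };

// Limit a requested tilt to the mechanical range of the frame.
static int clampTilt(long deg) {
    if (deg > kMaxTilt) return kMaxTilt;
    if (deg < -kMaxTilt) return -kMaxTilt;
    return static_cast<int>(deg);
}

// Parse a "roll,pitch" payload into two servo angles.
// Returns false and leaves `out` untouched on malformed input.
bool parseTilt(const char *payload, ServoCommand &out) {
    char *end = nullptr;
    long roll = std::strtol(payload, &end, 10);
    if (end == payload || *end != ',') return false;

    const char *pitchStart = end + 1;
    long pitch = std::strtol(pitchStart, &end, 10);
    if (end == pitchStart || *end != '\0') return false;

    out.rollServo = kServoCenter + clampTilt(roll);
    out.pitchServo = kServoCenter + clampTilt(pitch);
    return true;
}
```

Clamping on the receiving side means a bad or exaggerated reading from the phone cannot drive the frame past its mechanical limits.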

Our final project outcomes looked like this:

In what ways did your concept use physical movement and embedded computation to meet a human need?

Locally, our device can function alone. Through two separate sonar setups, we gave the player unique means of control, and controlling the device with hand and object movements felt more natural than a more limiting tactile control. Ultimately, by extending our device to accept remote input from a variety of sources, we turned what is typically a solo gaming experience into a fun activity that you can share with your remote friends.

In what ways did your physical experiments support or refute your concept?

Our device does achieve and encourage remote game playing. It also conveys a sense of almost ambient telepresence: it shows not only the remote person’s presence but also their relative movements through the tilting of the two axes. Right now the two players can collaborate such that the remote player controls the axes while the local player observes and resets the game if needed. Our game is also open to future possibilities for the local player to be more involved, perhaps by combining remote and local sensor/phone input.

We found that latency across the MQTT bridge significantly hampers the gaming experience, but we expect it to be greatly reduced if the two players are closer together (in the same country or city). Another major issue is that the remote player currently doesn’t get a complete view of the board. This could be added later, or the game could be turned into a collaborative one in which the local player instructs the remote player’s movements.

Future Work

With our device, we successfully implemented a working maze game capable of accepting remote input. However, there are two main ways in which the device can be extended.

Additional Game Modes:

To really take the novelty of controlling a device in your friend’s house to the next level, we must make the game interactive for both parties. By adding interactive game modes, each player could take control of an axis, and work together (or against each other) to finish the marble maze. This would encourage meaningful gameplay and give both users a reason to return to the remote game mode.

Networking Improvements:

A code-level improvement that our Arduino could benefit from is an extension of the REMOTE_INPUT mode. Currently, there are no controls for buffering delayed remote input, so the device processes signals as soon as they are available. As a result, the local user sees an unpredictable jitter in the maze whenever a block of inputs is processed. Implementing a buffer that stores these inputs and processes them at a set rate will not remove the latency, but it will reproduce the transmitted movements accurately, if delayed. This will make it obvious to the user that network latency is present, rather than showing them erratic movements.
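One possible shape for such a buffer is sketched below in C++. This is an illustration of the idea rather than an existing part of our code: the names `TiltBuffer` and `Playback`, the capacity of 16, and the choice to drop the oldest command when full are all assumptions. Time is passed in as a parameter so the logic can be tested off the board; on the Arduino it would come from `millis()`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

struct Tilt { int roll; int pitch; };

const std::size_t kCapacity = 16;  // assumed buffer size

// Fixed-size ring buffer of pending tilt commands.
class TiltBuffer {
public:
    // Store a command; when full, drop the oldest so the delay stays bounded.
    void push(Tilt t) {
        if (count_ == kCapacity) {
            head_ = (head_ + 1) % kCapacity;
            --count_;
        }
        data_[(head_ + count_) % kCapacity] = t;
        ++count_;
    }
    // Remove the oldest command; returns false if the buffer is empty.
    bool pop(Tilt &out) {
        if (count_ == 0) return false;
        out = data_[head_];
        head_ = (head_ + 1) % kCapacity;
        --count_;
        return true;
    }
    std::size_t size() const { return count_; }
private:
    Tilt data_[kCapacity];
    std::size_t head_ = 0;
    std::size_t count_ = 0;
};

// Releases at most one buffered command per interval.
class Playback {
public:
    explicit Playback(uint32_t intervalMs) : intervalMs_(intervalMs) {}
    // Returns true and fills `out` when the next command is due.
    bool poll(uint32_t nowMs, TiltBuffer &buf, Tilt &out) {
        // Unsigned subtraction stays correct when the millisecond clock wraps.
        if (started_ && nowMs - lastMs_ < intervalMs_) return false;
        if (!buf.pop(out)) return false;
        lastMs_ = nowMs;
        started_ = true;
        return true;
    }
private:
    uint32_t intervalMs_;
    uint32_t lastMs_ = 0;
    bool started_ = false;
};
```

In the main loop, incoming messages would be pushed as they arrive, and `poll` would be called on every pass, with the servos updated only when it returns true. An interval of 250 ms would match a sender transmitting at 4 messages/second, though the right value would need tuning.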

Contributions

Max: Arduino code, two-sonar triangulation scheme, and the iOS client.

Yu: CAD design and laser cutting, prototype assembly, and local testing.

Citations

- The object triangulation setup and Heron’s formula implementation are adapted from Lingib’s Dual Echo Locator demo.

- The highpass filter and smoothing code are inspired by Garth Zeglin @ CMU.

Supporting Material

- Source Code on Github

– Arduino Source

– iOS Application Source

- CAD files

– Link to Fusion Sketch
