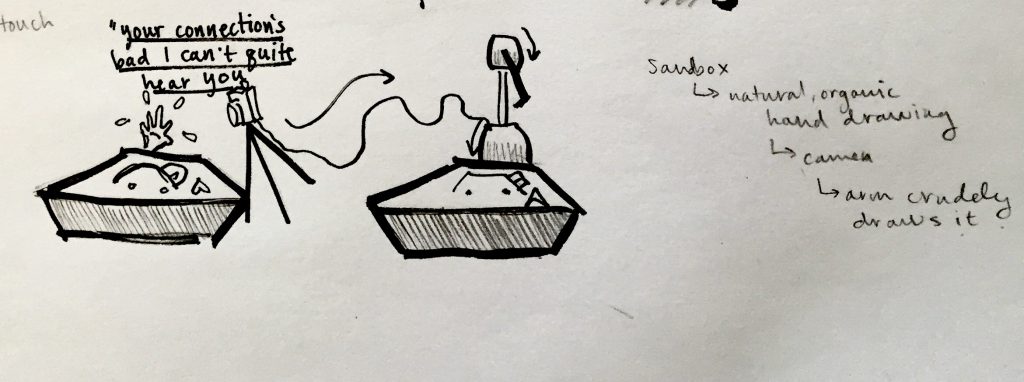

Concept: “Your connection is bad, I can’t hear you”: A method of projecting personal ideas to a larger audience through sand.

original sketch for idea

Narrative: People these days use many forms of social media and share some of their personal thoughts with a larger audience than intended. Our goal is to imitate the implications of the web, where information is more accessible and public than ever. A viewer will draw something, which is then roughly projected for everyone else to see. The notion that there are consequences for the information you choose to share is central to our project. Additionally, because the projection will only be a rough sketch of what was drawn originally, we emphasize the theme that things posted online may be taken out of context, without all the details necessary to understand them. The drawing that is projected is, in a way, carefully curated by the viewer who draws it, and depends more on what they want others to see than on what they are actually thinking. Sand was chosen as the medium to evoke a childlike effect. Unlike whiteboards or other types of ink drawings, which may be easier to process, sand offers a contrast between the advanced technologies that people use these days, the problems they cause, and the much simpler days of playing in sandboxes.

Themes: This project relates to our course theme of dynamic interaction with objects and materials by having the robot draw in the sand, potentially similar to how a human would. We hope that there is a difference between the human and the robot, as that would create an interesting dilemma from having a very human user try to direct (indirectly) a robot to convey a message. The lightness of sand coupled with the robotic stiffness of the robot would create what could be a delicate motion.

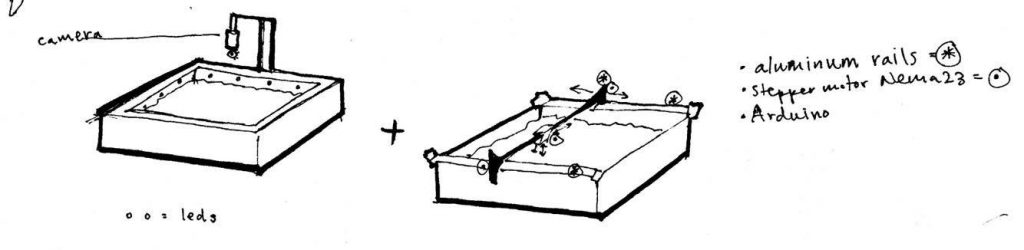

Design Decisions: The piece will consist of two sandboxes: one that is 2’×2’ and sits on a table, and one that is 6’×6’ and sits on the ground on the other side of the table, visible but out of reach to the viewers. The smaller sandbox will be surrounded by approximately 1’ tall barriers on three sides (excluding the side facing the viewer). The barriers prevent viewers who are not drawing from easily seeing the drawing in progress, but are short enough that the viewer who is drawing can still see the large sandbox on the other side. Each sandbox is filled with fine, dry sand reminiscent of what we played with in sandboxes as children.

The smaller sandbox on the table has two buttons next to it, one labeled ‘send’ and the other labeled ‘clear’. The send button prompts the robot to imitate the drawn image in the large sandbox, and the clear button clears both the smaller and larger sandboxes with a rake in a sweeping motion. A webcam above captures the image in the sand. It will be mounted approximately three feet above the sandbox so that the viewer has enough room to move around and over the sandbox, but close enough that the sandbox fills as much of the camera’s field of view as possible. Two light strips in the smaller sandbox, lining two adjacent sides, create dramatic, harsh shadows so that the camera can better pick up the forms drawn in the sand. In terms of light quality, we are aiming for bright daylight, but this depends on the availability of light strips in that color.

The larger sandbox will have three rails and a metal stick to write in the sand. The three rails give the drawing mechanism three degrees of freedom, so the stick can lift out of the sand and the drawing can contain more than one continuous line.

The user draws with their finger in the ‘sender’ (smaller) box. Once they are satisfied, they press the ‘send’ button. The webcam then captures the image through OpenCV, and the corresponding ‘receiver’ (larger) box on the other side of the barrier starts to draw the captured image. A user can also press the ‘clear’ button, and a metal rod will sweep across the sand to even it back out. This two-box system allows one viewer to draw and project while many other viewers witness the process.

For the physical components of the piece itself, we’re going for a more childlike sandbox in order to inspire the user to feel comfortable drawing in the sand. This means a big wooden sandbox (maybe with red or aged paint) filled with light, dry, uniform sand. The larger unit (5’×5’ or 6’×6’) will ideally be identical to the personal unit, just larger.

On “send”, the larger unit clears itself and then begins to draw anew. This means that it does not recognize old lines, and instead treats each drawing as a brand new one. The clearing system is not necessarily separate from the drawing stylus: we hope to use the z-axis so that the rail itself can serve as the clearing mechanism. The robot will be able to move along the z-axis, but has only two settings, “up” or “down”, which denote whether the robot is drawing or not. In other words, the robot will not be able to draw at various depths, only in simple lines. However, the line does not need to be continuous.
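The clear-then-draw behaviour with a two-state stylus can be sketched as a small command generator. This is only an illustration under assumptions: the command names (`clear`, `pen_up`, `pen_down`, `move`), the stroke format (lists of x, y points in the small box's coordinates), and the scale factor of 3 (from the 2’ box to the 6’ box) are hypothetical and not part of a finalized design.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]


def strokes_to_commands(strokes: List[Stroke], scale: float = 3.0) -> List[tuple]:
    """Convert strokes into a flat list of plotter commands.

    Hypothetical command set: ("clear",), ("pen_up",), ("pen_down",),
    and ("move", x, y). The stylus has only two z positions.
    """
    # Every "send" starts from a cleared box with the stylus raised.
    commands: List[tuple] = [("clear",), ("pen_up",)]
    for stroke in strokes:
        if not stroke:
            continue  # nothing to draw
        x0, y0 = stroke[0]
        # Travel to the start of the stroke with the stylus raised.
        commands.append(("move", x0 * scale, y0 * scale))
        commands.append(("pen_down",))
        for x, y in stroke[1:]:
            commands.append(("move", x * scale, y * scale))
        # Lift the stylus so the next stroke need not connect to this one.
        commands.append(("pen_up",))
    return commands


if __name__ == "__main__":
    demo = [[(0, 0), (10, 0)], [(20, 20), (20, 30)]]
    for command in strokes_to_commands(demo):
        print(command)
```

Because the stylus is lifted after every stroke, separate strokes stay separate in the output, which is what allows the drawing to be non-continuous.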

We hope to obtain a “rough sketch” as the robot’s output. This ties into how things posted or written remotely cannot convey many of the things that talking face to face does (i.e., tone, context, etc.). The robot will ignore lines below a certain size, as they are too small and trivial, and instead focus only on the bulk of what is there. It will also make assumptions about what the given image is by joining lines that lie within a threshold distance of each other.
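One way the “rough sketch” rules above could work is shown below. It is a minimal sketch, assuming strokes have already been extracted as lists of points; the function names and the two thresholds (`min_length`, `join_dist`) are hypothetical placeholders that would need tuning during testing. The joining step is deliberately simple: it only bridges the end of one stroke to the start of the next one in the list.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]


def stroke_length(stroke: Stroke) -> float:
    """Total length of a polyline."""
    return sum(math.dist(a, b) for a, b in zip(stroke, stroke[1:]))


def rough_sketch(strokes: List[Stroke],
                 min_length: float = 15.0,
                 join_dist: float = 10.0) -> List[Stroke]:
    """Drop trivial strokes, then join consecutive strokes whose gap is small."""
    # Step 1: ignore strokes that are too short to matter.
    kept = [list(s) for s in strokes if stroke_length(s) >= min_length]

    # Step 2: if a stroke starts close to where the previous one ended,
    # treat the two as a single line.
    merged: List[Stroke] = []
    for stroke in kept:
        if merged and math.dist(merged[-1][-1], stroke[0]) <= join_dist:
            merged[-1].extend(stroke)
        else:
            merged.append(stroke)
    return merged


if __name__ == "__main__":
    drawing = [
        [(0, 0), (50, 0)],         # long line
        [(55, 0), (100, 0)],       # starts 5 units after the first ends
        [(200, 200), (203, 200)],  # tiny mark, dropped
        [(0, 100), (0, 160)],      # separate long line
    ]
    print(rough_sketch(drawing))
```

Raising either threshold makes the output rougher, which may suit the theme of lost detail.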

Basic sketch of the two-piece project. Important notes: lighting is now embedded in the box, and the drawing system is a CNC plotter-type mechanism.

Materials:

- Sand

- Web-camera – basic Logitech Webcam

- LED strips

- Wooden boxes

- Arduinos

- Stepper Motors

- Sliding rails

Milestones:

| Milestone | Goal |
| --- | --- |
| Proof-of-concept demonstration | We want to have a robot that can draw (not necessarily in sand) a basic image (maybe just a geometric shape that we hard code into it) for a proof of concept demonstration. This will be similar to a laser cutter mechanism. After that, we will integrate OpenCV and work on having two units that can communicate effectively with each other. We will also have to design the actual thing that draws in the sand and decide what kind of effect we want to create with it. |
| Initial design documentation | In addition to the progress made from the proof-of-concept, we want to have diagrams for our drawing mechanism, an overall code outline, and a confirmed list of parts. |
| Critical path analysis | We aim to have a build plan scheduled out, with concrete dates for each component (sandbox, drawing mechanism, coding, etc). |
| Phase 1 prototype | Our phase 1 prototype will consist of a single, complete node. This includes the sandbox, the drawing robot, another sandbox for the drawing robot to draw in, the OpenCV component, and seamless software integration of all the parts. This will almost be the completed work, except without the communication aspect. Once we have built one, building the second will be straightforward and knowledge can be reused. The only challenge that follows is to link the second node to the first after it is built, so that they can work together as a cohesive system. |
| Phase 2 prototype | Our phase 2 prototype will consist of both working nodes. It will include any changes to fix problems identified from the phase 1 prototype. |

Appendix

- What exactly is the rubric for the visual interpretation? E.g., how would you instruct a human being to draw with a stick based on what they see?

I would instruct the human being to look for the shadows in the sand cast by the light. These shadows should form the basis of the lines that the machine (or in this case the human being) should draw. These may be lines or points, depending on the input image. The specific implementation will vary based on our testing, e.g. the sensitivity of the webcam, OpenCV, the sharpness of the light, the sharpness of the shadows, and the granularity of the sand. If the resolution of the image is too low, or OpenCV cannot capture sharp images, or the best light we find does not have the desired sharpness, or the finest sand is not fine enough, we can adjust the machine to ignore shadows below a certain size, whether these anomalies are introduced by the coarseness of the sand, the low resolution of the image, OpenCV itself, etc. So, depending on our specific implementation and the materials we are able to procure, I would instruct the human being to draw with a stick accordingly. At the most basic level, the human being should mimic the input image, although the details and specifics of the lines will vary based on our materials and testing.
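The idea of treating shadows as lines and ignoring shadows below a certain size could look roughly like the following. This is a plain-Python sketch rather than the OpenCV implementation: it assumes the captured image is available as a non-empty grid of grayscale values (0 is black, 255 is white), and the `threshold` and `min_pixels` values are hypothetical numbers that would come out of testing with the real light and sand.

```python
from typing import List

Grid = List[List[int]]


def shadow_mask(gray: Grid, threshold: int = 80) -> Grid:
    """Mark pixels darker than the threshold as shadow (1), the rest as 0."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]


def drop_small_blobs(mask: Grid, min_pixels: int = 3) -> Grid:
    """Keep only connected shadow regions with at least min_pixels pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood fill to collect one connected region (4-neighbour).
            stack = [(r, c)]
            seen[r][c] = True
            blob = []
            while stack:
                y, x = stack.pop()
                blob.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            # Regions that are too small are treated as sand-grain noise.
            if len(blob) >= min_pixels:
                for y, x in blob:
                    out[y][x] = 1
    return out


if __name__ == "__main__":
    image = [
        [200, 40, 40, 40, 200],
        [200, 200, 200, 200, 200],
        [30, 200, 200, 200, 200],
    ]
    for row in drop_small_blobs(shadow_mask(image)):
        print(row)
```

In this toy image the three-pixel shadow in the top row survives, while the isolated dark pixel in the bottom-left corner is discarded as noise.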

- How is the viewer prompted as to the scenario?

Since the form of the piece consists of a large, 6’ x 6’ sandbox with very uninviting and industrial looking rails spanning across, as well as a very inviting looking desk with a 2’ x 2’ sandbox in it, the viewer is indirectly prompted by the approachability of the components of the piece. Since the large sandbox has metal rails spread across its opening, it is not approachable. However, the smaller sandbox is easily accessible, placed in a desk with lights illuminating it and a webcam over it, clearly indicating some kind of input.

- What tools are provided to the viewer for drawing?

The viewer is not provided any tools for drawing. As mentioned before, the approachability, lights, and webcam of the small sandbox clearly indicate input. From there, the user can easily surmise due to the webcam that an image should be somehow placed into the small sandbox. Next, they can logically conclude that images can be drawn in the sand. In the absence of a tool, they can then assume that using their hands is the proper method of input.

- How will you limit the complexity of the input, or not?

We do not limit the complexity of the input, because part of the enjoyment of the visitor is seeing how the machine interprets their drawn images. Depending on the implementation, like the sensitivity of the webcam, how OpenCV works, sharpness of the light, sharpness of the shadows, granularity of the sand, the machine drawn image most likely will not be an exact reproduction of the user input. Thus looking at the similarities and differences in the images should be fun for the user.

- What is the speed of drawing (in mm/sec)?

The drawing speed will be 70 mm/sec, fast enough that the user does not get bored.

- How many seconds will it take to render a representative image?

This depends on the size of the input image. The output is scaled up from the input, so it should be 3x larger (from the 2’ box to the 6’ box). The rendering time therefore depends on how much the user draws.
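A rough rendering-time estimate follows from the 70 mm/sec drawing speed and the 3x scale. The sketch below assumes strokes are given in millimetres in the small box's coordinates, and it ignores stylus-up travel between strokes and the clearing sweep, so real times would be somewhat longer. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]

DRAW_SPEED_MM_S = 70.0  # drawing speed stated in the proposal
SCALE = 3.0             # 2 ft sender box scaled up to a 6 ft receiver box


def path_length(stroke: Stroke) -> float:
    """Length of one stroke in millimetres."""
    return sum(math.dist(a, b) for a, b in zip(stroke, stroke[1:]))


def render_seconds(strokes_mm: List[Stroke],
                   scale: float = SCALE,
                   speed: float = DRAW_SPEED_MM_S) -> float:
    """Estimated drawing time, counting only stylus-down motion."""
    drawn_mm = sum(path_length(s) for s in strokes_mm) * scale
    return drawn_mm / speed


if __name__ == "__main__":
    # A 300 mm square traced in the small box.
    square = [[(0, 0), (300, 0), (300, 300), (0, 300), (0, 0)]]
    print(f"{render_seconds(square):.1f} s")
```

For example, a 300 mm square has a 1200 mm outline, which becomes 3600 mm at 3x scale and takes about 51 seconds at 70 mm/sec.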

- How is the viewer confident that a person sees the result?

The viewer is confident that they can see the result because the larger box is directly in front of them. Also, others can see the box because the larger box is so large.

- Does this evoke a fax machine? (Transactional, delayed, transcoded.)

No, it does not, because the output image will most likely not be an exact replica of the original image. It is also rendered in sand, not on paper with ink. The delay is also most likely longer than that of a normal fax machine. It is also not a method of sending a message to a single person, but rather a projection of the image to the world (hence the much larger box). The only similarity is that an image is being transmitted.
