November 2nd:

At the moment, we have a camera mounted to a stepper motor, which will produce our main motion: camera panning (rotation) at varying speeds. Both the software and the hardware are still in progress, but we are confident in our direction. We have experimented with both facial and motion recognition, using facial recognition to track a subject and motion recognition to gauge how “exciting” an audience member or performer is.
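The log doesn't say how motion is turned into an "excitement" measure, so the following is only a minimal sketch of one common approach, frame differencing, assuming grayscale frames given as nested lists of 0–255 brightness values. The names `motion_score`, `ExcitementMeter`, `threshold`, and `alpha` are hypothetical, chosen for illustration. The score is the fraction of pixels that changed noticeably between two frames, and the meter smooths it over time with an exponential moving average so a single jerky frame doesn't dominate.

```python
def motion_score(prev_frame, curr_frame, threshold=25):
    """Fraction of pixels whose brightness changed by more than `threshold`.

    Hypothetical helper: frames are assumed to be equal-sized grayscale
    images stored as lists of rows of 0-255 integers.
    """
    if len(prev_frame) != len(curr_frame):
        raise ValueError("frames must have the same number of rows")
    changed = 0
    total = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        if len(prev_row) != len(curr_row):
            raise ValueError("frames must have the same number of columns")
        for p, c in zip(prev_row, curr_row):
            total += 1
            if abs(c - p) > threshold:
                changed += 1
    return changed / total if total else 0.0


class ExcitementMeter:
    """Hypothetical smoother: exponential moving average of motion scores."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha  # weight given to the newest score (0..1)
        self.level = 0.0    # current smoothed "excitement" level

    def update(self, score):
        # Blend the new score into the running level.
        self.level = self.alpha * score + (1 - self.alpha) * self.level
        return self.level


if __name__ == "__main__":
    prev = [[10, 10], [10, 10]]
    curr = [[10, 200], [10, 10]]
    print(motion_score(prev, curr))  # one of four pixels changed -> 0.25
```

A higher `alpha` makes the meter react faster but jitter more; in a real pipeline the frames would come from the camera (for example via OpenCV) rather than hand-written lists.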

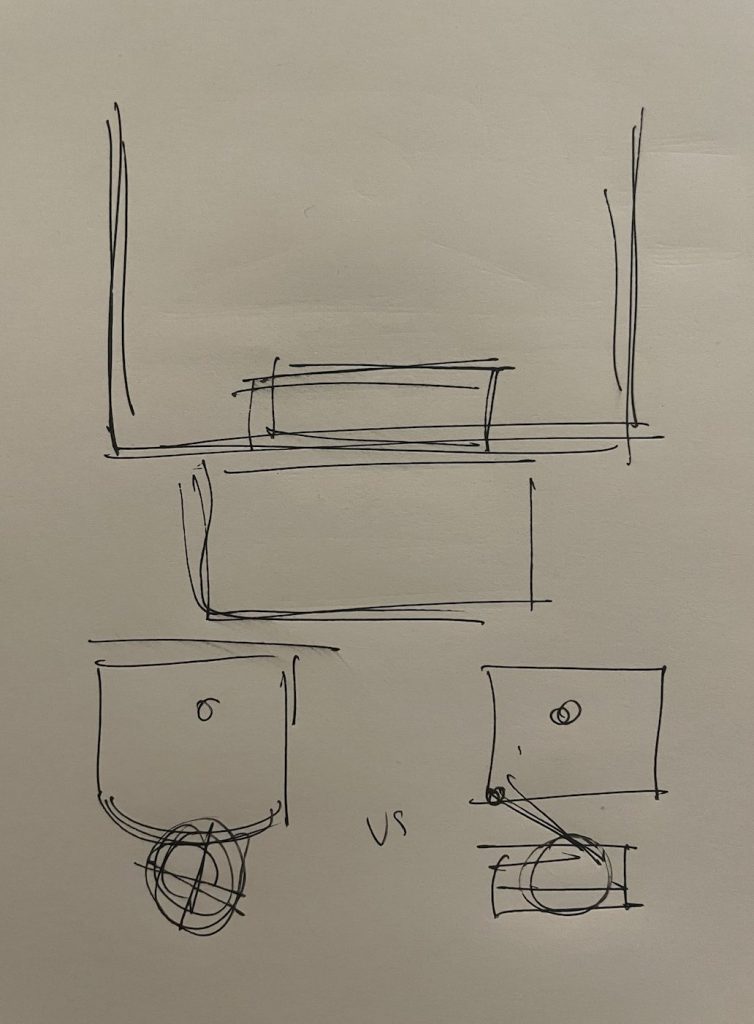

Moving forward, we plan to first integrate the software into our physical system so that the motor can track a subject using facial recognition. Next, we will explore an engagement rubric or rating system, experiment further with the software to create more engaging panning, and add a second motor to the physical system for the tilt motion. The tilt will be much more subtle than the pan; we are aiming for a range of around 30°. We hope to implement these additions in the coming week. At that point, we will fine-tune the software and the resulting motion, and fabricate or rebuild certain physical parts to give the piece a stronger and more consistent visual identity. If all goes well, we will then build two copies of this final model, for a total of three tracking cameras; the remaining work will mainly involve constructing the final piece and implementing the software.
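One way the face-tracking integration could work is a simple proportional controller: measure how far the detected face sits from the center of the frame and step the pan motor toward it. This is a sketch under stated assumptions, not our actual implementation. The face's horizontal center is assumed to come from whichever face detector is used (not shown), and `pan_steps`, `clamp_tilt`, `gain`, `deadband_px`, `max_steps`, and `TILT_LIMIT_DEG` are hypothetical names. The tilt limit assumes the roughly 30° range is centered on level (±15°).

```python
TILT_LIMIT_DEG = 15.0  # assumption: ~30 degrees of total tilt, centered on level


def pan_steps(face_center_x, frame_width, gain=0.1, deadband_px=20, max_steps=10):
    """Motor steps to move this frame so the face drifts toward the center.

    Positive = step one way, negative = the other. Hypothetical parameters:
    gain        steps per pixel of error (sets panning speed)
    deadband_px ignore small errors so the camera doesn't jitter
    max_steps   cap on steps per frame so panning stays smooth
    """
    error = face_center_x - frame_width / 2
    if abs(error) <= deadband_px:
        return 0
    steps = int(round(gain * error))
    return max(-max_steps, min(max_steps, steps))


def clamp_tilt(angle_deg):
    """Keep a requested tilt angle within the assumed +/-15 degree range."""
    return max(-TILT_LIMIT_DEG, min(TILT_LIMIT_DEG, angle_deg))


if __name__ == "__main__":
    print(pan_steps(320, 640))  # face centered -> 0
    print(pan_steps(400, 640))  # 80 px right of center -> 8
    print(pan_steps(640, 640))  # far right, capped -> 10
    print(clamp_tilt(40))       # beyond range -> 15.0
```

Because the step count scales with the error, the camera pans quickly toward a distant subject and slows as it centers, which is one way to get the "varying speeds" the pan is meant to have.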

Video Demo: Facial and Motion Recognition

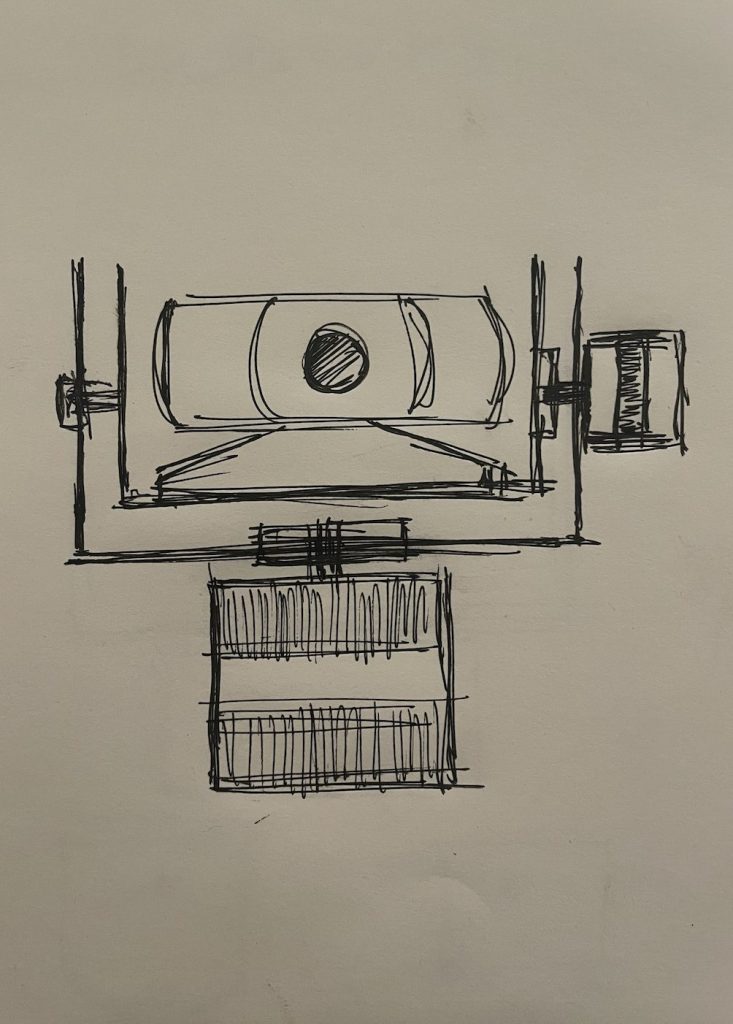

Prototype Sketching: