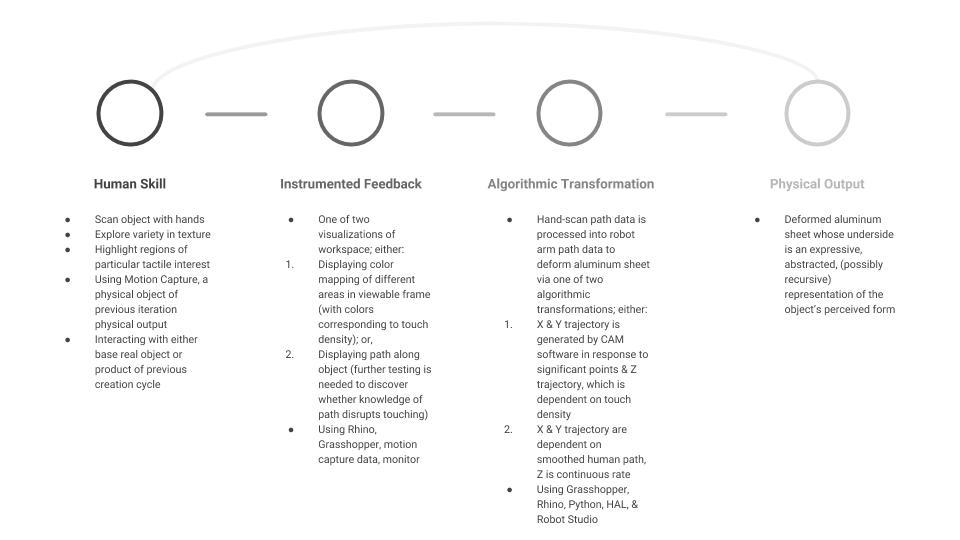

The purpose of this project is to integrate machine skill and human skill to respond to an object and generate an abstract, tactile caricature of it. We aim to explore fabrication that relies on fully robotic active skills and on a pair of human skills: one innate, and one that this process would develop.

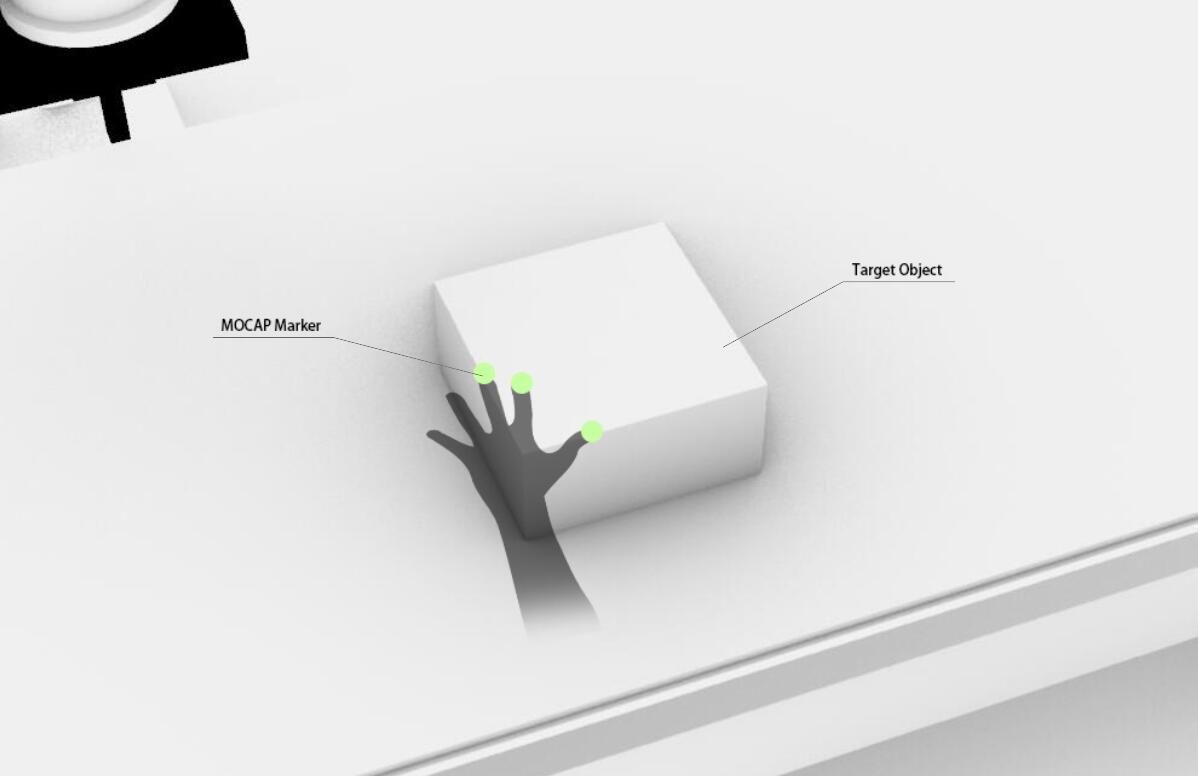

The innate human skill of viscerally exploring an object with the hands will first be applied to a base object. We call the human task “3D hand scanning,” or simply “hand scanning,” but the genuinely human, non-robot-replicable skill is tactile sensing and tactile opinion. This visceral, innate form of sensing is something human beings rely on to react to their environment. The motion of the hand scan will be tracked with motion capture markers, which will let us collect data on which affordances are of particular tactile interest. This process would also help develop the second human skill: physical awareness of 3D objects (known as 3D visualization when applied to drafting or CAD modeling).

With this path data, we can learn which features are the most tactilely significant and apply that knowledge to deforming an aluminum sheet with the robot arm.
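The proposal does not say how tactile significance will be measured. As one illustrative assumption, dwell time could serve as a proxy: regions where the fingertip lingers are treated as more significant. The sketch below bins fingertip samples into a square grid on the horizontal plane and normalizes the time spent in each cell. The `(x, y, z)` sample format, the fixed sampling interval `dt`, and the names `dwell_map` and `most_significant` are hypothetical; they are not part of Motive's export or the project's code.

```python
import math
from collections import defaultdict


def dwell_map(samples, cell_size, dt):
    """Return normalized dwell time per (i, j) grid cell.

    samples: (x, y, z) fingertip positions captured every dt seconds
    (hypothetical format). cell_size: grid spacing in the units of x and y.
    The busiest cell maps to 1.0; an empty input returns {}.
    """
    dwell = defaultdict(float)
    for x, y, _z in samples:
        # Bin on the horizontal plane only; height is ignored here.
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        dwell[cell] += dt
    if not dwell:
        return {}
    peak = max(dwell.values())
    return {cell: t / peak for cell, t in dwell.items()}


def most_significant(dwell, k):
    """Return the k cells with the longest normalized dwell time."""
    return sorted(dwell, key=dwell.get, reverse=True)[:k]


# Example: three samples in one cell, one sample in its neighbor.
samples = [(0.01, 0.01, 0.0)] * 3 + [(0.06, 0.01, 0.0)]
weights = dwell_map(samples, cell_size=0.05, dt=0.01)
print(most_significant(weights, 1))  # [(0, 0)]
```

Dwell time is only one candidate signal; repeated passes over the same spot or slower finger speed could be weighted in the same grid.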

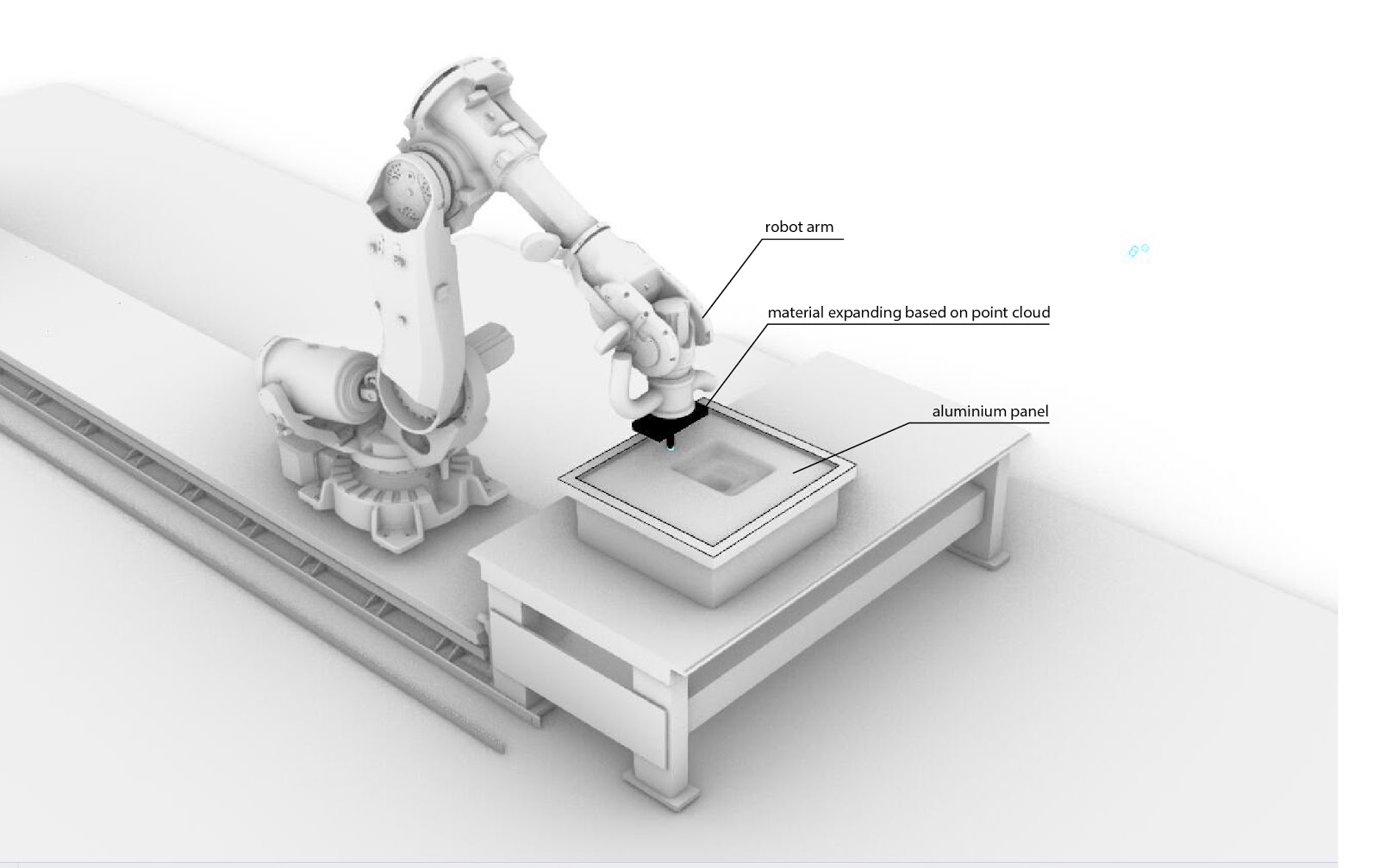

Model of the robot deforming an aluminum sheet

The finger's motion along the subject is replicated by the robot “finger” moving along the sheet it deforms.
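How the finger path is carried over to the sheet is not specified. A minimal sketch, assuming a top-down planar mapping, uniformly scales and translates the path's horizontal coordinates so they fit inside the sheet's working area while keeping the subject's proportions. `fit_to_sheet`, its `margin` parameter, and the sheet frame (origin at one corner, units in meters) are illustrative assumptions.

```python
def fit_to_sheet(path, sheet_w, sheet_h, margin=0.0):
    """Map (x, y, ...) path points into a sheet frame with origin at one corner.

    One uniform scale factor keeps the subject's proportions; every point
    is then offset by `margin` from the sheet edge. Extra coordinates
    (e.g. z) are dropped.
    """
    xs = [p[0] for p in path]
    ys = [p[1] for p in path]
    min_x, min_y = min(xs), min(ys)
    span_x, span_y = max(xs) - min_x, max(ys) - min_y
    usable_w, usable_h = sheet_w - 2 * margin, sheet_h - 2 * margin
    if usable_w <= 0 or usable_h <= 0:
        raise ValueError("margin leaves no usable sheet area")
    # Candidate scale per axis; a zero span places no constraint on that axis.
    scales = []
    if span_x > 0:
        scales.append(usable_w / span_x)
    if span_y > 0:
        scales.append(usable_h / span_y)
    scale = min(scales) if scales else 1.0
    return [
        (margin + (p[0] - min_x) * scale, margin + (p[1] - min_y) * scale)
        for p in path
    ]


# Example: a 2 x 1 subject path mapped onto a 0.4 x 0.4 sheet (meters).
print(fit_to_sheet([(0.0, 0.0, 0.0), (2.0, 1.0, 0.0)], 0.4, 0.4))
```

A uniform scale is the conservative choice here; stretching each axis independently would fill the sheet but distort the subject before any deliberate caricature is applied.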

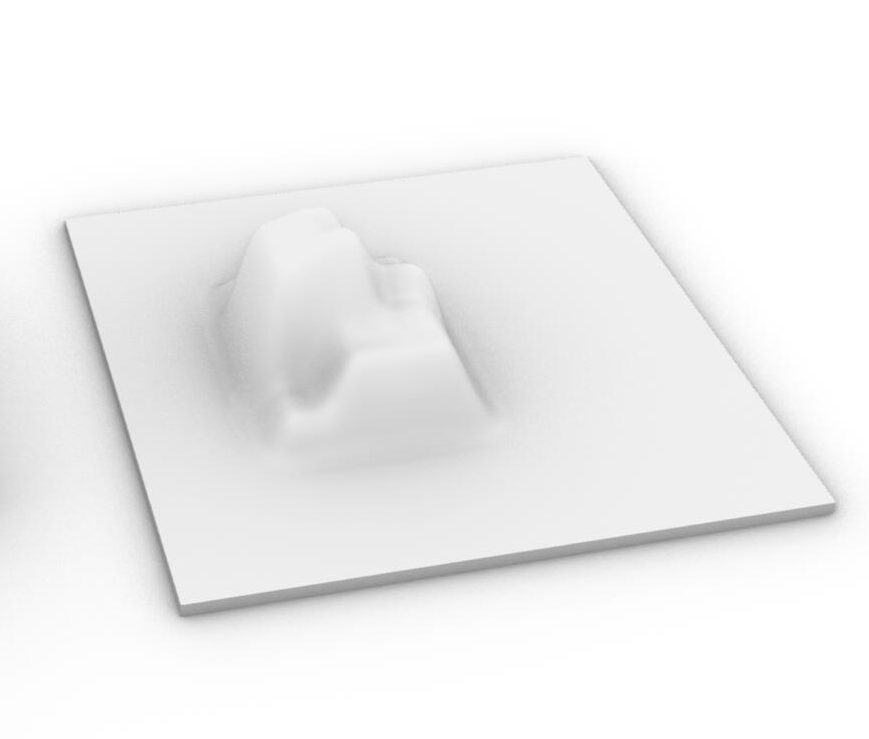

Model of the aluminum sheet after deformation

If possible, we’d like to implement a “recursive” approach: the user explores a base object, the sheet is deformed, the next human exploration is conducted on the deformed sheet, and then either the same sheet or a new one is deformed. This echoing deformation could repeat several times, and the final result would be a deep tactile caricature of the object and its successive generations.
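The recursive loop can be sketched as plain control flow, with the human scan and the robot deformation passed in as placeholder callables. `scan`, `deform`, `new_sheet`, and the `reuse_sheet` flag are hypothetical stand-ins for the motion capture session and the Grasshopper/robot pipeline; the flag selects between the two options described above, deforming the same sheet again or starting on a fresh one.

```python
from typing import Any, Callable, List


def recursive_caricature(
    base_object: Any,
    scan: Callable[[Any], Any],
    deform: Callable[[Any, Any], Any],
    new_sheet: Callable[[], Any],
    generations: int,
    reuse_sheet: bool = True,
) -> List[Any]:
    """Alternate human scans and robot deformations for several generations.

    scan(subject) -> path, deform(sheet, path) -> sheet, new_sheet() -> sheet.
    deform should return a new sheet object (or a snapshot) so that each
    generation stored in the returned history stays distinct.
    """
    history = []
    subject = base_object
    sheet = new_sheet()
    for _ in range(generations):
        path = scan(subject)         # human explores the current subject
        sheet = deform(sheet, path)  # robot presses the path into a sheet
        history.append(sheet)
        subject = sheet              # the next scan happens on this result
        if not reuse_sheet:
            sheet = new_sheet()      # next generation starts on blank stock
    return history


# Stub example: a "sheet" is just a tuple of recorded pushes.
def stub_scan(subject):
    return [len(subject)]


def stub_deform(sheet, path):
    return sheet + tuple(path)


print(recursive_caricature("abc", stub_scan, stub_deform, tuple, 3))
```

Keeping every generation in `history` preserves the intermediate sheets, which is what makes the final piece a family of caricatures rather than a single object.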

The technical context of this piece references photogrammetry, the process of generating a 3D model by combining many photos of a single subject. This project pays homage to photogrammetry by using dynamic data to create 3D visualizations of the object, but it incorporates physical, tactile action in both the input and the output. The cultural context of this piece explores how caricature, the act of isolating a subject's outstanding features and representing them disproportionately, can be applied to the tactile sensing of an object.

Hybrid Skill Diagram: the process of converting human skill into physical output

The project will rely on the Motive motion capture system to collect hand scan data, and the immediate feedback the human scanner relies on will come from Rhino. The hand scan data will be sent to Grasshopper, where a Python script may need to clean up and smooth it, and will then be converted to robot arm control data in HAL (a Grasshopper extension) and RobotStudio. An aluminum sheet will be clamped tightly in a mount, and the robot arm will deform the aluminum by pushing down on it along the processed scan trajectory.
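The cleanup step might look like the following: a centered moving average to suppress marker jitter, followed by a conversion of the smoothed path into tool targets that press into the sheet. This is a sketch in plain Python 3 with no Rhino, Grasshopper, HAL, or RobotStudio API calls, so it covers only the geometry. The window size, the depth rule (push deeper where the finger sat lower, so the sheet takes on a relief of the scanned surface), and the `max_depth` clamp are assumptions chosen for illustration.

```python
def moving_average(points, window=5):
    """Centered moving average over (x, y, z) samples.

    The window shrinks near the ends so the output has as many points as
    the input. window must be a positive odd integer.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(points)):
        lo = max(0, i - half)
        hi = min(len(points), i + half + 1)
        chunk = points[lo:hi]
        n = len(chunk)
        smoothed.append(tuple(sum(p[k] for p in chunk) / n for k in range(3)))
    return smoothed


def push_targets(points, sheet_top_z, max_depth):
    """Turn smoothed (x, y, z) points into targets that press into the sheet.

    Hypothetical depth rule: depth below the sheet's top surface equals how
    far the finger sat below its highest recorded z, clamped to max_depth.
    """
    if not points:
        return []
    top = max(p[2] for p in points)
    targets = []
    for x, y, z in points:
        depth = min(top - z, max_depth)
        targets.append((x, y, sheet_top_z - depth))
    return targets
```

A larger `window` gives a smoother path at the cost of rounding off small features, which may be exactly the tactilely significant ones, so it would likely need tuning against real scan data.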

Deform + Reform was inspired in part by this project from the CMU School of Architecture.