Perry Naseck | Andey Ng | Mary Tsai

Overview

Our project aims to discover and analyze the gestural aesthetic of graffiti and to translate it into a robotic system. Our goal is to augment and extend, but not replace, the craft of graffiti. With the capabilities of the robot, we can explore artistic spaces that may be beyond human reach but still carry heavy influences from the original artist. Ranging from the basic foundation of a pattern to new designs created from fractals of the tag, we hope to combine the artist’s style and tag into a new art piece that is developed parametrically through Grasshopper.

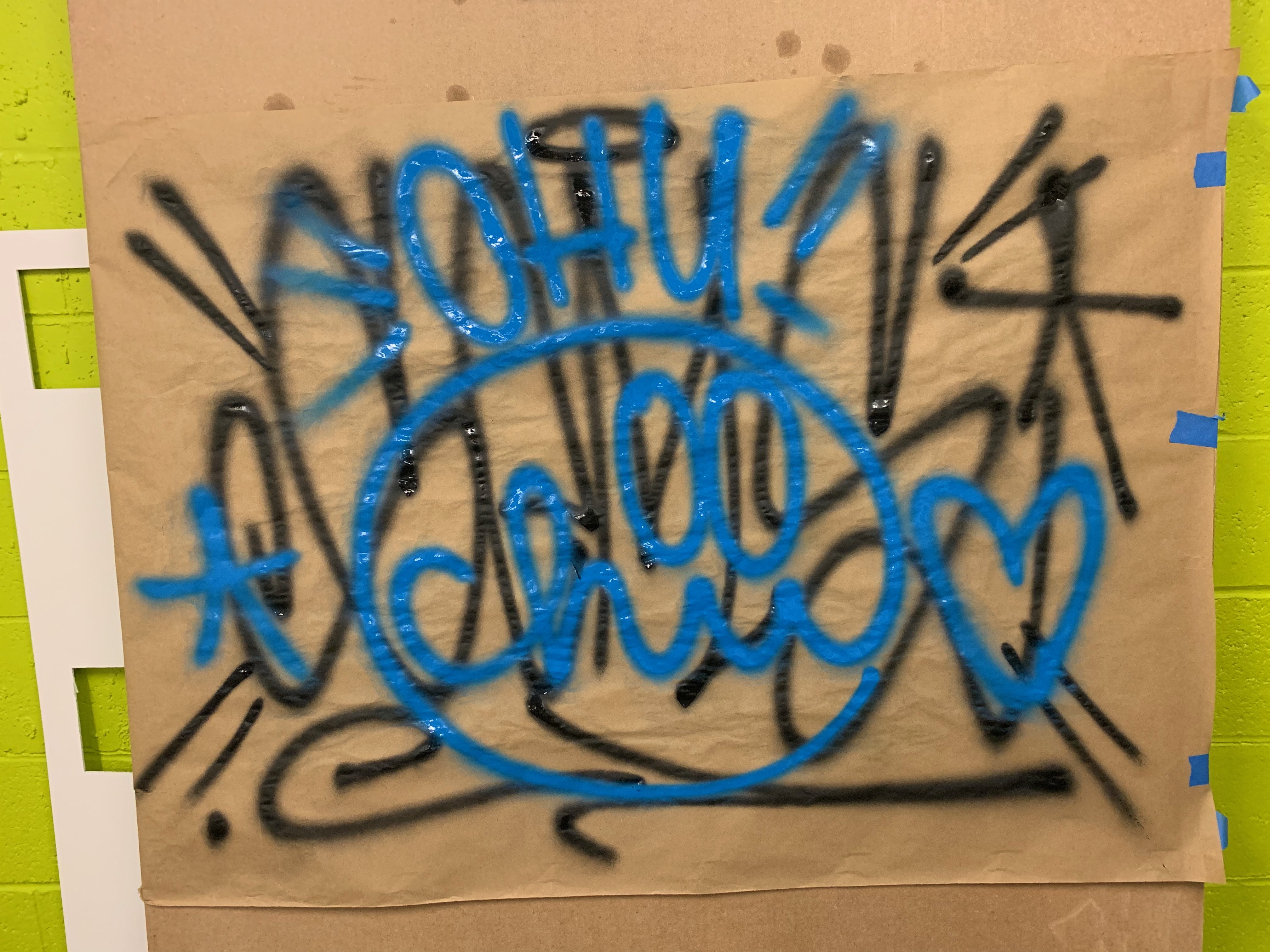

Due to the complexity of graffiti, we are sticking solely to representing the graffiti artist’s “tag”, which is always a single color and is considered the artist’s personalized signature. The tag is a complex set of strokes that are affected by the angle of the spray can, the distance from the wall, the speed at which the can is moved, and the amount of pressure in the can. The objective of the project is to explore the translation of human artistic craft into the robotic system while maintaining the aesthetic and personal style of the artist.

Hybrid Skill Workflow & Annotated Drawing

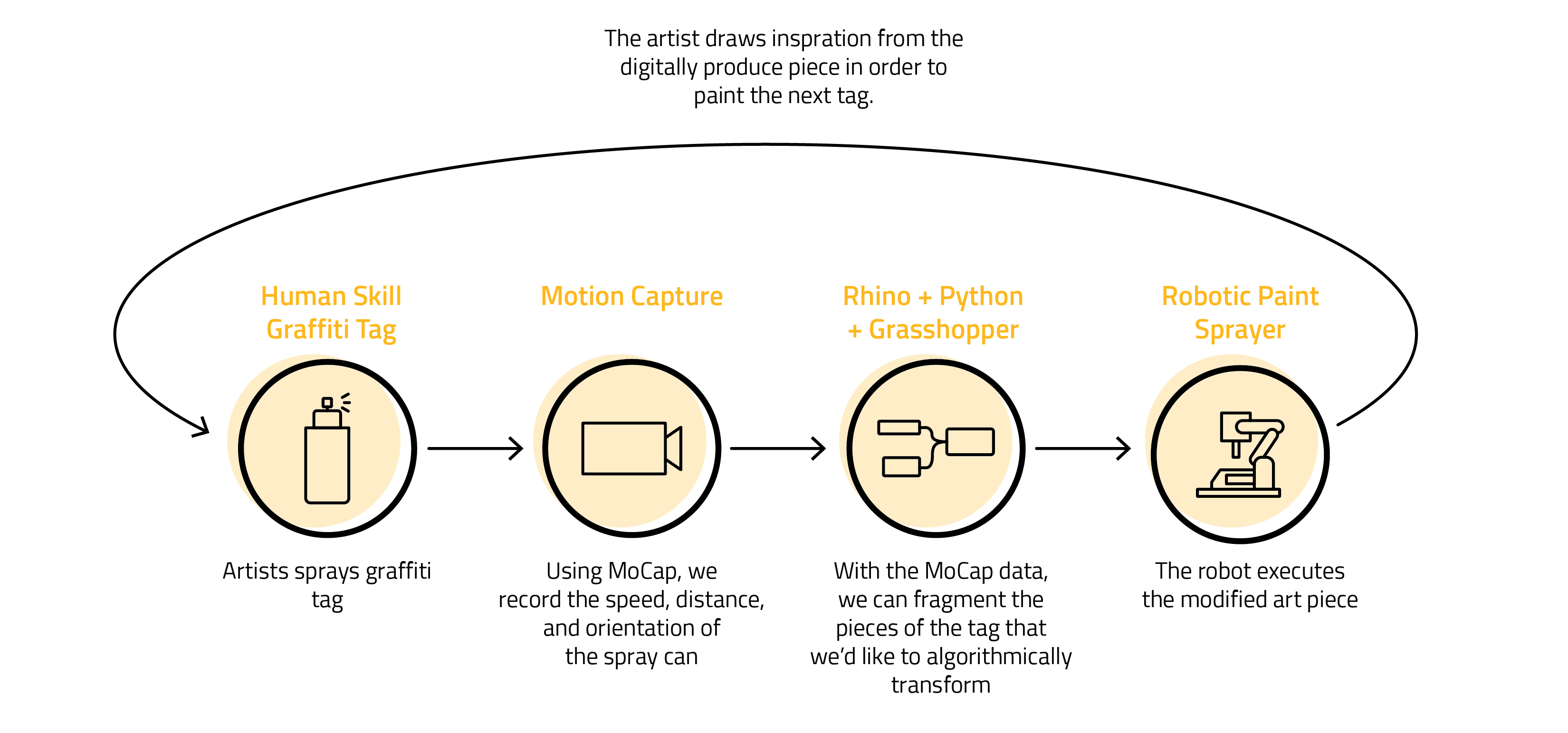

- Our first step is to understand and capture the unique gestural motions that embody the style of the graffiti artist (e.g. flares, drips). We will watch and record the artists as they spray an assortment of different tags and styles on canvas. Once these gestural motions are categorized, our team will identify variables to modify and test, such as speed, distance, and orientation.

- We will then use motion capture technology to record the artist’s gestures as they perform these specific components. This will allow us to begin to programmatically analyze how to translate the human motions into the robot’s language. Using Rhino and Grasshopper, we will program the robot to reproduce a similar piece, inspired by the original graffiti technique. While this is a useful starting point, our goal is not simply to reproduce the graffiti tag, but to enhance it in a way that can only be done through digital technology. We plan on analyzing components of the tag and parametrically modifying them to add character or ‘noise’, and then having the robot produce that piece either on top of the artist’s canvas or on a separate canvas. The goal is to be able to see the original analog piece and compare it to the digitally altered one.

- The artist will then draw inspiration from the robotically enhanced piece and start to experiment with different ideas in order to see what the digital version can produce. This process is repeated multiple times. If the plan is to produce one larger cohesive final piece (e.g. a giant tag spread out over multiple canvases), the overall shape will need to be planned out beforehand. The communication between human and machine will be very procedural, alternating between graffiti drawn by the artist and art produced by the robot.

- The final project will be a collaborative, co-produced piece that has both analog and robotically sprayed marks, emphasizing the communicative and learning process between human and machine. Ideally, we would use multiple canvases so that the size of each art piece is more workable for the robot.
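As a rough illustration of the first two steps, the variables we mention (speed and distance from the wall) could be derived from motion capture samples along these lines. This is only a minimal sketch in standalone Python 3, not our actual pipeline: the `Sample` record, its millimeter and second units, and the assumption that the wall lies in the plane z = 0 are all hypothetical, and orientation is left out because it would need marker data beyond a single tracked point.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical motion capture reading of the spray can nozzle."""
    t: float  # time in seconds
    x: float  # position along the wall, mm
    y: float  # height, mm
    z: float  # distance from the wall, mm (assumes the wall is the plane z = 0)


def segment_speeds(samples):
    """Return the average nozzle speed (mm/s) between consecutive samples."""
    speeds = []
    for a, b in zip(samples, samples[1:]):
        dt = b.t - a.t
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        travelled = math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))
        speeds.append(travelled / dt)
    return speeds


def mean_wall_distance(samples):
    """Return the average distance (mm) between the nozzle and the wall."""
    return sum(s.z for s in samples) / len(samples)


# Made-up readings: a quick move followed by a pause at a fixed distance.
stroke = [
    Sample(0.0, 0.0, 0.0, 100.0),
    Sample(1.0, 3.0, 4.0, 100.0),
    Sample(2.0, 3.0, 4.0, 100.0),
]
speeds = segment_speeds(stroke)
distance = mean_wall_distance(stroke)
```

Per-segment values like these are one possible way to categorize gestures, for example separating slow, close passes from fast, distant ones.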

Algorithmically Modified Examples

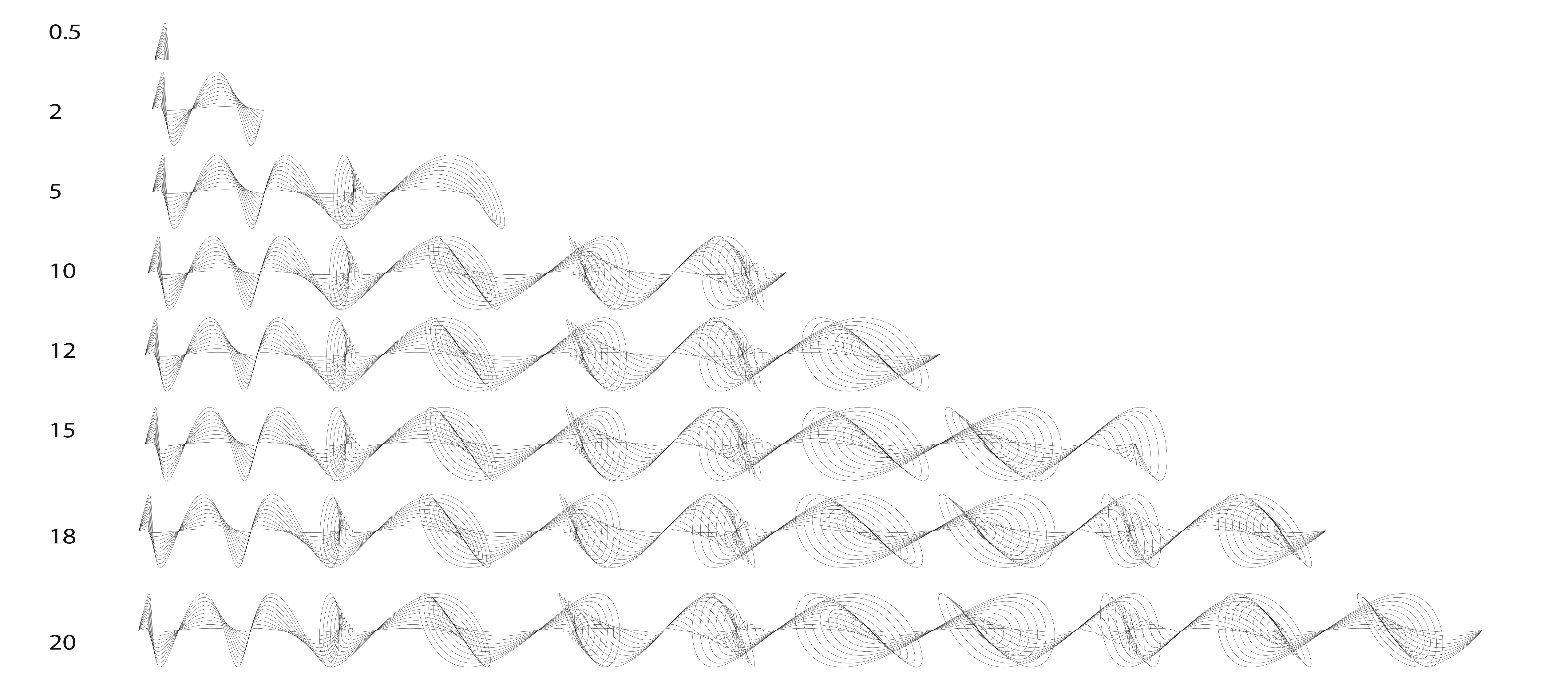

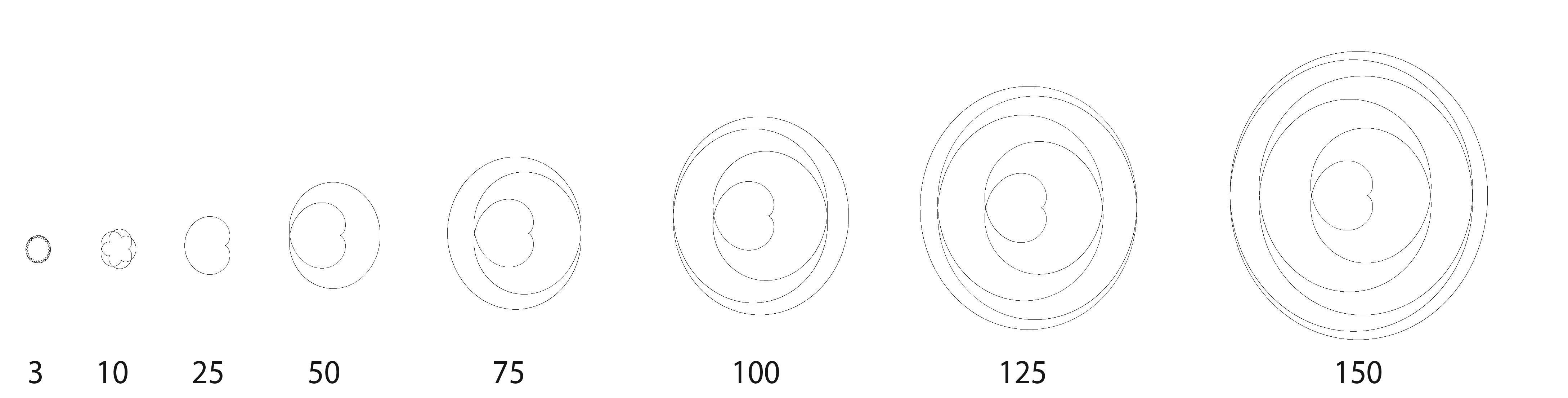

By using Python scripting in Grasshopper, we will be able to modify, exaggerate, or add features based on the motion capture data. The accompanying images show some examples of how components could be transformed.
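To make the idea of modifying and exaggerating a stroke more concrete, here is a minimal sketch of two such transformations on a stroke stored as a list of 2D points. It is plain Python with no Rhino or Grasshopper calls, so it would need adapting before running inside a Grasshopper Python component. The function names `exaggerate` and `add_noise` and the point-list representation are assumptions made for illustration.

```python
import random


def exaggerate(points, factor):
    """Scale a stroke about its centroid; factor > 1 enlarges the gesture."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    return [(cx + (x - cx) * factor, cy + (y - cy) * factor) for x, y in points]


def add_noise(points, amplitude, seed=0):
    """Offset each point by up to +/- amplitude on each axis.

    A fixed seed keeps the result repeatable, so the same altered
    stroke can be regenerated later.
    """
    rng = random.Random(seed)
    return [
        (x + rng.uniform(-amplitude, amplitude),
         y + rng.uniform(-amplitude, amplitude))
        for x, y in points
    ]


# A made-up two-point stroke, doubled in size about its midpoint.
stroke = [(0.0, 0.0), (2.0, 0.0)]
bigger = exaggerate(stroke, 2.0)
noisy = add_noise(stroke, 0.5, seed=42)
```

Scaling about the centroid keeps the stroke in place on the canvas while amplifying its shape, and the noise amplitude gives a single parameter for how far the robot's version departs from the artist's original.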