Perry Naseck, Andey Ng, and Mary Tsai

Abstract

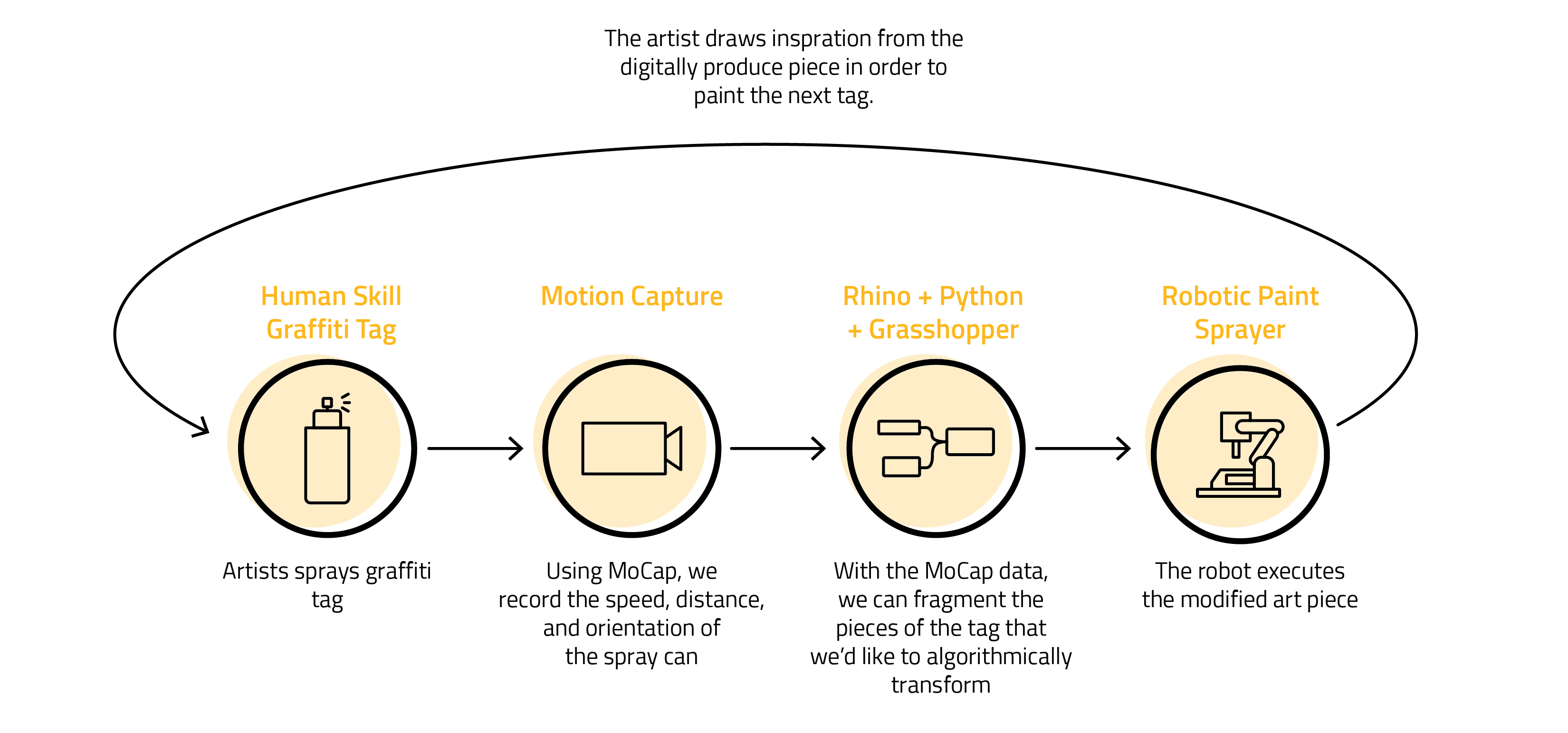

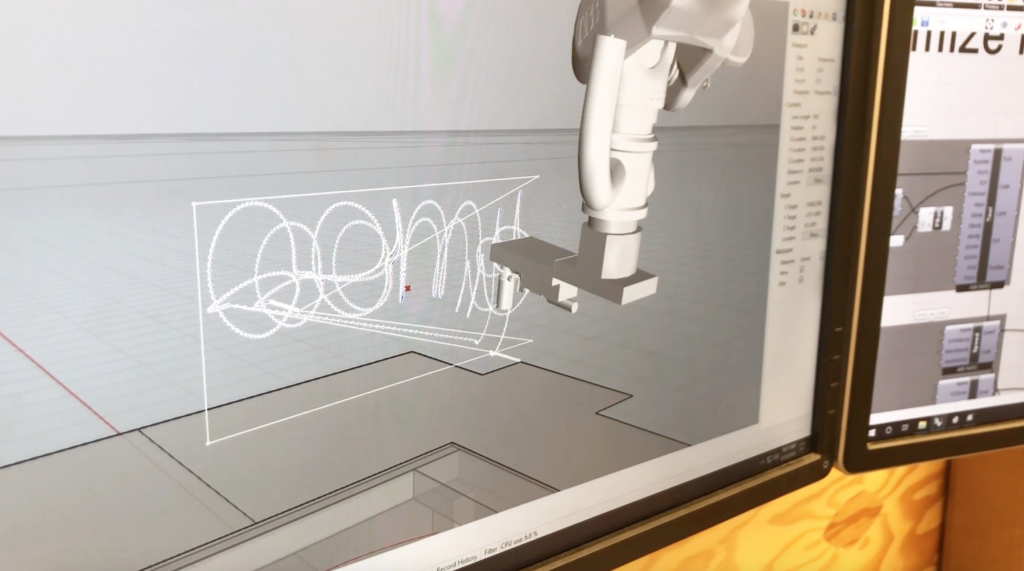

Our project aimed to discover and analyze the gestural aesthetic of graffiti and translate it into a robotic and technical system. Our goal was to augment and extend, but not replace, the craft of graffiti using a robotic system. With the capabilities of the robot, we explored artistic spaces that were beyond human reach but still carried heavy influences from the original artist. We were able to modify the artist’s aesthetic and tag to create a new art piece that was programmed through Grasshopper.

Objectives

Our goal was to use the robot’s capabilities to create something that an artist would not be able to make. We took advantage of the reach and scale of the robot, specifically the track on which it could slide up and down. By programming transformations, we could replicate each letter exactly multiple times, in positions that would have been impossible for the artist to complete.

Implementation

Ultimately, we stuck with simple modifications for the sake of time and to produce a successful end piece for the show. We took each letter and applied a transformation to it via Grasshopper. A large part of getting to this point was physically making the hardware work (the spray tool and canvases) as well as the programming in Python. If taken further, we would ideally like to create more intricate and complex transformations, given the amount of area and space that we have with the robot.
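The simple per-letter modifications described above (shifting, scaling, rotating, and replicating) can be sketched in plain Python. This is only an illustration, not our Grasshopper definition: it assumes a letter stroke is a list of 2D points, and the function names `shift`, `scale`, `rotate`, and `replicate` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]  # assumption: one letter stroke as an ordered list of 2D points


def shift(stroke: Stroke, dx: float, dy: float) -> Stroke:
    """Translate every point of the stroke by (dx, dy)."""
    return [(x + dx, y + dy) for x, y in stroke]


def scale(stroke: Stroke, factor: float, center: Point = (0.0, 0.0)) -> Stroke:
    """Scale the stroke uniformly about a center point."""
    cx, cy = center
    return [(cx + (x - cx) * factor, cy + (y - cy) * factor) for x, y in stroke]


def rotate(stroke: Stroke, angle_deg: float, center: Point = (0.0, 0.0)) -> Stroke:
    """Rotate the stroke counterclockwise by angle_deg about a center point."""
    cx, cy = center
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    return [
        (cx + (x - cx) * cos_a - (y - cy) * sin_a,
         cy + (x - cx) * sin_a + (y - cy) * cos_a)
        for x, y in stroke
    ]


def replicate(stroke: Stroke, copies: int, dx: float, dy: float) -> List[Stroke]:
    """Return `copies` versions of the stroke, each shifted one step further."""
    return [shift(stroke, i * dx, i * dy) for i in range(copies)]
```

For example, `replicate(letter, 4, 0.0, 200.0)` would stack four copies of a letter at 200-unit vertical steps; the units and step size here are placeholders, not values from our piece.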

Outcomes

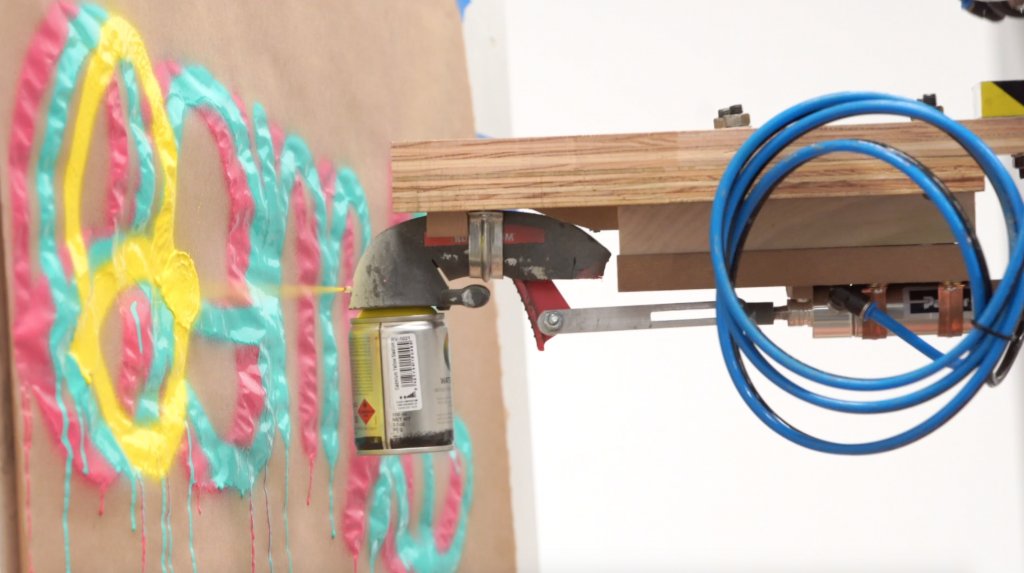

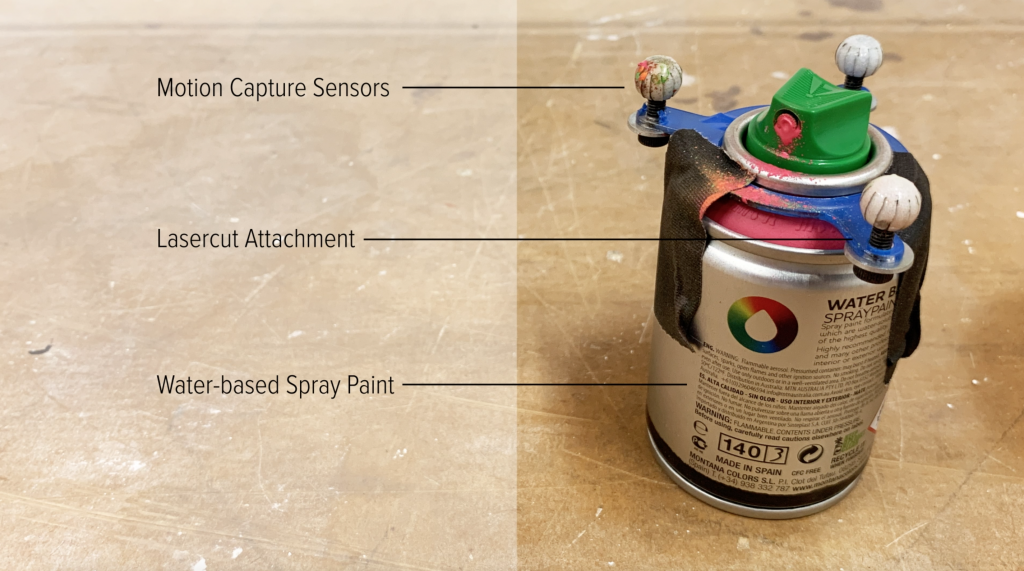

We successfully made the robot spray an artist’s tag with near-perfect accuracy. At the final show, one of the graffiti artists commented that it looked exactly like what he would do and that he wasn’t sure he would recognize the tag as having been sprayed by the robot. The hardware was something we nailed down in the first half of the semester, with some fine tuning along the way (nozzle type, distance from canvas, etc.). The programming side was a bit more complicated, but we were able to program the robot to spray the desired outcomes. Some of our challenges included the robot arm being constrained to specific angles and distances, but we remedied most of that by turning the plane 90 degrees and using the wall that runs the length of the track. Due to time, we were unable to test all the initial transformations that we had programmed, but those would be included in any future work.

Contribution

Perry Naseck developed a Grasshopper program that pre-processed all the data through his Python script. The backend of this project required precise timing of when the pneumatic trigger turns the spray can on and off. He developed a Python script that directed the robot arm to start and stop spraying based on the branches. Additionally, Perry implemented transformations such as resizing, rotation, and shifting.
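The start/stop logic can be sketched roughly as follows. This is not Perry's script: it assumes that each branch holds the ordered points of one continuous stroke, and the `("move", point)` and `("spray", bool)` commands are hypothetical placeholders rather than the robot's real command set.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Command = Tuple[str, object]  # hypothetical command format: ("move", point) or ("spray", bool)


def plan_spray(branches: List[List[Point]]) -> List[Command]:
    """Turn a list of strokes (one per branch) into an ordered command list.

    The spray is only on while the tool travels within a branch, so the
    moves between branches leave no paint on the canvas.
    """
    commands: List[Command] = []
    for branch in branches:
        if not branch:
            continue  # skip empty branches
        commands.append(("move", branch[0]))   # travel to the stroke start, spray off
        commands.append(("spray", True))       # trigger on at the first point
        for point in branch[1:]:
            commands.append(("move", point))   # paint along the stroke
        commands.append(("spray", False))      # trigger off before the next travel move
    return commands
```

In practice the trigger timing would also have to account for the delay of the pneumatic piston, which this sketch ignores.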

Andey Ng worked on implementing transformations through different plug-ins such as Rabbit. The Rabbit plug-in allowed us to create more elaborate, large-scale transformations that replicate fractals. She also created the negative-space transformations, which manipulate the piece to fill in the bounded whitespace except for the letters themselves.

Mary Tsai was in charge of the necessary 3D Rhino modeling as well as the physical hardware and tool construction. She created the spray mount by attaching the pneumatic piston to the applicator with a milled aluminum piece, and built the easel that would hold the practice canvases. Mary also worked on transformations that incorporated a sine wave into the path of the robot, which could vary the motion based on amplitude and period. She also filmed and produced the final video.
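One way to picture the sine-wave transformation is to offset a straight segment of the path sideways by a sine of the distance travelled. The sketch below is a minimal illustration under that assumption, not the Grasshopper definition we used; `sine_path` and its parameters are hypothetical names.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def sine_path(start: Point, end: Point, amplitude: float,
              period: float, samples: int = 50) -> List[Point]:
    """Sample a sine wave laid along the straight segment from start to end.

    amplitude: maximum sideways offset from the straight line
    period:    distance along the line covered by one full wave
    """
    (x0, y0), (x1, y1) = start, end
    length = math.hypot(x1 - x0, y1 - y0)
    if length == 0 or period == 0 or samples < 2:
        raise ValueError("need a non-zero segment, non-zero period, and at least 2 samples")
    ux, uy = (x1 - x0) / length, (y1 - y0) / length  # unit vector along the segment
    nx, ny = -uy, ux                                  # unit normal (left of travel)
    points = []
    for i in range(samples):
        s = length * i / (samples - 1)                # distance travelled so far
        offset = amplitude * math.sin(2 * math.pi * s / period)
        points.append((x0 + ux * s + nx * offset, y0 + uy * s + ny * offset))
    return points
```

Raising `amplitude` makes the wobble wider, while a shorter `period` packs more waves into the same stretch of path.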

Diagram

Photo Documentation

In the final sprayed piece, the letters G and E were rotated around specific points, the letter M was replicated and shifted up multiple times, and the S was scaled up and shifted to the right.