Live Demonstration

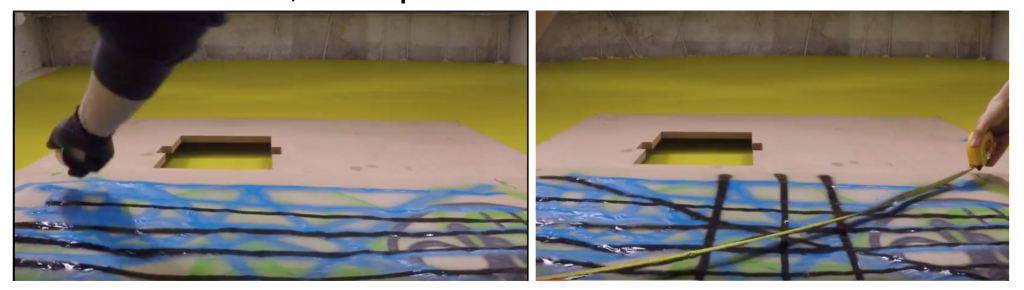

The tool we built to mount on the robot consists of a spray applicator that was cut down and attached to the piston with a milled aluminum part. After building the spray paint applicator tool, we tested it on the robot by manually inputting a path for it to follow while toggling the pneumatic piston on and off at the right times. Overall, the test was successful and showed what a programmed path could look like. This simple test let us evaluate speed, cornering, and distance from the drawing surface. In the GIF above, the robot arm moves at 250 mm/second in manual (pendant) mode, but it can reach up to 1500 mm/second when running an automated program path.
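The timing problem behind toggling the piston can be sketched in Python. This is a minimal sketch, not the program we ran on the robot: it assumes a hypothetical waypoint list of `(x_mm, y_mm, spray_on)` tuples and a constant tool speed, ignoring the acceleration and blending the controller applies at corners. It computes when each segment starts and ends, and when the piston has to be pressed or released.

```python
import math

# Hypothetical waypoint format: (x_mm, y_mm, spray_on), where spray_on says
# whether the piston should be pressed while moving *to* that waypoint.
Waypoint = tuple[float, float, bool]


def piston_schedule(path: list[Waypoint], speed_mm_s: float) -> list[tuple[float, float, bool]]:
    """Return (start_s, end_s, spray_on) for each segment, assuming constant speed."""
    schedule = []
    t = 0.0
    for (x0, y0, _), (x1, y1, spray_on) in zip(path, path[1:]):
        duration = math.hypot(x1 - x0, y1 - y0) / speed_mm_s
        schedule.append((t, t + duration, spray_on))
        t += duration
    return schedule


def toggle_times(schedule: list[tuple[float, float, bool]]) -> list[tuple[float, bool]]:
    """Times at which the piston must change state, assuming it starts released."""
    events = []
    pressed = False
    for start, _end, spray_on in schedule:
        if spray_on != pressed:
            events.append((start, spray_on))
            pressed = spray_on
    if pressed:
        events.append((schedule[-1][1], False))  # release at the end of the path
    return events


# A 100 mm travel move with the piston released, then a 500 mm square sprayed.
path = [(0, 0, False), (100, 0, False), (600, 0, True),
        (600, 500, True), (100, 500, True), (100, 0, True)]
print(toggle_times(piston_schedule(path, 250.0)))  # pendant-mode speed
print(piston_schedule(path, 1500.0)[-1][1])        # total time at top speed
```

At 250 mm/second this example path takes 8.4 seconds; at 1500 mm/second the same 2100 mm path takes 1.4 seconds, so the on/off times have to be recomputed whenever the speed changes rather than reused.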

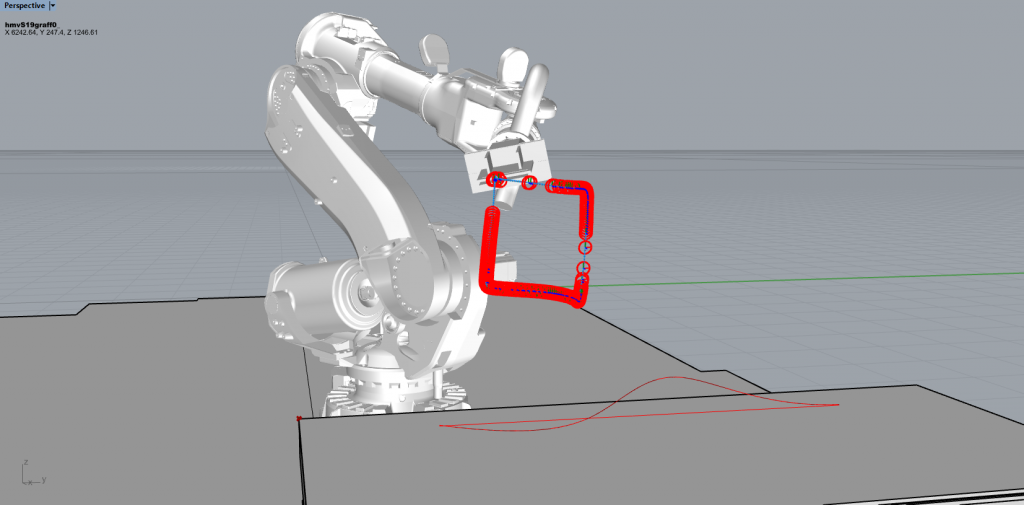

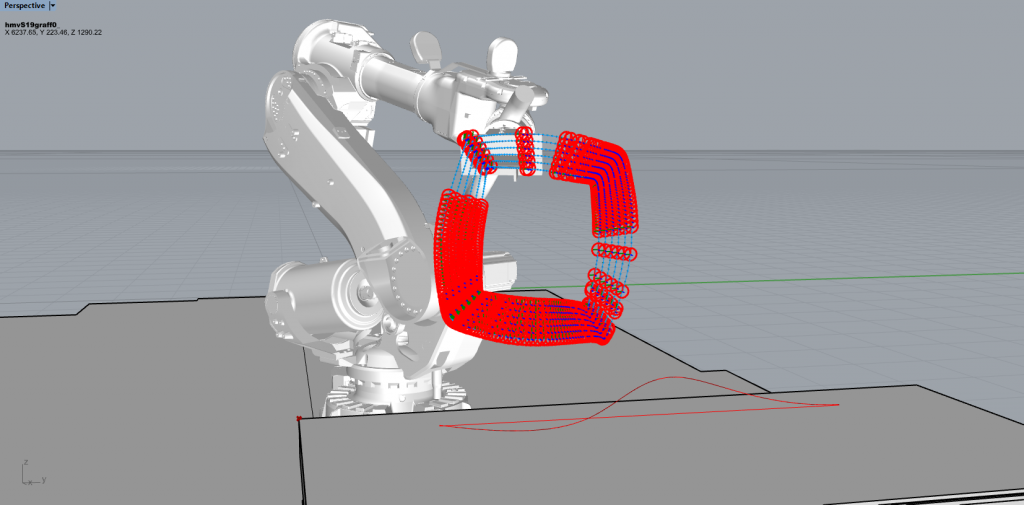

Example Artifact – Capability

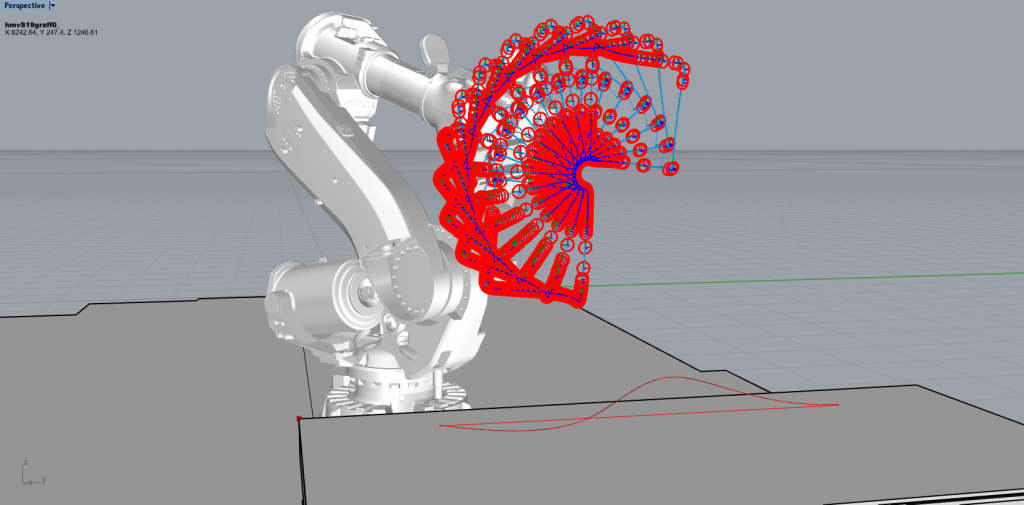

Pictured above is a spray path of a box, recorded with motion capture in Motive and then imported into HAL in Rhino. Gems visited the lab to make this recording, which captured the can's linear motion, speed, angle, and distance from the drawing surface. Below are two possible transformations applied to this square path.
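Two transformations of this kind, uniform scaling and rotation about the path's center, can be sketched as follows. These are illustrative examples and not necessarily the two transformations pictured. The sketch assumes the path is a list of 2D points already projected onto the drawing plane, and it uses the bounding-box center as the pivot (an assumption; a densely and unevenly sampled motion capture path would skew a simple average of its points).

```python
import math

Point = tuple[float, float]


def bbox_center(points: list[Point]) -> Point:
    """Center of the axis-aligned bounding box, used as the pivot (an assumption)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return ((min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2)


def scale_about_center(points: list[Point], factor: float) -> list[Point]:
    """Uniformly scale the path about its bounding-box center."""
    cx, cy = bbox_center(points)
    return [(cx + (x - cx) * factor, cy + (y - cy) * factor) for x, y in points]


def rotate_about_center(points: list[Point], degrees: float) -> list[Point]:
    """Rotate the path counterclockwise (y pointing up) about its bounding-box center."""
    cx, cy = bbox_center(points)
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    return [(cx + (x - cx) * c - (y - cy) * s,
             cy + (x - cx) * s + (y - cy) * c) for x, y in points]


square = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
print(scale_about_center(square, 2.0))
print(rotate_about_center(square, 90.0))
```

Pivoting on the center keeps a transformed shape roughly in place on the canvas, which makes it easier to spray it over or next to the original.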

Next Steps | Moving Forward

We have identified the key building blocks of our project and split them into two main parts. The first is contained entirely in Rhino and Grasshopper: determining the capabilities of the robot and programming the paths it can take based on the motion capture study. The second is the hardware and spraying capability of the robot, which we tackled using a spray applicator and a pneumatic piston system.

Our final product will be a piece built up iteratively over multiple steps. The piece will emerge as the artist responds to each action the robot takes, making this a procedural and performative process. In the early stages of the performance, the artist will have only a basic idea of what the robot is capable of, but over time he will learn what can be translated computationally, and that knowledge will inform his next steps.

The artist will choose from a set of transformations, and the robot will apply the selected transformation to the previous step. The number of options available to the artist for each transformation (such as location or scale) will be determined as we program each transformation.
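The step-by-step process described here can be modeled as a loop in which each chosen transformation is applied to the previous step's path. In this sketch the library entries `translate` and `scale`, and their options `dx`, `dy`, and `factor`, are hypothetical placeholders for the transformations we have yet to program.

```python
from typing import Callable

Path = list[tuple[float, float]]


# Hypothetical library entries; each takes the previous path plus artist options.
def translate(path: Path, dx: float = 0.0, dy: float = 0.0) -> Path:
    """Move the whole path by (dx, dy)."""
    return [(x + dx, y + dy) for x, y in path]


def scale(path: Path, factor: float = 1.0) -> Path:
    """Scale the path about the origin."""
    return [(x * factor, y * factor) for x, y in path]


LIBRARY: dict[str, Callable[..., Path]] = {"translate": translate, "scale": scale}


def perform(initial: Path, choices: list[tuple[str, dict]]) -> list[Path]:
    """Return every step's path; each step transforms the one before it."""
    steps = [initial]
    for name, options in choices:
        steps.append(LIBRARY[name](steps[-1], **options))
    return steps


steps = perform([(0.0, 0.0), (10.0, 0.0)],
                [("scale", {"factor": 2.0}), ("translate", {"dx": 5.0})])
print(steps[-1])
```

Keeping every intermediate step, rather than only the latest one, mirrors the performance: each layer stays on the canvas, and the artist can look back at how the piece evolved.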

Our next step is to build a library of specific ways in which the motion capture data can be modified and exaggerated. We will use many of the 2D transformations from our original proposal as a starting point. Our main goal is to have the robot produce something a human is incapable of producing. Speed, scale, and precision are the most obvious variables to modify. In speed, the robot can move at up to 1500 mm/second. In scale, the robot has a reach of 2.8 meters, and combined with the increased speed, its output could be drastically different from what a human could produce. The robot is also capable of much greater precision, especially at corners. If we let it, the outcome could look quite mechanical, which would be an interesting contrast to the hand-sprayed version.
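A quick check of how far a gesture can be scaled up, and how long the scaled version takes at top speed, might look like this. It treats the 2.8 m reach as a simple radius around the robot base in the drawing plane, which is a simplification (real reachability also depends on tool orientation and joint limits), and it ignores acceleration. The example stroke and base position are hypothetical.

```python
import math

REACH_MM = 2800.0              # 2.8 m reach, treated as a planar radius (assumption)
ROBOT_MAX_SPEED_MM_S = 1500.0  # top speed quoted above


def path_length(path: list[tuple[float, float]]) -> float:
    """Total polyline length in millimeters."""
    return sum(math.hypot(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(path, path[1:]))


def max_scale_within_reach(path, base=(0.0, 0.0), reach_mm=REACH_MM) -> float:
    """Largest factor for scaling the path about the base so every point stays in reach."""
    bx, by = base
    farthest = max(math.hypot(x - bx, y - by) for x, y in path)
    return reach_mm / farthest


stroke = [(0.0, 400.0), (0.0, 700.0)]  # hypothetical stroke, base at the origin
k = max_scale_within_reach(stroke)
print(k, path_length(stroke) * k / ROBOT_MAX_SPEED_MM_S)
```

Here a 300 mm stroke whose far end sits 700 mm from the base could be scaled by 4 to 1200 mm, which the robot would cover in 0.8 seconds at 1500 mm/second.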

Project Documentation

To understand the artist's gestural motions, we first captured his techniques to identify which variables we must account for: for instance, his speed, his distance from the canvas, and the wrist motions that create the flares.
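Distance from the canvas and the tilt of the can both reduce to simple vector computations once the canvas is described as a plane. In this sketch the canvas plane (a point plus a normal) and the spray direction vector are assumptions; in practice the spray direction would have to be derived from the rigid body's recorded orientation, which is not shown here, and tilt is only a rough stand-in for wrist motion.

```python
import math

Vec = tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def norm(a: Vec) -> float:
    return math.sqrt(dot(a, a))


def distance_to_canvas(p: Vec, plane_point: Vec, plane_normal: Vec) -> float:
    """Perpendicular distance from the can position p to the canvas plane."""
    return abs(dot(sub(p, plane_point), plane_normal)) / norm(plane_normal)


def tilt_degrees(spray_dir: Vec, plane_normal: Vec) -> float:
    """Angle between the spray direction and the canvas normal (0 = spraying head-on)."""
    c = abs(dot(spray_dir, plane_normal)) / (norm(spray_dir) * norm(plane_normal))
    return math.degrees(math.acos(min(1.0, c)))  # clamp guards against rounding above 1


# Hypothetical canvas: the wall plane y = 0, facing +y; units in millimeters.
wall_point, wall_normal = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)
print(distance_to_canvas((100.0, 250.0, 1200.0), wall_point, wall_normal))
print(tilt_degrees((1.0, -1.0, 0.0), wall_normal))
```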

To determine the velocities to input into the robot arm, we calculated the average speed and distance of each of the artist's strokes. These measurements serve as data points to plug into Grasshopper.
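The per-stroke averages can be computed directly from the recorded samples. This sketch assumes each stroke is a list of hypothetical `(time_s, x_mm, y_mm, z_mm)` samples. Average speed here means total path length divided by elapsed time, so any pause within a stroke lowers it.

```python
import math

# Hypothetical sample layout: (time_s, x_mm, y_mm, z_mm)
Sample = tuple[float, float, float, float]


def stroke_stats(samples: list[Sample]) -> tuple[float, float, float]:
    """Return (path_length_mm, duration_s, average_speed_mm_s) for one stroke."""
    length = sum(math.dist(a[1:], b[1:]) for a, b in zip(samples, samples[1:]))
    duration = samples[-1][0] - samples[0][0]
    return length, duration, length / duration


def average_over_strokes(strokes: list[list[Sample]]) -> tuple[float, float]:
    """Mean stroke length (mm) and mean of the per-stroke average speeds (mm/s)."""
    stats = [stroke_stats(s) for s in strokes]
    n = len(stats)
    return sum(s[0] for s in stats) / n, sum(s[2] for s in stats) / n


s1 = [(0.0, 0.0, 0.0, 0.0), (0.5, 300.0, 0.0, 0.0), (1.0, 300.0, 400.0, 0.0)]
s2 = [(0.0, 0.0, 0.0, 0.0), (2.0, 500.0, 0.0, 0.0)]
print(stroke_stats(s1), average_over_strokes([s1, s2]))
```

Averaging the per-stroke speeds weights every stroke equally; dividing the pooled length by the pooled time would instead let long, slow strokes dominate. Which one better matches the artist's feel is a judgment call.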

To capture the stroke types, we recorded the artist's strokes with motion capture. Each take covers a different stroke type or variable, such as distance, angle, speed, flicks, and lines.

The motion capture rigid-body takes are exported as CSV point data, which is fed into Grasshopper to create an animated simulation of the robot arm's movement.
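The CSV parsing step can be sketched as below. The file layout is an assumption: a simplified header `time,x,y,z`, whereas an actual Motive export may carry additional header rows and columns that would need to be handled first. Rows with empty cells (for example, frames where tracking dropped out) are skipped, and the hypothetical `scale` parameter converts units if the export and the Rhino model differ, e.g. `1000` for meters to millimeters.

```python
import csv
import io


def read_take(text: str, scale: float = 1.0) -> list[tuple[float, float, float, float]]:
    """Parse a simplified take CSV (header: time,x,y,z) into (t, x, y, z) tuples."""
    points = []
    for row in csv.DictReader(io.StringIO(text)):
        if any(row.get(k) in ("", None) for k in ("time", "x", "y", "z")):
            continue  # skip frames with missing data
        points.append((float(row["time"]),
                       float(row["x"]) * scale,
                       float(row["y"]) * scale,
                       float(row["z"]) * scale))
    return points


sample = "time,x,y,z\n0.0,0.5,0.25,1.0\n0.01,,,\n0.02,0.75,0.25,1.0\n"
print(read_take(sample, scale=1000.0))
```

Dropping incomplete frames rather than interpolating keeps the sketch simple; a gap in the data then shows up as a longer straight segment in the simulated path.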

To perform the spray action with the robot, we designed a tool with a pneumatic piston that pushes and releases a modified off-the-shelf spray can holder.