Abstract

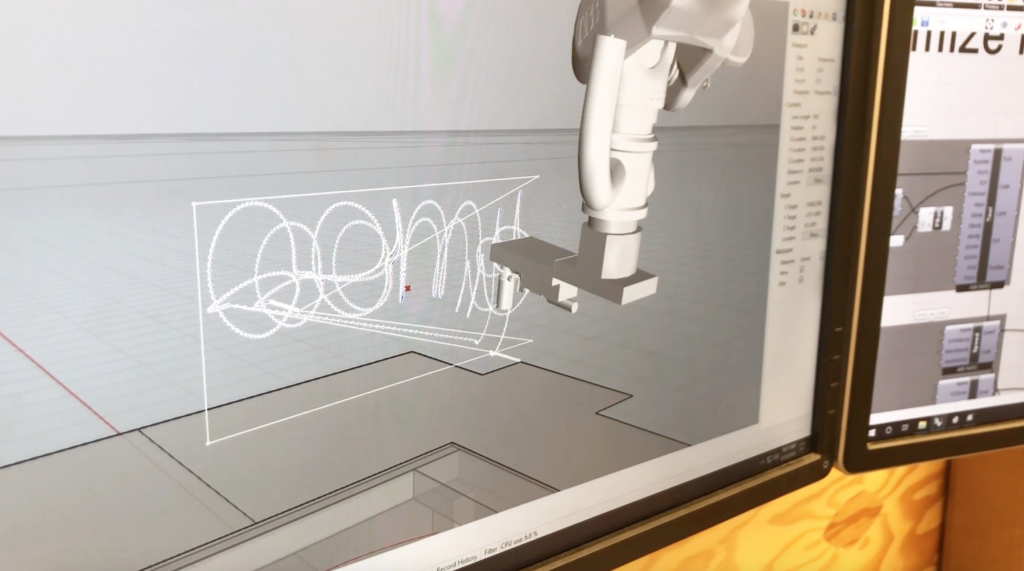

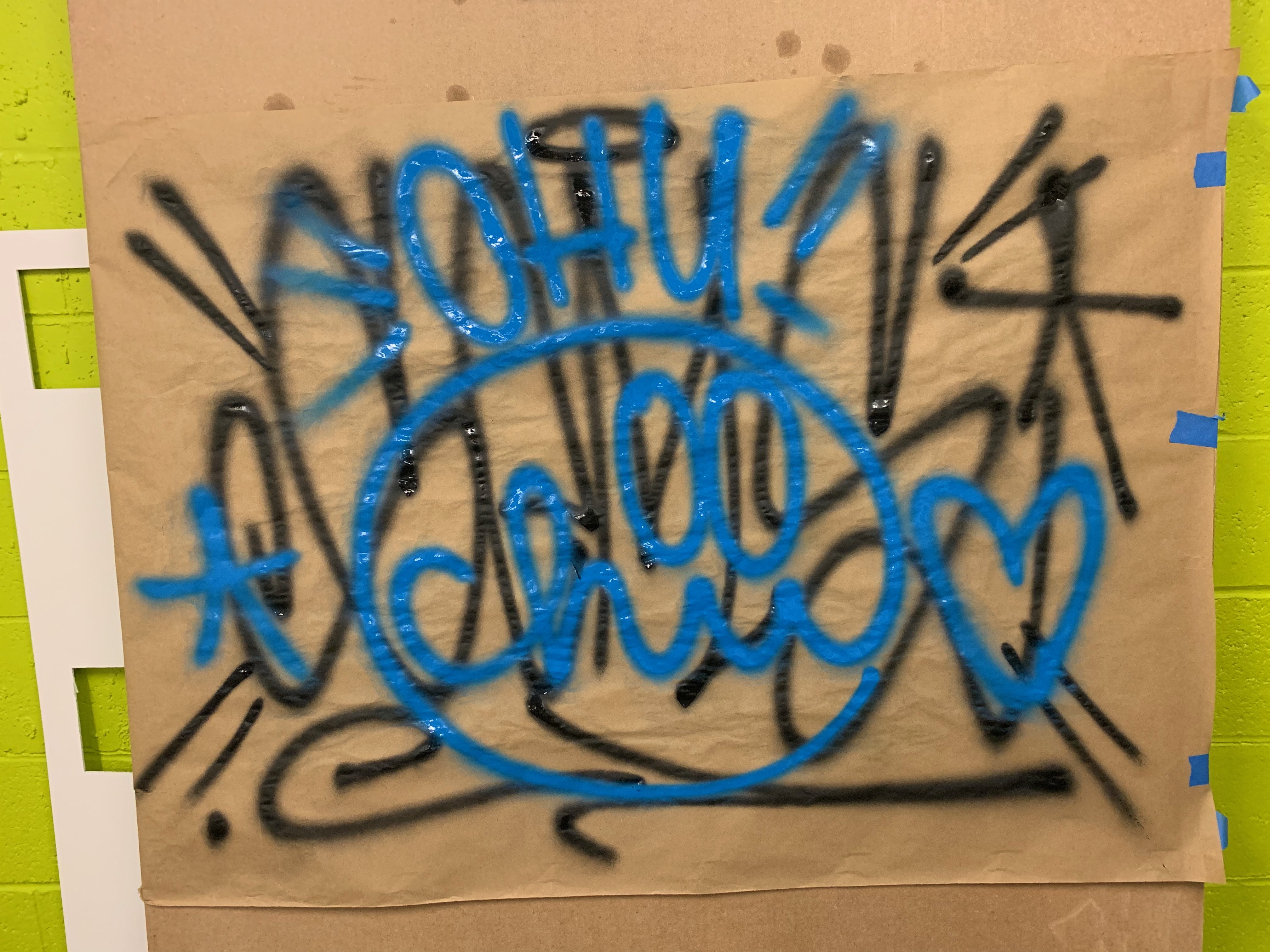

Our project aimed to discover and analyze the gestural aesthetic of graffiti and its translation into a robotic and technical system. Our goal was to augment and extend, but not replace, the craft of graffiti using a robotic system. With the capabilities of the robot, we explored artistic spaces that were beyond the reach of human interaction but still carried heavy influences from the original artist. We modified the artist’s aesthetic and tag to create a new art piece that was developed and programmed through Grasshopper.

Objectives

Our goal was to use the robot’s capabilities to create something that an artist would not be able to make by hand. We took advantage of the reach and scale of the robot, specifically the track along which it slides. By programming transformations, we could replicate each letter precisely multiple times, in positions that would have been impossible for the artist to reach.

Implementation

Ultimately, we stuck with simple modifications so that we had time to produce a successful final piece for the show. We applied a transformation to each letter via Grasshopper. A large part of getting to this point was making the hardware work (the spray tool and canvases) and writing the Python code. If we took the project further, we would like to create more intricate and complex transformations, given the area and space available to the robot.

Outcomes

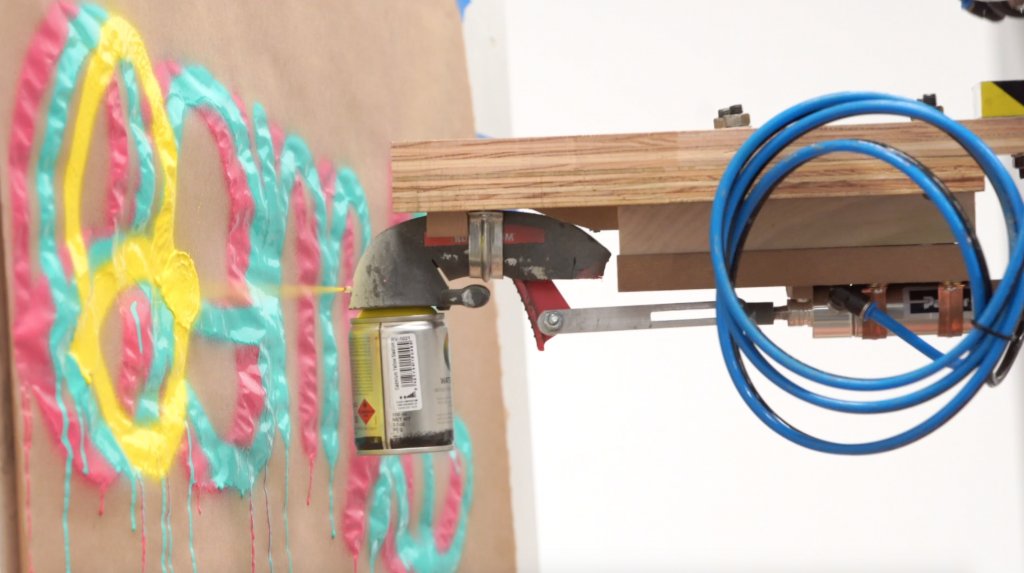

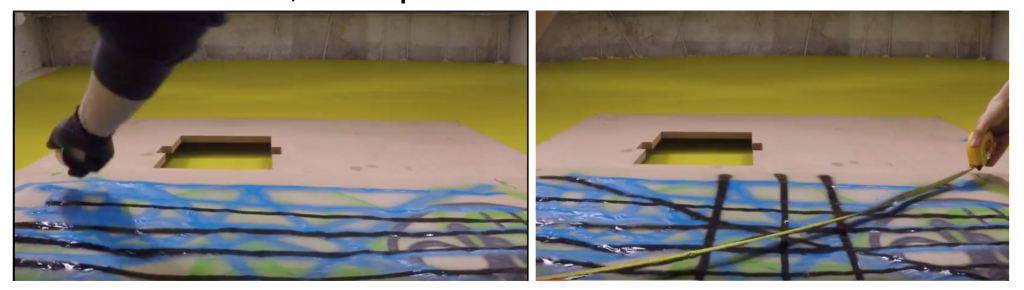

We successfully made the robot spray an artist’s tag with near-exact fidelity to the original. At the final show, one of the graffiti artists commented that it looked exactly like his own work and that he was not sure he could tell this tag had been sprayed by the robot. We had the hardware working in the first half of the semester and fine-tuned it along the way (nozzle type, distance from canvas, etc.). The programming side was more complicated, but we ultimately programmed the robot to spray the desired outcomes. One challenge was that the robot arm was constrained at certain angles and distances. We remedied most of this by rotating the drawing plane 90 degrees and using the wall that runs the length of the track. Due to time, we were unable to test all of the initial transformations we had programmed, but they would be part of any future work.

Contribution

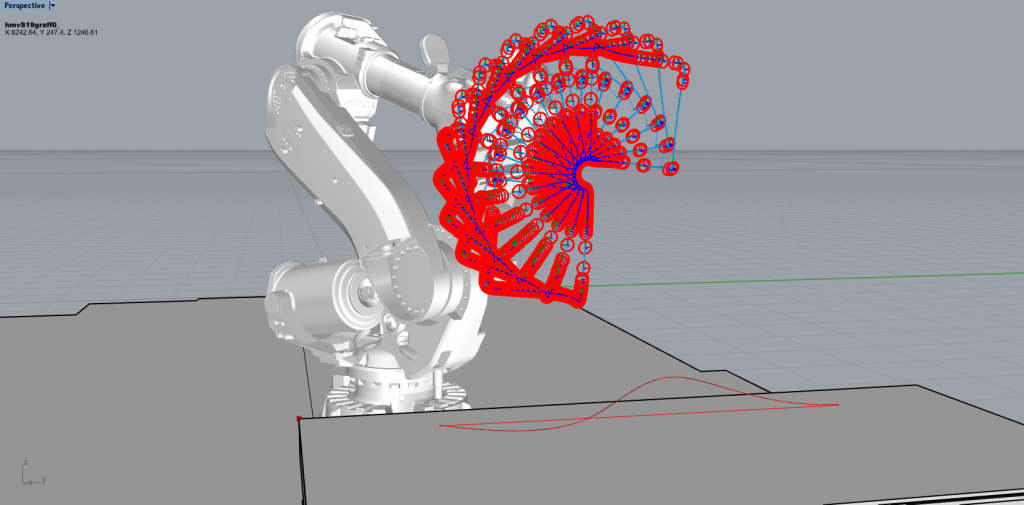

Perry Naseck developed a Grasshopper definition that pre-processed all the data using his Python script. The backend of this project required precise timing of when the pneumatic trigger turns the spray can on and off. He therefore wrote a Python script that directed the robot arm to start and stop spraying based on the branches of the Grasshopper data tree. Perry also implemented transformations such as resizing, rotation, and shifting.
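The on/off timing logic can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Perry’s actual script. It assumes that each branch holds one stroke as a list of (x, y) points. The command names `move`, `spray_on` and `spray_off` are hypothetical placeholders for whatever the robot controller actually expects.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def strokes_to_commands(strokes: List[List[Point]]) -> List[tuple]:
    """Flatten strokes (one per data-tree branch) into a command list.

    For each stroke: travel to its first point with the valve closed, open
    the valve, trace the remaining points, then close the valve before the
    next travel move. Command names are hypothetical placeholders.
    """
    commands: List[tuple] = []
    for stroke in strokes:
        if not stroke:
            continue  # skip empty branches
        commands.append(("move", stroke[0]))  # travel move, valve closed
        commands.append(("spray_on",))
        for pt in stroke[1:]:
            commands.append(("move", pt))  # painting moves
        commands.append(("spray_off",))
    return commands
```

Closing the valve before every travel move keeps the gaps between strokes and letters free of overspray.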

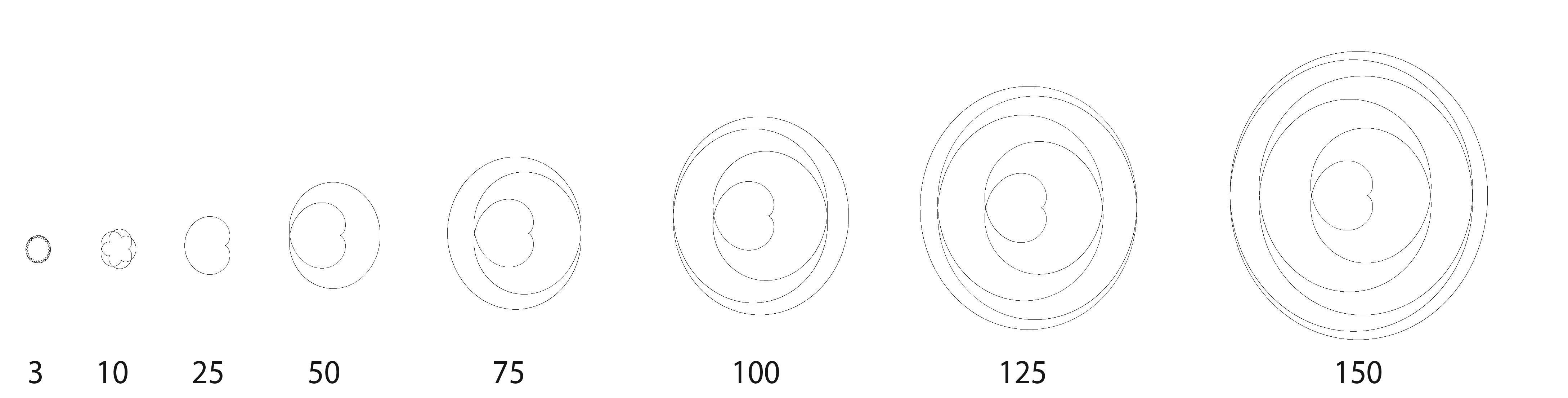

Andey Ng implemented transformations using Grasshopper plug-ins such as Rabbit. The Rabbit plug-in allowed us to create large-scale transformations that replicate fractals. She also created the negative-space transformations, which fill in the bounded whitespace of the piece: everything except the letters themselves.
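Rabbit’s own components are not reproduced here. As a plain-Python stand-in for the fractal idea, the sketch below uses an iterated function system. A small set of maps, each shrinking a path toward a fixed anchor point, is applied repeatedly to every copy. Any anchors and scale factors passed in are hypothetical choices for illustration.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Path = List[Point]


def shrink_toward(anchor: Point, s: float) -> Callable[[Path], Path]:
    """Return a map that scales a path by factor s about a fixed anchor point."""
    ax, ay = anchor

    def f(path: Path) -> Path:
        return [(ax + s * (x - ax), ay + s * (y - ay)) for x, y in path]

    return f


def ifs_copies(path: Path, maps: Sequence[Callable[[Path], Path]], depth: int) -> List[Path]:
    """Apply every map to every copy, `depth` times (an iterated function system).

    The final level contains len(maps) ** depth copies of the original path.
    """
    level = [path]
    for _ in range(depth):
        level = [f(p) for p in level for f in maps]
    return level
```

With three half-scale maps anchored at the corners of a triangle, this produces a Sierpinski-style arrangement of the tag. Copy counts grow exponentially, so small depths are enough for a canvas.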

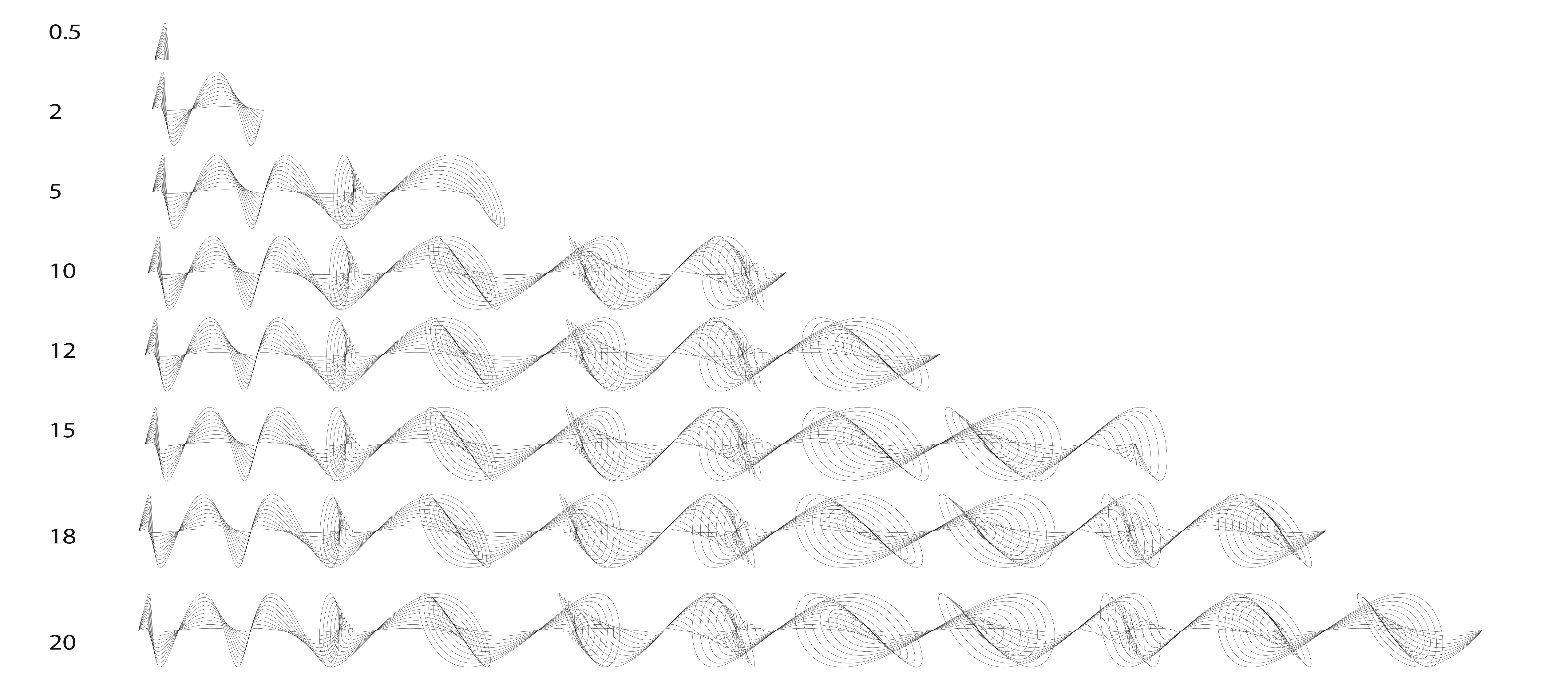

Mary Tsai was in charge of the necessary 3D modeling in Rhino as well as the physical hardware and tool construction. She built the spray mount, attaching the pneumatic piston to the applicator with a milled aluminum piece, as well as the easel that held the practice canvases. Mary also worked on transformations that incorporated a sine wave into the robot’s path, varying the motion based on amplitude and period. She also filmed and produced the final video.
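A sine-wave transformation of this kind can be sketched as a sideways offset along the path. Each point is pushed along the path’s normal by amplitude × sin(2π·s / period), where s is the arc length travelled so far. This is a minimal sketch under that assumption, not the Grasshopper definition used in the project.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def sine_offset(path: List[Point], amplitude: float, period: float) -> List[Point]:
    """Displace each point sideways by amplitude * sin(2*pi*s/period).

    s is the arc length from the start of the path to the current point;
    the sideways direction is the left-hand normal of the local tangent.
    """
    if len(path) < 2:
        return list(path)
    out: List[Point] = []
    s = 0.0
    for i, (x, y) in enumerate(path):
        if i > 0:
            px, py = path[i - 1]
            s += math.hypot(x - px, y - py)  # accumulate arc length
        # Tangent from neighbouring points (one-sided at the ends).
        ax, ay = path[max(i - 1, 0)]
        bx, by = path[min(i + 1, len(path) - 1)]
        tx, ty = bx - ax, by - ay
        norm = math.hypot(tx, ty) or 1.0
        nx, ny = -ty / norm, tx / norm  # left-hand normal
        d = amplitude * math.sin(2 * math.pi * s / period)
        out.append((x + d * nx, y + d * ny))
    return out
```

The path should be resampled densely before the offset is applied. With only a few points per period, the wave comes out as a coarse zigzag.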

Diagram

Photo Documentation

In the final sprayed piece, the letters G and E were rotated around specific points, the letter M was replicated and shifted up multiple times, and the S was scaled up and shifted to the right.
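Each of these per-letter moves is a 2D affine transformation. The sketch below is a minimal plain-Python version, assuming each letter is stored as a list of (x, y) points on the canvas plane. The pivot points, angles, offsets and copy counts are inputs the caller chooses; the values used for the show piece are not reproduced here.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Path = List[Point]


def rotate_about(path: Path, pivot: Point, degrees: float) -> Path:
    """Rotate every point counterclockwise by `degrees` around `pivot` (as for G and E)."""
    px, py = pivot
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    return [(px + c * (x - px) - s * (y - py), py + s * (x - px) + c * (y - py)) for x, y in path]


def translate(path: Path, dx: float, dy: float) -> Path:
    """Shift every point by (dx, dy)."""
    return [(x + dx, y + dy) for x, y in path]


def replicate(path: Path, dx: float, dy: float, count: int) -> List[Path]:
    """Return `count` copies, each offset by one more step of (dx, dy) (as for M)."""
    return [translate(path, i * dx, i * dy) for i in range(count)]


def scale_about(path: Path, center: Point, factor: float) -> Path:
    """Scale every point by `factor` relative to `center` (as for S, before shifting right)."""
    cx, cy = center
    return [(cx + factor * (x - cx), cy + factor * (y - cy)) for x, y in path]
```

For example, `translate(scale_about(s_path, center, 1.5), 300, 0)` would enlarge a hypothetical `s_path` by 50% about `center` and then shift it 300 units to the right.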


The tool we built to mount on the robot consists of a spray applicator that was cut and attached to the piston with a milled piece of aluminum. After building the tool, we tested it on the robot by entering a manual path for it to follow while switching the pneumatic piston on and off at the right times. Overall, the test was successful and showed what a programmed path could look like. This simple test allowed us to evaluate speed, corner movement, and distance from the drawing surface. In the GIF above, the robot arm is moving at 250 mm/second (manual/pendant mode), but it can reach up to 1500 mm/second with an automated program path.
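The difference between pendant speed and programmed speed is easy to estimate for a given path. This back-of-the-envelope sketch divides path length by a constant speed. It ignores acceleration, deceleration and corner blending, which would make real run times longer. The 500 mm box is a hypothetical example.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def path_length(path: List[Point]) -> float:
    """Total length of a polyline, in the same units as its points (mm here)."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def traversal_time(path: List[Point], speed_mm_s: float) -> float:
    """Idealized travel time at constant speed: length / speed."""
    return path_length(path) / speed_mm_s


# Hypothetical 500 mm square box, closed back to its start point.
box = [(0, 0), (500, 0), (500, 500), (0, 500), (0, 0)]
for speed in (250, 1500):  # pendant mode vs. automated program path
    print(f"{speed} mm/s: {traversal_time(box, speed):.2f} s")
```

The 2000 mm perimeter takes 8 s at 250 mm/s and about 1.3 s at 1500 mm/s.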

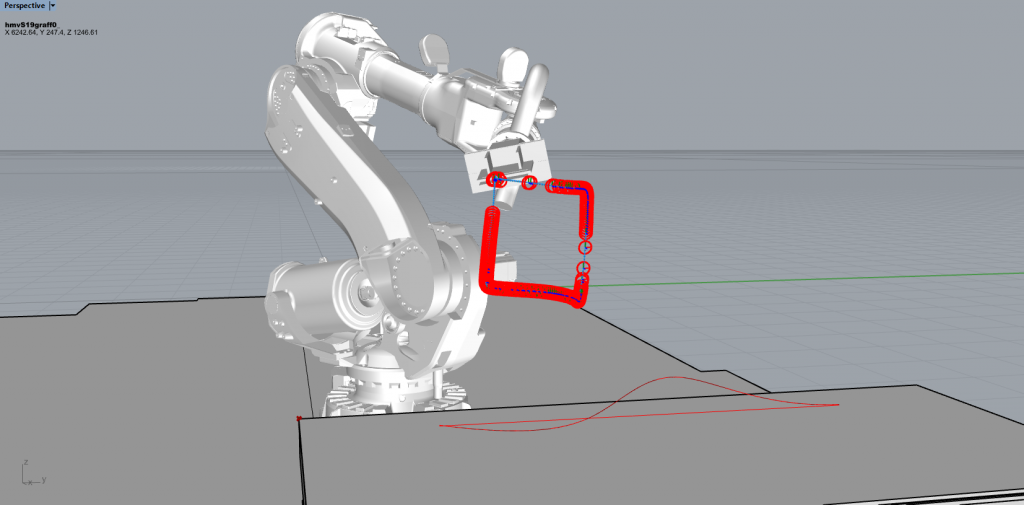

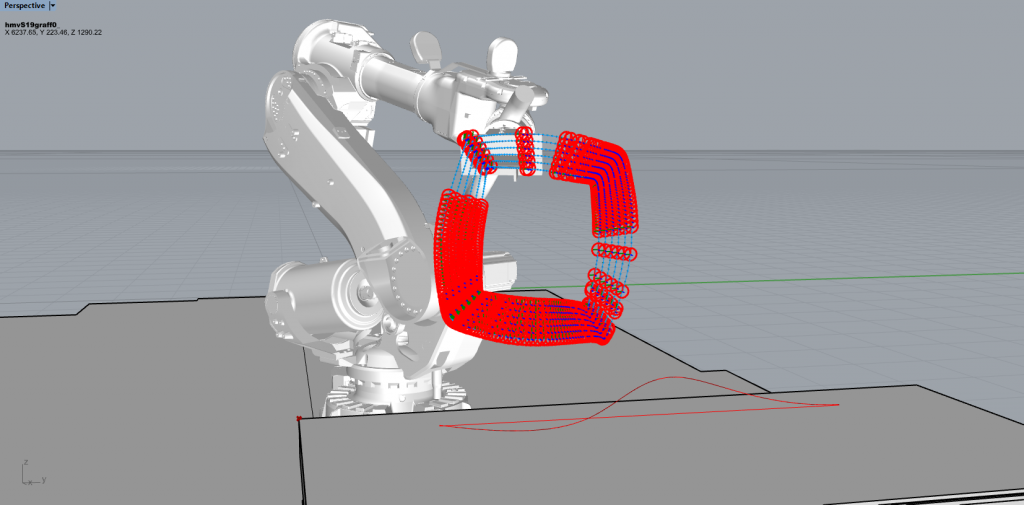

Example Artifact – Capability

Pictured above is a spray path of a box recorded with motion capture in Motive and then imported into HAL in Rhino. Gems visited the lab to make this recording, which captured the can’s linear motion, speed, angle, and distance from the drawing surface. Below are two possible transformations applied to this square path.

Next Steps | Moving Forward

We have identified the key building blocks of our project and split them into two main sections. The first is contained entirely in Rhino and Grasshopper: determining the capabilities of the robot and programming the paths it can take based on the motion capture study. The second is the hardware and spraying ability of the robot, which we tackled using a spray applicator and a pneumatic piston system.

Our final product will be built iteratively over multiple steps. The piece will emerge as the artist responds to each action of the robot, making this a procedural and performative process. At the start of the performance, the artist would have only a rough idea of what the robot is capable of. Over time, he would learn what can be computationally translated, and that knowledge would inform his next steps.

The artist will choose from a set of transformations, and the robot will apply the selected transformation to the last step. The options available to the artist for each transformation (such as location or scale) will be determined as we program each transformation.
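One way to organize this menu is a small registry. It maps each transformation name to a function plus the options the artist is allowed to change. This is a hypothetical structure, not something taken from the project. `LIBRARY`, `apply_choice` and the two sample entries are illustrative only.

```python
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]
Path = List[Point]


def _shift(path: Path, dx: float = 0.0, dy: float = 0.0) -> Path:
    return [(x + dx, y + dy) for x, y in path]


def _scale(path: Path, factor: float = 1.0, cx: float = 0.0, cy: float = 0.0) -> Path:
    return [(cx + factor * (x - cx), cy + factor * (y - cy)) for x, y in path]


# Hypothetical menu: name -> (function, options the artist may override, with defaults).
LIBRARY: Dict[str, Tuple[Callable[..., Path], dict]] = {
    "shift": (_shift, {"dx": 0.0, "dy": 0.0}),
    "scale": (_scale, {"factor": 1.0, "cx": 0.0, "cy": 0.0}),
}


def apply_choice(last_step: Path, name: str, **options) -> Path:
    """Apply the artist's chosen transformation to the previous step's path."""
    func, defaults = LIBRARY[name]
    unknown = set(options) - set(defaults)
    if unknown:
        # Only options exposed for this transformation are accepted.
        raise ValueError(f"unsupported options for {name}: {sorted(unknown)}")
    return func(last_step, **{**defaults, **options})
```

Keeping the allowed options next to each function means the menu shown to the artist and the parameters the robot accepts cannot drift apart.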

Our next steps are to build a library of specific ways that the motion capture data can be modified and exaggerated. We will use many of the 2D transformations from our original proposal as a starting point. Our main goal is to have the robot produce something that a human is incapable of doing. Speed, scale, and precision are the most obvious variables to modify. The robot can move at up to 1500 mm/second and has a reach of 2.8 meters; combined with the higher speed, this scale could produce output drastically different from what a human could make. The robot is also much more precise, especially at corners. If we allow it, the outcome could look quite mechanical, which would be an interesting contrast to the hand-sprayed version.
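Because the arm rides on a track, a point on the canvas is within reach whenever some base position along the track is within 2.8 m of it. The sketch below is a minimal geometric check under two assumptions: the track is straight, and reach is a simple radius around the base. It ignores joint limits and tool orientation, which constrained the arm in practice. The track endpoints are placeholders supplied by the caller.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def reachable(point: Vec, track_start: Vec, track_end: Vec, reach_mm: float = 2800.0) -> bool:
    """True if some base position on the straight track lies within reach_mm of point."""
    ab = tuple(e - s for s, e in zip(track_start, track_end))
    ap = tuple(p - s for s, p in zip(track_start, point))
    ab_len2 = sum(c * c for c in ab)
    # Parameter of the closest base position along the track, clamped to its ends.
    t = 0.0 if ab_len2 == 0 else max(0.0, min(1.0, sum(a * b for a, b in zip(ap, ab)) / ab_len2))
    closest = tuple(s + t * c for s, c in zip(track_start, ab))
    return math.dist(point, closest) <= reach_mm
```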

Project Documentation

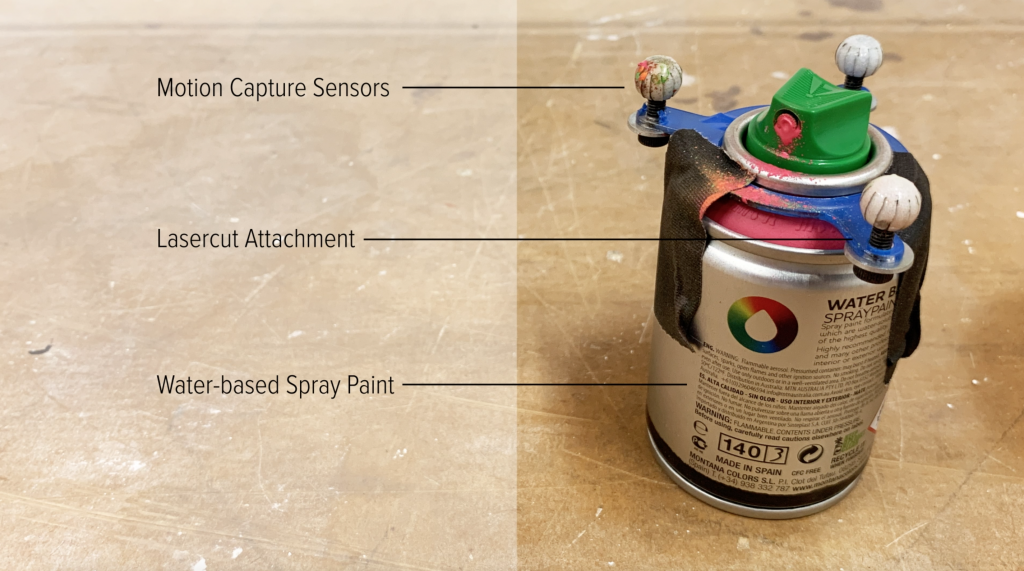

To understand the gestural motions, we initially captured the artist’s techniques to understand which variables we must account for. For instance, we must account for the artist’s speed, distance from the canvas, and wrist motions to create the flares.

To find the measurements of velocity that we need to input into the robot arm, we calculated the artist’s average speed and distance of each stroke. These measurements are used as data points to be plugged into Grasshopper.
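The sketch below reads "distance" as the length travelled during a stroke. It assumes each stroke is a time-ordered list of (t, x, y, z) samples, with t in seconds and positions in millimeters; Motive’s output units may need converting first. Average speed here is total path length divided by total stroke duration.

```python
import math
from typing import Sequence, Tuple

Sample = Tuple[float, float, float, float]  # (t seconds, x mm, y mm, z mm)


def stroke_stats(samples: Sequence[Sample]) -> Tuple[float, float, float]:
    """Return (length_mm, duration_s, average_speed_mm_s) for one stroke."""
    if len(samples) < 2:
        raise ValueError("a stroke needs at least two samples")
    # Sum straight-line distances between consecutive positions.
    length = sum(math.dist(a[1:], b[1:]) for a, b in zip(samples, samples[1:]))
    duration = samples[-1][0] - samples[0][0]
    if duration <= 0:
        raise ValueError("samples must be in increasing time order")
    return length, duration, length / duration
```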

To capture the stroke types, we recorded the artist’s strokes through Motion Capture. Each take describes a different type of stroke and/or variable such as distance, angle, speed, flicks, and lines.

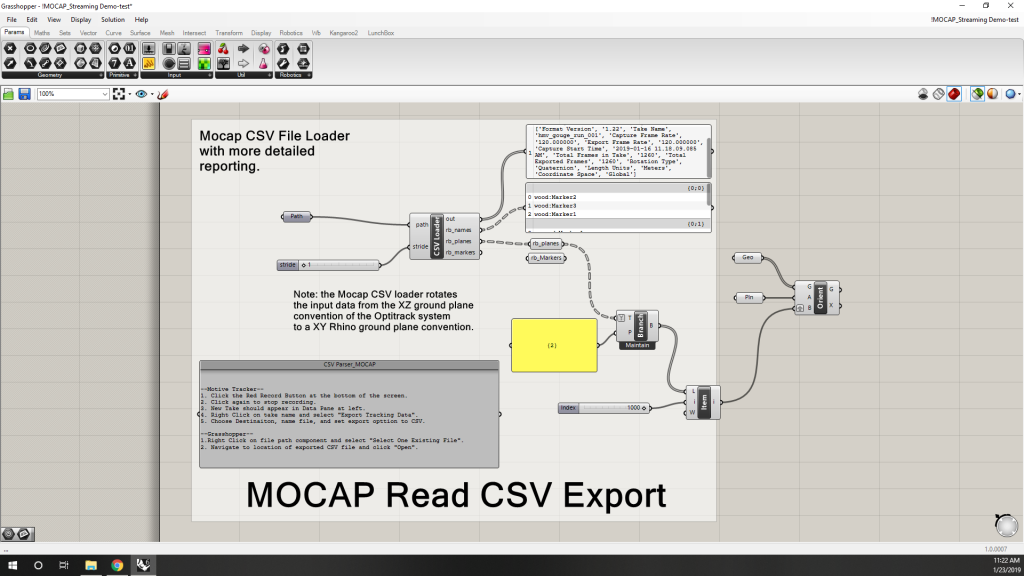

The Motion Capture rigid-body takes are recorded as CSV points, which are fed into Grasshopper to create an animated simulation of the robot arm’s movement.
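Before the points reach Grasshopper, each take has to be parsed. The sketch below assumes a simplified CSV with a single `time,x,y,z` header row for one rigid body. A real Motive export has a more elaborate multi-row header, so the column lookup would need adapting. Frames where the rigid body was not tracked are assumed to leave blank cells, and they are skipped.

```python
import csv
import io
from typing import List, Tuple


def read_rigid_body_csv(text: str) -> List[Tuple[float, float, float, float]]:
    """Parse rows of time,x,y,z into float tuples, skipping untracked frames."""
    samples = []
    for row in csv.DictReader(io.StringIO(text)):
        try:
            samples.append((float(row["time"]), float(row["x"]), float(row["y"]), float(row["z"])))
        except ValueError:
            continue  # blank position cells: the rigid body was not tracked this frame
    return samples
```

To parse a file on disk, pass its contents, for example `read_rigid_body_csv(open(path).read())`.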

To perform the spray action with the robot, we designed a tool with a pneumatic piston that pushes and releases a modified off-the-shelf spray can holder.

Overview

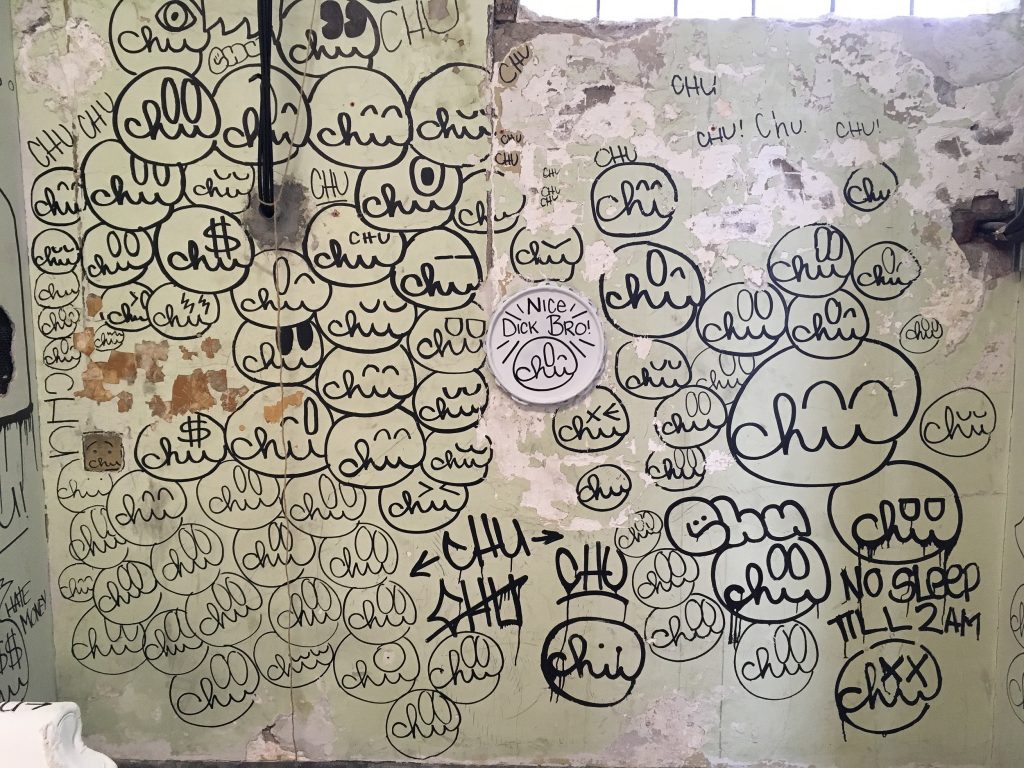

Our project aims to discover and analyze the gestural aesthetic of graffiti and its translation into a robotic and technical system. Our goal is to augment and extend, but not replace, the craft of graffiti using a robotic system. With the capabilities of the robot, we can explore and find artistic spaces that may be beyond the reach of human interaction but still carry heavy influences from the original artist. From basic patterns to new designs created from fractals of the tag, we hope to combine the artist’s style and tag into a new art piece that is developed parametrically through Grasshopper.

Due to the complexity of graffiti, we are limiting ourselves to representing the graffiti artist’s “tag,” which is always a single color and is considered the artist’s personal signature. The tag is a complex set of strokes affected by the angle of the spray can, the distance from the wall, the speed at which the can is moved, and the amount of pressure in the can. The objective of the project is to explore the translation of human artistic craft into a robotic system while maintaining the aesthetic and personal style of the artist.

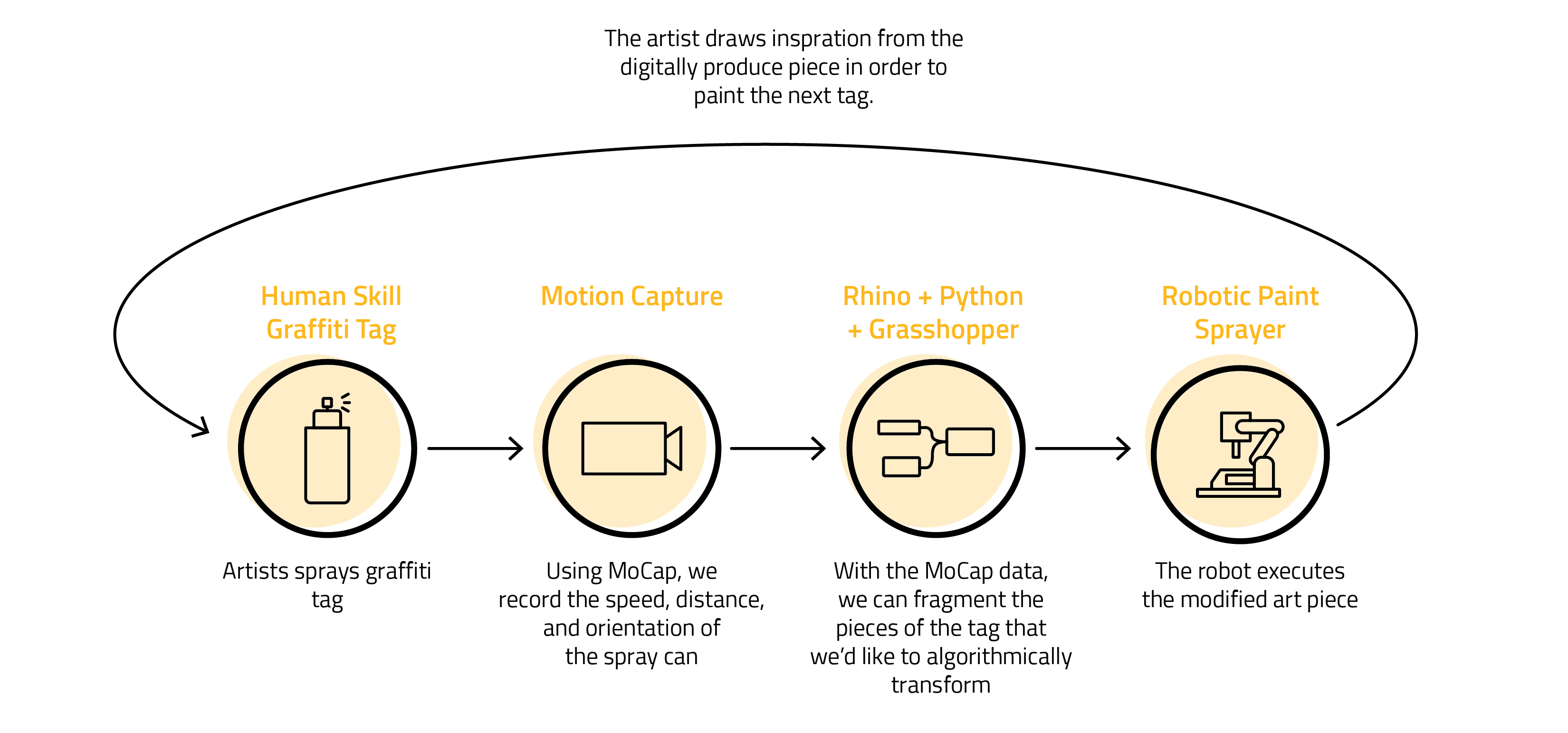

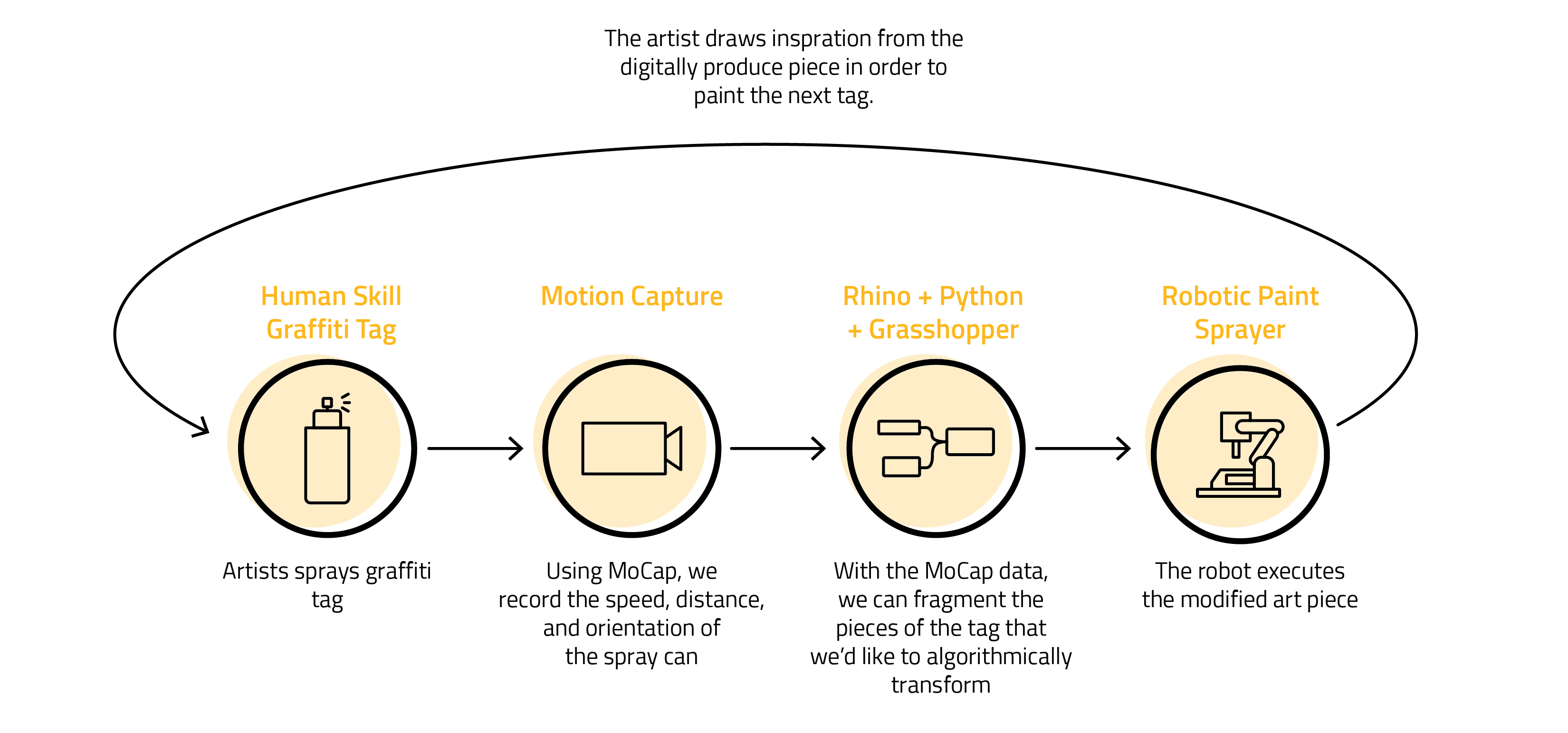

Hybrid Skill Workflow & Annotated Drawing

- Our first step is to understand and capture unique gestural motions that embody the style of the graffiti artist (e.g., flares, drips). We will watch and record the artists spraying an assortment of different tags and styles on canvas. Once these gestural motions are categorized, our team will analyze them and formulate different variables to modify and test. These variables may include speed, distance, and orientation.

- We will then use Motion Capture technology to capture the artist’s gestures performing these specific components. This will allow us to begin analyzing programmatically how to translate human motions into the robot’s language. Using Rhino and Grasshopper, we will program the robot to reproduce a similar piece, inspired by the original graffiti technique. While this is a useful starting point, our goal is not simply to reproduce the graffiti tag but to enhance it in a way that can only be done through digital technology. We plan to analyze components of the tag and parametrically modify them to add character or ‘noise.’ The robot will then produce that piece either on top of the artist’s canvas or on a separate canvas, so that the original analog piece can be compared with the digitally altered one.

- The artist would draw inspiration from the robotically enhanced piece and start to experiment with different ideas in order to see what the digital version can produce. This process is repeated multiple times. If the plan is to produce one larger cohesive final piece (e.g., a giant tag spread out over multiple canvases), the overall shape will need to be planned out beforehand. The communication between human and machine would be very procedural, alternating between graffiti drawn by the artist and art produced by the robot.

- The final project will be a collaborative and co-produced piece that has both analog and robotically sprayed marks, emphasizing the communicative and learning process between human and machine. Ideally, we would be using multiple canvases so the sizes of the art pieces would be more workable with the robot.
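The ‘noise’ idea from the second step can be prototyped as a seeded random jitter applied to a captured path. This is only one simple choice: uniform noise, independent for each point. Smoother noise would look less mechanical. The function name and the `magnitude` parameter are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def add_noise(path: List[Point], magnitude: float, seed: Optional[int] = None) -> List[Point]:
    """Jitter each point independently by up to +/- magnitude in x and y.

    A fixed seed makes the result repeatable, so the same noisy variant can
    be previewed first and then sprayed by the robot.
    """
    rng = random.Random(seed)
    return [
        (x + rng.uniform(-magnitude, magnitude), y + rng.uniform(-magnitude, magnitude))
        for x, y in path
    ]
```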

Algorithmically Modified Examples

By using Python scripting in Grasshopper, we will be able to modify, exaggerate, or add features based on the motion capture data. Below are some examples of how components could be transformed.

Overview

Our project aims to discover and analyze the gestural aesthetic of graffiti and its translation into a robotic and technical system. Our goal is to augment and extend, but not replace, the craft of graffiti using a robotic system. With the capabilities of the robot, we can explore and find artistic spaces that may be beyond the reach of human interaction but still carry heavy influences from the original artist.

Due to the complexity of graffiti, we are limiting ourselves to representing the graffiti artist’s “tag,” which is always a single color and is considered the artist’s personal signature. The tag is a complex set of strokes affected by the angle of the spray can, the distance from the wall, the speed at which the can is moved, and the amount of pressure in the can.

The objective of the project is to explore the translation of human artistic craft into the robotic system, while maintaining the aesthetic and personal styles of the artist. Our study will not be character based (studying one letter at a time), but more about the techniques that underlie the graphic style.

Timeline

- Our first step is to understand and capture unique gestural motions that embody the style of the graffiti artist (e.g., flares, drips). We will watch and record the artists spraying an assortment of different tags and styles on canvas. Once these gestural motions are categorized, our team will analyze them and formulate different variables to modify and test. These variables may include speed, distance, and orientation.

- We will then use Motion Capture technology to capture the artist’s gestures performing these specific components. This will allow us to begin to programmatically analyze how to translate the human motions into the robot’s language.

- Using Rhino and Grasshopper, we may program the robot to replicate the graffiti technique. While this is a useful starting point, our goal is not simply to reproduce the graffiti tag but to enhance it in a way that can only be done through digital technology. We are not even aiming to replicate a tag or a style perfectly, since an imperfect representation is a new style and output in itself. With this in mind, we will begin experimenting with different variables and examining the resulting pieces.

- The final output and which variables we experiment with will be determined from our findings in the previous steps.

- The final project will be a collaborative and co-produced piece that has both analog and robotically sprayed marks, emphasizing the communicative and learning process between human and machine.

Introduction:

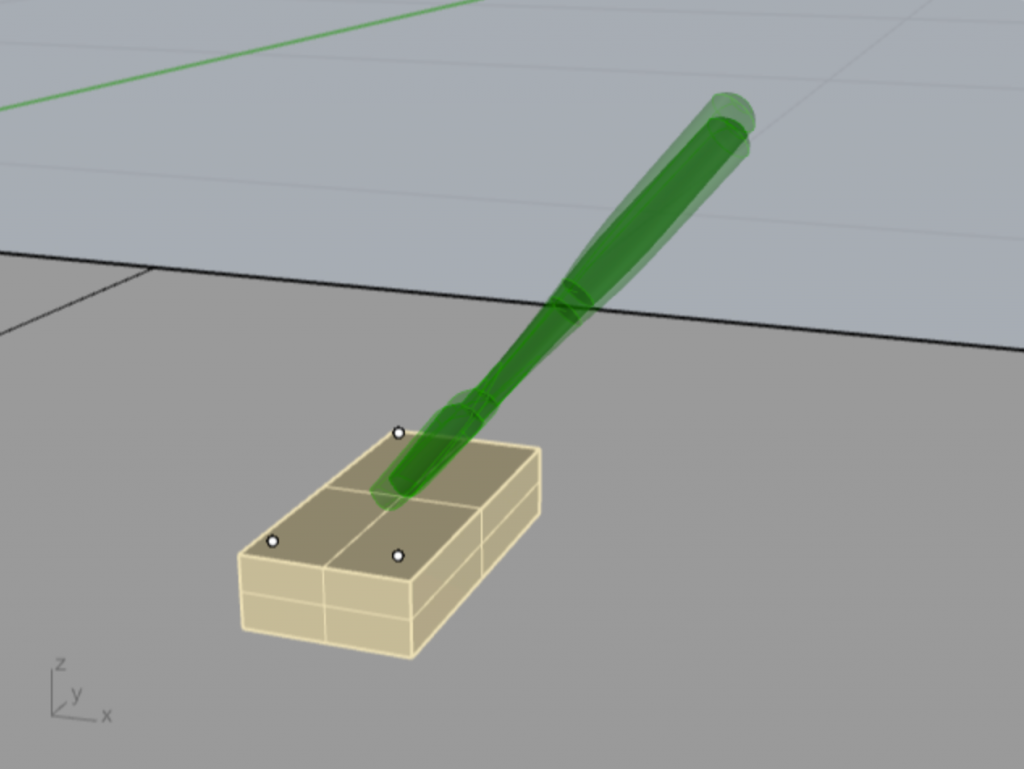

For this exercise, we chose to study a simple hand gouge and a basswood blank. The specific motion we are interested in is the gouge digging in and carving up a sliver of the wood. Because of the linear shape of the hand gouge, we had to construct a platform on which the retroreflective markers could be attached. We also added these markers to the wood blank.

Process:

Because of the small workable surface area of the wood block, the hand gouge motions were limited and fairly constricted to movement in one direction. Typically, a wood carver would approach the block from multiple directions in order to make certain types of cuts. The range of motion was further constrained by the need to keep the markers in the camera’s field of view.

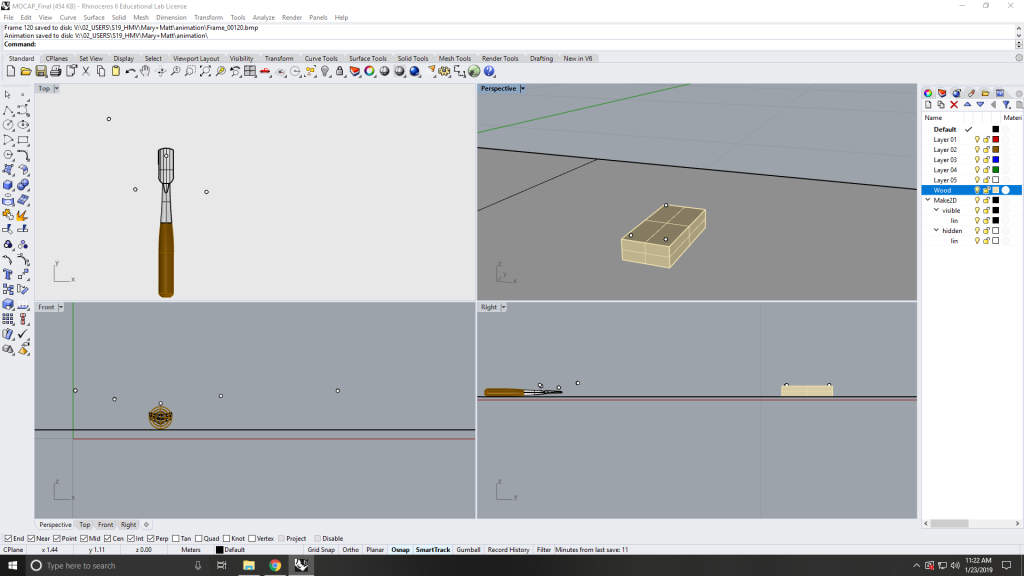

We then created two rigid bodies (the gouge and the block) in Motive and captured a base pose for orientation and three takes of ten seconds of use.

We imported the base pose capture data into Grasshopper and baked its points into Rhino. We created models of the tool and block independently and then used the base planes from the motion capture data to orient and position the models. Since the wood block is static, it also served as our ground plane.

We used these models in Rhino to create Grasshopper geometries for each rigid body (Geo). We then fed the motion capture data into Grasshopper and used the orient node to manipulate the position of our Rhino models.
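Conceptually, moving a model from its base plane to a motion-capture plane is a change of basis. The sketch below shows the math, assuming each plane is given as (origin, x-axis, y-axis) with unit-length, orthogonal axes. It mirrors what an orient-style operation computes but is not Grasshopper’s implementation.

```python
from typing import Tuple

Vec = Tuple[float, float, float]
Plane = Tuple[Vec, Vec, Vec]  # (origin, x_axis, y_axis); axes unit-length and orthogonal


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def orient(point: Vec, source: Plane, target: Plane) -> Vec:
    """Express `point` in the source plane's local frame, then rebuild it in the target frame."""
    o1, x1, y1 = source
    o2, x2, y2 = target
    z1, z2 = cross(x1, y1), cross(x2, y2)
    d = sub(point, o1)
    u, v, w = dot(d, x1), dot(d, y1), dot(d, z1)  # local coordinates in the source plane
    return tuple(o2[i] + u * x2[i] + v * y2[i] + w * z2[i] for i in range(3))
```

Applied to every vertex of a model, with the current frame’s rigid-body plane as the target, this reproduces the per-frame repositioning behind the animation.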

We added a slider to isolate one interesting second of motion, where the tool interacts with the wood, and captured the animation.

Analysis:

Based on our motion study and data, we can estimate the velocity and force with which the gouge hits the block (visibly, the point at which the gouge stops moving). This may appear obvious, but depending on the craftsperson’s skill level (or the wood type and grain direction), the gouge would either come to a hard stop or move continuously through the wood. In our capture we see the former: the tool comes to a complete stop and builds up enough force to overcome the material. This is partly due to the limited range of motion available to the craftsperson during motion capture. Some of the tool rotation observed in our study is a result of the resistance the tool encountered.
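The stop point and approach speed can be pulled from the captured positions by finite differences. The sketch below assumes the gouge tip is available as time-ordered (t, x, y, z) samples. The speed threshold is a hypothetical tuning value. It estimates velocity only, since force would need additional assumptions. `window` mimics the one-second slider used to isolate the interaction.

```python
import math
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float, float, float]  # (t seconds, x, y, z) for the gouge tip


def window(samples: Sequence[Sample], t_start: float, duration: float) -> List[Sample]:
    """Keep only samples inside [t_start, t_start + duration], like the slider."""
    return [s for s in samples if t_start <= s[0] <= t_start + duration]


def speeds(samples: Sequence[Sample]) -> List[float]:
    """Finite-difference speed over each interval between consecutive samples."""
    return [math.dist(a[1:], b[1:]) / (b[0] - a[0]) for a, b in zip(samples, samples[1:])]


def first_stop(samples: Sequence[Sample], threshold: float) -> Optional[Tuple[int, float]]:
    """Find where a moving tool first drops below `threshold`.

    Returns (index of the interval where it stops, speed of the interval just
    before it), or None if the tool never slows from above to below the
    threshold, i.e. it moves continuously through the wood.
    """
    v = speeds(samples)
    for i in range(1, len(v)):
        if v[i - 1] >= threshold and v[i] < threshold:
            return i, v[i - 1]
    return None
```

A hard stop shows up as a returned index with a high preceding speed. A skilled, continuous cut returns `None`.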