Project Objectives

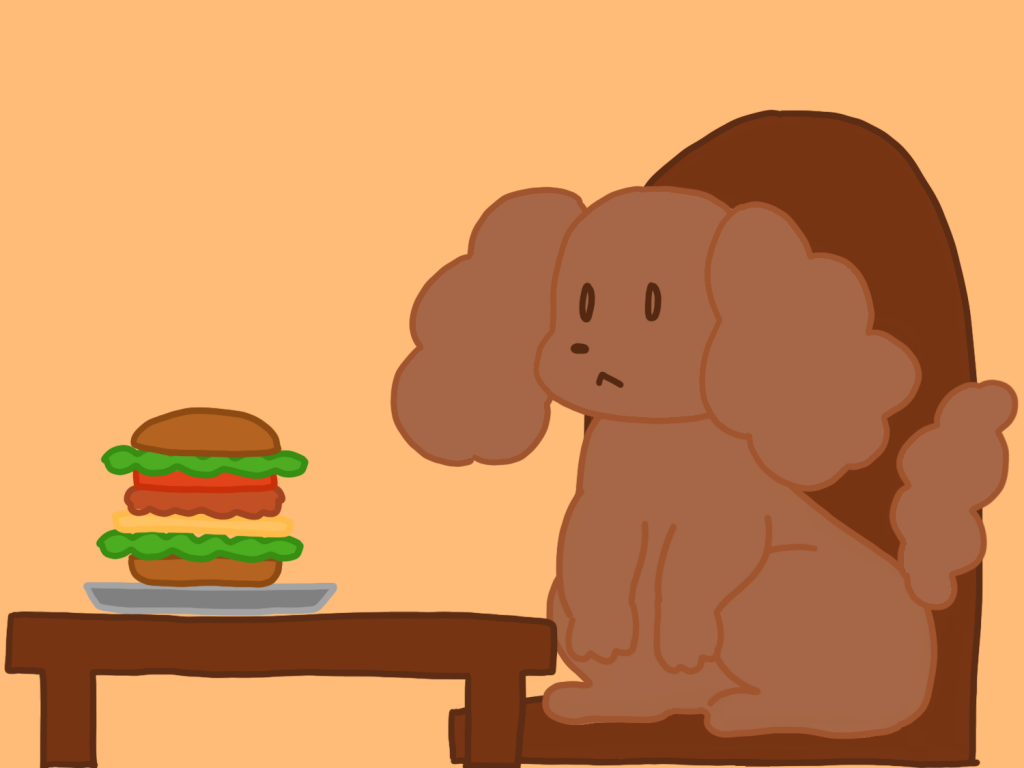

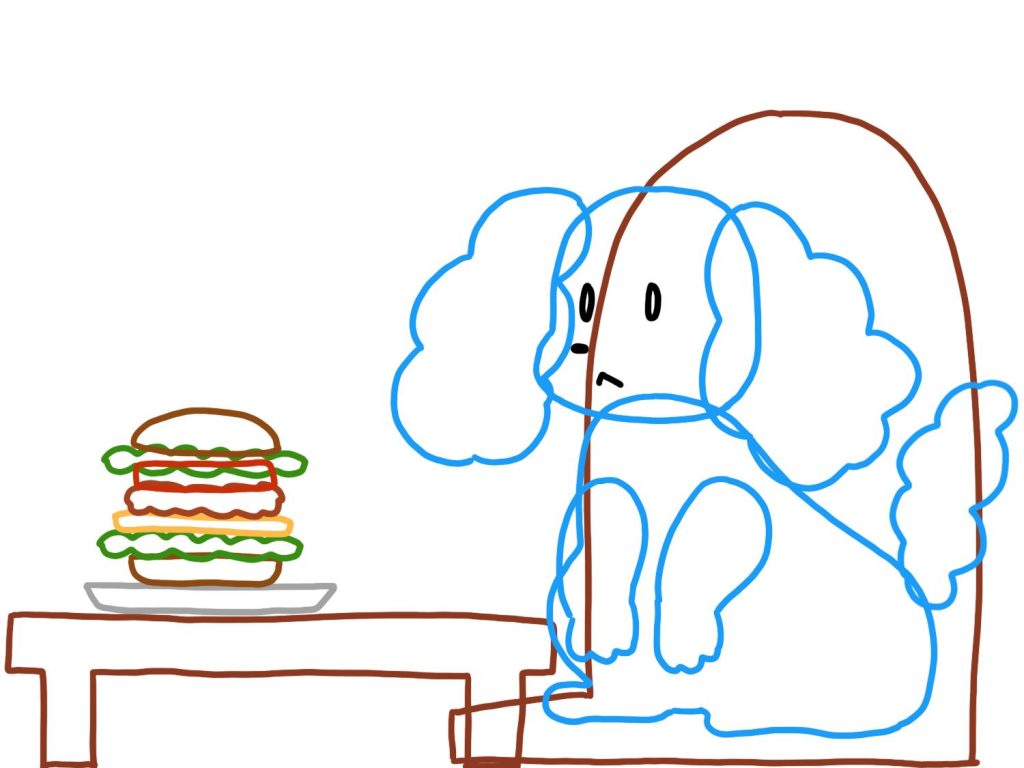

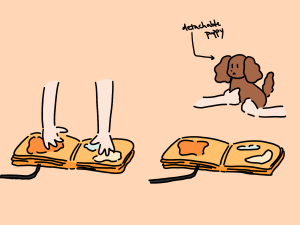

Lunch Time for the Puppy is an interactive children’s fabric book. The book is made from felt and other fabric materials with different textures, and is embedded with soft sensors and electronic output elements to create a rich storytelling experience. As a proof of concept, we produced a prototype of one page from the book for demonstration. Our vision for the project is an entire book fabricated with similar methods and featuring the main character, a detachable interactive puppy. The complete story would contain various scenarios of the puppy at places around the house.

Interaction and Story Outline

The puppy is designed to be detachable and responsive, so that it can be reattached at various positions throughout the fabric book. The book contains different scenes from a puppy’s day, and each page corresponds to a different set of puppy behaviors. For example, petting the puppy in the shower is different from petting the puppy before going for a walk.

Waiting for a walk

Shower time

Cuddle on couch

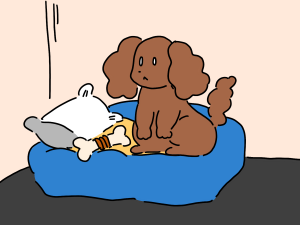

Bedtime

Creative Design Opportunities

Having successfully implemented and modified the methods provided by the Multi-Touch Kit research paper (reference 1), we believe that this technique, capacitive sensing with an off-the-shelf microcontroller board, can be used to prototype and design touch-screen-like interactions for fabric, static flat surfaces, and the surfaces of other 3D objects. The technique can augment children’s fabric books with digital interactions in addition to their original material-based interactions. Soft sensor grids can also be integrated with other soft materials to give the book a more unified look.

We see the possibility of creating open-ended storylines by adding different behavior states. We also hope that the rich tangible interaction could help reduce children’s screen time.

Prototyping Process

In this section, we will discuss sensor iterations and software modifications during the prototyping process.

Sensor Iterations

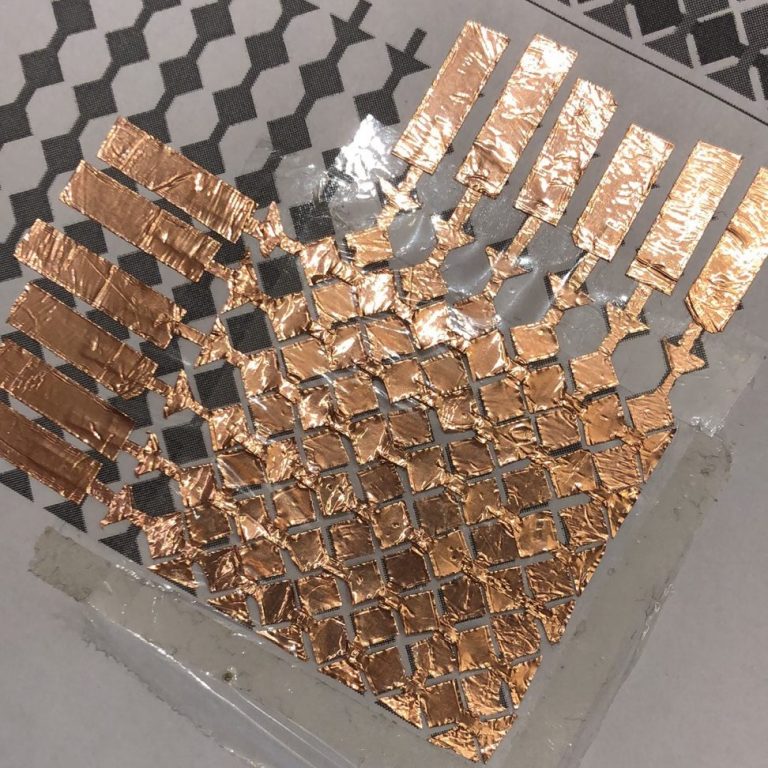

We fabricated our sensors following the instructions from the original research project. The materials and tools we used were copper tape, thin double-sided tape, paper, scissors, and X-Acto knives.

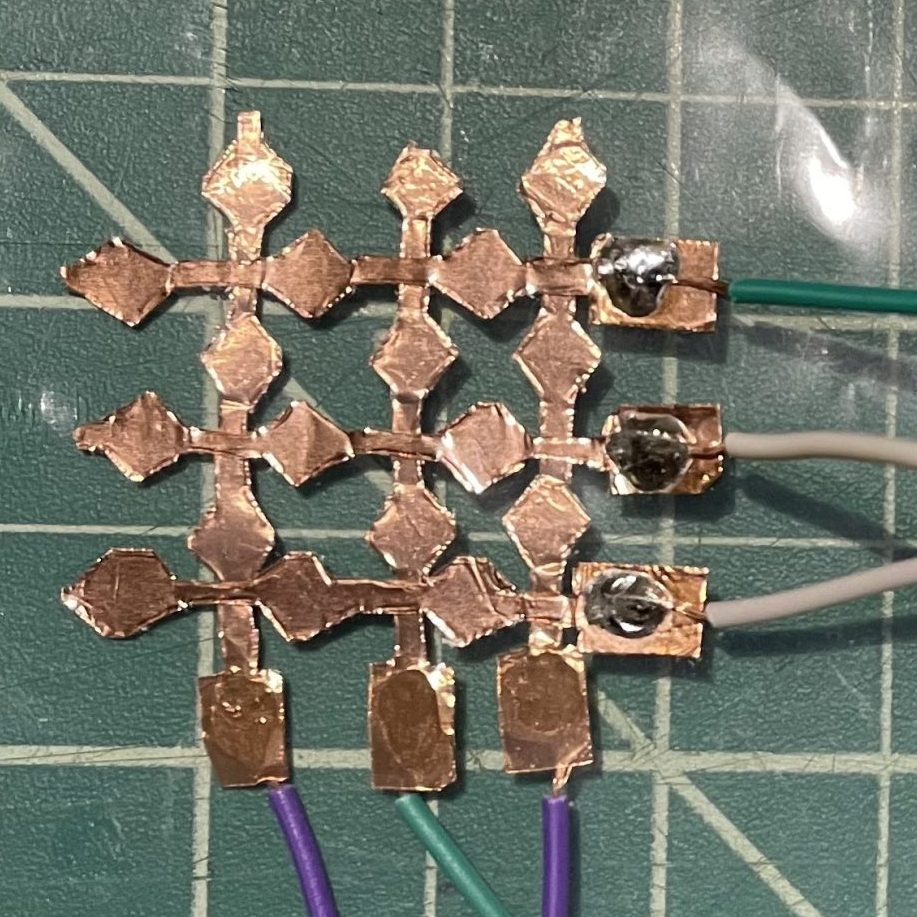

The first grid (3×3)

The second grid (6×6)

The third grid (6×6 on page)

The very first grid we made was 3×3, with copper tape applied to opposite sides of a thin plastic sheet. We made it to test whether the provided Multi-Touch Kit worked at all.

We then looked for ways to ease the fabrication process. We found the sensor design guidelines and printed out the patterns for easy tracing. After a few attempts, we settled on the following fabrication process:

- Cut out a strip of the grid from the printed pattern

- Tape the paper strip to the back of the conductive tape

- Cut the taped conductive tape along the traces

- Use the X-Acto knife to cut the vertical traces, i.e., the thin lines connecting the diamonds

- Use the scissors to cut along the diamonds

- Peel off the backing of the conductive tape and stick it down, using the printed pattern as a guide

The second grid we made was 6×6. The top layer of copper tape was applied to a plastic sheet, the bottom layer to paper, and the plastic sheet was then taped on top of the paper. This grid did not recognize light touches, which we suspected was caused by gaps between the plastic sheet and the paper.

The third and final grid was also 6×6. We applied the copper tape the same way as in the first grid, to opposite sides of a thin plastic sheet. This sensor worked relatively reliably and could detect light touches.

Software Modifications

We built our software implementation using the Processing sketch from the original research project. Here is what the sketch does:

- Read analog values from the pins

- Set a baseline

- Use BlobDetection to identify and locate touches

- Use OpenCV to visualize the touches
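The first three steps above can be illustrated with a minimal Python sketch. It is not the original Processing code: it assumes the readings arrive as a 6×6 list of numbers, averages a few untouched frames into a baseline, marks cells whose change exceeds a hypothetical `threshold`, and then groups touched cells with a simple 4-connected flood fill standing in for the BlobDetection library. Taking the absolute difference avoids assuming which direction a touch shifts the readings.

```python
from statistics import mean

GRID = 6  # our final sensor is a 6x6 grid

def compute_baseline(frames):
    """Average several untouched frames, cell by cell."""
    return [[mean(f[r][c] for f in frames) for c in range(GRID)]
            for r in range(GRID)]

def touch_mask(frame, baseline, threshold):
    """Mark cells whose reading differs from the baseline by more than threshold."""
    return [[abs(frame[r][c] - baseline[r][c]) > threshold for c in range(GRID)]
            for r in range(GRID)]

def find_blobs(mask):
    """Group touched cells into 4-connected blobs and return their (row, col) centroids."""
    seen, centroids = set(), []
    for r in range(GRID):
        for c in range(GRID):
            if not mask[r][c] or (r, c) in seen:
                continue
            # Flood fill from this cell to collect the whole blob.
            stack, cells = [(r, c)], []
            seen.add((r, c))
            while stack:
                y, x = stack.pop()
                cells.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < GRID and 0 <= nx < GRID
                            and mask[ny][nx] and (ny, nx) not in seen):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            centroids.append((sum(cy for cy, _ in cells) / len(cells),
                              sum(cx for _, cx in cells) / len(cells)))
    return centroids

# Example: two touched cells side by side and one isolated touched cell.
idle = [[100] * GRID for _ in range(GRID)]
baseline = compute_baseline([idle, idle, idle])
frame = [row[:] for row in idle]
frame[1][1] = frame[1][2] = 60
frame[4][4] = 150
print(find_blobs(touch_mask(frame, baseline, threshold=20)))  # -> [(1.0, 1.5), (4.0, 4.0)]
```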

We used the results of blob detection to perform gesture recognition.

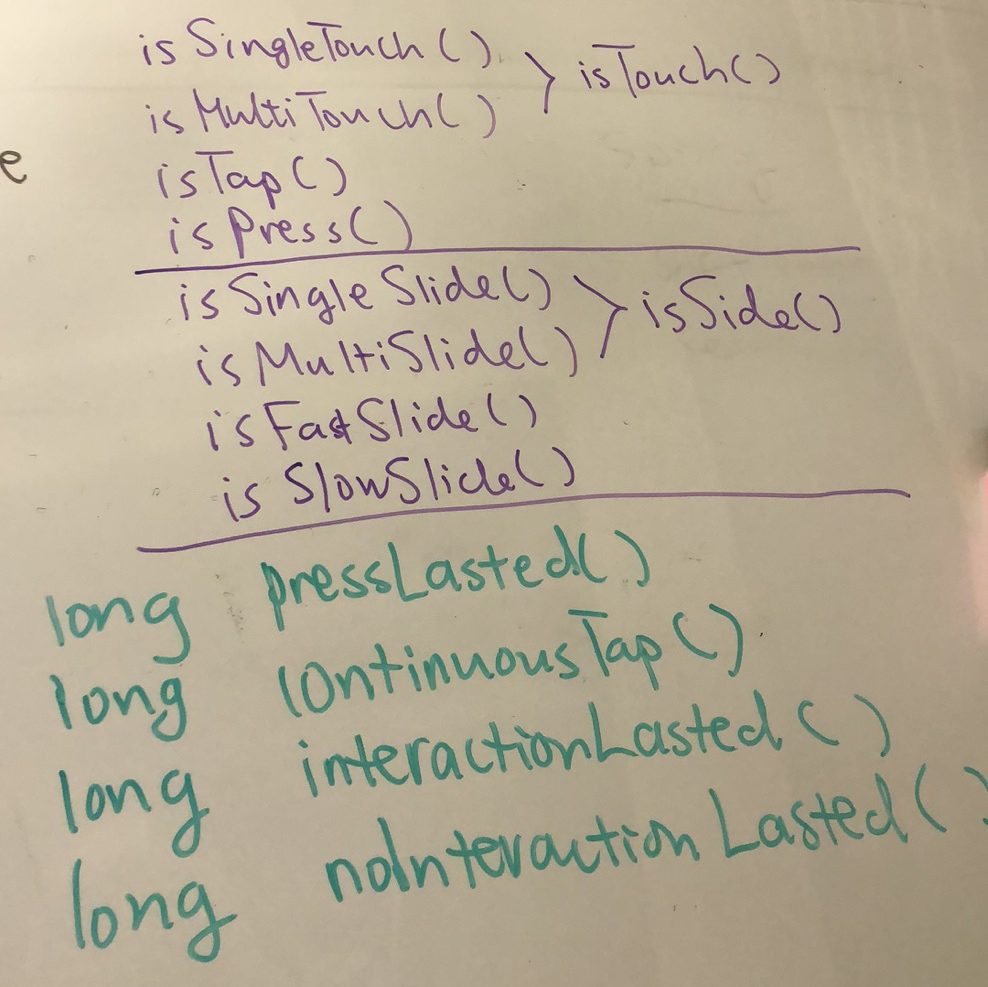

Initially, we wanted to implement recognition for the set of gestures below.

We then ran into trouble detecting sliding, partly because the grid’s resolution is so low that it cannot distinguish a slide from touches at multiple positions at the same time. We therefore set sliding aside and focused on the other interactions.

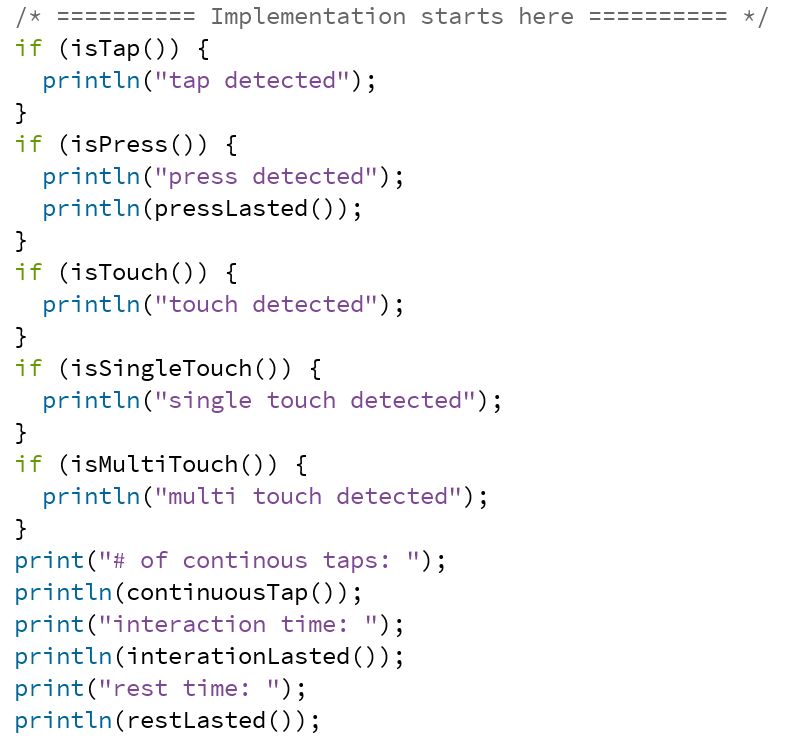

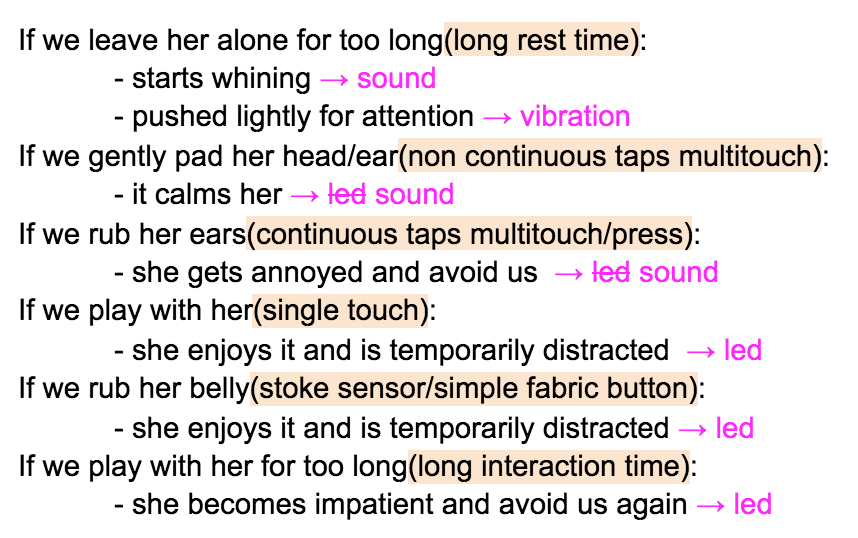

Above is the API we ended up implementing. Note that we detect slides by counting consecutive taps. We mapped the available gestures to the output behaviors shown below.
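Detecting slides by counting consecutive taps could look roughly like the Python sketch below. It assumes each frame reports only whether any blob is present plus a timestamp; the tap window, the number of taps that counts as a slide, and the outputs in `GESTURE_OUTPUTS` are hypothetical values for illustration, not the ones in our sketch.

```python
class TapCounter:
    """Counts consecutive taps; a run is reported as a slide once it is long enough."""

    def __init__(self, window=0.5, slide_taps=3):
        self.window = window          # max seconds between taps in one run (hypothetical)
        self.slide_taps = slide_taps  # taps needed before we call it a slide (hypothetical)
        self.count = 0
        self.last_tap = None
        self.touching = False

    def update(self, touched, now):
        """Feed one frame: whether any blob is present, and a timestamp in seconds.
        Returns "tap", "slide", or None when no new touch started this frame."""
        gesture = None
        if touched and not self.touching:  # a new touch began
            if self.last_tap is not None and now - self.last_tap <= self.window:
                self.count += 1
            else:
                self.count = 1
            self.last_tap = now
            gesture = "slide" if self.count >= self.slide_taps else "tap"
        self.touching = touched
        return gesture

# Hypothetical mapping from gestures to puppy outputs.
GESTURE_OUTPUTS = {"tap": "wag tail", "slide": "bark"}

counter = TapCounter()
frames = [(True, 0.0), (False, 0.1), (True, 0.3), (False, 0.4), (True, 0.6), (False, 0.7)]
for touched, t in frames:
    gesture = counter.update(touched, t)
    if gesture:
        print(gesture, "->", GESTURE_OUTPUTS[gesture])
```

Detecting a touch only on its rising edge keeps a long press from being counted as many taps.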

Full page

Close up of the grid

Movable paws

Outcomes

In this section, we will discuss the successes and failures of our choices, things we’ve learned, and how future work would further improve the results.

Successes

- Successful implementation of the Multi-Touch Kit, with software modifications tailored to our purpose: With the limited resources we had, much of our time was spent figuring out how to fabricate the sensor grid. Although our hand-cutting method is nowhere near scalable, it is sufficient for a small sensing area like ours.

- Exploration of interactive fabric books with more complex interactions: Earlier work on tangible interactive books has focused on simple e-textile inputs (e.g., buttons and sliders) and thermochromic paint. We believe our project is a proof of concept for an interactive fabric book with unconstrained interaction sites; in other words, an entire page, or a detachable piece of a page, could act like a touch screen. Further research would be needed to determine the effects of such interactions.

- Variations of behaviors: To bring the puppy more to life, we implemented a very simple state machine in which the puppy has different states representing different moods. The states are currently very limited, and not all state changes have corresponding outputs; future work could increase the complexity of the state machine.
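A naive mood state machine of the kind described above can be as small as a transition table. The Python sketch below uses hypothetical moods, gestures, and outputs as placeholders. A (state, gesture) pair missing from the table leaves the mood unchanged, and a mood without an entry in `OUTPUTS` produces no output, mirroring how not every state change in our prototype has a corresponding output.

```python
# Hypothetical moods, gestures, and outputs for illustration only.
TRANSITIONS = {
    ("calm", "tap"): "happy",
    ("happy", "tap"): "excited",
    ("happy", "slide"): "calm",
    ("excited", "slide"): "happy",
}
OUTPUTS = {"happy": "wag tail", "excited": "bark"}  # "calm" has no output yet

class Puppy:
    def __init__(self, state="calm"):
        self.state = state

    def on_gesture(self, gesture):
        """Move to the next mood and return its output, or None if it has none."""
        # Pairs missing from TRANSITIONS leave the mood unchanged.
        self.state = TRANSITIONS.get((self.state, gesture), self.state)
        return OUTPUTS.get(self.state)

puppy = Puppy()
for g in ["tap", "tap", "slide", "slide"]:
    output = puppy.on_gesture(g)
    print(g, puppy.state, output)
```

Keeping the transitions in data rather than in nested conditionals makes it easy to give each page of the book its own table.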

Failures

- Sensor grid robustness: The sensor grid has low resolution because it is very small. A 6×6 grid has only 36 ‘pixels’ in total, yet a typical touch can easily cover up to 10 of them. Blob detection also introduces errors; for example, a single touch with too large a contact area can be detected as two separate touches. We suspect that some of these errors could be eliminated with a larger sensor grid, i.e., a larger sensing surface. As for diamond size and spacing, we used the values recommended by the original research project. Future work could look into replacing blob detection with other touch-detection algorithms.
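One simple post-processing step that might address a single touch being split into two blobs is to merge blob centroids that lie closer together than some minimum separation. The Python sketch below illustrates one possible alternative, not something we implemented; `min_sep` is a hypothetical parameter measured in grid cells.

```python
from math import dist

def merge_close_blobs(centroids, min_sep=2.0):
    """Greedily merge blob centroids (row, col) that lie closer than min_sep cells."""
    groups = []  # each group is [sum_row, sum_col, count]
    for y, x in centroids:
        for g in groups:
            # Compare against the running mean of the group.
            if dist((g[0] / g[2], g[1] / g[2]), (y, x)) < min_sep:
                g[0] += y
                g[1] += x
                g[2] += 1
                break
        else:
            groups.append([y, x, 1])
    return [(sy / n, sx / n) for sy, sx, n in groups]

# One large touch split into two blobs, plus a separate touch.
print(merge_close_blobs([(1.0, 1.0), (1.0, 2.0), (4.0, 4.0)]))  # -> [(1.0, 1.5), (4.0, 4.0)]
```

The trade-off is that two real fingers placed closer than `min_sep` would also be merged into one, which on a 6×6 grid is a real cost.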

- Missing opportunities provided by the multi-touch kit: Many current interactions we hae can be done with simpler inputs. For example, taps/presses can be detected using a fabric button or capacitive touch sensing. Though we do use touch coordinates to decide whether the touches are the same as before for each time

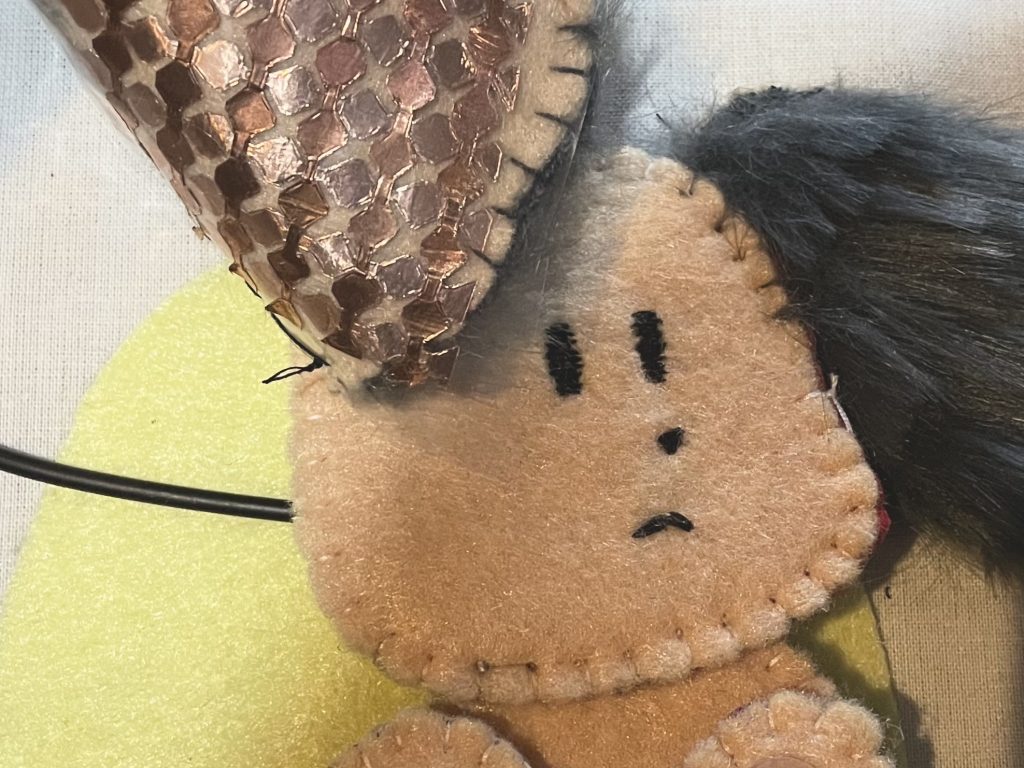

loop()is called, we are not taking advantages of the rich possibilities offered by location information. Further works could explore more gestures such as direction slides, pinches, and other gestures that a 2D touch screen can recognize. One thing to keep in mind here is that although we are mimicking a touch screen using fabric, we should still remember the unique tangible interaction opportunities that soft fabric brings. For example, pinching fabric is drastically different from a hard touch screen as the fabric will be pinched/folded as well. - Unnatural interactions compared with interacting with a real puppy: Right now the sensor grid is placed behind the ear of the puppy and thus makes the interaction of reaching to the back very natural. It was a decision between the aesthetics and the normality of interactions, and we chose to hide the sensor grid. A limitation of the multi-touch kit is that fingers must directly contact with the top conductive layer. The question about using resistive sensing technologies instead of the capacitive one that we chose was raised, and we believe that resistive sensing complicates the fabrication process and causes a more unnatural interaction as it requires firmly pressings on the sensor. For future work, one could use conductive thread to directly sew the sensors onto the fabric and to have the sensors at more natural locations.
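Matching touches between successive `loop()` calls by their coordinates, and keeping the displacement of each matched touch, is also a starting point for directional slides. The Python sketch below assumes blob centroids in grid units and uses a greedy nearest-neighbor match with a hypothetical `max_dist` threshold; it is an illustration rather than our Processing code.

```python
from math import dist

class TouchTracker:
    """Keeps touch IDs stable across loop() calls by nearest-neighbor matching."""

    def __init__(self, max_dist=1.5):
        self.max_dist = max_dist  # hypothetical, in grid cells
        self.touches = {}         # id -> (row, col) from the previous call
        self.moves = {}           # id -> (d_row, d_col) for touches seen in both calls
        self._next_id = 0

    def update(self, centroids):
        previous = self.touches
        unmatched = dict(previous)
        current = {}
        for point in centroids:
            # Greedily take the closest touch from the previous call, if close enough.
            best = min(unmatched, key=lambda i: dist(unmatched[i], point), default=None)
            if best is not None and dist(unmatched[best], point) <= self.max_dist:
                current[best] = point
                del unmatched[best]
            else:
                current[self._next_id] = point  # a new touch
                self._next_id += 1
        # Displacement of persisting touches; its sign gives a slide direction.
        self.moves = {i: (p[0] - previous[i][0], p[1] - previous[i][1])
                      for i, p in current.items() if i in previous}
        self.touches = current
        return current

tracker = TouchTracker()
tracker.update([(1.0, 1.0)])
tracker.update([(1.0, 2.0)])
print(tracker.moves)  # -> {0: (0.0, 1.0)}
```

Greedy matching can mis-assign IDs when two touches cross; an optimal assignment would be more robust at the cost of extra complexity.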

Due to limited resources, we weren’t able to integrate as many different textures as we had hoped. Moving forward, we believe that adding more textures to the body of the puppy would allow a more diverse tactile experience.

Sources

- Hardware requirements and schematics can be found in the tutorial from the original research project.

- Software source code (the README has instructions for using the Arduino and Processing sketches):

Group member contributions

Equal contributions:

- Preliminary research

- Project scope definition

- Storybook storyline

- Interaction design

- Testing/prototyping the sensor grid

- Weekly reports

- Final system troubleshooting

Catherine’s additional contribution:

- Software implementation

- Troubleshooting on a breadboard

Yanwen’s additional contribution:

- Fabrication of the fabric page

- Integration of soft technology components with fabric materials

References

Narjes Pourjafarian, Anusha Withana, Joseph A. Paradiso, and Jürgen Steimle. 2019. Multi-Touch Kit: A Do-It-Yourself Technique for Capacitive Multi-Touch Sensing Using a Commodity Microcontroller. In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology (UIST ’19). Association for Computing Machinery, New York, NY, USA, 1071–1083. DOI: https://doi.org/10.1145/3332165.3347895

Jie Qi and Leah Buechley. 2010. Electronic popables: exploring paper-based computing through an interactive pop-up book. In Proceedings of the fourth international conference on Tangible, embedded, and embodied interaction (TEI ’10). Association for Computing Machinery, New York, NY, USA, 121–128. DOI: https://doi.org/10.1145/1709886.1709909

Irene Posch. 2021. Crafting Stories: Smart and Electronic Textile Craftsmanship for Interactive Books. In Proceedings of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction (TEI ’21). Association for Computing Machinery, New York, NY, USA, Article 100, 1–12. DOI: https://doi.org/10.1145/3430524.3446076