At Christie’s autumn 2018 auction, the famous and controversial AI artwork “Portrait of Edmond Belamy” sold for $432,500. Its creator, the French art collective Obvious, had no prior history or reputation as an artist. And although it was created with an algorithm, the portrait looks like a less refined version of the portraiture you would expect to see in a museum.

Since this auction, a growing number of artists from around the world have taken up AI Art. In the past two years, the field has also seen a surge in online platforms such as AIArtists.org, as well as in exhibitions, conferences, contests, and discussion panels dedicated to AI Art.

In the extensive discussion about creating AI Art, one issue remains in dispute: what level of computer autonomy in making art-generating decisions should be regarded as essential to the creative process? Are computational technologies still mere tools, or do they exhibit independent “behavioral” properties?

Defining AI

The concept of Artificial Intelligence adopted here is based on Marvin Minsky’s widely recognized definition:

The performance of tasks, which, if performed by a human, would be deemed to require intelligence.

Geraint A. Wiggins. 2006. A preliminary framework for description, analysis and comparison of creative systems. Knowledge-Based Systems 19, 7 (2006), 449–458.

Under this definition, a simple cellular automaton, an evolutionary algorithm, or any other computing system that meets the criterion can count as artificial intelligence. Through the way they interact with their physical or virtual surroundings, as well as with themselves, these computational systems display a certain level of intelligence. Learning is a major leap in intelligence, and model-based reasoning is essential for an AI to achieve human-like learning, decision making, and, most critically, autonomy.
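To make “simple cellular automata” concrete, here is a minimal sketch of a one-dimensional (elementary) cellular automaton. Each cell updates by looking only at itself and its two neighbours, yet a rule number such as Rule 30 produces intricate patterns. The choice of Rule 30, the grid width, and the wrap-around edges are illustrative assumptions; the source does not name a specific automaton, and whether such a system deserves to be called “intelligent” is exactly the definitional question above.

```python
def step(cells, rule=30):
    """Apply one update of an elementary cellular automaton with wrap-around edges."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        # cells[i - 1] wraps to the last cell when i == 0.
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        # Encode the 3-cell neighbourhood as a number 0..7 and read that bit of the rule.
        index = (left << 2) | (center << 1) | right
        new_cells.append((rule >> index) & 1)
    return new_cells


def run(width=31, steps=15, rule=30):
    """Start from a single 'on' cell and return every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Changing only the `rule` integer (0 to 255) yields very different behaviours, from blank rows to chaotic triangles, which is part of why such systems attracted early generative artists.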

Art Practice

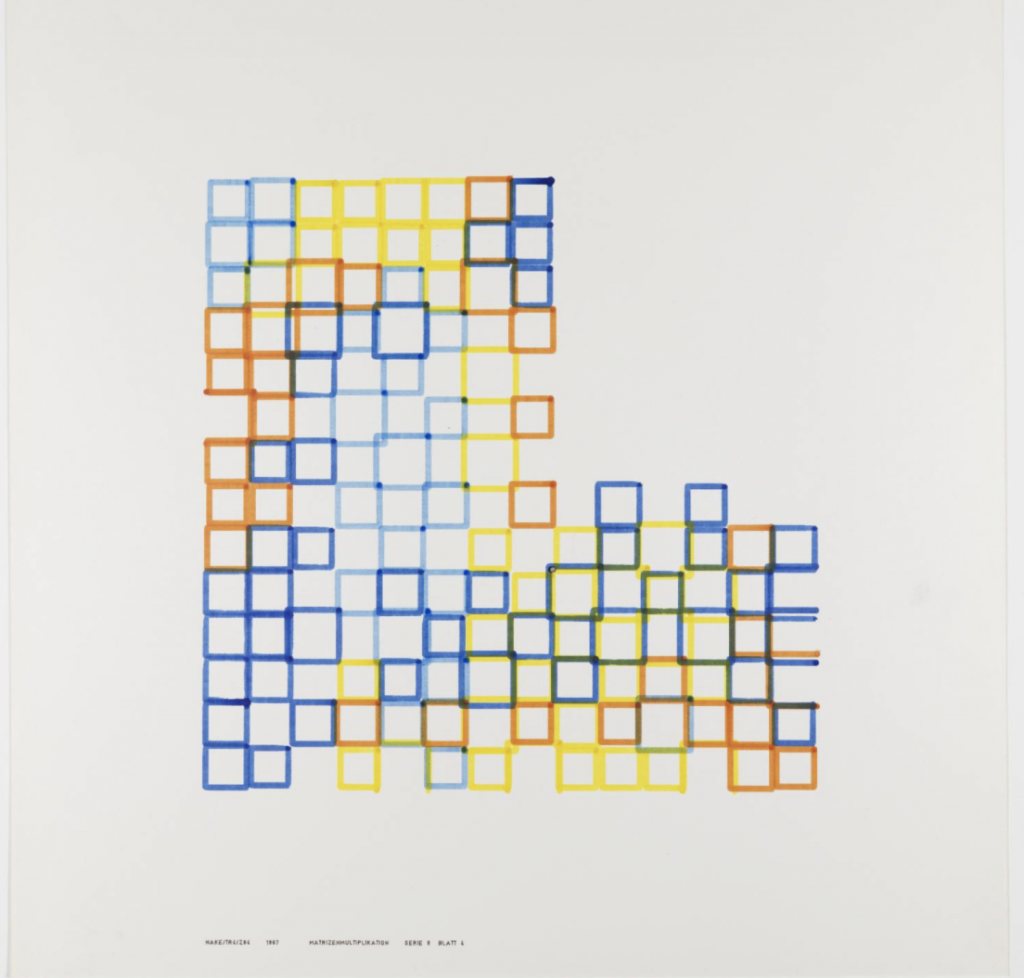

In the 1960s, a group of engineers and artists around Max Bense’s laboratory at the University of Stuttgart began experimenting with computer graphics. Pioneers such as Frieder Nake, Georg Nees, Manfred Mohr, and Vera Molnár investigated the use of mainframe computers, plotters, and algorithms to produce aesthetically appealing objects. A simple experiment to test some of the novel equipment in Max Bense’s laboratory rapidly evolved into an art movement.

This is only one illustration of how computer art evolved in the 1960s. Today, the terms “procedural,” “generative,” and “parametric” design are commonly used in the design and architecture sectors to describe the use of algorithms.

Harold Cohen, a professor at the University of California, San Diego, worked from 1973 onward with AARON, a program he designed himself that could autonomously create original images. Cohen began his research by exploring AI applications in the visual arts and kept developing AARON for decades after its inception. Over time, the program became able to make its own choices, such as selecting colors or composition. Initially, AARON could only create black-and-white line drawings; it later progressed to colored images of shapes such as human figures and plants, among the earliest examples of artificial intelligence art. Cohen once joked that he could become the only artist to have a posthumous show of new works, created entirely after his death, because AARON could generate pictures on its own.

The drawing robot Paul, created by artist Patrick Tresset, is another example of an intelligent tool. Both its behavior and its sketching style are governed by the algorithms that produce Paul’s drawings, which limits the robot’s creative freedom. These systems are informed by studies of human behavior, specifically how artists depict subjects and how people interact with machines. Tresset builds robots and autonomous computational systems that produce a variety of works.

Sougwen Chung performs with her robot Drawing Operations Unit: Generation Four, operated by a custom-written AI system, alongside other robots in her performance-based artworks. She and the robots paint enormous canvases together in a cooperative, improvised performance that resembles a dance.

Technological Landmarks

Several significant technological advances in recent years have fueled the growing interest in AI Art. Over the past decades, computer graphics and computer vision research has produced many rendering and texture synthesis methods intended to apply an “artistic style” to an input image. Academic work in this direction dates back at least to 2001, when the paper “Image Analogies” was published; 20 years later, such synthesis methods are commonly used in image-processing apps and have become part of everyday smartphone life. Deep neural networks, however, have only recently been used to stylize photos and create new images. The accompanying image illustrates key technological milestones influencing AI Art production.

Generative Adversarial Nets (GANs) are arguably the most significant technological innovation behind the present growth of AI Art. Introduced by Goodfellow and his colleagues in 2014, GANs mark a turning point in the use of machines to create visual content. A GAN works by training two “competing” models: a generator and a discriminator. The generator learns the distribution of the real examples in the training sample and uses it to produce realistic images. The discriminator, in turn, is trained to tell fake images made by the generator apart from real images in the original sample. This framework can produce convincing fake variations of real images across many kinds of image content.
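The “competition” between the two models can be written as the minimax value function from the original GAN paper, V(D, G) = E_x[log D(x)] + E_z[log(1 − D(G(z)))], which the discriminator D maximizes and the generator G minimizes. The sketch below only estimates that value from a batch of discriminator outputs; the probabilities are made-up numbers, and a real GAN would compute them with neural networks and update both models by gradient descent.

```python
import math


def gan_value(d_real, d_fake):
    """Sample estimate of V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))].

    d_real: discriminator outputs (probability of "real") on real images.
    d_fake: discriminator outputs on images produced by the generator.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real, d_fake):
    # The discriminator maximizes V, i.e. minimizes -V (binary cross-entropy).
    return -gan_value(d_real, d_fake)


def generator_loss(d_fake):
    # "Non-saturating" generator objective: maximize log D(G(z)) instead of
    # minimizing log(1 - D(G(z))), as suggested in the original GAN paper.
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs for illustration only.
print(gan_value([0.5, 0.5], [0.5, 0.5]))  # equals -log(4)
print(gan_value([0.9, 0.8], [0.1, 0.2]))  # a confident discriminator scores higher
```

When the generator’s images are indistinguishable from real ones, the best the discriminator can do is output 0.5 everywhere, and the value settles at −log 4, the theoretical equilibrium of the game.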

Google engineer Alexander Mordvintsev introduced DeepDream in 2015. The technique was designed to make deep convolutional neural networks (DCNNs) more interpretable by visualizing the patterns that increase a neuron’s activation. Because of its psychedelic, hallucinatory style, the method soon became a popular new form of digital art production.
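The core mechanism behind DeepDream is gradient ascent on the input image rather than on the network weights: the weights stay fixed, and the image is nudged in whatever direction makes a chosen layer respond more strongly. The toy sketch below assumes a hypothetical two-neuron ReLU “layer” over a two-pixel “image” and uses half the squared norm of the activations as the quantity to amplify; real DeepDream applies the same idea to a pretrained convolutional network and full-size images.

```python
import math


def layer(W, x):
    """Toy 'layer': ReLU(W x). Returns pre-activations z and activations a."""
    z = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    a = [max(0.0, zj) for zj in z]
    return z, a


def objective(W, x):
    """What we want to amplify: 0.5 * squared norm of the layer's activations."""
    _, a = layer(W, x)
    return 0.5 * sum(aj * aj for aj in a)


def dream_step(W, x, lr=0.1):
    """One gradient-ASCENT step on the input x (the weights W stay fixed)."""
    z, a = layer(W, x)
    # dObjective/dz_j = a_j where the ReLU is active, 0 elsewhere.
    g_z = [aj if zj > 0 else 0.0 for aj, zj in zip(a, z)]
    # Chain rule back to the input: dObjective/dx = W^T g_z.
    grad = [sum(W[j][i] * g_z[j] for j in range(len(W))) for i in range(len(x))]
    # Normalize the gradient so the step size stays stable (one common choice).
    norm = math.sqrt(sum(g * g for g in grad)) or 1.0
    return [xi + lr * gi / norm for xi, gi in zip(x, grad)]


W = [[1.0, -0.5], [0.2, 0.8]]  # hypothetical fixed weights
x = [0.1, 0.1]                 # hypothetical two-pixel "image"
before = objective(W, x)
for _ in range(10):
    x = dream_step(W, x)
print(before, objective(W, x))  # the activation grows step by step
```

Repeating this on a real image amplifies whatever features the network already “sees” in it, which is where the characteristic hallucinatory imagery comes from.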

Neural Style Transfer (NST) is one of the best-known AI inventions in this area, and it accelerated the use and development of AI technologies for art. The method was first described in an influential paper by Gatys et al., which showed how CNNs can create stylized images by separating and recombining the “content” and “style” of images. This breakthrough was followed by many new research contributions and applications; the survey by Jing et al. gives a comprehensive overview of existing NST techniques and their uses. “Content” refers to the recognizable objects and people in an image, while “style” refers to an aesthetically pleasing or interesting deviation from a photorealistic depiction of that content. In art history, however, style is usually seen as more than the look of lines and brushstrokes; it is a subtler, context-dependent idea. Moreover, stylized images made with NST methods are usually a visible combination of existing images rather than an original and unique piece of art. Even though NST can be used creatively to make digital art, it is important to remain skeptical of the trend of calling anything with a painterly look “art.”
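In the Gatys et al. formulation, “content” is compared through the feature maps of a CNN layer directly, while “style” is compared through Gram matrices, which record how strongly pairs of feature channels co-occur but discard where they occur. The sketch below implements both losses for a single layer on tiny hand-written feature maps (an assumption for illustration; real NST takes them from a pretrained CNN and optimizes the output image against a weighted sum of the two losses).

```python
def gram(features):
    """Gram matrix G_ij = sum_k F_ik * F_jk for an N x M feature map
    (N channels/filters, M spatial positions flattened)."""
    n = len(features)
    return [[sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
            for i in range(n)]


def content_loss(f_gen, f_content):
    """0.5 * sum of squared differences between feature maps (position-sensitive)."""
    return 0.5 * sum((g - c) ** 2
                     for row_g, row_c in zip(f_gen, f_content)
                     for g, c in zip(row_g, row_c))


def style_loss(f_gen, f_style):
    """Squared Gram-matrix difference, scaled by 1 / (4 N^2 M^2) for one layer."""
    n, m = len(f_gen), len(f_gen[0])
    g_gen, g_sty = gram(f_gen), gram(f_style)
    sq = sum((a - b) ** 2 for row_a, row_b in zip(g_gen, g_sty) for a, b in zip(row_a, row_b))
    return sq / (4 * n ** 2 * m ** 2)


# Hypothetical 2-channel feature map over 3 positions, and the same values
# with the positions shuffled: same "style", different "content".
f = [[1, 0, 2], [0, 1, 1]]
f_shuffled = [[2, 1, 0], [1, 0, 1]]
print(style_loss(f, f_shuffled))    # 0.0: Gram matrices are identical
print(content_loss(f, f_shuffled))  # 4.0: spatial arrangement differs
```

Because the Gram matrix ignores spatial arrangement, NST’s notion of “style” amounts to texture statistics, which is one concrete reason it captures the look of brushstrokes but not the broader, context-dependent sense of style discussed above.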

Elgammal and his colleagues built AICAN, which takes GANs one step further in their ability to generate content creatively. In their work, they argue that a GAN trained on images of paintings will only learn to produce images that resemble existing art; as with NST, it will not make anything truly artistic or new. They propose changes to the optimization criterion that push the network to create art by deviating as far as possible from established styles while still staying within the distribution of art. Through a series of exhibitions and experiments, the creators of AICAN reported that people often could not tell images made by AICAN from artworks made by humans. Beyond the AICAN project, many other developers and artists have used GANs with different modifications and training settings to make digital art, and GANs have become the most popular technology in today’s AI Art scene.
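One way to express “deviate from known styles while staying within art” is to give the generator two goals: fool an art/not-art discriminator, and leave a style classifier unsure which established style an image belongs to. The sketch below is a simplified rendering of that idea, loosely following the style-ambiguity term described in the CAN (Creative Adversarial Networks) paper by Elgammal et al.; the uniform-target cross-entropy, the `weight` parameter, and the example probabilities are illustrative assumptions, not AICAN’s actual training code.

```python
import math


def style_ambiguity_loss(style_probs):
    """Cross-entropy between a uniform target over K known styles and the
    style classifier's predicted probabilities for a generated image.
    It is smallest (log K) when the image cannot be attributed to any single style."""
    k = len(style_probs)
    return -sum(math.log(p) for p in style_probs) / k


def creative_generator_loss(p_art, style_probs, weight=1.0):
    """Hypothetical combined objective for the generator:
    - the first term rewards images the discriminator accepts as art
      (stay inside the art distribution);
    - the second rewards ambiguity across established styles (deviate from style norms).
    `weight` is an illustrative knob, not a value from the paper."""
    return -math.log(p_art) + weight * style_ambiguity_loss(style_probs)


# Made-up classifier outputs over four styles.
clearly_one_style = [0.97, 0.01, 0.01, 0.01]
ambiguous = [0.25, 0.25, 0.25, 0.25]
print(style_ambiguity_loss(clearly_one_style))  # larger: looks like a known style
print(style_ambiguity_loss(ambiguous))          # log(4): maximally ambiguous
```

A plain GAN would keep only the first term, which is why, as the authors argue, it tends to reproduce existing art rather than depart from it.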

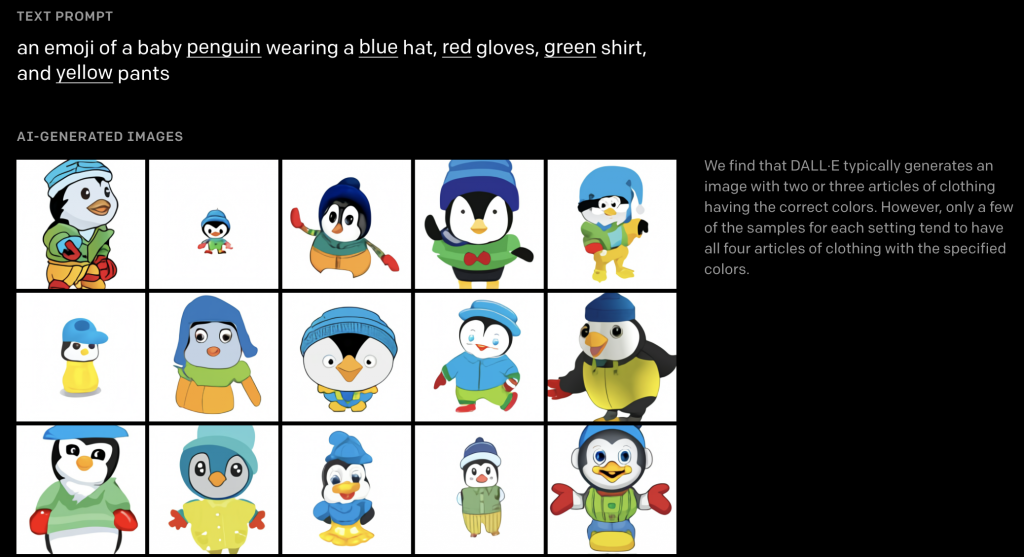

In January 2021, OpenAI introduced two new “multimodal” neural networks, CLIP and DALL-E. The developers describe them as “steps towards systems with better comprehension of the world”.

Named after Salvador Dalí and Pixar’s WALL·E, DALL-E is a 12-billion-parameter version of GPT-3 trained to generate images from text descriptions. It uses the same transformer architecture as its predecessors. In OpenAI’s words, “it receives both the text and the image as a single stream of data containing up to 1280 tokens and is trained using maximum likelihood to generate all of the tokens, one after another”.
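The “single stream of up to 1280 tokens” is, per the DALL-E paper, up to 256 text tokens followed by 1024 image tokens (a 32×32 grid of discrete codes). The sketch below only shows how such a stream and its next-token training targets could be assembled; the padding id and the choice to offset image ids past the text vocabulary are hypothetical simplifications, and the token ids themselves are made up.

```python
TEXT_LEN = 256        # maximum number of BPE text tokens
IMAGE_LEN = 32 * 32   # 1024 image tokens from a 32x32 grid
TEXT_VOCAB = 16384    # text vocabulary size
PAD_ID = 0            # hypothetical padding id for short captions


def build_stream(text_tokens, image_tokens):
    """Concatenate text and image tokens into one sequence of TEXT_LEN + IMAGE_LEN ids."""
    if len(text_tokens) > TEXT_LEN:
        raise ValueError("caption too long")
    if len(image_tokens) != IMAGE_LEN:
        raise ValueError("expected exactly %d image tokens" % IMAGE_LEN)
    text = list(text_tokens) + [PAD_ID] * (TEXT_LEN - len(text_tokens))
    # Assumption: offset image ids past the text vocabulary so the two
    # token types share a single id space without colliding.
    image = [TEXT_VOCAB + t for t in image_tokens]
    return text + image


def next_token_pairs(stream):
    """Maximum-likelihood (teacher-forcing) setup: at each position the model
    sees the tokens so far and is trained to predict the next one."""
    return stream[:-1], stream[1:]


stream = build_stream([17, 42, 99], [7] * IMAGE_LEN)  # made-up token ids
inputs, targets = next_token_pairs(stream)
print(len(stream), len(inputs), len(targets))  # 1280 1279 1279
```

At generation time the model is given only the text part and produces the 1024 image tokens one after another, which a separate decoder then turns back into pixels.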

OpenAI’s DALL-E website showcases a number of impressive demo examples.

With CLIP, OpenAI showed that a simple pre-training task is enough for a model to perform well on a wide range of datasets: given an image, predict which of 32,768 randomly sampled text snippets was actually paired with it. Because CLIP does not need task-specific training data, anyone can build their own classifiers, and some niche tasks become much easier to tackle.
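“Building your own classifier” with CLIP amounts to writing the class names as text prompts, embedding them, and picking the prompt whose embedding is closest to the image embedding, the same image-caption matching the model was pre-trained on. In the sketch below the 3-dimensional embeddings are made up to stand in for the outputs of CLIP’s image and text encoders, and the similarity scale of 100 is an assumed value.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def zero_shot_classify(image_emb, prompt_embs, scale=100.0):
    """Pick the text prompt whose embedding is most similar to the image embedding.

    Returns (best_prompt, probabilities) using a softmax over scaled cosine
    similarities, similar to how CLIP scores image-caption pairs."""
    prompts = list(prompt_embs)
    logits = [scale * cosine(image_emb, prompt_embs[p]) for p in prompts]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    probs = {p: e / total for p, e in zip(prompts, exps)}
    return max(probs, key=probs.get), probs


# Made-up 3-dimensional embeddings standing in for CLIP's encoder outputs.
prompt_embs = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.1],
    "a painting in the style of Van Gogh": [0.0, 0.2, 0.95],
}
best, probs = zero_shot_classify([0.8, 0.2, 0.05], prompt_embs)
print(best)  # a photo of a dog
```

Adding a new category only requires adding a new prompt, with no retraining, which is what makes this approach attractive for niche tasks.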

Conclusion

In an age of too much information, and too much art, gaining attention is one of the most fundamental ingredients of success. Given the current excitement about AI, it is understandable that presenting an artwork as created autonomously by an AI system generates more curiosity than yet another human-made work. Narratives of the “autonomous AI artist” are largely driven by marketing: exhibitions of AICAN-created art and the Christie’s Belamy sale alike used the concept of autonomy for publicity purposes. In the case of the Belamy auction, anthropomorphic language considerably raised public interest in the work.

Nevertheless, as AI technologies become more advanced, the line between using an AI system as a tool and treating it as a creator of content is becoming blurrier. The general public can hardly understand and interpret even a small subset of AI systems, and because the topic’s complexities go beyond the scope of any single research field, scientists from a variety of disciplines are beginning to pay attention to it. Mark Coeckelbergh, for example, offers a conceptual framework for philosophical thinking about machine art, examining from several angles the question of whether machines can create art. His paper discusses aesthetic theory, computational creativity, and the role of technology in the creation of art, and it also contributes to the philosophy of technology and to philosophical anthropology as a whole. Perhaps we should adopt a more comprehensive understanding of creative perception and engagement as a hybrid human-technological, emergent, or even poetic process: a model that leaves more room for us to be surprised by creativity, human and possibly non-human.