Narrative Description

During the pandemic, it has become increasingly difficult to stay connected with peers. This affects how people communicate and highlights the need for telepresence. For our project, we will use computer vision and face and eye detection to locate a person's eyes and send that information to an Arduino that controls the movement of a device inspired by the Mona Lisa's eyes. However, instead of having the Mona Lisa's eyes follow the person looking at the painting, our device will replicate the eye movements of a remote partner, since we are trying to establish a connection among a diverse set of people. The goal of this project is to strengthen the connection between people who cannot be in the same place.

The ideal installation site would be two different museums. However, for a minimum viable product (MVP), our goal is first to keep the device on a person's desk. This lets us implement the core of the project first; if the device is a success, it could later be installed in museums. A typical experience would be as follows. Imagine the device is set up in two different museums: person 1 is in museum A and person 2 is in museum B. Each museum has two components: a computer and the Mona Lisa device. When person 1 uses the computer in museum A, their eye movements control the device in museum B, and vice versa.
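The crossed control in this scenario can be expressed as an MQTT topic layout, since the communication channel is planned to use MQTT. The sketch below is a minimal illustration assuming one topic per site; the site labels and topic names (`museum_a`, `museum_b`, `monalisa/<site>/eyes`) are hypothetical placeholders, not settled parts of the design.

```python
# Hypothetical topic layout: each site listens on its own topic and
# publishes its visitor's eye data to the partner site's topic.
SITES = ("museum_a", "museum_b")


def partner_of(site: str) -> str:
    """Return the other site in the pair."""
    if site not in SITES:
        raise ValueError(f"unknown site: {site}")
    return SITES[1] if site == SITES[0] else SITES[0]


def publish_topic(site: str) -> str:
    """Topic the computer at `site` publishes its visitor's eye data to."""
    return f"monalisa/{partner_of(site)}/eyes"


def subscribe_topic(site: str) -> str:
    """Topic the bridge at `site` listens on to drive the local device."""
    return f"monalisa/{site}/eyes"


for site in SITES:
    print(site, "publishes to", publish_topic(site), "| listens on", subscribe_topic(site))
```

Because each computer publishes only to its partner's topic, the same program could run at both sites with only the site label changed.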

As with the Mona Lisa, people might be perplexed at first glance as they try to figure out whether the eyes of the artwork are following them. They might then become amused once they realize that the eyes really are moving.

Success means building a working prototype that meets our goal of enhancing telepresence. This requires three working parts: computer vision software that detects eye movements, a communication channel that sends the output of the eye detection software to the other person's Arduino, and a device that responds to the received data with physical motion corresponding to the eye movements.

Technical Outline

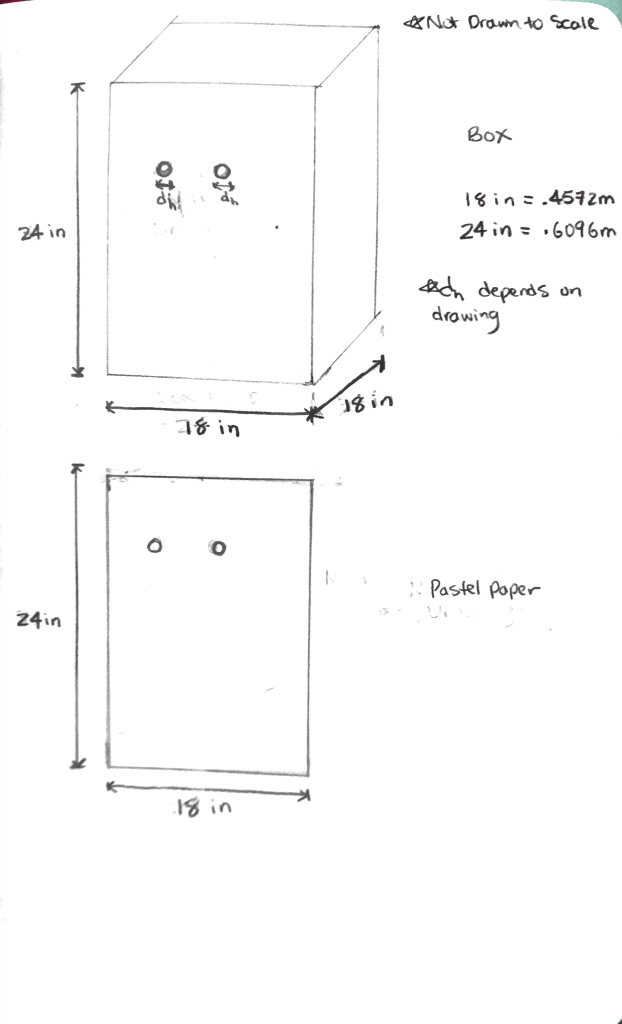

Our project will be split into three technical components: hardware, software, and a communication channel between them. For the hardware, the drawing will be placed on the front of a wooden box that can sit on any table, and the eyes will be moved by servos. For the software, we will use OpenCV to write an eye detection program. The communication channel will use MQTT to send the eye detection data through a bridge program to the Arduino that controls the servo motors.
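As a sketch of the data path, the bridge could receive a small JSON payload over MQTT and forward a compact line of text to the Arduino over serial. The payload fields (`dx`, `dy`), the serial format, and the function names below are assumptions for illustration only.

```python
import json


def encode_eye_message(dx: float, dy: float) -> str:
    """Pack a normalized pupil offset (each value in [-1, 1]) as a JSON MQTT payload."""
    return json.dumps({"dx": round(dx, 3), "dy": round(dy, 3)})


def bridge_to_serial(payload: str) -> bytes:
    """Convert an MQTT payload into one newline-terminated line for the Arduino.

    Hypothetical serial format: "dx,dy\n" with each value scaled to an integer
    in [-100, 100], which the Arduino can read with Serial.parseInt().
    """
    msg = json.loads(payload)
    # Clamp to [-1, 1] so a malformed message cannot push the servos past range.
    dx = max(-1.0, min(1.0, float(msg["dx"])))
    dy = max(-1.0, min(1.0, float(msg["dy"])))
    return f"{round(dx * 100)},{round(dy * 100)}\n".encode("ascii")


payload = encode_eye_message(0.25, -0.5)
print(payload)                    # {"dx": 0.25, "dy": -0.5}
print(bridge_to_serial(payload))  # b'25,-50\n'
```

In a real bridge, `bridge_to_serial` would be called from the MQTT client's message callback (for example with the paho-mqtt library), and the returned bytes would be written to the Arduino's serial port (for example with pyserial). A newline-terminated text format also has the advantage of being easy to inspect in the Arduino Serial Monitor.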

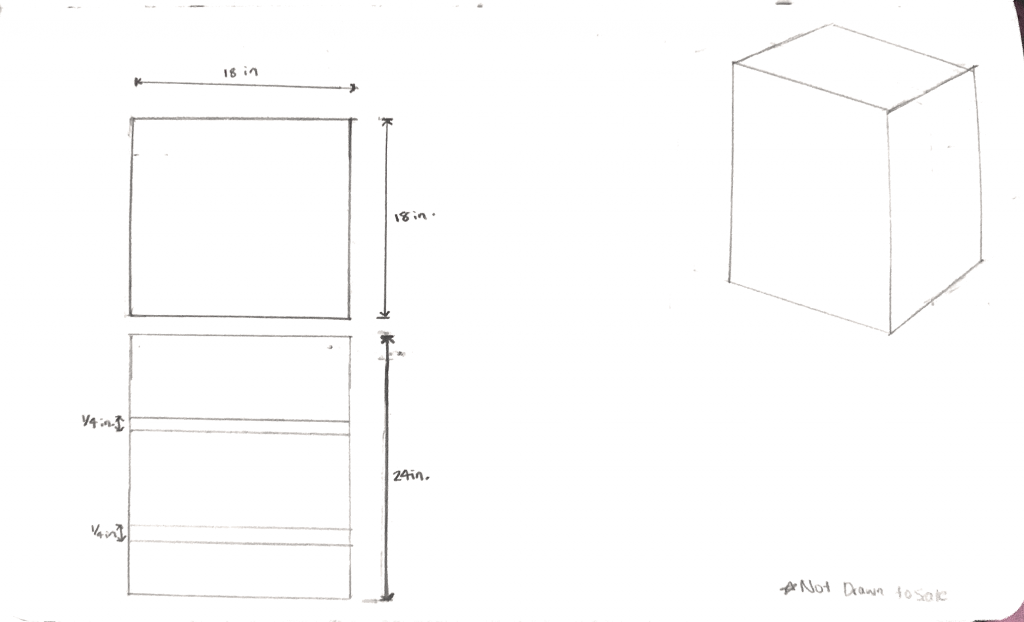

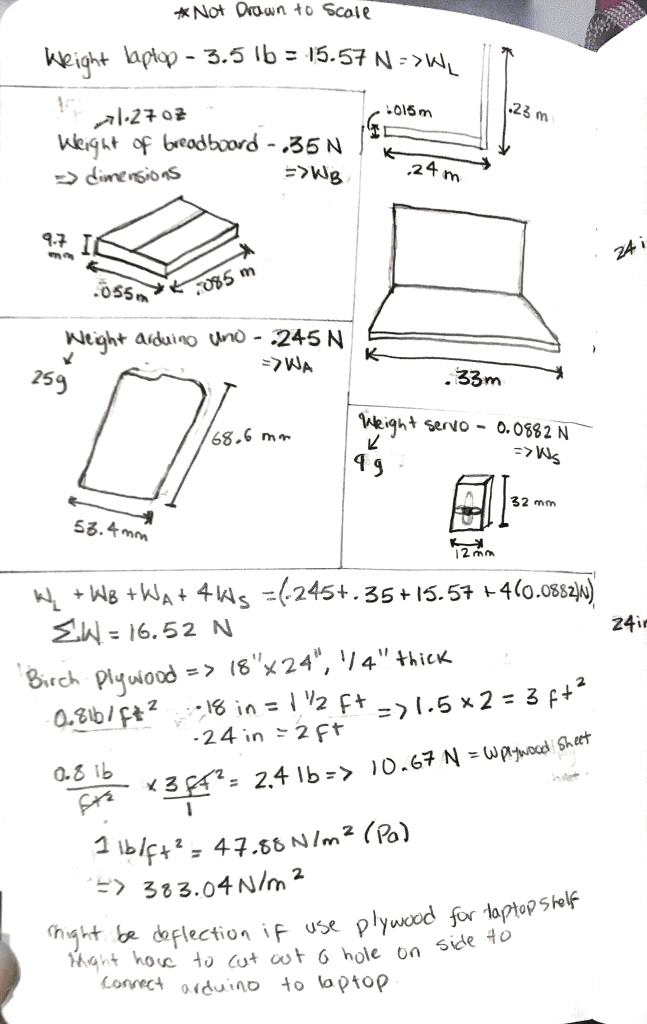

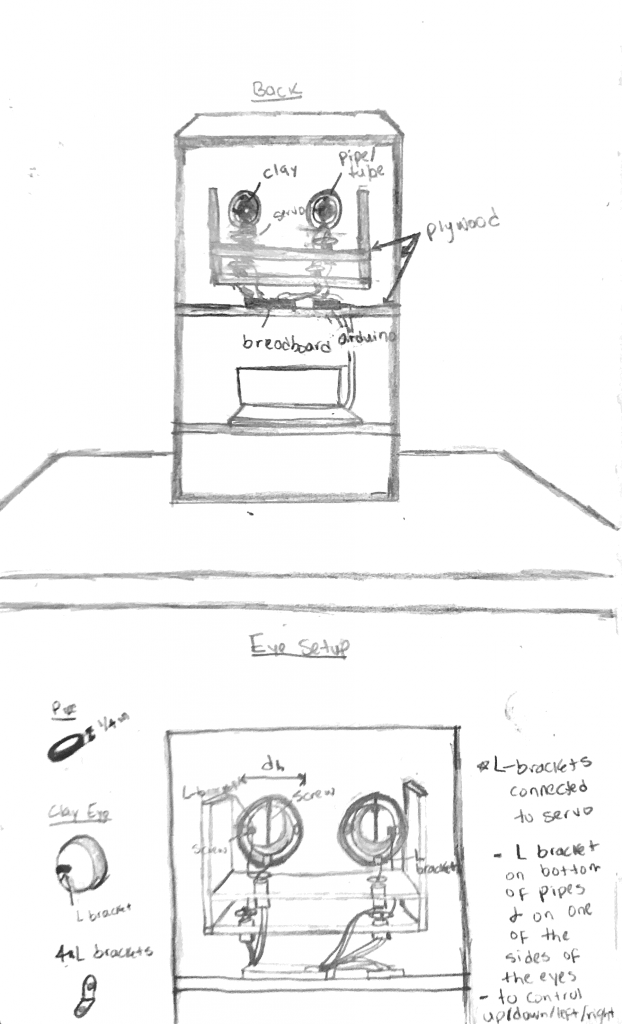

The Mona Lisa drawing will be made with oil pastels on pastel paper. It will most likely be attached to the front of the box with an adhesive that will not bleed through the paper, such as double-sided tape. The box will probably be made of plywood because it is sturdy but light. The eyes will be moved by four servos, and a paperclip will connect the servos to the eyes via L-brackets. We have decided to use modeling clay for the eyes since it is flexible at first but hardens later. The box will be hollow so that the mechanical components can be hidden inside it. We will try to add shelves inside the box so that everything we need fits. The items we currently plan to fit inside include the four servos, the laptop, the wires, the 830-tie-point breadboard, and the Arduino.

The software component will use OpenCV in Python for face and eye detection. The first step is to locate the person's eyes. OpenCV supports facial feature detectors that can be used to draw bounding boxes around facial features. The live video feed can be split into individual frames, and on each frame we can use these bounding boxes to isolate the person's eyes. Next, we need to find the center of the eyeball. We start by defining coordinate points for the user's left and right eyes. Using a mask, we can convert the image to black and white so that the eyes are white and everything else is removed (made black). We can then apply a second mask to segment the eye into the eyeball and the rest of the eye. Once we have located the pupil, we can define a central axis across the middle of the eye and compute the distance between the current pupil position and that axis. The bridge program will send this information over MQTT to the Arduino, which will normalize it for servo movement. Once the Arduino has converted the data appropriately, it will drive the servos, which will move the eyes.
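The last steps above (isolating the pupil and measuring its distance from the center of the eye) can be sketched as a centroid computation on a binary mask. This is a minimal, dependency-free illustration assuming the mask has already been produced, with pupil pixels set to 1; it is a simple stand-in for the segmentation step, not the final method. The function name and the [-1, 1] normalization are our own assumptions. In OpenCV, the same area and coordinate sums are available from `cv2.moments` as `m00`, `m10`, and `m01`.

```python
def pupil_offset(mask):
    """Return the pupil centroid's offset from the eye-crop center as (dx, dy) in [-1, 1].

    `mask` is a list of rows of 0/1 values for a cropped eye region, where 1
    marks pupil pixels (for example, after inverse-thresholding a grayscale
    eye crop so the dark pupil becomes white).
    Returns None when no pupil pixels are found, e.g. during a blink.
    """
    height, width = len(mask), len(mask[0])
    m00 = m10 = m01 = 0  # image moments: pixel count, sum of x, sum of y
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1
                m10 += x
                m01 += y
    if m00 == 0:
        return None
    cx, cy = m10 / m00, m01 / m00  # pupil centroid in pixel coordinates
    center_x, center_y = (width - 1) / 2, (height - 1) / 2  # central axes of the crop
    # Normalize so the crop's edges map to -1 and +1 (assumed convention).
    dx = (cx - center_x) / center_x if center_x else 0.0
    dy = (cy - center_y) / center_y if center_y else 0.0
    return dx, dy


# A 9x5 eye crop with the pupil shifted to the right of center.
eye = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
]
print(pupil_offset(eye))  # (0.625, 0.0)
```

A positive `dx` means the pupil sits to the right of the eye's central vertical axis in image coordinates; whether that maps to left or right on the painting depends on how the camera image is mirrored.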

The key technical challenges are the connection between the hardware and software (the Arduino and the CV software), the choice of box material, and the conversion of data from the eye detection software into values the Arduino can use to drive the servos.

The biggest challenge is merging the parts we are each working on. Marione has a strong mechanical background, so she will work on the physical device, whereas Max has a strong programming background, so he will work on the software. Once we finish our individual pieces, they need to be joined through the bridge program so that data can be transmitted from the software to the Arduino. This is difficult because in previous assignments we were given the bridge program and simply had to use it; now we will need to modify it and integrate it into our own project.

For the box, we need a material that is sturdy enough to support the weight of the computer connected to the Arduino, but that is also easy to work with.

Finally, we need a way to map the computer vision output to Arduino data. We have a clear plan for developing the CV software and building the mechanical structure of the Mona Lisa, but we still need a good way to make the Mona Lisa's eyes accurately mirror the user's eyes. This will require a mathematical formula for converting the values correctly. We expect to refine it as the project progresses, fine-tuning the calculations to become more accurate as we build.
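One candidate for the conversion formula is a linear map from a normalized offset d in [-1, 1] to a servo angle, angle = min_angle + (d + 1) / 2 * (max_angle - min_angle), followed by smoothing to damp jitter from frame-to-frame detection noise. The sketch below assumes a 60 to 120 degree range and an exponential moving average with a tunable `alpha`; both are placeholders to be calibrated on the real linkage, and the names are hypothetical. Arduino's built-in `map()` performs the same linear mapping with integer arithmetic, so equivalent code could also run on the Arduino.

```python
def offset_to_angle(offset, min_angle=60.0, max_angle=120.0):
    """Linearly map a normalized offset in [-1, 1] to a servo angle in degrees.

    The 60-120 degree default range is a placeholder to be calibrated
    against the real eye linkage.
    """
    offset = max(-1.0, min(1.0, offset))  # clamp out-of-range input
    return min_angle + (offset + 1.0) / 2.0 * (max_angle - min_angle)


class Smoother:
    """Exponential moving average to damp jitter in the CV output."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha  # 0 < alpha <= 1; smaller means smoother but slower
        self.value = None

    def update(self, x):
        """Blend a new reading into the running average and return it."""
        if self.value is None:
            self.value = x  # first reading initializes the average
        else:
            self.value = self.alpha * x + (1 - self.alpha) * self.value
        return self.value


print(offset_to_angle(0.0))   # 90.0 (eye centered)
print(offset_to_angle(0.5))   # 105.0
smoother = Smoother(alpha=0.5)
print(smoother.update(90.0))  # 90.0
print(smoother.update(120.0)) # 105.0
```

Lower `alpha` values give steadier but slower eyes; this is likely the main trade-off to tune during calibration.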

Drawings
