For this project I wanted to build a digital audio workstation (DAW) that one could control entirely with one hand, with as little keyboard and mouse interaction as possible. The Leap Motion controller seemed like the easiest way to do this, but for a while I was stuck on how to control so many different parameters with just one hand. The gate object was the key here, used together with the machine learning patch we looked at in class. When the patch recognized a certain hand position, the gate would cycle to its next outlet and activate a different subpatch, each of which recognized its own set of hand gestures. For example, the gestures in mode 1 would control audio playback (pause, play, forward/reverse, etc.), the same gestures in mode 2 would control a spectral filter, in mode 3 they might control a MIDI drum sequencer, and so on for the other modes. At least, that was the idea in theory…
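
Outside of Max, the mode-switching idea can be sketched as a small state machine. This is a minimal Python sketch under stated assumptions, not the actual patch: the gesture labels (`mode_switch`, `fist`, `open_palm`) and the per-mode actions are hypothetical placeholders for whatever the trained classifier outputs and whatever each subpatch does. The `active` index plays the role of the gate's open outlet, roughly like a counter feeding the gate's control inlet.

```python
from typing import Callable, Dict, List

# Hypothetical label for the hand position that switches modes.
MODE_SWITCH = "mode_switch"


class GestureRouter:
    """Sends each recognized gesture to the handlers of the active mode,
    like a gate whose open outlet advances every time MODE_SWITCH is seen."""

    def __init__(self, modes: List[Dict[str, Callable[[], str]]]):
        self.modes = modes
        self.active = 0  # index of the currently open "outlet"

    def on_gesture(self, label: str) -> str:
        if label == MODE_SWITCH:
            # Advance to the next mode, wrapping back to the first one.
            self.active = (self.active + 1) % len(self.modes)
            return f"mode {self.active + 1}"
        # Same gesture, different meaning depending on the active mode.
        handler = self.modes[self.active].get(label)
        return handler() if handler else "ignored"


# Hypothetical gesture-to-action tables for three modes.
playback = {"fist": lambda: "pause", "open_palm": lambda: "play"}
spectral = {"fist": lambda: "filter: narrow band", "open_palm": lambda: "filter: bypass"}
drums = {"fist": lambda: "sequencer: stop", "open_palm": lambda: "sequencer: start"}

router = GestureRouter([playback, spectral, drums])
print(router.on_gesture("fist"))         # pause
print(router.on_gesture("mode_switch"))  # mode 2
print(router.on_gesture("fist"))         # filter: narrow band
```

Keeping each mode as its own lookup table mirrors the subpatch approach: adding a new mode means adding one more table rather than touching the gesture recognizer.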

I definitely bit off more than I could chew with this undertaking. There was far more I wanted to do than I could finish, and only a couple of modes ended up working semi-reliably. I intend to smooth out the process and eventually make the DAW as fluid and intuitive as possible. One day, a disabled music producer might be able to perform an entire set with one hand and not a single click.

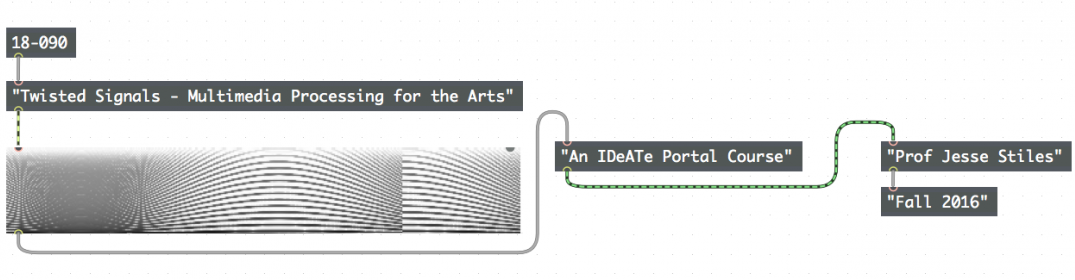

Here’s the (WIP) patch:

Thanks!