Artificial intelligence in the arts is growing increasingly complex. It’s 2022, and robots are co-creating art, NFTs are both thrilling and confusing art consumers around the world, and at least 85% of Americans have smartphones that give immediate access to endless amounts of streaming content. This article covers the recommendation algorithms built to deliver content to this majority, their cultural implications, and ways to control their bias.

Pervasive Algorithms

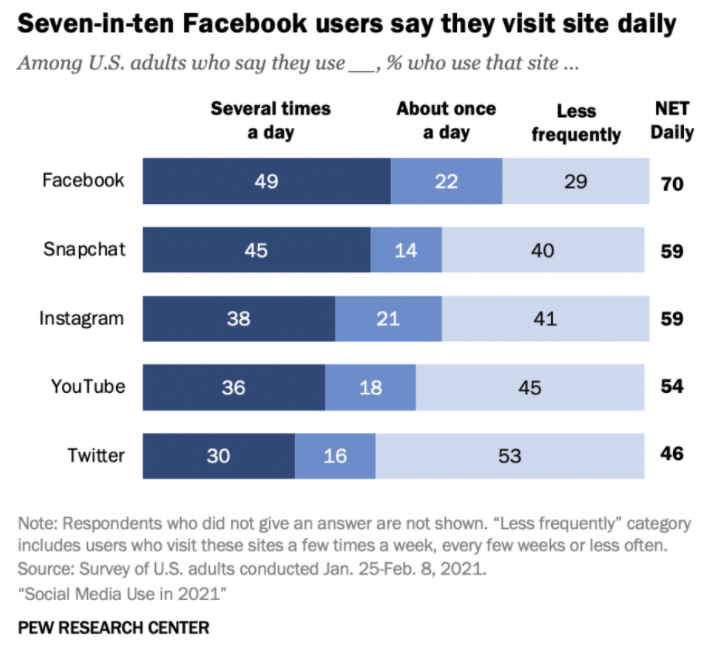

New technologies in the crypto realm and metaverse have distracted many from the increasing prevalence of social media and content streaming applications like Spotify, Facebook, YouTube, TikTok, and Twitter. So, before delving into the unsolved problems with these services, it is worth reviewing consumer data to see how important they are in our lives. According to the Pew Research Center, a majority of adults use social media applications at least once a day. The algorithms these applications use to present relevant content to their users vary technically across companies, but one element is common to all of them: the algorithms constantly improve their modeling capabilities using a combination of content-based, collaborative, and knowledge-based methods. Content-based methods use metadata about items to recommend what a user would like to see. Collaborative methods use user profiles and behavior, matching a user with others who behave similarly. Knowledge-based methods combine user and content data to share content relevant to specific users. The high-level goal of the algorithm, regardless of its specific structure, is to make the application more personal and enjoyable for its user.
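To make the difference between the first two methods concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the catalog, the listener names, and the use of simple set overlap (Jaccard similarity) as the matching rule. Real platforms use far larger and more sophisticated models, and the knowledge-based method is not shown.

```python
# Hypothetical toy catalog: item -> metadata tags (what content-based methods read).
ITEM_TAGS = {
    "song_a": {"pop", "dance"},
    "song_b": {"pop", "ballad"},
    "song_c": {"jazz", "instrumental"},
    "song_d": {"jazz", "ballad"},
}

# Hypothetical listening history: user -> items played (what collaborative methods read).
USER_HISTORY = {
    "ana": {"song_a", "song_b"},
    "ben": {"song_a", "song_c"},
    "cal": {"song_c", "song_d"},
}


def jaccard(a, b):
    """Overlap between two sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def content_based(user):
    """Rank unseen items by how much their tags overlap with what the user played."""
    seen = USER_HISTORY[user]
    liked_tags = set().union(*(ITEM_TAGS[item] for item in seen))
    scores = {
        item: jaccard(tags, liked_tags)
        for item, tags in ITEM_TAGS.items()
        if item not in seen
    }
    return sorted(scores, key=lambda item: (-scores[item], item))


def collaborative(user):
    """Rank unseen items by how similar their other listeners are to this user."""
    seen = USER_HISTORY[user]
    scores = {}
    for other, history in USER_HISTORY.items():
        if other == user:
            continue
        weight = jaccard(seen, history)  # how alike the two listeners are
        for item in history - seen:
            scores[item] = scores.get(item, 0.0) + weight
    return sorted(scores, key=lambda item: (-scores[item], item))


print(content_based("ana"))   # ['song_d', 'song_c']: song_d shares the "ballad" tag
print(collaborative("ana"))   # ['song_c', 'song_d']: ben, who also played song_a, played song_c
```

With the same listener and the same catalog, the two methods rank the unseen songs in opposite order, which is one reason platforms blend them.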

The Center for Humane Technology calls the cause of our daily reliance on personalized social media and content streaming applications “persuasive technology.” Advanced recommendation algorithms disrupt our daily lives because they constantly reinvent what we see to appeal to our psychological wiring. For example, recommending dramatic or violent social media content appeals to human curiosity, and endless scrolling lets users keep indulging that curiosity without limit. When asked to describe positive experiences with recommendation algorithms, a group of peers responded that they enjoy receiving a mixture of similar and new content, events to attend based on past consumption, and niche accounts that make them feel “extra special and unique.” The common thread in these sentiments is the feeling of being known by the application. Recommendation algorithm tuning has progressed to the point that individuals expect algorithms to understand them, and they reward these systems with their undivided attention when they do.

TikTok currently has one of the best content recommendation algorithms on the market in terms of capturing users’ interests and recommending desired content. It is estimated that 90%-95% of what TikTok users watch on the application comes from the TikTok algorithm’s recommendations. For reference, only approximately 70% of YouTube views can be traced to its recommendation algorithms, and YouTube is widely considered one of the best content streaming platforms, with more than 2 billion active users. TikTok still changes its algorithm often to improve its recommendations further, but the Wall Street Journal video “How TikTok’s Algorithm Figures You Out” (listed in the references) provides a general use case for exploring how it curates individualized content.

Cultural Implications – Spotify Case

A major unanswered question surrounding recommendation algorithms and their control over society’s content consumption is: how will these algorithms influence cultural traditions and preferences around art in the long term? Spotify’s recommendation algorithm is more transparent than those of most large content sharing applications, so it is the easiest example to use when explaining the potential cultural disruptions that recommendation algorithms facilitate.

Before platforms like Spotify existed, music was a means of cultural expression. Communities shared songs live from generation to generation to tell stories about their struggles and triumphs, and even in the early 2000s when CDs, mp3 players, and iPods were prevalent, music exposure was dictated largely by the community individuals lived in. However, biases in Spotify’s algorithms are now changing the narrative of how music integrates with society, because the algorithms expose listeners only to the culture(s) the algorithms already understand.

The first bias that influences what the Spotify algorithm shares is the popularity bias. This bias occurs when a user enters Spotify’s user matrix upon creating an account. The system has little context with which to build successful recommendations at this point, so the application is programmed to begin by presenting a selection of popular music to the user to kick off their content personalization. The more the user listens, the more Spotify can narrow from a broad list of hits to their most resonant, curated content, creating what has been referred to as an “echo chamber” of listening behavior. Because algorithms are influenced by user behavior, this programming also results in a feedback loop between users and the app that can only be broken if the user searches for content outside of the types Spotify presents. There is no way to know exactly how this tunneled call and response between the Spotify user and the recommendation algorithm will change our society’s relationship to music, but there have already been urgent calls to redesign music streaming algorithms due to their gender biases. This issue hints at a turbulent relationship between the streaming music industry and equitable music access that has surely changed society’s music affinities already.
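The cold-start fallback and the feedback loop described above can be sketched as a toy simulation in Python. This is not Spotify’s actual logic: the track names, play counts, and the rule “recommend whatever the user has played most” are all hypothetical, chosen only to show how the loop closes and how a single search can open it.

```python
from collections import Counter

# Hypothetical global play counts, used only when a new account has no history.
GLOBAL_PLAYS = Counter({"hit_1": 900, "hit_2": 700, "indie_1": 40, "folk_1": 25})


def recommend(user_plays, k=2):
    """Fall back to global popularity until the user has a history of their own."""
    if not user_plays:
        return [track for track, _ in GLOBAL_PLAYS.most_common(k)]
    return [track for track, _ in user_plays.most_common(k)]


def simulate(rounds, explore_at=None):
    """Each round the user plays what is recommended; optionally they search once."""
    user_plays = Counter()
    for step in range(rounds):
        for track in recommend(user_plays):
            user_plays[track] += 1
        if step == explore_at:
            # Assumed behavior: the user searches for a folk track and replays it.
            user_plays["folk_1"] += 5
    return user_plays


passive = simulate(rounds=5)
print(sorted(passive))  # ['hit_1', 'hit_2']: the listener never leaves the initial hits

curious = simulate(rounds=5, explore_at=1)
print(recommend(curious)[0])  # folk_1: one search reshapes later recommendations
```

In the passive run the two global hits are the only tracks the listener ever hears, because each play reinforces the next recommendation. In the second run, a single search outside the presented content is enough to pull a low-popularity track to the top of the list.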

Making Algorithms Less Biased

First and foremost, recommendation algorithms are largely built to serve their application’s parent company. The more time users spend on the applications, the more money the companies that develop them make. For these platforms to be sustainable and fair to the artists, musicians, and content creators that use them, however, their developers would need to change their goal and code with close attention to the biases they feed into these systems. Because we know that these systems have impactful and unchecked influences on our culture, we must think of their development as a venture in computational social science. An algorithm’s development team can do a lot to reduce bias in a model’s performance by bringing interdisciplinary perspectives into the room when building it. To mitigate instances of bias in algorithmic design, the developers must first consider diversifying their team.

1. Diversify Development Teams

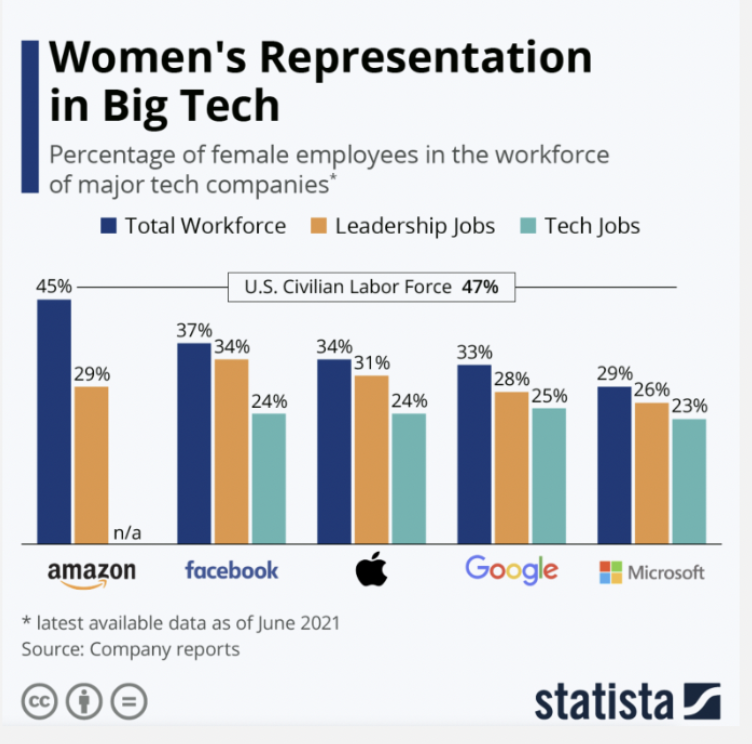

As Kriti Sharma states in “How to keep human bias out of AI”, many women in the computer science field are not respected, trusted, or valued for their capabilities and intelligence as highly as their male colleagues. This issue in the technology field may relate to the fact that gender biases have been discovered in music streaming services. Based on statistics from big technology companies like Google and Facebook, it is likely that the pervasive algorithms behind such services are primarily developed by men. Although big tech companies are making great strides to change this, it is also likely that these algorithms are being developed mostly by individuals who identify as White or Asian.

It takes diverse minds to nurture an algorithm to make diverse decisions. In other words, the more perspectives a development team can engage about how algorithms could potentially leave cultures behind in their data, behaviors, and reach, the less likely it is that the algorithm will be culturally disruptive in a homogenizing manner. Potentially, a diverse team can even craft an algorithm that uplifts diverse and marginalized voices in ways the physical world does not, changing our world for the better. Training diverse teams to understand and manage their personal biases could also help them grow toward making socially informed and equitable algorithmic decisions.

2. Talk about the Data

The complexity of recommendation algorithms is beyond comprehension for most individuals, so it can be difficult to fathom how to improve them from the outside and ensure they fairly represent diverse creators. However, it is widely known that humans create algorithms by feeding them massive amounts of data and fine-tuning their responses to improve the accuracy of their suggestions. The training data that developers choose to give the algorithms matters because the algorithm can only respond within the confines of the information it is given. To provide an oversimplified example of why this is important to artists, imagine that a new music streaming service is building a simple recommendation algorithm. The developers feed the algorithm a training dataset full of the song metadata and user listening behavior for 200,000 pop music lovers. Then, the service recommends a song to a new user who rarely listens to music by collaboratively matching the user with the profiles in this dataset. The algorithm will likely recommend a pop song to the new user because pop is all it has learned.

The issue in the above example scenario is that having only pop fans’ data in the algorithm’s training set makes it less likely that a user will get exposure to other types of music that the service provides. For an artist outside the pop genre, this means that they may not gain exposure simply because of this data oversight. It is therefore crucial that developers think about diversity and popularity rankings not just on their team, but also in the data they feed their algorithms when training them. Over the past few years, lawmakers have proposed over 100 bills in over 20 states aiming to make data sharing more transparent within the AI and recommendation system space and to pressure companies to think about data implementation biases.
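The pop-only scenario can be reproduced at a tiny scale. The Python sketch below is an illustration under stated assumptions, not a real service’s method: the track names, the handful of training profiles, and the matching rule (pick the profiles that share the most tracks with the new user, then vote on genre) are all hypothetical stand-ins for the 200,000-profile dataset in the example.

```python
from collections import Counter

# Hypothetical track -> genre lookup.
GENRE_OF = {
    "pop_1": "pop", "pop_2": "pop", "pop_3": "pop",
    "jazz_1": "jazz", "jazz_2": "jazz", "jazz_3": "jazz",
}

# Two hypothetical training sets: pop fans only, and pop fans plus jazz fans.
POP_ONLY = [{"pop_1", "pop_2"}, {"pop_2", "pop_3"}, {"pop_1", "pop_3"}]
MIXED = POP_ONLY + [{"jazz_1", "jazz_2"}, {"jazz_2", "jazz_3"}]


def recommend_genre(new_user_tracks, training_profiles):
    """Recommend the genre favored by the training profiles most like the new user."""
    def overlap(profile):
        return len(new_user_tracks & profile)

    best = max(overlap(profile) for profile in training_profiles)
    neighbors = [p for p in training_profiles if overlap(p) == best]
    # Count genres of the tracks the neighbors played that the new user has not.
    votes = Counter(
        GENRE_OF[track] for profile in neighbors for track in profile - new_user_tracks
    )
    return votes.most_common(1)[0][0] if votes else None


new_user = {"jazz_1"}  # a light listener with a single jazz play

print(recommend_genre(new_user, POP_ONLY))  # pop: no profile resembles this user
print(recommend_genre(new_user, MIXED))     # jazz: a matching profile now exists
```

The algorithm is identical in both calls. Only the training data changes, and with it the genre the new listener is shown.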

3. The Power of the User

One of the most exciting elements of recommendation algorithms is that they are always evolving. While the onus is on the companies that create them to protect artists and content creators by making sure all content has a fair chance of being presented to users on their platforms, individuals can make up for the algorithms’ blind spots through their consumption behavior. For example, using application search features to find new content outside of the genres typically presented teaches the algorithm to nurture a diverse individual content ecosystem.

It will ultimately take collaborative efforts, beginning at the highest level of technology team recruitment and stepping down to the individual’s consumption behavior, to mitigate algorithmic biases. While extensive, this work will shape the long-term cultural and artist equity implications of popular recommendation algorithms, and with them how our society engages with art in the future.

References

“22 Examples of Artificial Intelligence in Daily Life (2022) | Beebom.” Accessed February 20, 2022. https://beebom.com/examples-of-artificial-intelligence/.

Amazon Science. “The History of Amazon’s Recommendation Algorithm,” November 22, 2019. https://www.amazon.science/the-history-of-amazons-recommendation-algorithm.

AMT Lab @ CMU. “How Streaming Services Use Algorithms.” Accessed February 27, 2022. https://amt-lab.org/blog/2021/8/algorithms-in-streaming-services.

AMT Lab @ CMU. “Streaming Service Algorithms Are Biased, Directly Affecting Content Development.” Accessed February 27, 2022. https://amt-lab.org/blog/2021/11/streaming-service-algorithms-are-biased-and-directly-affect-content-development.

Auxier, Brooke, and Monica Anderson. “Social Media Use in 2021.” Pew Research Center: Internet, Science & Tech (blog), April 7, 2021. https://www.pewresearch.org/internet/2021/04/07/social-media-use-in-2021/.

“Bias in AI: What It Is, Types, Examples & 6 Ways to Fix It in 2022,” September 12, 2020. https://research.aimultiple.com/ai-bias/.

Born, Georgina. “Artificial Intelligence, Music Recommendation, and the Curation of Culture: A White Paper,” n.d., 27.

Borodescu, Ciprian. “The Anatomy of High-Performance Recommender Systems – Part 1 – Algolia Blog.” Algolia Blog. Accessed February 21, 2022. https://www.algolia.com/blog/ai/the-anatomy-of-high-performance-recommender-systems-part-1/.

“DI_CIR-State-of-AI-4th-Edition.Pdf.” Accessed February 20, 2022. https://www2.deloitte.com/content/dam/insights/articles/US144384_CIR-State-of-AI-4th-edition/DI_CIR-State-of-AI-4th-edition.pdf.

Ferraro, Andrés, and Christine Bauer. “Music Recommendation Algorithms Are Unfair to Female Artists, but We Can Change That.” The Conversation. Accessed February 21, 2022. http://theconversation.com/music-recommendation-algorithms-are-unfair-to-female-artists-but-we-can-change-that-158016.

“Guide to Customer Experience Digital Transformation in 2022,” May 31, 2020. https://research.aimultiple.com/cx-dx/.

“How to Keep Human Bias out of AI | Kriti Sharma – YouTube.” Accessed February 24, 2022. https://www.youtube.com/watch?v=BRRNeBKwvNM&t=17s.

Influencive. “The Future of Art: A Recommendation-Engine-Powered, Fully-Customizable, NFT Marketplace,” February 17, 2022. https://www.influencive.com/the-future-of-art-a-recommendation-engine-powered-fully-customizable-nft-marketplace/.

Knibbe, Julie. “Fairness, Diversity & Music Recommendation Algorithms.” Music Tomorrow (blog), September 21, 2021. https://music-tomorrow.com/2021/09/fairness-and-diversity-in-music-recommendation-algorithms/.

Pew Research Center. “Demographics of Mobile Device Ownership and Adoption in the United States.” Pew Research Center: Internet, Science & Tech (blog). Accessed February 27, 2022. https://www.pewresearch.org/internet/fact-sheet/mobile/.

Pastukhov, Dmitry. “Inside Spotify’s Recommender System: A Complete Guide to Spotify Recommendation Algorithms.” Music Tomorrow (blog), February 9, 2022. https://music-tomorrow.com/2022/02/how-spotify-recommendation-system-works-a-complete-guide-2022/.

“Persuasive Technology.” Accessed February 20, 2022. https://www.humanetech.com/youth/persuasive-technology.

“Programmers, Lawmakers Want A.I. to Eliminate Bias, Not Promote It.” Accessed February 27, 2022. https://pew.org/3v1vGYh.

Schwartz Reisman Institute. “Algorithms in Art and Culture: New Publication Explores Music in the Age of AI.” Accessed February 21, 2022. https://srinstitute.utoronto.ca/news/ai-music-recommendation-and-the-curation-of-culture.

Social Media Marketing & Management Dashboard. “How Does the YouTube Algorithm Work in 2021? The Complete Guide,” June 21, 2021. https://blog.hootsuite.com/how-the-youtube-algorithm-works/.

Teichmann, Jan. “How to Build a Recommendation Engine Quick and Simple.” Medium, August 6, 2020. https://towardsdatascience.com/how-to-build-a-recommendation-engine-quick-and-simple-aec8c71a823e.

The Social Dilemma. “The Social Dilemma – A Netflix Original Documentary.” Accessed February 27, 2022. https://www.thesocialdilemma.com/.

TrustRadius Blog. “2020 People of Color in Tech Report,” September 21, 2020. https://www.trustradius.com/vendor-blog/people-of-color-in-tech-report.

Wall Street Journal. How TikTok’s Algorithm Figures You Out | WSJ, 2021. https://www.youtube.com/watch?v=nfczi2cI6Cs.

“Workforce Representation – Google Diversity Equity & Inclusion.” Accessed February 27, 2022. https://diversity.google/annual-report/representation/.

“How Top Tech Companies Are Addressing Diversity and Inclusion.” CIO (blog). Accessed February 27, 2022. https://www.cio.com/article/193856/how-top-tech-companies-are-addressing-diversity-and-inclusion.html.

“YouTube User Statistics 2022 | Global Media Insight.” Accessed March 1, 2022. https://www.globalmediainsight.com/blog/youtube-users-statistics/.