For this next phase, we worked on connecting the software and hardware.

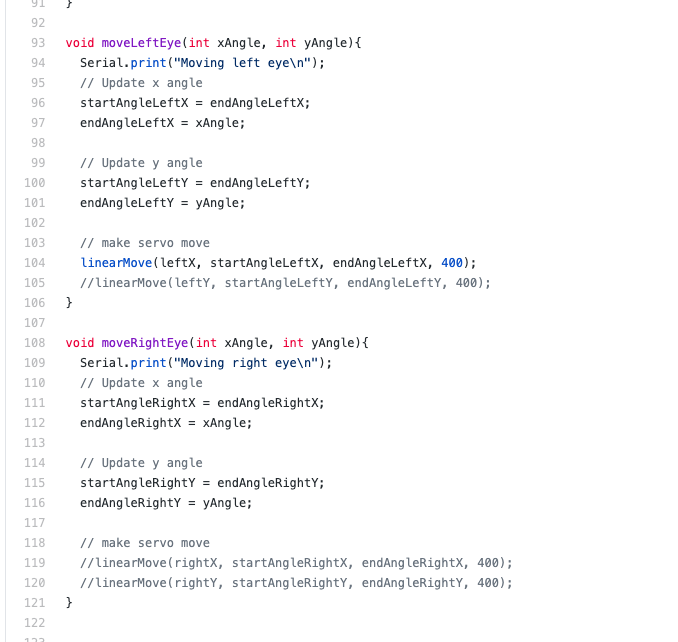

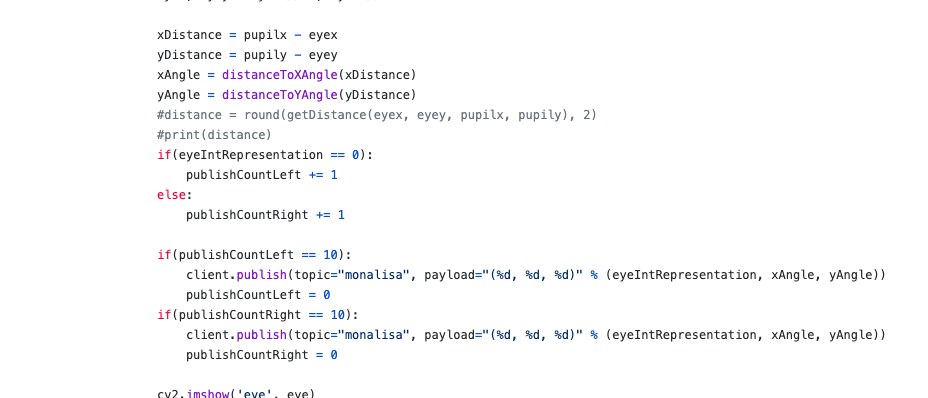

For the software, Max finished the Arduino code that sets up four servos to move the eyes: one for the X direction of the left eye, one for the Y direction of the left eye, one for the X direction of the right eye, and one for the Y direction of the right eye. This allows two-dimensional eye movement. We also changed the eye-tracking code so that not every message is sent to the Arduino. If we sent every message, the servos could not physically finish moving before the next message arrived. The downside is a slight delay: the device's eyes lag a little behind the user's eye movements.
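The post doesn't say exactly how the eye-tracking code decides which messages to skip, so the following is only a minimal sketch of one common approach, a time-based throttle, written in Python for illustration. The class name `GazeThrottle` and the 100 ms interval are hypothetical, not values from the project: a sample is forwarded only if enough time has passed since the last forwarded one, and everything in between is dropped.

```python
import time


class GazeThrottle:
    """Forward a gaze sample only if enough time has passed since the last one.

    Hypothetical helper: min_interval_ms is an assumed tuning value,
    not a number taken from the project.
    """

    def __init__(self, min_interval_ms=100):
        self.min_interval_ms = min_interval_ms
        self._last_sent_ms = None  # timestamp of the last forwarded sample

    def should_send(self, now_ms=None):
        """Return True if a sample arriving at now_ms should go to the Arduino."""
        if now_ms is None:
            now_ms = time.monotonic() * 1000.0
        if (self._last_sent_ms is not None
                and now_ms - self._last_sent_ms < self.min_interval_ms):
            return False  # too soon: the servos are likely still moving
        self._last_sent_ms = now_ms
        return True


# Simulated tracker samples arriving every 30 ms for 300 ms (11 samples).
throttle = GazeThrottle(min_interval_ms=100)
sent = [t for t in range(0, 301, 30) if throttle.should_send(t)]
print(sent)  # → [0, 120, 240]
```

Only 3 of the 11 samples reach the servos here. A larger interval gives the servos more time to finish each move but makes the lag described above worse; a smaller one does the opposite.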

Marione finished the hardware, which had two parts: the external box and the eye component. She built the external box from a combination of cardboard and plywood. For the eye component, she made the eyeball from a clay ball, which supported motion along the x-axis, while an outer ring made of cardboard supported movement along the y-axis. The clay caused problems because it was difficult to attach other structures to it; even superglue held only for a limited time. Instead, we tried attaching the servos to the clay eyeball using paperclips.

Through this testing phase, we identified several parts of the project to tweak. First, the angles were too small, so we increased the change in the angles in the code to a value that we think better represents the mapping from the user's eye to the machine's eye. Second, the paperclips kept disconnecting from the clay ball, so we plan to use a ping pong ball instead (we still need to get one); it should make it easier to keep the servos linked to the eye. Finally, we still need to strike a balance between reducing the eye delay described above and not sending so many messages that the servos cannot keep up.
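To make "increasing the change in the angles" concrete, here is a minimal sketch of a linear gaze-to-servo mapping with an adjustable gain, again in Python for illustration. Everything in it is an assumption rather than the project's code: the tracker is assumed to report each eye's position as a normalized offset in [-1, 1], the servos are assumed to center at 90° within a 0 to 180° range, and the names `gaze_to_angle`, `eye_command`, and `gain_deg` are hypothetical. Raising `gain_deg` makes the same eye movement produce a larger servo swing.

```python
SERVO_CENTER_DEG = 90  # assumed neutral position for each servo
SERVO_MIN_DEG = 0      # assumed hobby-servo range; adjust to the mechanism
SERVO_MAX_DEG = 180


def gaze_to_angle(offset, gain_deg):
    """Map a normalized gaze offset in [-1, 1] to a servo angle in degrees.

    offset: hypothetical normalized tracker reading (0 = looking straight ahead).
    gain_deg: degrees of servo swing for a full-scale offset; a larger value
              means a larger change in angle for the same eye movement.
    """
    angle = SERVO_CENTER_DEG + offset * gain_deg
    # Clamp so an extreme reading never drives the servo past its limits.
    return int(round(max(SERVO_MIN_DEG, min(SERVO_MAX_DEG, angle))))


def eye_command(left_xy, right_xy, gain_deg):
    """Return the four servo angles: left X, left Y, right X, right Y."""
    return [
        gaze_to_angle(left_xy[0], gain_deg),
        gaze_to_angle(left_xy[1], gain_deg),
        gaze_to_angle(right_xy[0], gain_deg),
        gaze_to_angle(right_xy[1], gain_deg),
    ]


# The same gaze reading with a small and a doubled gain.
print(eye_command((0.5, -0.25), (0.5, -0.25), gain_deg=20))  # → [100, 85, 100, 85]
print(eye_command((0.5, -0.25), (0.5, -0.25), gain_deg=40))  # → [110, 80, 110, 80]
```

The clamp matters once the gain is raised: a large gain combined with an extreme reading could otherwise request an angle the servo or the eye mechanism cannot reach.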

Here is a video of our testing phase:
