Project Objectives

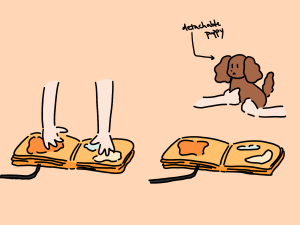

Lunch Time for the Puppy is an interactive children’s fabric book. The book is made from felt and other fabric materials with different textures, and is embedded with soft sensors and electronic output elements to create a rich storytelling experience. We produced a proof-of-concept prototype of the design by making one page of the book for demonstration. Our vision for the project is an entire book fabricated with similar methods and built around the main character, the detachable interactive puppy. The complete story would contain various scenarios of the puppy at places around the house.

Interaction and Story Outline

The puppy is designed to be detachable and responsive, able to be reattached to various positions throughout the fabric book. The fabric book contains different scenes of a puppy’s day and each page corresponds to different behaviors of a puppy. For example, petting the puppy in the shower is different from petting the puppy before going for a walk.

Waiting for a walk

Shower time

Cuddle on couch

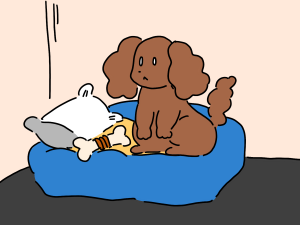

Bedtime

Creative Design Opportunities

With our successful implementation and modification of the methods provided by the Multi-Touch Kit research paper (reference 1), we believe that capacitive sensing with an off-the-shelf microcontroller board can be used for prototyping and designing touch-screen-like interactions for fabric, static flat surfaces, and the surfaces of other 3D objects. The technique can augment children’s fabric books with digital interactions in addition to the original material-based interactions. A soft sensor grid can be integrated with the design of other soft materials for a more unified look of the book.

We see the possibility of creating open-ended storylines by adding different states of the behaviors. The rich interactive quality could also help reduce screen time for children.

Prototyping Process

In this section, we will discuss sensor iterations and software modifications during the prototyping process.

Sensor Iterations

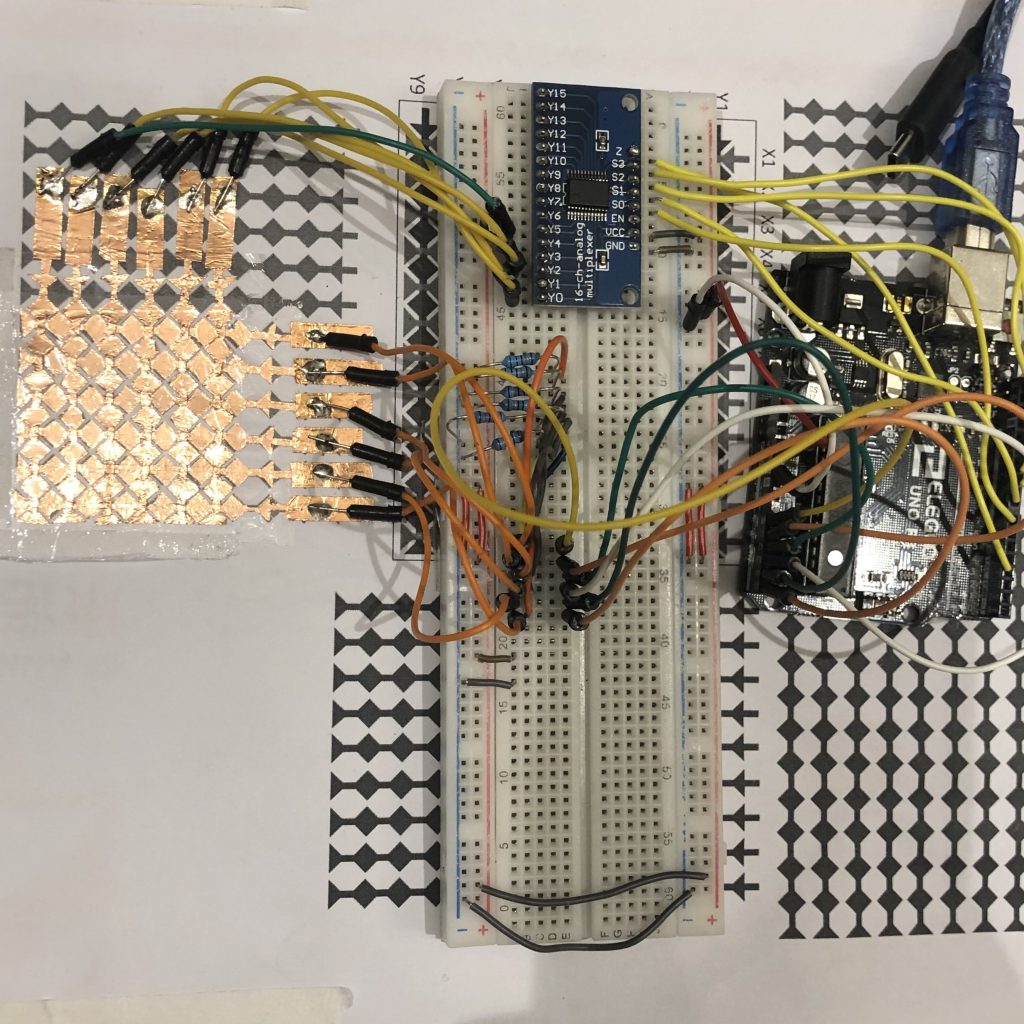

We fabricated our sensors using instructions from the original research project. The materials and tools we used were copper tape, thin double-sided tape, paper, scissors, and X-Acto knives.

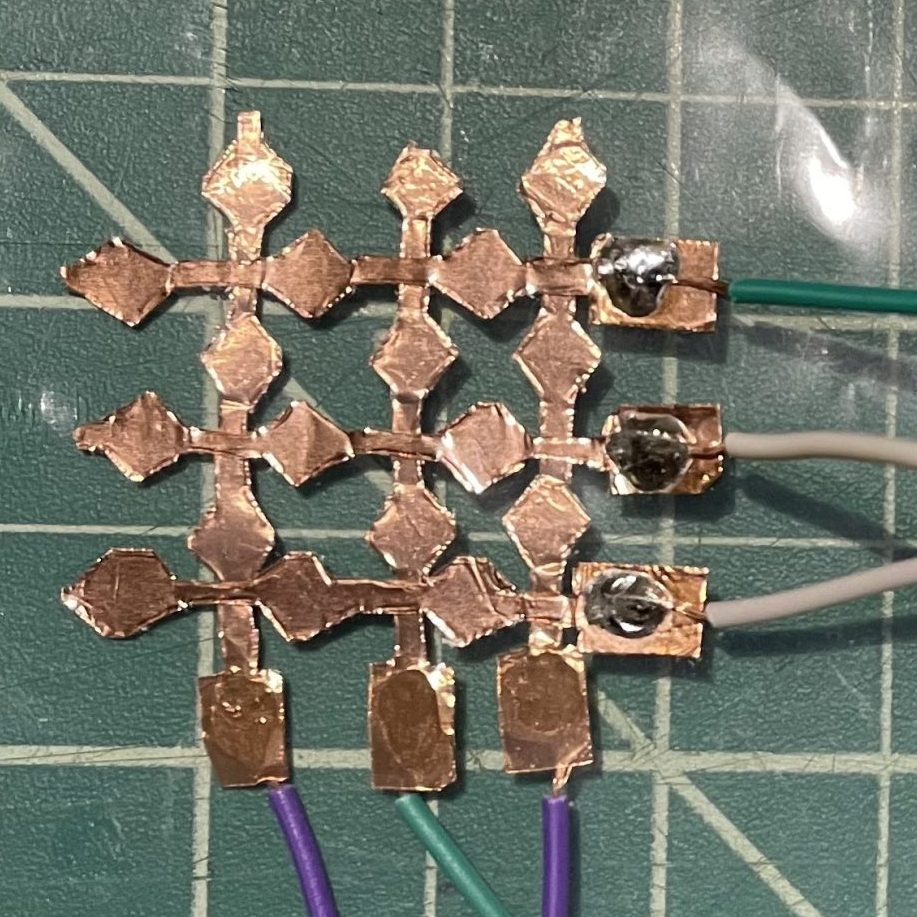

The first grid (3×3)

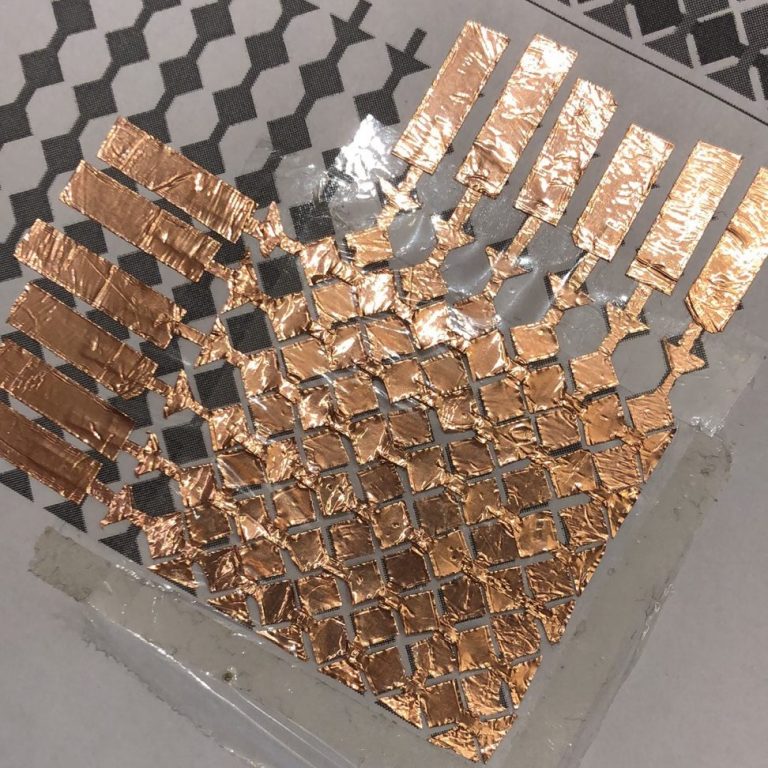

The second grid (6×6)

The third grid (6×6 on page)

The very first grid we made was of size 3×3. Copper tape was applied to opposite sides of a thin plastic sheet. We made this grid to test whether the provided Multi-Touch Kit works at all.

We looked for ways to ease the fabrication process. We found the sensor design guideline and printed out the patterns for easy tracing. After a few attempts we fabricated the sensors using the following process:

- Cut off a piece of paper that contains a strip of the grid from the printed pattern

- Tape the strip to the back of the conductive tape

- Cut the taped conductive tape along the traces

- Use the X-Acto knife to cut the vertical traces for the thin lines connecting the diamonds

- Use the scissors to cut along the diamonds

- Peel off the backing of the conductive tape and stick it down with the guidance of the printed pattern

The second grid we made was of size 6×6. The top layer of copper tape was taped onto a plastic sheet, the bottom layer was taped onto paper, and the plastic sheet was taped on top of the paper. This sensor grid had trouble recognizing light touches, which we suspected was caused by gaps between the plastic sheet and the paper.

The third and final grid we made was also of size 6×6. The copper tape was applied the same way as in the first sensor grid: to opposite sides of a thin plastic sheet. This sensor worked relatively reliably, and light touches could be detected.

Software Modifications

We built our software implementation using the Processing sketch from the original research project. Here is what the sketch does:

- Read analog values from the pins

- Set a baseline

- Use BlobDetection to identify and locate touches

- Use OpenCV to visualize the touches
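To make the steps above concrete, here is a minimal Python sketch of the same kind of pipeline on a small grid: subtract a baseline, threshold the difference, group neighboring active cells, and report the center of each group. It is not the project's Processing sketch and does not use the BlobDetection library; the flood-fill grouping, the function name `find_touches`, and the `threshold` value are assumptions chosen for illustration.

```python
from collections import deque


def find_touches(frame, baseline, threshold=20):
    """Return (row, col) centers of touched regions in one grid frame.

    frame and baseline are lists of rows of raw readings with the same shape.
    The threshold is a hypothetical value, not one taken from the project.
    """
    rows, cols = len(frame), len(frame[0])
    # A cell counts as active when it deviates enough from the baseline.
    active = [
        [abs(frame[r][c] - baseline[r][c]) > threshold for c in range(cols)]
        for r in range(rows)
    ]
    seen = [[False] * cols for _ in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            if not active[r][c] or seen[r][c]:
                continue
            # Flood fill over 4-connected neighbors to collect one region.
            queue = deque([(r, c)])
            seen[r][c] = True
            cells = []
            while queue:
                cr, cc = queue.popleft()
                cells.append((cr, cc))
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and active[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # The center of the region is the mean of its cell coordinates.
            center_row = sum(cell[0] for cell in cells) / len(cells)
            center_col = sum(cell[1] for cell in cells) / len(cells)
            touches.append((center_row, center_col))
    return touches
```

On a 6×6 grid a single finger can activate several adjacent cells, which is why the sketch reports one center per connected region rather than one touch per cell.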

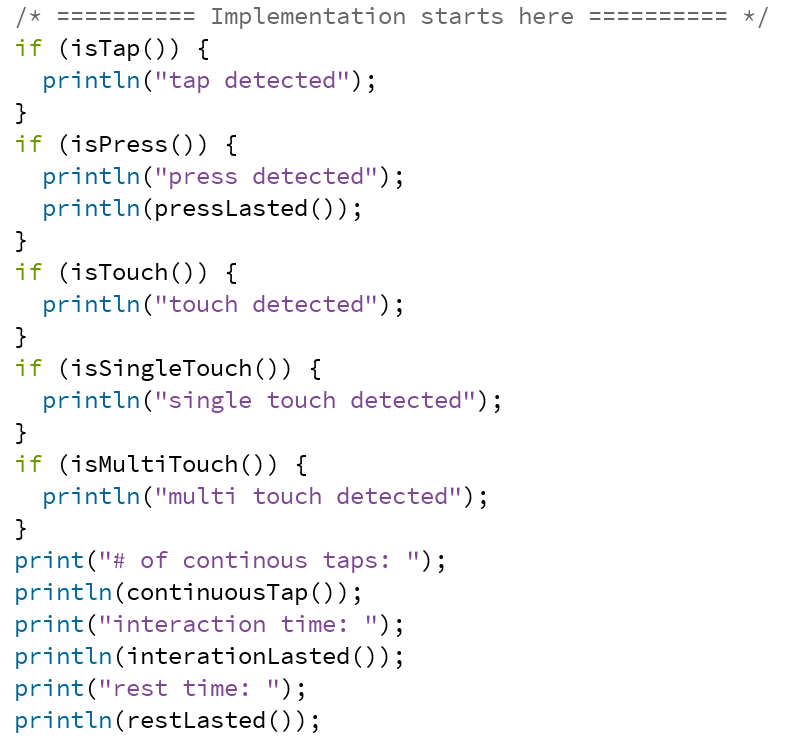

We extracted the results of blob detection to perform gesture recognition.

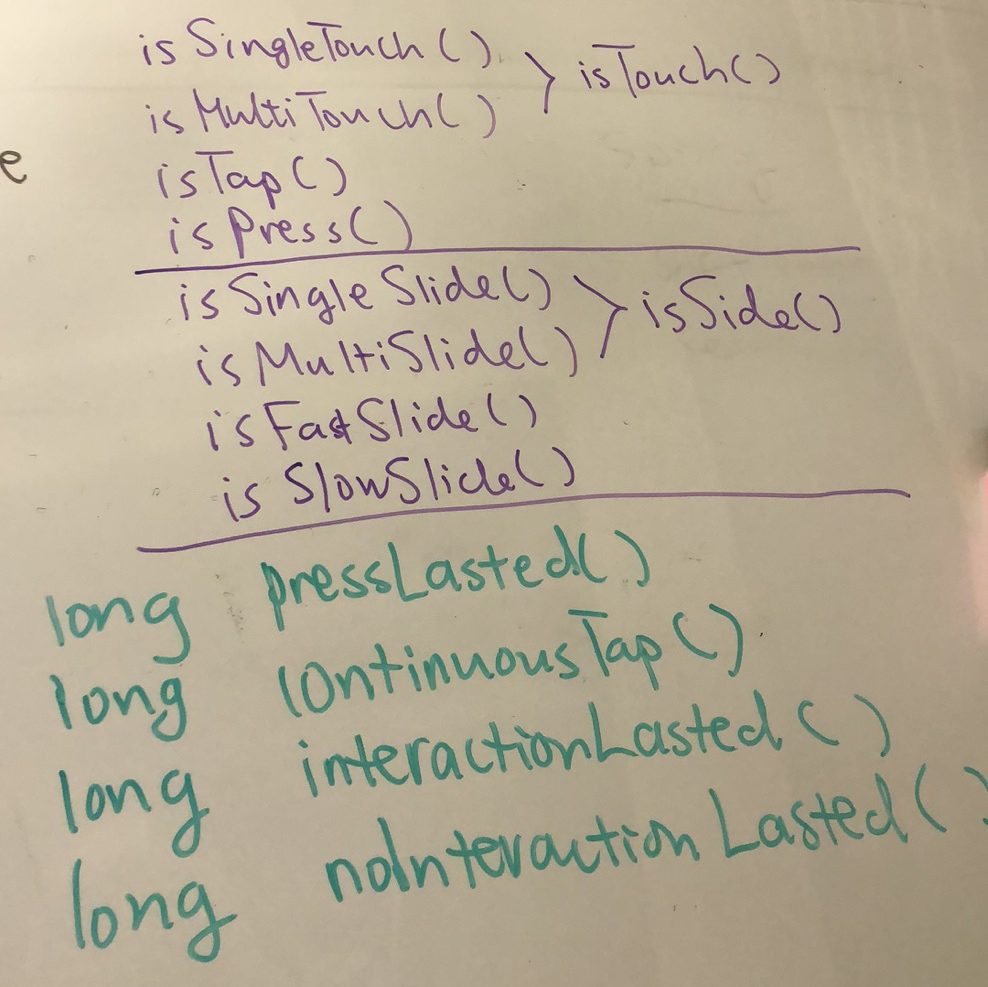

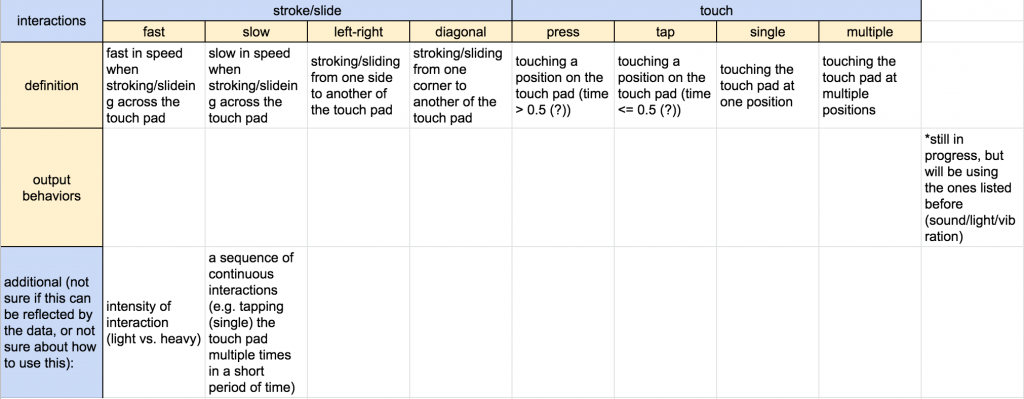

Initially, we wanted to implement recognition of the set of gestures below.

We then ran into trouble when trying to detect sliding behavior, partly because the grid has a low resolution and cannot distinguish a slide from a touch that covers multiple positions at the same time. Thus we decided to set sliding aside and use the other interactions.

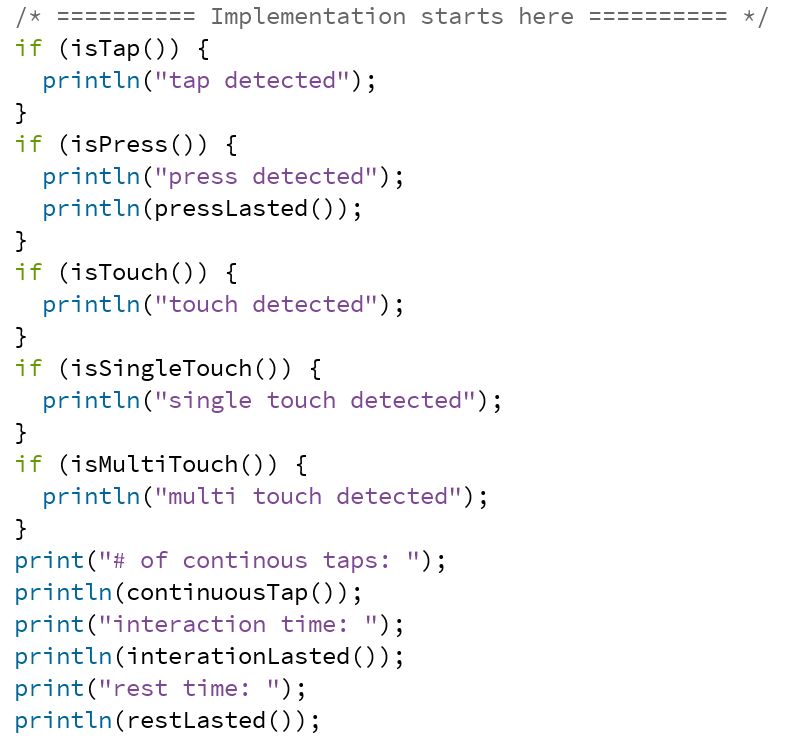

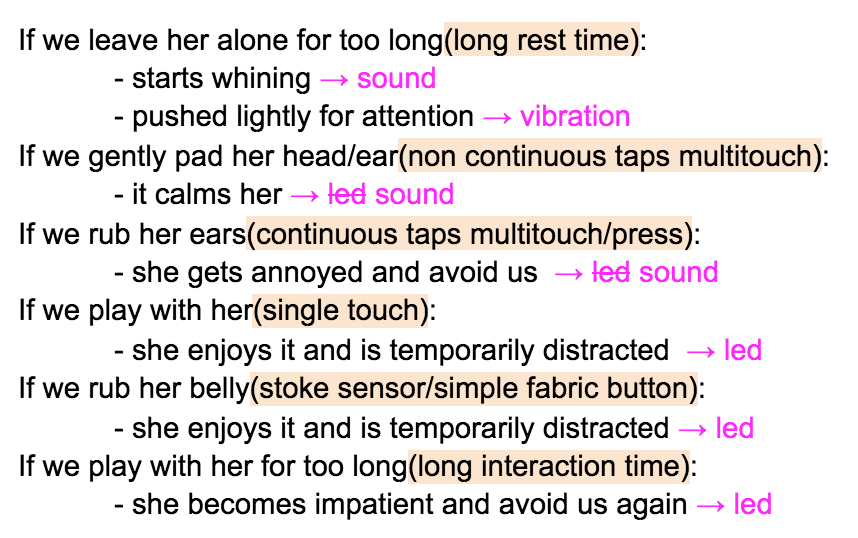

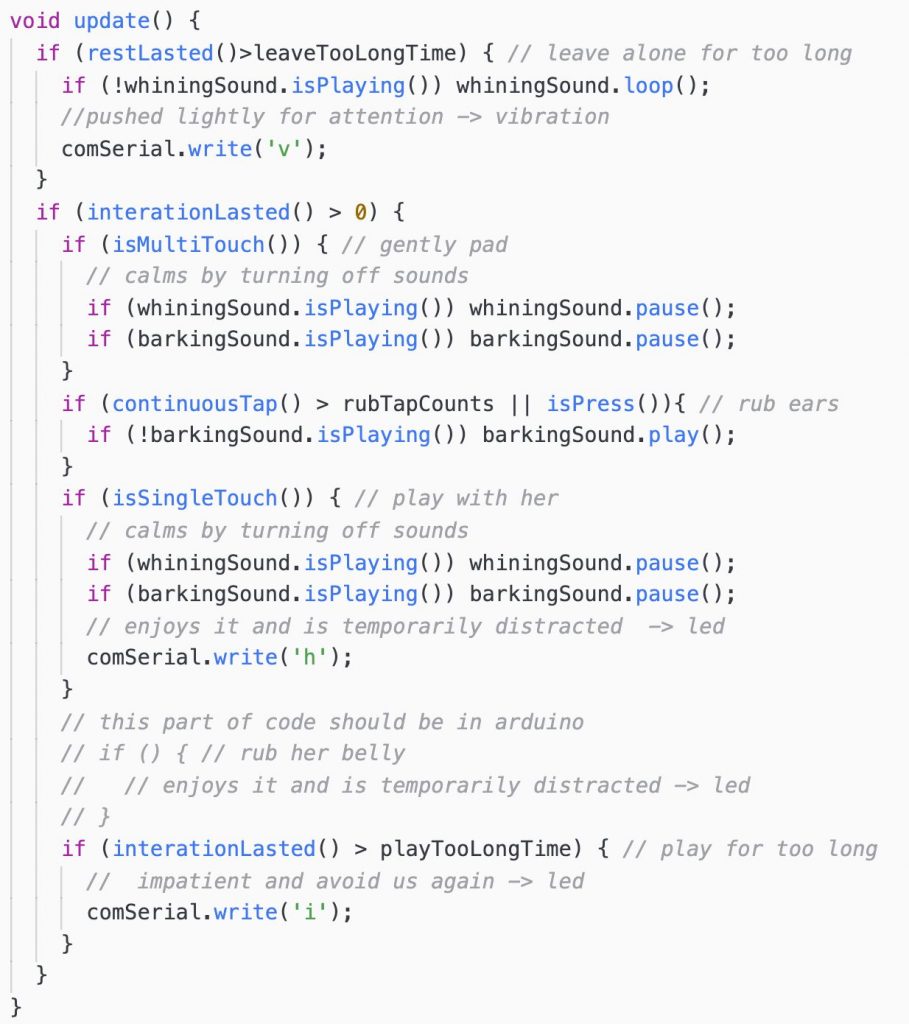

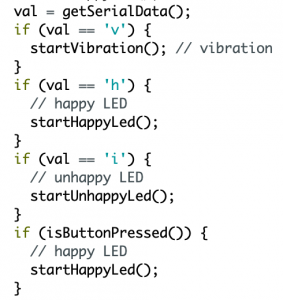

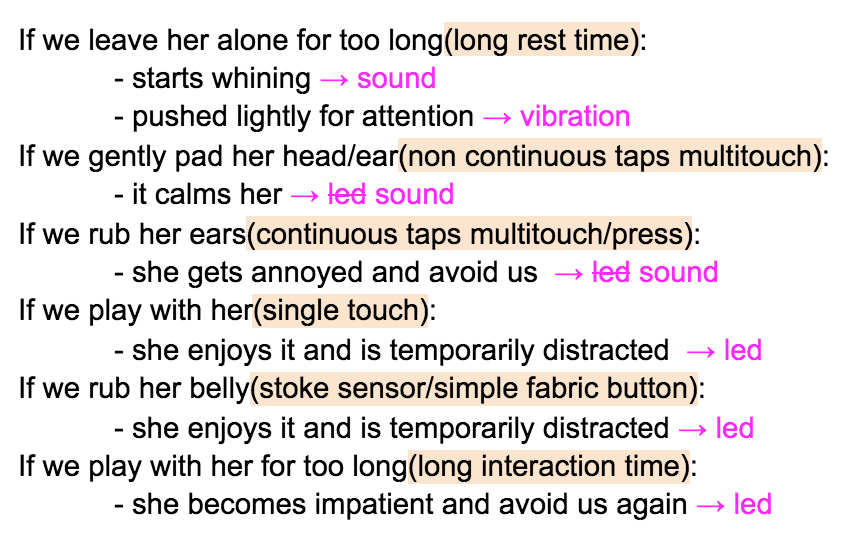

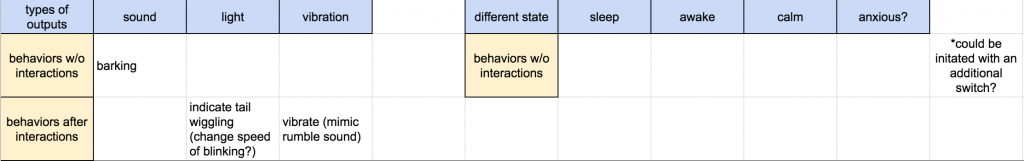

Above is the API we ended up implementing. Note that we use the number of continuous taps to detect slides. Using the available gestures, we were able to map them to the output behaviors shown below.

Full page

Close up of the grid

Movable paws
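The note above about using the number of continuous taps to detect slides can be sketched as follows. This is only an illustration in Python, not the project's code: the class name `TapCounter`, the `max_gap` time window, and the `slide_taps` count are all hypothetical choices.

```python
class TapCounter:
    """Treat a run of closely spaced taps as a slide (illustrative only)."""

    def __init__(self, max_gap=0.4, slide_taps=3):
        self.max_gap = max_gap        # assumed: max seconds between taps in one run
        self.slide_taps = slide_taps  # assumed: taps in a run needed to call it a slide
        self.count = 0
        self.last_time = None

    def on_tap(self, timestamp):
        """Register a tap at the given time and return 'slide' or 'tap'."""
        if self.last_time is not None and timestamp - self.last_time <= self.max_gap:
            self.count += 1  # the tap continues the current run
        else:
            self.count = 1   # too long since the last tap: start a new run
        self.last_time = timestamp
        return "slide" if self.count >= self.slide_taps else "tap"
```

Counting taps sidesteps the need to track a moving contact across a low-resolution grid, at the cost of not knowing the direction of the slide.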

Outcomes

In this section, we will discuss the successes and failures of our choices, things we’ve learned, and how future work would further improve the results.

Successes

- Successful implementation of the multi-touch kit with software modifications tailored to our purpose: With the limited resources we had, a lot of our time was spent on figuring out how to fabricate the sensor grid. Though our hand-cutting method is nowhere near scalable, it is sufficient for a small sensing area like the one we have.

- Exploration of an interactive fabric book with more complex interactions: Earlier work on tangible interactive books has focused on simple e-textile inputs (e.g. buttons and sliders) and thermochromic paint. We believe that our project is a proof of concept for an interactive fabric book with unconstrained interaction sites. In other words, the entire page or a detachable piece of the page could act like a touch screen. Further research would be needed to determine the effects of such interactions.

- Variations of behaviors: To bring the puppy more to life, we implemented a very naive state machine so that the puppy has different states that represent different moods. The states are very limited right now and not all state changes have corresponding outputs; future work could increase the complexity of the state machine.
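A naive mood state machine of the kind described in the last item could look like the Python sketch below. The mood names, gesture names, and transitions are assumptions made for illustration; they are not the states implemented in the project.

```python
# Hypothetical transition table: (current mood, gesture) -> next mood.
TRANSITIONS = {
    ("neutral", "gentle_touch"): "happy",
    ("neutral", "hard_press"): "unhappy",
    ("happy", "hard_press"): "neutral",
    ("unhappy", "gentle_touch"): "neutral",
}


def next_mood(mood, gesture):
    """Return the next mood; unlisted combinations leave the mood unchanged."""
    return TRANSITIONS.get((mood, gesture), mood)
```

Keeping the transitions in a table makes it easy to add moods or gestures later without touching the lookup logic.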

Failures

- Sensor grid robustness: The sensor grid has a low resolution because it is very small. With a 6×6 grid, there are 36 ‘pixels’ in total, but a typical touch contact can easily cover up to 10 pixels. The use of blob detection also introduces errors; for example, a single touch with too large a contact area can be detected as two separate touches. We suspect that some of these detection errors might be eliminated with a larger sensor grid, i.e. a larger sensing surface. As for the diamond size and spacing, we chose the values recommended by the original research project. Future work could look into replacing blob detection with other algorithms for detecting touches.

- Missing opportunities provided by the multi-touch kit: Many current interactions we hae can be done with simpler inputs. For example, taps/presses can be detected using a fabric button or capacitive touch sensing. Though we do use touch coordinates to decide whether the touches are the same as before for each time

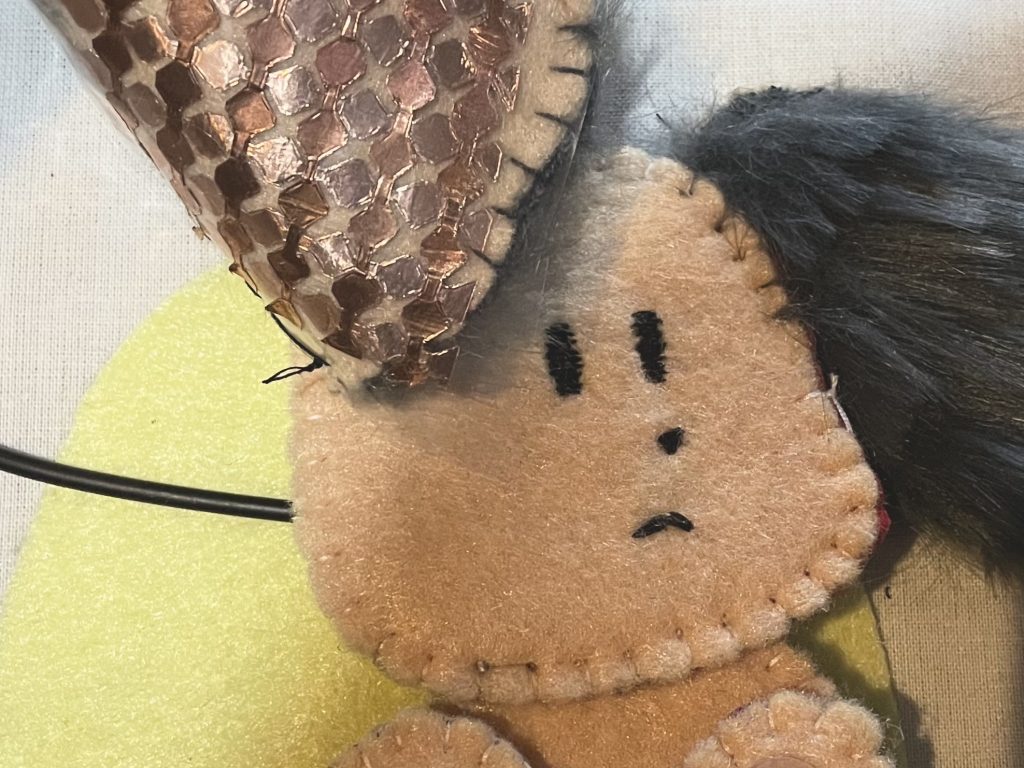

loop()is called, we are not taking advantages of the rich possibilities offered by location information. Further works could explore more gestures such as direction slides, pinches, and other gestures that a 2D touch screen can recognize. One thing to keep in mind here is that although we are mimicking a touch screen using fabric, we should still remember the unique tangible interaction opportunities that soft fabric brings. For example, pinching fabric is drastically different from a hard touch screen as the fabric will be pinched/folded as well. - Unnatural interactions compared with interacting with a real puppy: Right now the sensor grid is placed behind the ear of the puppy and thus makes the interaction of reaching to the back very natural. It was a decision between the aesthetics and the normality of interactions, and we chose to hide the sensor grid. A limitation of the multi-touch kit is that fingers must directly contact with the top conductive layer. The question about using resistive sensing technologies instead of the capacitive one that we chose was raised, and we believe that resistive sensing complicates the fabrication process and causes a more unnatural interaction as it requires firmly pressings on the sensor. For future work, one could use conductive thread to directly sew the sensors onto the fabric and to have the sensors at more natural locations.

Due to limited resources, we weren’t able to integrate as many different textures as we hoped for. Moving forward, we believe that adding more textures to the body of the puppy would allow a more diverse tactile experience.

Sources

- Hardware requirements and schematics can be found in the tutorial by the original research project.

- Software source code (the README has instructions on using the Arduino and Processing sketches):

Group member contributions

Equal contributions:

- Preliminary research

- Project scope definition

- Storybook storyline

- Interaction design

- Testing/prototyping the sensor grid

- Weekly reports

- Final system troubleshooting

Catherine’s additional contribution:

- Software implementation

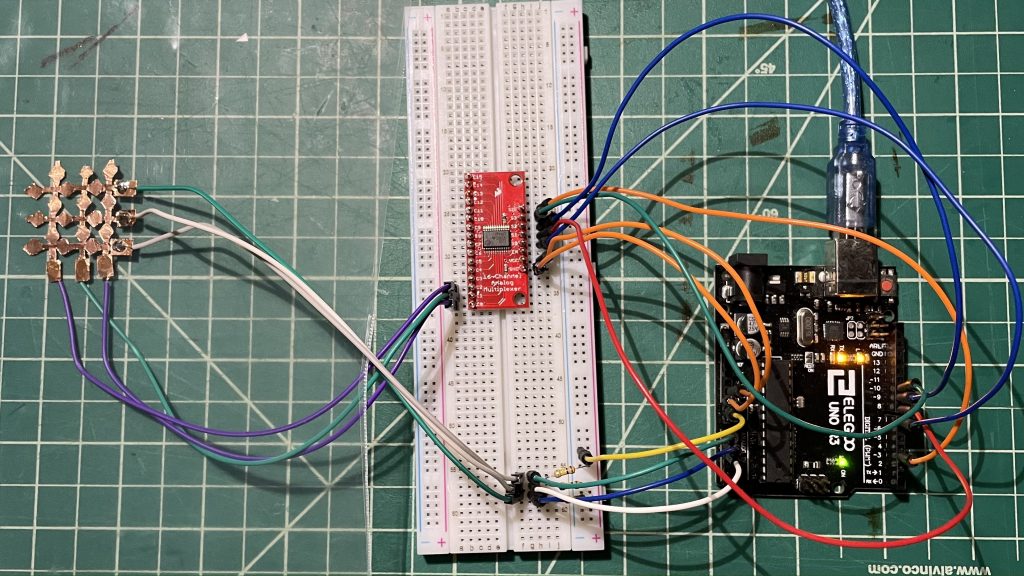

- Troubleshooting on a breadboard

Yanwen’s additional contribution:

- Fabrication of the fabric page

- Integration of soft technology components with fabric materials

References

Narjes Pourjafarian, Anusha Withana, Joseph A. Paradiso, and Jürgen Steimle. 2019. Multi-Touch Kit: A Do-It-Yourself Technique for Capacitive Multi-Touch Sensing Using a Commodity Microcontroller. In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology (UIST ’19). Association for Computing Machinery, New York, NY, USA, 1071–1083. DOI:https://doi.org/10.1145/3332165.3347895

Jie Qi and Leah Buechley. 2010. Electronic popables: exploring paper-based computing through an interactive pop-up book. In Proceedings of the fourth international conference on Tangible, embedded, and embodied interaction (TEI ’10). Association for Computing Machinery, New York, NY, USA, 121–128. DOI:https://doi.org/10.1145/1709886.1709909

Irene Posch. 2021. Crafting Stories: Smart and Electronic Textile Craftsmanship for Interactive Books. In Proceedings of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction (TEI ’21). Association for Computing Machinery, New York, NY, USA, Article 100, 1–12. DOI:https://doi.org/10.1145/3430524.3446076

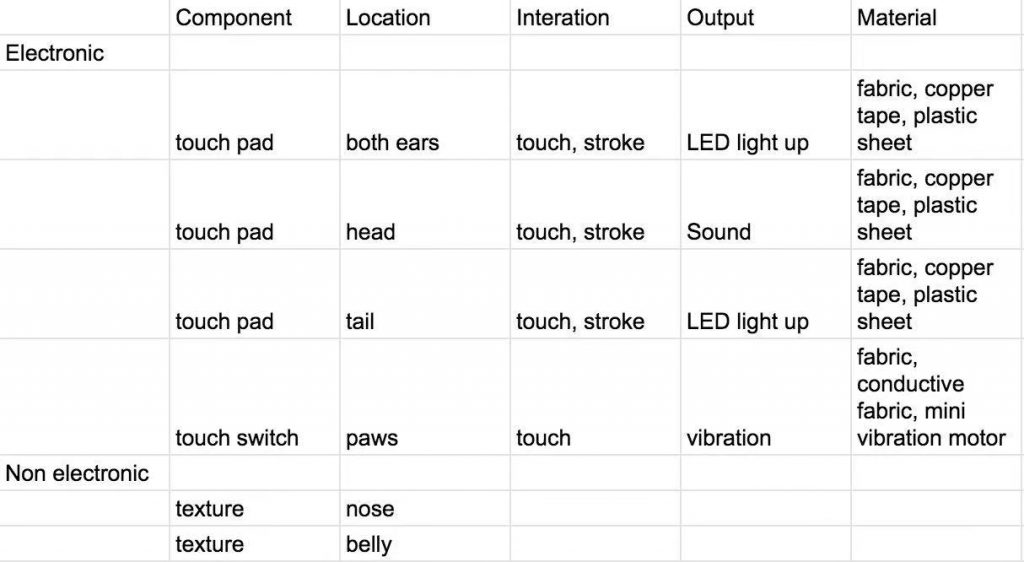

We used the Multi-Touch Kit Software Toolkit and attached the sensor grid to the back of the left ear of the puppy. The only other digital input is a fabric button hidden underneath the puppy’s belly.

For outputs, we combined visual, audio, and haptic feedback using LEDs, the laptop speaker, and vibration motors.

To minimize the feeling that every action produces the same expected change, we implemented a relatively naive state machine so that the puppy could be in different moods.

Due to time constraints, we used conductive copper tape instead of conductive yarn to fabricate the sensor grid. Between aesthetics and the naturalness of interactions, we chose aesthetics and hid the sensor grid. If we had fabricated the sensor grid using conductive yarn, we could have interacted with the top of the furry ears with gestures more similar to how one would pet a puppy.

Due to material constraints, we weren’t able to integrate as many different textures as we would have liked. Adding more textures to the body of the puppy would allow a more diverse tactile experience.

This is only a prototype of a single page. We envisioned a puppy-themed interactive book in which the interactive puppy acts like a bookmark. Every page is a different setting, and placing the puppy on the page triggers the start of the interaction to tell stories about the puppy’s different behaviors and reactions in different settings.

We programmed the microcontrollers, and our setup involves two Arduinos:

- One is connected to the multi-touch grid. This Arduino is controlled by a Processing sketch and sends signals to the other Arduino for outputs other than sounds.

- One is connected to all other input and output components: NeoPixel LEDs, vibration motors, and fabric buttons. This Arduino is controlled by an Arduino sketch and receives signals from the other Arduino.

The startHappyLed() function starts a sequence in which all the colored LEDs blink synchronously, followed by a sequence in which only a subset of these colored LEDs blinks at a time.

The startUnhappyLed() function starts a sequence in which all the red LEDs blink synchronously, followed by a sequence in which only a subset of these red LEDs blinks at a time.
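The two-phase blink pattern described above can be illustrated by generating the on/off frames for a small strip of LEDs. This Python sketch is not the Arduino code behind startHappyLed() or startUnhappyLed(); the function name `blink_frames`, the number of blinks, and the subset size are assumptions, and colors and timing are left out.

```python
def blink_frames(num_leds=4, full_blinks=2, subset_size=2):
    """Return a list of frames; each frame is a tuple of booleans (True = LED on)."""
    all_on = (True,) * num_leds
    all_off = (False,) * num_leds
    frames = []
    # Phase 1: every LED blinks on and off together.
    for _ in range(full_blinks):
        frames += [all_on, all_off]
    # Phase 2: only one subset of neighboring LEDs blinks at a time.
    for start in range(0, num_leds, subset_size):
        subset = tuple(start <= i < start + subset_size for i in range(num_leds))
        frames += [subset, all_off]
    return frames
```

A happy and an unhappy sequence could share the same frames and differ only in the color applied to the LEDs that are on.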

On the page fabrication side:

We finished making the page and started integrating the elements:

Full page

Touch grid close up

Besides the multi-touch grid, we decided to use 4 NeoPixels and 1 fabric button to control the vibration disc. The video shows the effect of the NeoPixels and multi-touch interactions. We will integrate vibration feedback later.


We ran into trouble when trying to detect sliding behavior, partly because the grid has a low resolution and cannot distinguish a slide from a touch that covers multiple positions at the same time. Thus we decided to set sliding aside and use the other interactions.

Here are the output behaviors we planned out for now:

We also tested out the vibration motor and LED for preparing to integrate with the fabric prototype.

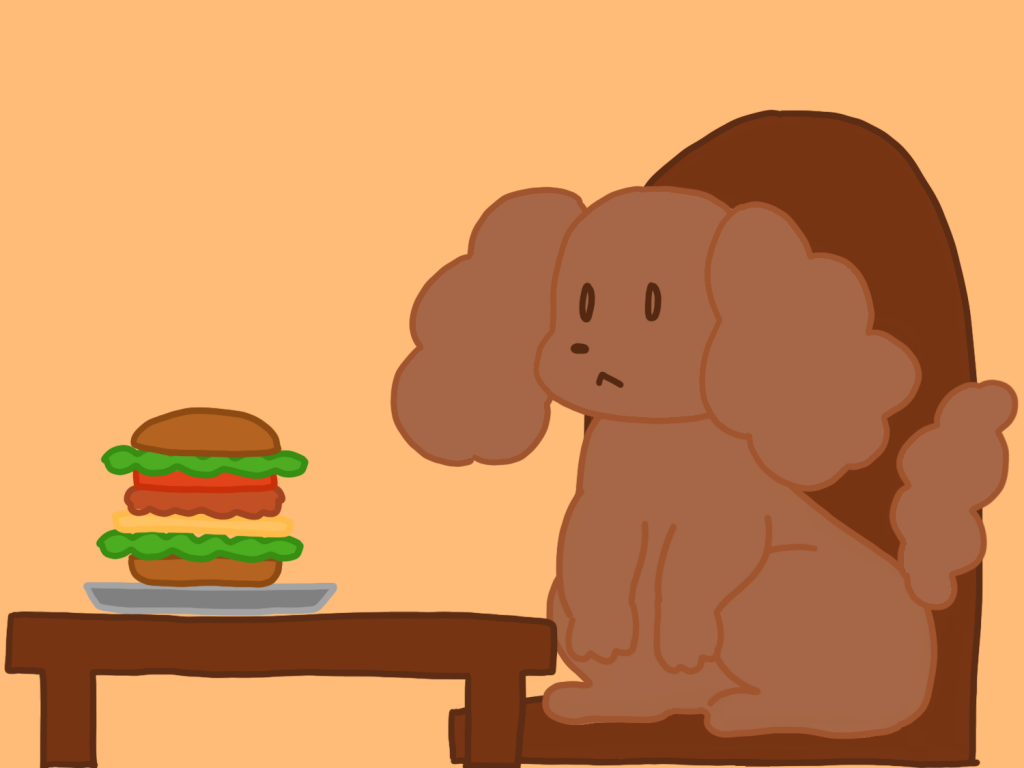

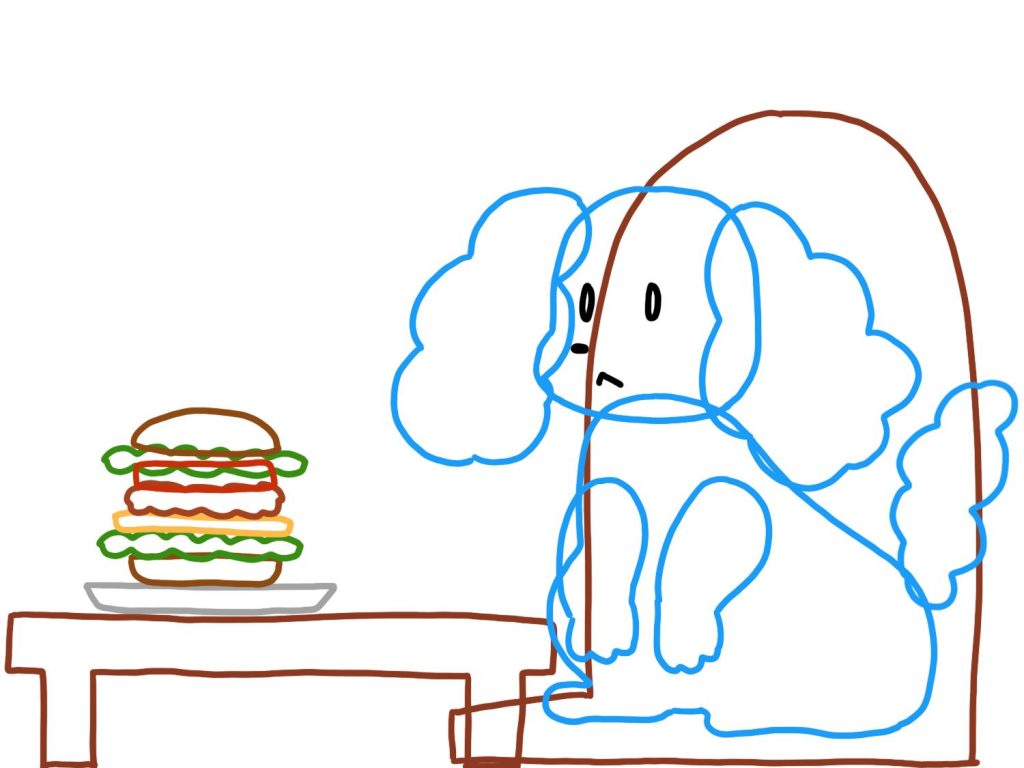

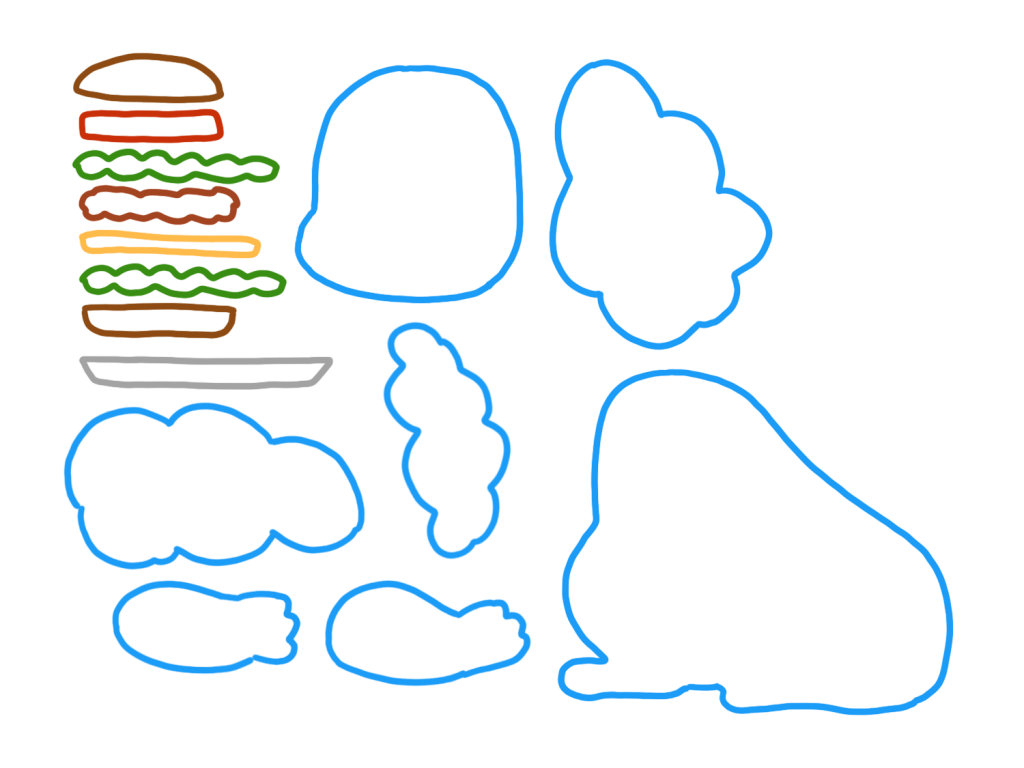

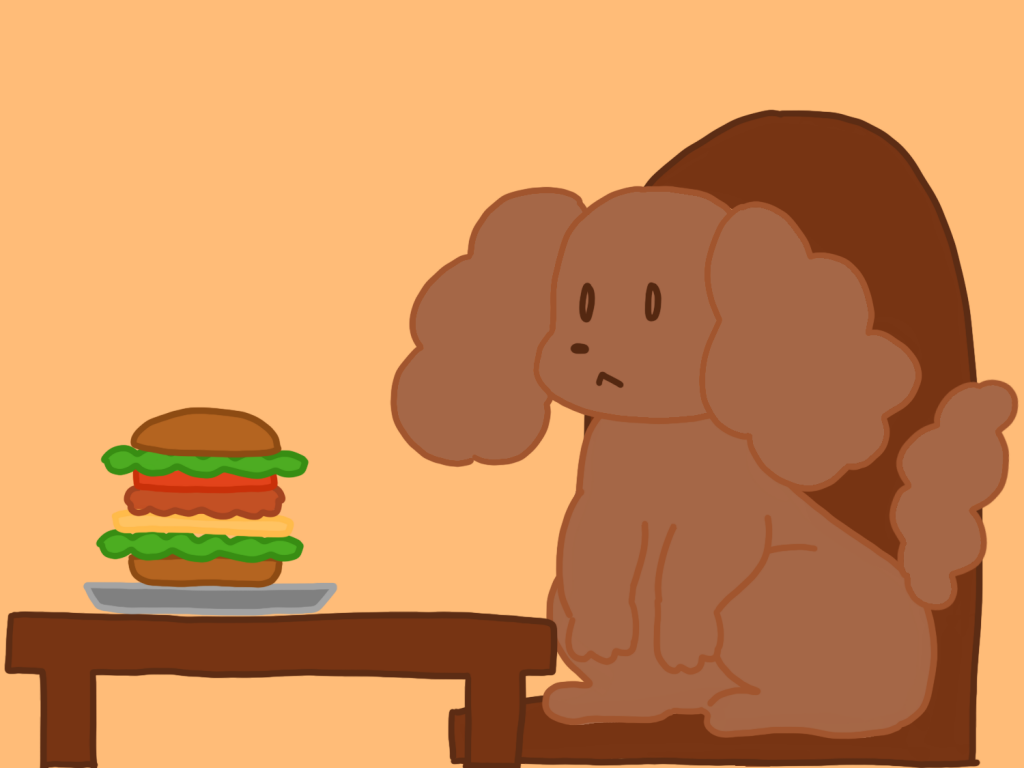

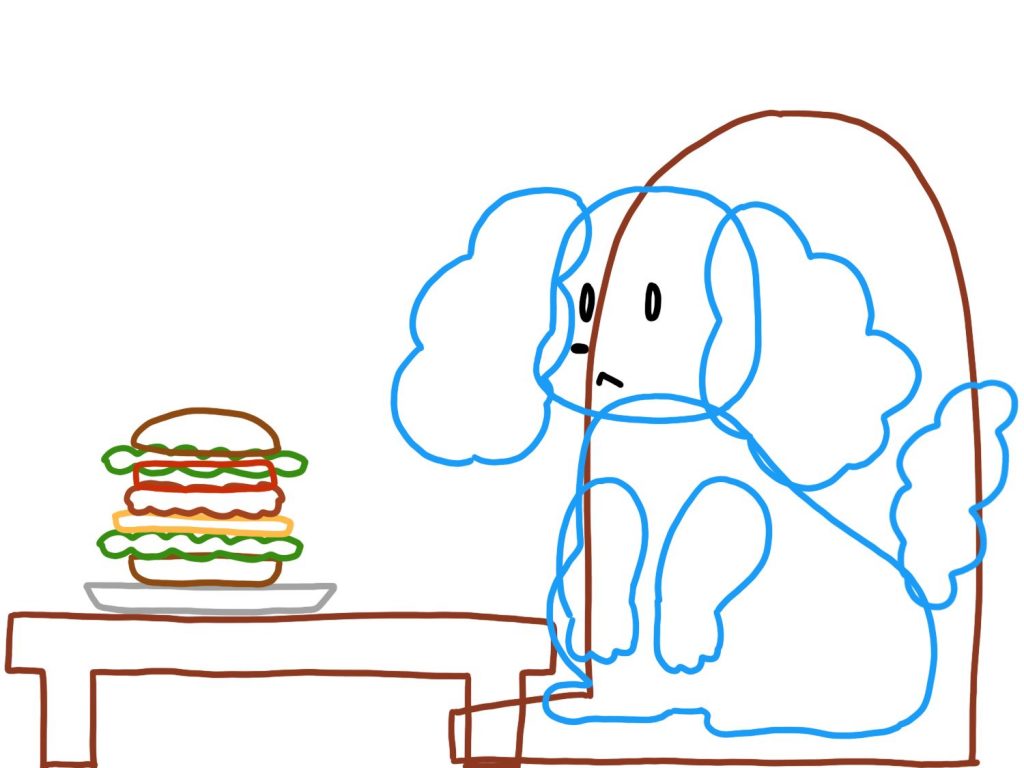

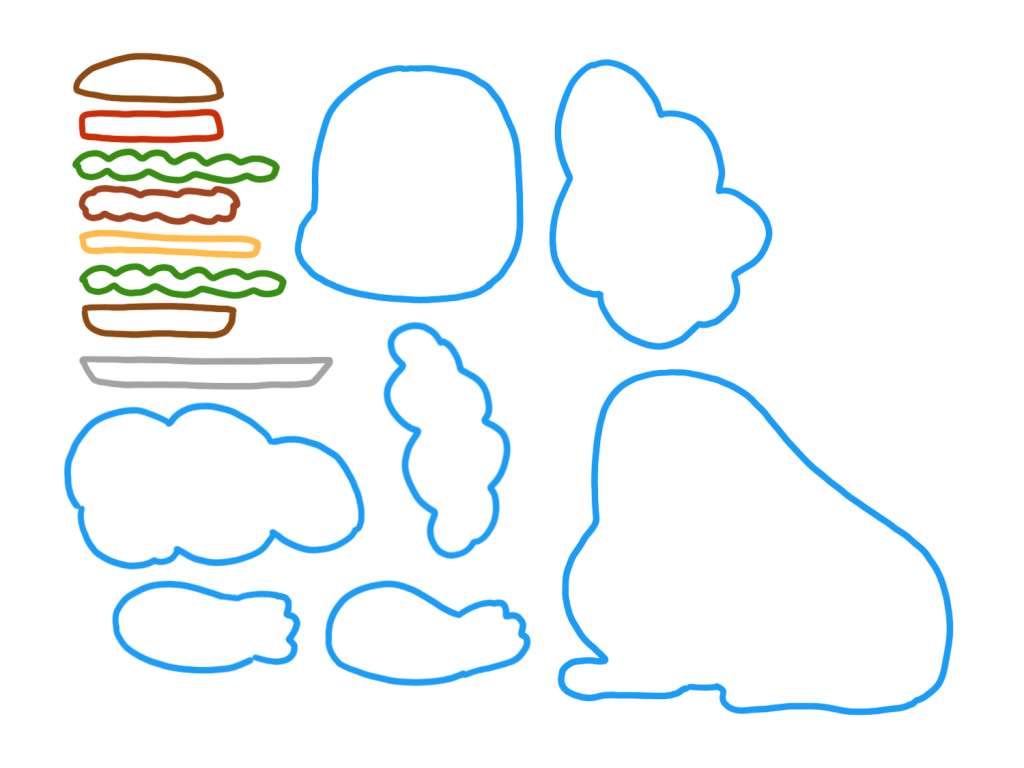

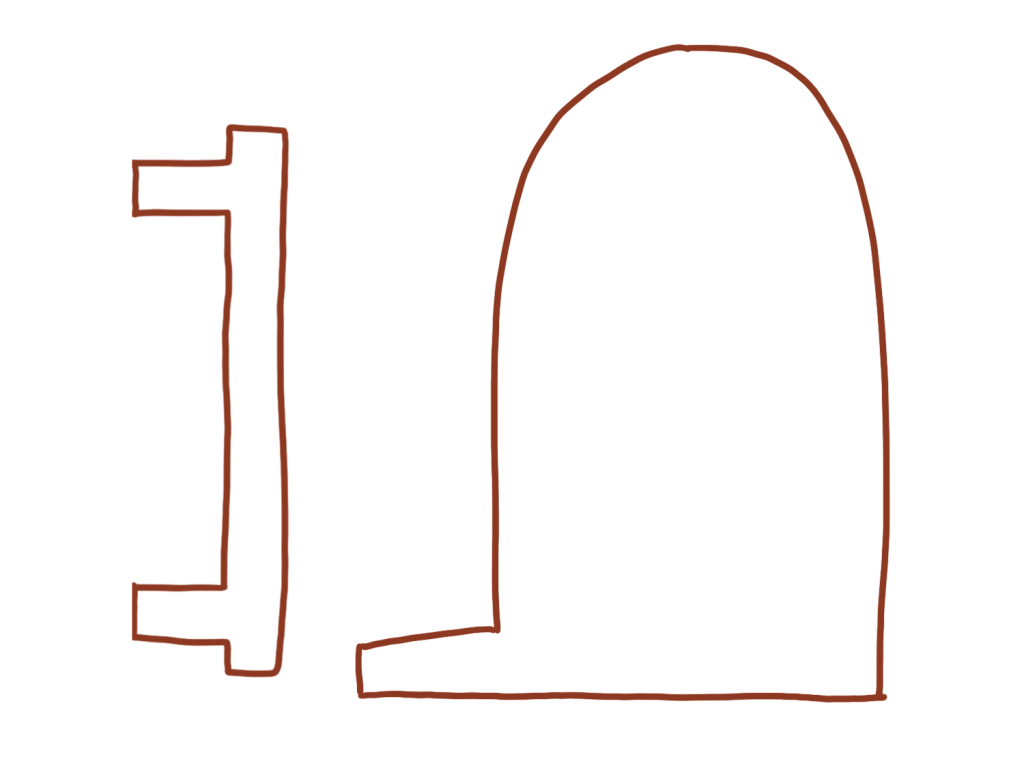

For making the prototype, we made a sample page based on the scenario when the puppy is sitting beside the table and wants to eat human food. We made a sample sketch and the paper template for cutting the fabric pieces.

For the prototype and final demo, we will be working with a single fabric page and one multi-touch pad (positioned on one ear), but the full vision is a book with multiple pages showing different scenes of the puppy around the house.

For this week, we will be working on:

– Developing possible different states of the puppy

– Testing out the API with actual events

– Fabricating and assembling the fabric pieces

While waiting for the components to be ready for pickup, we will continue on finishing and refining these listed interactions and outputs before applying them to the actual making of the page.

For the upcoming week, we will be working on producing our own API for the data as well as starting to fabricate initial pieces for the fabric page.

We tried adding a fabric layer (felt, muslin, and t-shirt fabric) on top of the sensing grid to see if detections could still be made. Unfortunately it did not work; even layering a single sheet of paper on top significantly weakens the raw data.

After looking into the library together with Garth, we took another look into the blob detection library used. The next step on the software side would be writing code to detect swiping and the speed of swiping. We plan to use the normalized coordinates of the blob’s center returned by the blob detection function.
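As a rough illustration of the planned swipe detection, the speed and direction of a swipe can be estimated from the first and last samples of a blob center. This Python sketch assumes each sample is a `(time, x, y)` tuple with x and y normalized to the range 0 to 1; the function name `swipe_velocity` and the sample format are hypothetical and not part of the blob detection library.

```python
import math


def swipe_velocity(samples):
    """Estimate swipe speed and direction from blob-center samples.

    samples: list of (t, x, y) tuples, t in seconds, x and y normalized.
    Returns (speed in normalized units per second, angle in degrees),
    or None when there are too few samples or no time has elapsed.
    """
    if len(samples) < 2:
        return None
    t0, x0, y0 = samples[0]
    t1, x1, y1 = samples[-1]
    dt = t1 - t0
    if dt <= 0:
        return None
    dx, dy = x1 - x0, y1 - y0
    speed = math.hypot(dx, dy) / dt           # distance covered per second
    angle = math.degrees(math.atan2(dy, dx))  # 0 degrees points along +x
    return speed, angle
```

A threshold on the returned speed could then separate a rapid swipe from a slow stroke, for example to reflect that puppies generally dislike rapid swipes.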

We discussed possible page designs and the materials needed. Below is a rough sketch of the possibilities.

For the upcoming week, we will work on different tasks:

- Catherine: Work on the software.

- Yanwen: Investigate output mappings.

We were able to figure out a somewhat efficient way to create the sensor grid, fabricated a 6×6 sensor grid (which still took 2h+), and got it connected and working with both the Arduino code and the Processing code.

Though some issues remain:

- Light touches are rarely detected, and accuracy increases with firm pressing. We played a little with the tunable parameters and thresholds, but the issue remains. We think a potential cause is the fabrication of the sensor grid. The 3×3 sensor we had has copper tape taped on both sides of a plastic sheet, but this 6×6 sensor has one layer of copper tape on paper, a plastic sheet layered on top of that bottom sheet, and the second layer of copper tape taped on top of the plastic sheet.

- Multitouch is far from robust. Again, we think the fabrication could be the issue. Because we have to press hard on the grid to get a detection, it was difficult to have a single contact point from a single touch.

- Sliding/stroking behavior does not show up clearly. We think one reason could be that with sliding/stroking, the finger stays on one contact position for too short a period of time, so we need to go back and look at the paper again for more information. Similarly, the fabrication could be causing the issue.

Action items for next week:

- Remake the 6×6 grid using the 3×3 method for a more robust sensor grid

- Look at the paper again

- Decide on what gestures can be detected

Four week plan

| Week | Goals |
| --- | --- |
| 4.12-4.18 | Decide on what gestures can be detected |
| 4.19-4.25 | Test sound output; decide uses of different outputs (auditory and visual); decide sensor grid materials: conductive tape/conductive yarn |
| 4.26-5.2 | Start on fabricating the page from a textile learning book |
| 5.3-5.9 | Finish fabricating the page from a textile learning book |

We were also able to download and run the example code from both the Arduino and Processing libraries. However, we ran into trouble when trying to get readings from the Processing code:

The detection seemed to be very unstable, and the reasons might be:

- Grid is too small (3×3)

- Grid fabrication may be incorrect

- Potential wiring issues

We will try to sort out the problems before moving on to customizing the outputs.

We also brainstormed how to integrate the technology into our fabric book in a specific scenario. We decided to extend the Jellycat If I were a Puppy Board Book and integrate digital tactile inputs and digital outputs (sound, lights, and haptics) with materials that offer different tactile experiences (rubbery, velvety, furry, etc.). Below is a sketch of what we envision.

One thing we discussed was that this multi-touch kit enables gesture recognition, which is something we want to take advantage of. Because we didn’t want to simply make a fabric touch screen, we decided to focus on the properties of touches. For example, puppies don’t generally like a rapid swipe, and gentle touches are preferred over hard presses. The puppy’s preferences will be communicated through digital feedback.

There are also possibilities in mapping different sound properties to the location of touches, but we want to first focus on properties of touches/swipes.

For this week, our parts should be arriving soon, and we will each experiment with the multi-touch kit and create our first version of a multi-touch sensor. We plan to use conductive tape and/or conductive fabric for the first prototype.

Narjes Pourjafarian, Anusha Withana, Joseph A. Paradiso, and Jürgen Steimle. 2019. Multi-Touch Kit: A Do-It-Yourself Technique for Capacitive Multi-Touch Sensing Using a Commodity Microcontroller. In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology (UIST ’19). Association for Computing Machinery, New York, NY, USA, 1071–1083. DOI:https://doi.org/10.1145/3332165.3347895

Electrick uses electric field tomography in concert with an electrically conductive material to detect touch inputs on large surfaces without depending on the distribution of the electrodes. Its follow-up work, Pulp Nonfiction, further explores using Electrick with paper interfaces to track finger and writing-instrument inputs.

We took inspiration from this and wondered if we could use the same system for fabric and explore where to place conductive yarn and how dense the conductive yarn should be. One immediate difficulty we see with this approach is that Electrick uses a custom sensor board and its own sensing system, so we are concerned about spending a long time reinventing the wheel, and about whether we would even be able to do so.

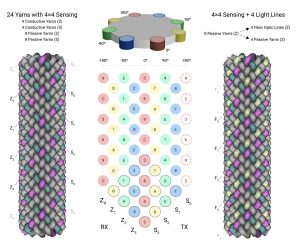

Another inspiration we got was the I/O Braid. It uses conductive and passive yarns to create a helical sensing matrix. The I/O Braid is able to detect many gestures, such as twisting, flicking, and sliding, based on capacitive signal strengths. We wanted to explore how we can apply the same technique in 2D space. The paper mentions using a PSoC® 4 S-Series Pioneer Kit, but we weren’t able to find much information about how it differs from other capacitive sensor chips.

For both approaches, there is much to explore to achieve the same goal of detecting gestures (even something as simple as detecting where the textile is being touched, i.e. a textile touchscreen) on a textile surface. This adds to our kids’ story book narrative as it allows a wider interaction space and thus contributes to a likely open-ended book, since we could combine this position and touch sensing technique with the interaction of placing assets onto, or just directly touching, different locations of the page.

For the first approach, we can’t really think of a clear proof-of-concept experiment as of now because of our lack of technical understanding in electric field tomography.

For the second approach, the proof-of-concept experiment could be hooking up a capacitive sensing board with several different sensing ‘matrices’ made of conductive copper tape (thicker and easier to work with than conductive yarn for now).

Our aim for the outcome is to focus on one method of sensing touch input and extend the interaction model from the basic “touch trigger outputs” to capturing different types of touch inputs (like the microinteractions from the second paper) or location-based inputs.

We’d love to have some feedback and advice, especially on the feasibility of this direction and anticipated technical challenge, and more related technologies/references.

References:

Yang Zhang, Gierad Laput, and Chris Harrison. 2017. Electrick: Low-Cost Touch Sensing Using Electric Field Tomography. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17). Association for Computing Machinery, New York, NY, USA, 1–14. DOI:https://doi.org/10.1145/3025453.3025842

Yang Zhang and Chris Harrison. 2018. Pulp Nonfiction: Low-Cost Touch Tracking for Paper. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, Paper 117, 1–11. DOI:https://doi.org/10.1145/3173574.3173691

Alex Olwal, Jon Moeller, Greg Priest-Dorman, Thad Starner, and Ben Carroll. 2018. I/O Braid: Scalable Touch-Sensitive Lighted Cords Using Spiraling, Repeating Sensing Textiles and Fiber Optics. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology (UIST ’18). Association for Computing Machinery, New York, NY, USA, 485–497. DOI:https://doi.org/10.1145/3242587.3242638

Alex Olwal, Thad Starner, and Gowa Mainini. 2020. E-Textile Microinteractions: Augmenting Twist with Flick, Slide and Grasp Gestures for Soft Electronics. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–13. DOI:https://doi.org/10.1145/3313831.3376236
