Problem:

For people who are blind or have impaired vision, perceiving the quality and form of the spaces they inhabit can be difficult (this project was inspired by Daniel Kish’s TED Talk, which Ghalya posted in Looking Outward). A solution could apply at various scales, both helping the visually impaired with way-finding and letting them experience the different spaces they occupy.

A General Solution:

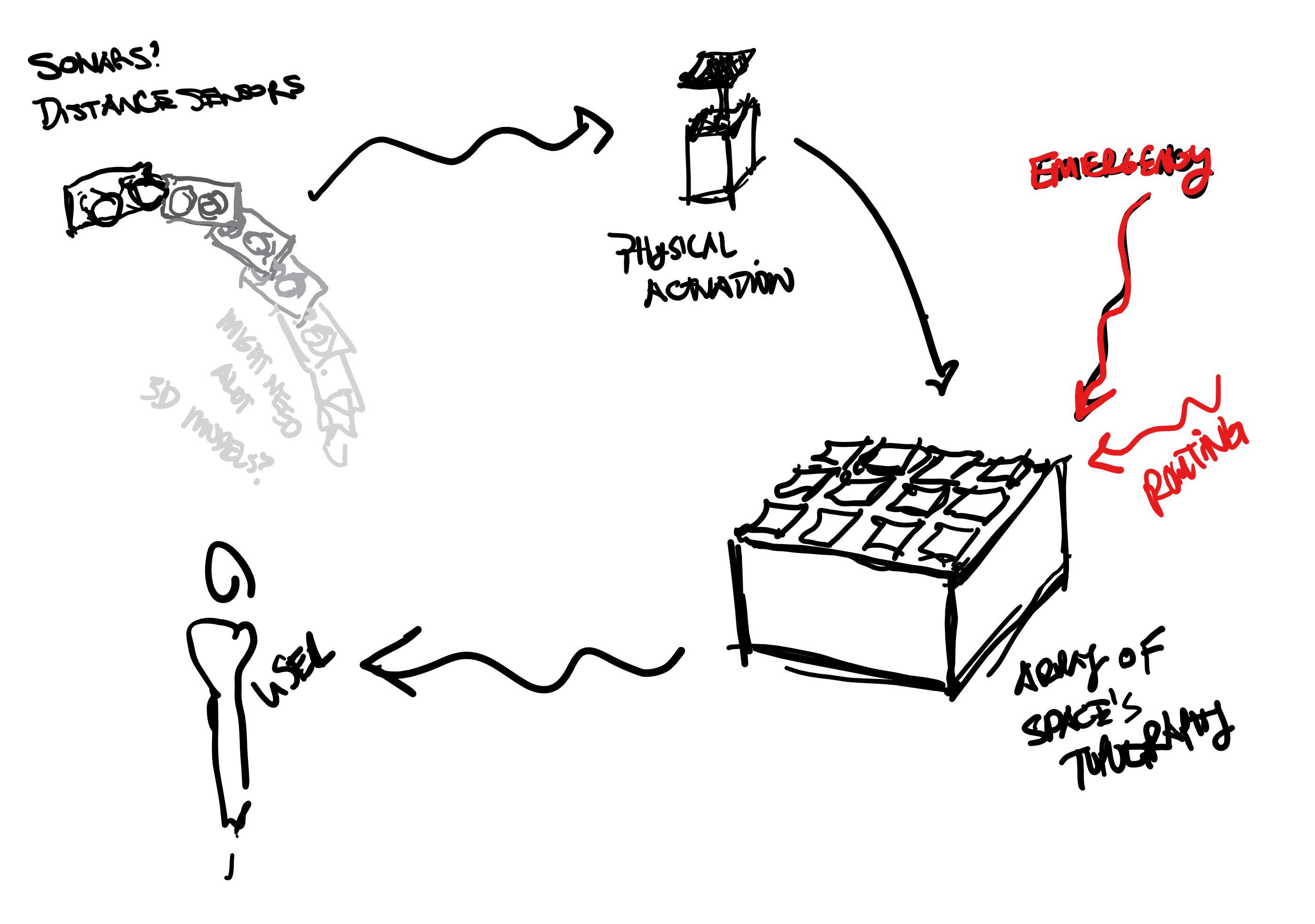

A device would scan a space using sonar, LIDAR, photography, 3D models, etc., process that data, and map it onto an interactive surface that is actuated to represent the space. At a larger scale, the user could understand the overall space they are in; at a smaller scale, they could identify potential tripping hazards as they move through an environment. Ideally, the device would be able to switch between scales to address different scenarios. Emergency scenarios would also be programmed into the model so that, in the case of fire or other danger, the user could find their way out of the space.
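
As a rough illustration of the scale-switching idea, the sketch below reduces a fine-grained distance map to a coarser grid of pin heights. Everything here is an assumption for illustration: the function name `toPinHeights`, the distance map in metres, the `blockSize` parameter standing in for "scale", and the number of discrete pin `levels`. It takes the nearest reading in each block rather than the average, so a small obstacle such as a tripping hazard is not smoothed away.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: distances[row][col] holds scanned distances in metres.
using Grid = std::vector<std::vector<double>>;

// Collapse each blockSize x blockSize patch into one pin. A larger blockSize
// gives a coarser, room-scale view; blockSize = 1 keeps full detail.
std::vector<std::vector<int>> toPinHeights(const Grid& distances,
                                           std::size_t blockSize,
                                           double maxRange,
                                           int levels) {
    assert(blockSize > 0 && maxRange > 0.0 && levels >= 2);
    const std::size_t rows = distances.size();
    const std::size_t cols = rows ? distances[0].size() : 0;
    const std::size_t outRows = (rows + blockSize - 1) / blockSize;
    const std::size_t outCols = (cols + blockSize - 1) / blockSize;
    std::vector<std::vector<int>> pins(outRows, std::vector<int>(outCols, 0));

    for (std::size_t r = 0; r < outRows; ++r) {
        for (std::size_t c = 0; c < outCols; ++c) {
            // Nearest surface in the block, so small hazards survive downsampling.
            double nearest = maxRange;
            for (std::size_t i = r * blockSize; i < std::min(rows, (r + 1) * blockSize); ++i)
                for (std::size_t j = c * blockSize; j < std::min(cols, (c + 1) * blockSize); ++j)
                    nearest = std::min(nearest, distances[i][j]);
            // Closer surface -> taller pin (0 = flush, levels - 1 = fully raised).
            const double closeness = 1.0 - std::clamp(nearest / maxRange, 0.0, 1.0);
            pins[r][c] = static_cast<int>(closeness * (levels - 1) + 0.5);
        }
    }
    return pins;
}
```

With real hardware, each integer would be translated into an actuator position; the digital solenoids in the proof of concept can only represent two of these levels.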

Proof of Concept:

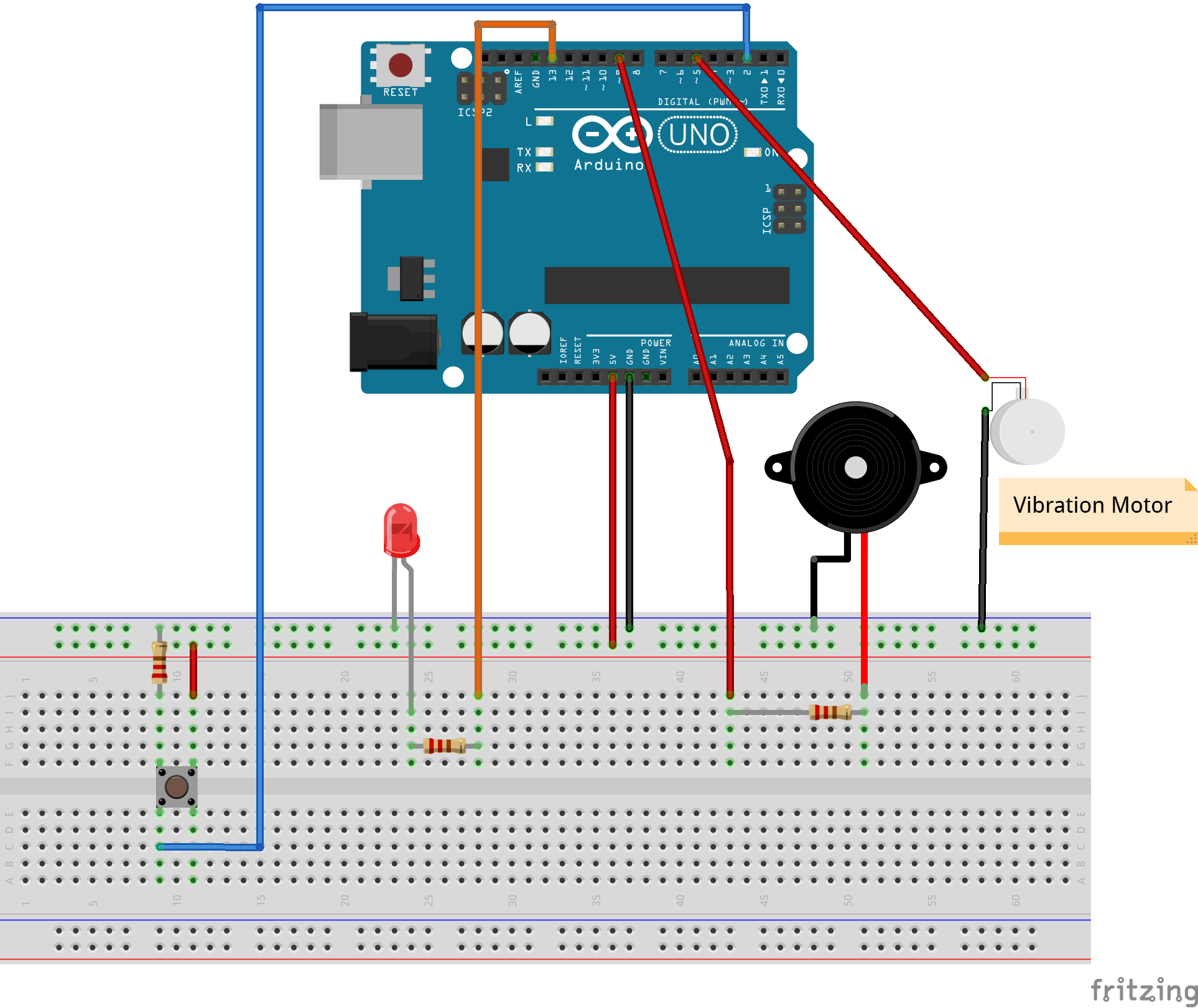

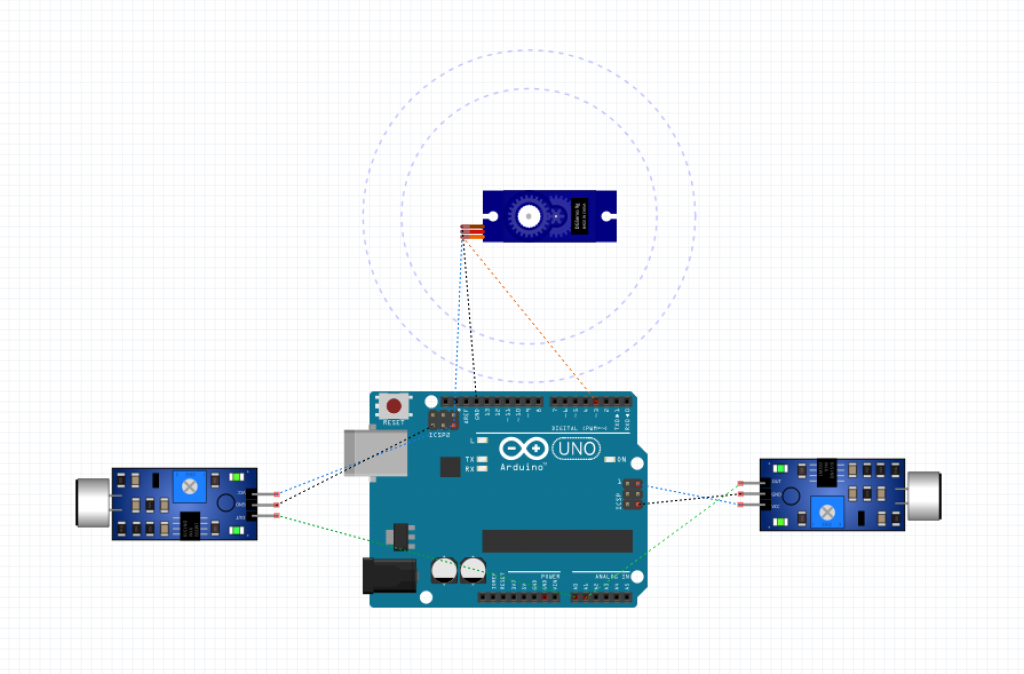

The proof of concept uses an Arduino with potentiometers (ideally sonar or other spatial sensors) as inputs that control a set of solenoids, which stand in for a more extensive network of physical actuators. When a sensor reads a closer distance, its solenoid pops out, and vice versa. The solenoids can only be driven digitally (fully out or fully in); ideally the output would be analog so that the space could be represented more accurately. There are also two switches. One represents an emergency button that alerts the user that there is an emergency. The other represents a routing button (ideally connected to a network, though the user could also turn it on) that makes the solenoids form a path out of the space to safety.
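
One pass of the control logic described above might look like the following. It is a minimal sketch written as plain C++ so it can be checked off-board, not the project's actual Arduino code. On the board, `potValues` would come from `analogRead()` (which returns 0 to 1023 on a standard Arduino) and each solenoid state would be written with `digitalWrite()`. The names `updateSolenoids`, `kCloseThreshold`, and `kEscapePath`, the count of four solenoids, and the convention that a lower reading means "closer" are all hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

constexpr std::size_t kSolenoids = 4;   // assumed number of solenoids
constexpr int kCloseThreshold = 512;    // assumed: readings below this mean "close"

// Assumed escape route: which solenoids are raised to form the path to safety.
constexpr std::array<bool, kSolenoids> kEscapePath = {true, false, false, true};

struct Inputs {
    std::array<int, kSolenoids> potValues;  // stand-ins for distance sensors (0-1023)
    bool emergencySwitch;
    bool routingSwitch;
};

struct Outputs {
    std::array<bool, kSolenoids> solenoidOn;  // true = solenoid popped out
    bool alert;                               // e.g. drives a buzzer or vibration motor
};

Outputs updateSolenoids(const Inputs& in) {
    Outputs out{};                  // all solenoids retracted, no alert
    out.alert = in.emergencySwitch; // the emergency switch only raises the alert
    if (in.routingSwitch) {
        out.solenoidOn = kEscapePath;  // routing overrides the spatial reading
        return out;
    }
    for (std::size_t i = 0; i < kSolenoids; ++i) {
        assert(in.potValues[i] >= 0 && in.potValues[i] <= 1023);
        // Digital-only solenoids: a close reading pops the solenoid out,
        // a far one retracts it.
        out.solenoidOn[i] = in.potValues[i] < kCloseThreshold;
    }
    return out;
}
```

A single threshold is the simplest mapping that fits on/off solenoids; with analog actuators the reading could instead be scaled continuously into a position.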

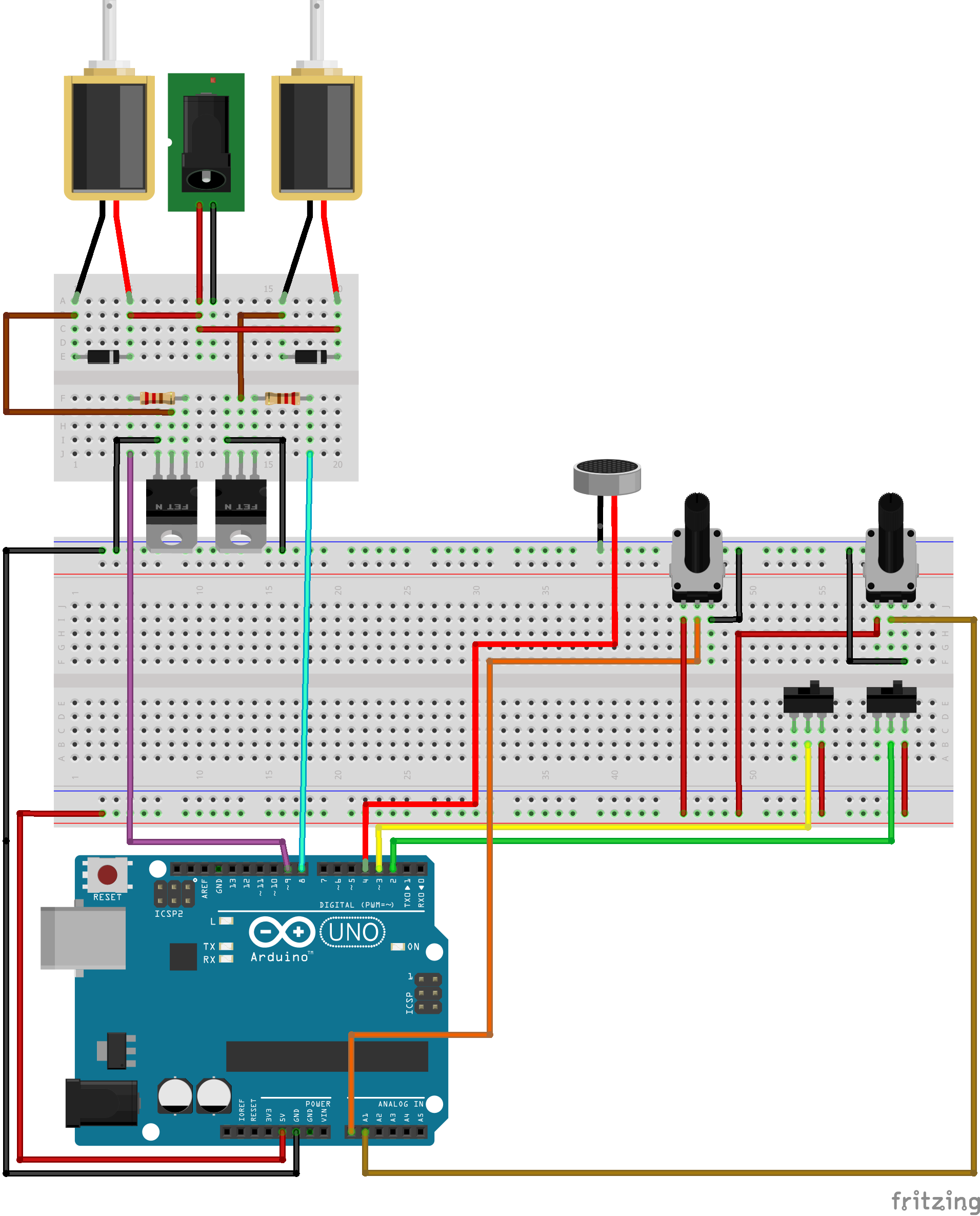

Fritzing Sketch:

The Fritzing sketch shows how the proof of concept’s solenoids are wired to a separate power source and set up to receive signals from the Arduino, as well as how all of the input devices are connected to the Arduino to send in data. The emergency transducer is represented by a microphone, which has a similar wiring diagram. Not pictured: the Arduino and the battery jack would both have to be connected to a battery source.

Proof of Concept Sketches:

The spatial sensor scans the space the user occupies, and the scan is actuated into a physical representation. Arraying many actuators gives the user more detail to touch and perceive. This system would be supplemented by an emergency system that both alerts the user that an emergency is occurring and guides them to safety.
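
The source does not say how the route to safety would be computed. One plausible approach, swapped in here purely for illustration, is breadth-first search over an occupancy grid derived from the scan: it finds a shortest path of free cells from the user to an exit, and the pins along that path are raised so the route can be traced by touch. The grid representation, `escapeRoute`, and `routeToPins` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <queue>
#include <utility>
#include <vector>

using Cell = std::pair<int, int>;  // (row, col)
using BoolGrid = std::vector<std::vector<bool>>;

// Breadth-first search on a grid where blocked[r][c] == true means an obstacle.
// Returns the cells from start to goal inclusive, or an empty vector if no route exists.
std::vector<Cell> escapeRoute(const BoolGrid& blocked, Cell start, Cell goal) {
    const int rows = static_cast<int>(blocked.size());
    const int cols = rows ? static_cast<int>(blocked[0].size()) : 0;
    assert(start.first >= 0 && start.first < rows && start.second >= 0 && start.second < cols);
    assert(!blocked[start.first][start.second]);

    std::vector<std::vector<Cell>> parent(rows, std::vector<Cell>(cols, Cell{-1, -1}));
    BoolGrid seen(rows, std::vector<bool>(cols, false));
    std::queue<Cell> frontier;
    frontier.push(start);
    seen[start.first][start.second] = true;
    const int dr[] = {1, -1, 0, 0};
    const int dc[] = {0, 0, 1, -1};

    while (!frontier.empty()) {
        const Cell cur = frontier.front();
        frontier.pop();
        if (cur == goal) {
            // Walk parent links back to the start, then reverse.
            std::vector<Cell> path;
            for (Cell c = goal; c != Cell{-1, -1}; c = parent[c.first][c.second])
                path.push_back(c);
            std::reverse(path.begin(), path.end());
            return path;
        }
        for (int k = 0; k < 4; ++k) {  // 4-connected neighbours
            const int r = cur.first + dr[k];
            const int c = cur.second + dc[k];
            if (r < 0 || r >= rows || c < 0 || c >= cols) continue;
            if (blocked[r][c] || seen[r][c]) continue;
            seen[r][c] = true;
            parent[r][c] = cur;
            frontier.push(Cell{r, c});
        }
    }
    return {};  // no route found
}

// Raise the pins that lie on the route so the user can follow it by touch.
BoolGrid routeToPins(const std::vector<Cell>& route, int rows, int cols) {
    BoolGrid pins(rows, std::vector<bool>(cols, false));
    for (const Cell& c : route) pins[c.first][c.second] = true;
    return pins;
}
```

Breadth-first search is a natural fit because every grid step has the same cost; a real system with hazards of varying severity (smoke, heat) would likely need a weighted search instead.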
