Author: mvlachos@andrew.cmu.edu

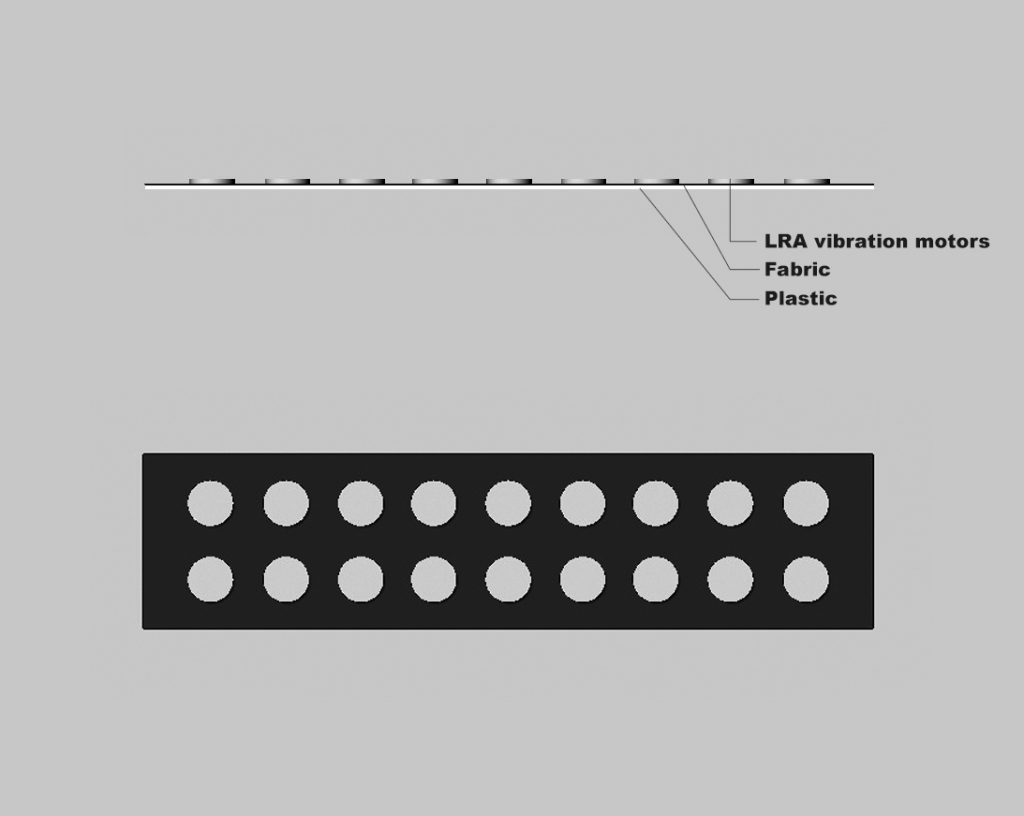

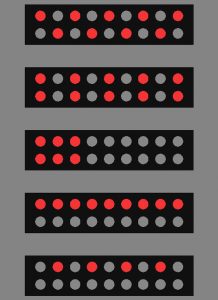

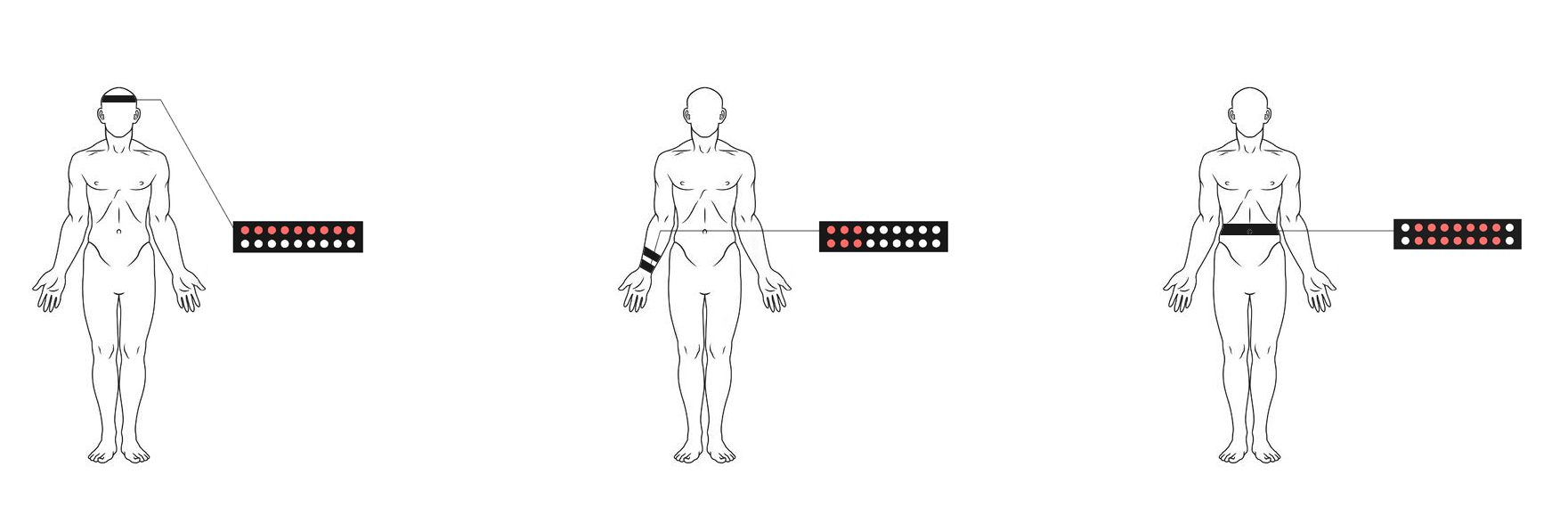

Vibrotactile Sensations: Exploring their impact on the human body

Problem: Many people today experience enormous stress because of the fast-paced, competitive rhythms of a society trying to survive the uncertainty that Covid-19 brought. Stress is a psycho-emotional condition that most of the time surfaces through the body in various forms: headaches, stomach pains, rapid pulse and breathing, sometimes even inertia. Many people turn to medication in search of relief, neglecting the therapeutic effect that a simple touch may have on their body. Alternative approaches to medicine and well-being, such as pain and stress management through meditation, therapeutic massage and reflexology, are neglected by most people, especially in Western societies. Yet sometimes a simple touch and push between the eyes may be enough to stop a strong headache, or to release significant stress and fall asleep.

Solution: For my final project, I propose the design and computation of an e-bandage that can be wrapped around different areas of the body (head, stomach, arm) and offer different vibrotactile sensations that can calm stress, treat pain or even signal an alert. In this project, I will explore different vibration effects and their impact on the human body by varying factors such as the spatial configuration of the vibrators and the distance between them, their intensity and frequency, and their location on the body. I intend to use two arrays of LRA vibration motors, in which different combinations of motors are activated for different haptic patterns. A touchpad matrix will be used to choose which effect is executed.

Components:

- 8 x LRA vibrating motors

- 8 x Transistors to increase power output of the motor

- 8 x Adafruit haptic motor drivers or 8 x SparkFun Haptic Motor Driver (?)

- conductive thread

- optional: Flora microcontroller or LilyPad Arduino
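The idea of activating different combinations of motors for different haptic patterns, selected from a touchpad matrix, could be sketched as a small lookup table. This is only an illustration under stated assumptions: the 2 x 4 motor layout, the 2-column touchpad, the pattern names, intensities and timings are all hypothetical and do not come from the project itself. The code is plain C++ so the logic can be tried on a computer before it is ported to a microcontroller.

```cpp
#include <stddef.h>
#include <stdint.h>

// One step of a haptic pattern. Bit i of motorMask stands for motor i;
// motors 0-3 are assumed to form the first array and 4-7 the second.
struct HapticStep {
    uint8_t motorMask;    // which of the 8 motors vibrate during this step
    uint8_t intensity;    // drive level on an assumed 0-255 scale
    uint16_t durationMs;  // how long the step lasts
};

struct HapticPattern {
    const char* name;
    const HapticStep* steps;
    size_t stepCount;
};

// Hypothetical "calm" effect: a slow wave travelling along both arrays in parallel.
static const HapticStep kCalmSteps[] = {
    {0x11, 80, 400}, {0x22, 80, 400}, {0x44, 80, 400}, {0x88, 80, 400},
};

// Hypothetical "alert" effect: all motors pulse at full intensity, then pause.
static const HapticStep kAlertSteps[] = {
    {0xFF, 255, 120}, {0x00, 0, 120},
};

static const HapticPattern kPatterns[] = {
    {"calm", kCalmSteps, 4},
    {"alert", kAlertSteps, 2},
};
static const int kPatternCount = 2;
static const int kTouchpadColumns = 2;  // assumed width of the touchpad matrix

// Maps a touched cell of the touchpad matrix to a pattern index, or -1 if
// the cell is outside the matrix or has no pattern assigned to it.
int patternForTouch(int row, int col) {
    if (row < 0 || col < 0 || col >= kTouchpadColumns) return -1;
    int index = row * kTouchpadColumns + col;
    return index < kPatternCount ? index : -1;
}

// True if the given motor (0-7) vibrates during this step.
bool motorIsOn(const HapticStep& step, int motor) {
    return ((step.motorMask >> motor) & 1) != 0;
}

// Total running time of one pass through a pattern.
uint32_t patternDurationMs(const HapticPattern& pattern) {
    uint32_t total = 0;
    for (size_t i = 0; i < pattern.stepCount; ++i) {
        total += pattern.steps[i].durationMs;
    }
    return total;
}
```

Keeping the patterns as data rather than code would make it easy to experiment with spacing, intensity and timing, which are exactly the factors the project sets out to explore.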

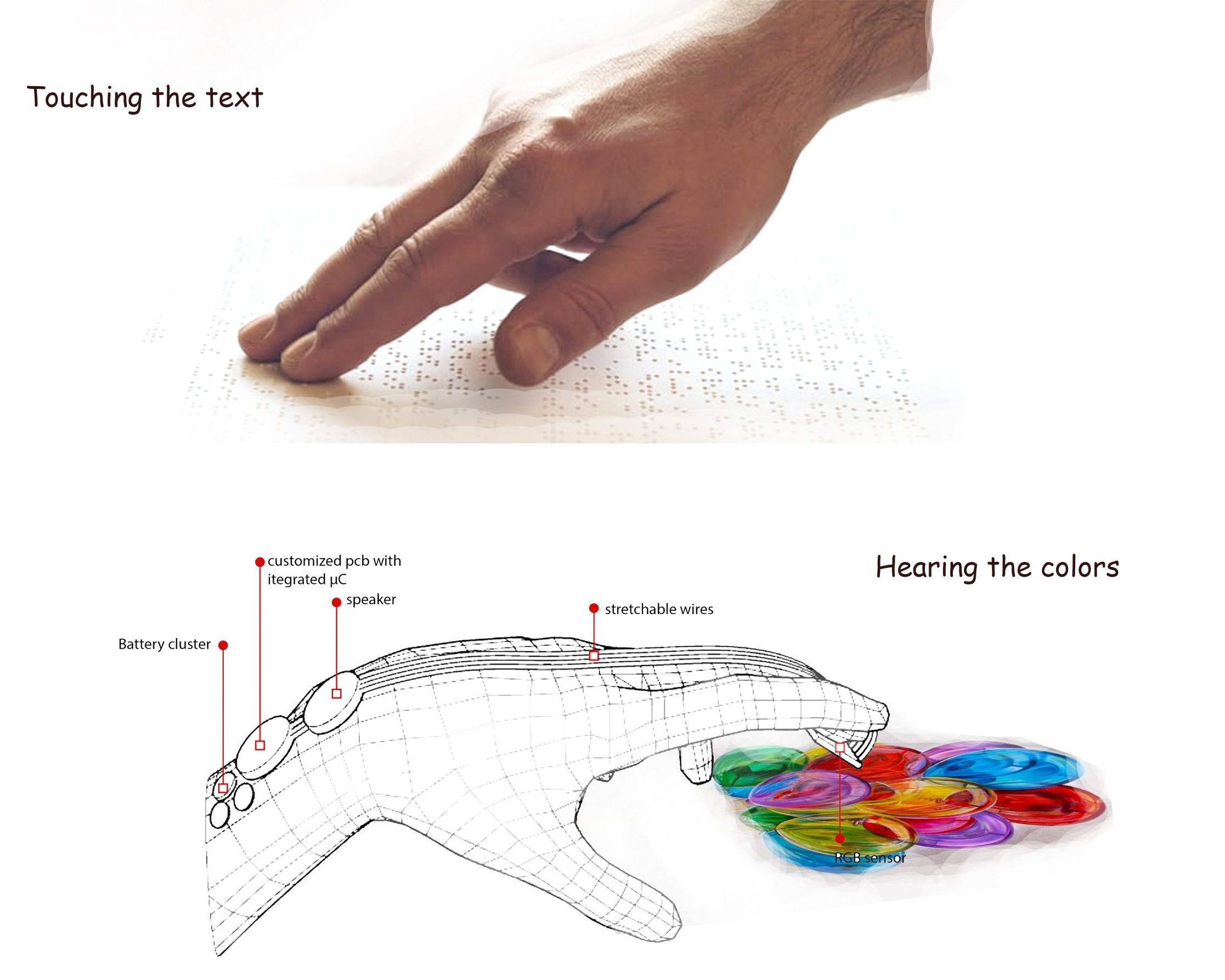

Hearing the Colors

Context: One of the main contributions of technology is mediation: technology mediates the agency of both disabled and fully enabled humans in the world, rebuilding their relation with the environment.

Problem: Many applications have been developed for visually impaired people that scan and recognise objects in space. This way, the user can perceive spatial information without employing touch. The visual information is translated into spoken words, which describe an object or a spatial condition.

This method makes daily life easier for people with visual impairment. However, it is inadequate when it comes to the perception of art. A colourful painting cannot be described through a sequence of colour names, which may bear no meaning for people with congenital visual impairment.

Solution: ‘Hearing the Colors’ is a wearable interface that turns the experience of colours and visual art into an audio performance. The wearable prompts the user to move their hand and ‘grasp’ the sounds of the colours, much as they ‘grasp’ Braille dots when reading.

The interface plays the role of a sensory substitution device (SSD). After practice, the user learns to spontaneously stimulate the experiential quality of “seeing a color” through a new set of sensoricognitive skills. This changes the classical definition of sensory modalities and contributes to the emergence of a form of “artificial synaesthesia”.

The interface is designed to transform the static observation of art into an interplay of visuals, sound and body movement. However, hearing colors through a wearable device need not be confined to artwork. It has the potential to turn the body into a scanning device that moves and interacts with the space itself, and even with other human beings.

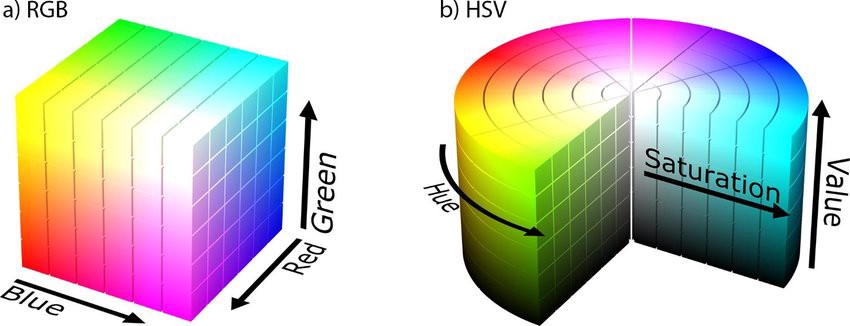

Color as an R:G:B and H:S:B code

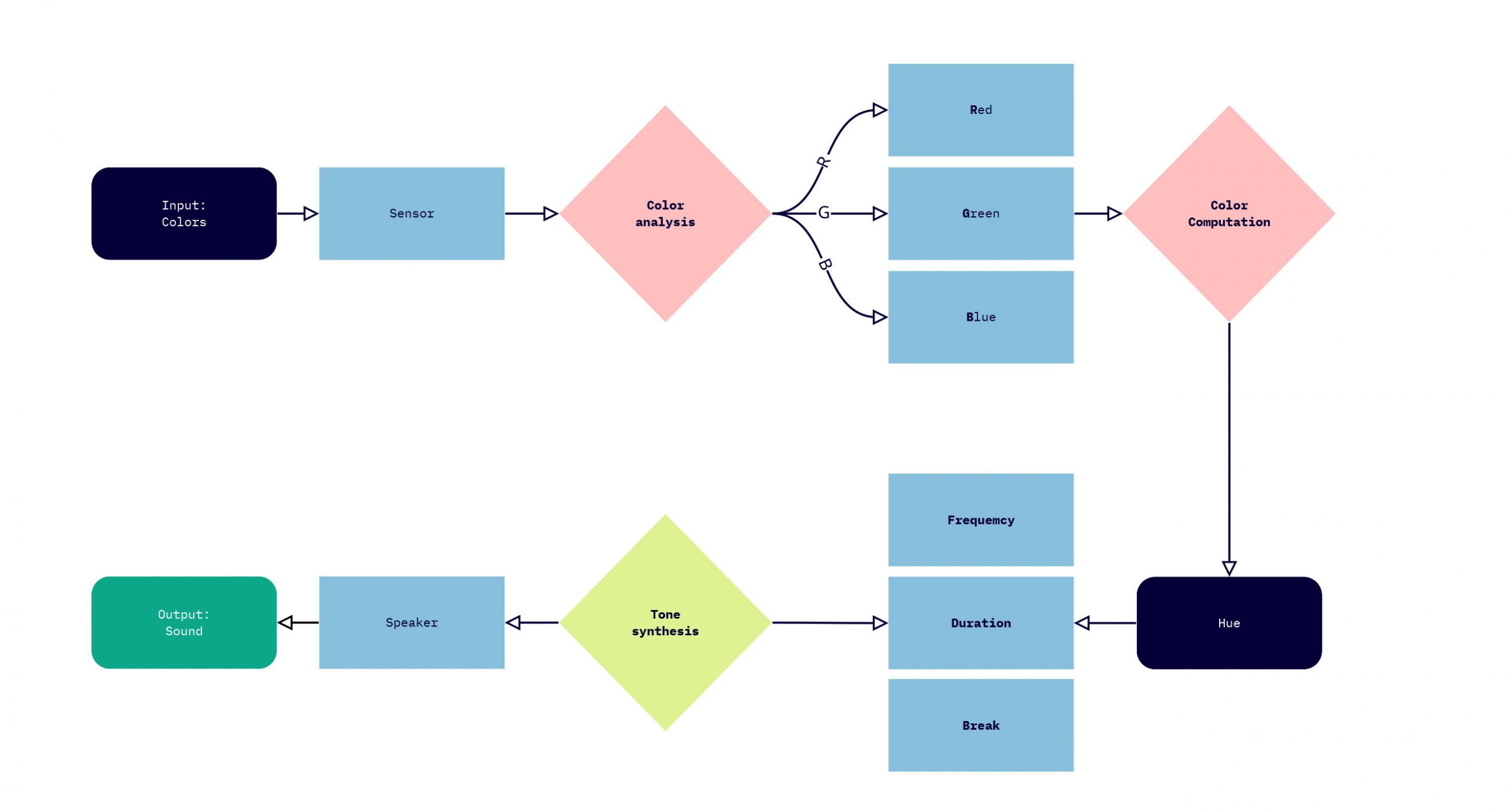

Computational Logic:

Demonstration:

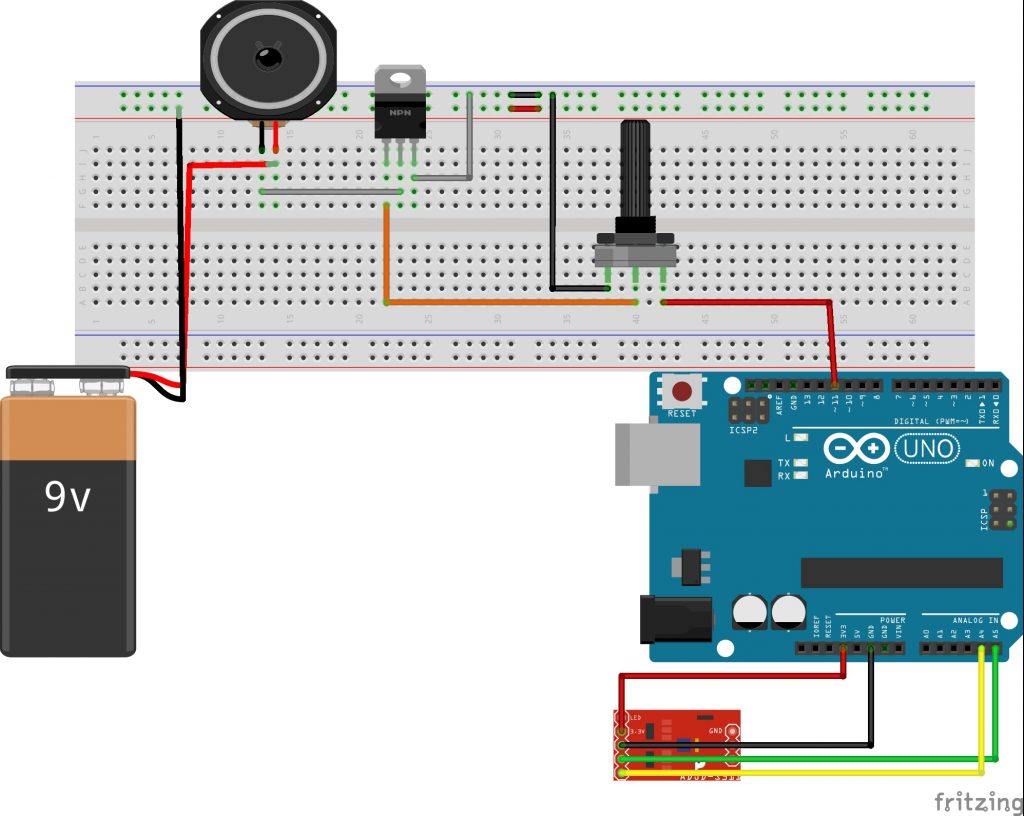

Circuit design:

Code:

#include <Wire.h>

#include "Adafruit_TCS34725.h"

/* Initialise the sensor with a specific integration time (50 ms) and gain (4x).

Integration time = how long the sensor collects light for each reading.

Longer integration times can be used for increased sensitivity at low light levels.

Valid integration times are: 2.4MS, 24MS, 50MS, 101MS, 154MS, 700MS

The gain of the ADC controls the sensitivity of the sensor.

Valid gain settings are: 1x (no gain), 4x, 16x, 60x

*/

Adafruit_TCS34725 myRGB = Adafruit_TCS34725(TCS34725_INTEGRATIONTIME_50MS, TCS34725_GAIN_4X);

int R = 0;

int G = 0;

int B = 0;

int hsv [3];

const int speaker = 11;

int pitch; int duration; int delayT;

/*********************************************************************************************/

void setup() {

Serial.begin(9600);

if (myRGB.begin()) {

Serial.println("Color sensor found");

} else {

Serial.println("No color sensor found");

while (1);

}

Serial.println("*****************************************************");

Serial.println();

}

/*********************************************************************************************/

void loop() {

getRGBValues();

rgb2Hsv(R, G, B, hsv);

colors2Sounds();

tone(speaker, pitch, duration);

delay(delayT);

} // loop

/*********************************************************************************************/

void colors2Sounds() {

if (hsv[0] <= 1) {

pitch = 100;

duration = 400;

delayT = 0;

Serial.println("red");

} else if (hsv[0] <= 5) {

pitch = 300;

duration = 400;

delayT = 50;

Serial.println("purple");

} else if (hsv[0] <= 13) {

pitch = 500;

duration = 400;

delayT = 100;

Serial.println("orange");

} else if (hsv[0] <= 17) {

pitch = 1000;

duration = 400;

delayT = 180;

Serial.println("yellow");

} else if (hsv[0] <= 25) {

pitch = 1800;

duration = 400;

delayT = 230;

Serial.println("green");

} else if (hsv[0] <= 33) {

pitch = 2400;

duration = 400;

delayT = 300;

Serial.println("greenish blue");

} else if (hsv[0] <= 55) {

pitch = 3000;

duration = 400;

delayT = 400;

Serial.println("blue");

}

}

void getRGBValues() {

float _red, _green, _blue;

myRGB.setInterrupt(false);

delay(60);

myRGB.getRGB(&_red, &_green, &_blue);

myRGB.setInterrupt(true);

//Print RGB values

/* Serial.print("Red: "); Serial.print(_red); Serial.print(" *** ");

Serial.print("Green: "); Serial.print(_green); Serial.print(" *** ");

Serial.print("Blue: "); Serial.print(_blue); Serial.print(" *** ");

Serial.println(); */

R = _red;

G = _green;

B = _blue;

} // getRGBValues

void rgb2Hsv(byte r, byte g, byte b, int hsv[]) {

float rd = (float) r / 255;

float gd = (float) g / 255;

float bd = (float) b / 255;

float max = threeway_max(rd, gd, bd), min = threeway_min(rd, gd, bd);

float h, s, v = max;

float d = max - min;

s = max == 0 ? 0 : d / max;

if (max == min) {

h = 0; // achromatic

} else {

if (max == rd) {

h = (gd - bd) / d + (gd < bd ? 6 : 0);

} else if (max == gd) {

h = (bd - rd) / d + 2;

} else if (max == bd) {

h = (rd - gd) / d + 4;

}

h /= 6;

}

hsv[0] = int(h * 100);

hsv[1] = int(s * 100);

hsv[2] = int(v * 100);

Serial.println((String)"Hue: " + hsv[0] + " Saturation: " + hsv[1] + " Value: " + hsv[2]);

}

float threeway_max(float r, float g, float b) {

float res;

res = r;

if (g > res) {

res = g;

}

if (b > res) {

res = b;

}

return res;

}

float threeway_min(float r, float g, float b) {

float res;

res = r;

if (g < res) {

res = g;

}

if (b < res) {

res = b;

}

return res;

}
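The chain of hue thresholds in colors2Sounds() can also be read as a lookup table, which may make the colour-to-sound mapping easier to inspect and retune. The sketch below is a host-side illustration, not the project's code: the struct and function names are hypothetical, while the thresholds, pitches and delays are copied from the sketch above (the tone duration is 400 ms in every row there, so it is omitted). Returning a null pointer for hues above 55 is an assumption; the original code simply keeps the previous pitch in that case.

```cpp
#include <stddef.h>

// One row of the colour-to-sound mapping. Hue is on the 0-100 scale
// produced by rgb2Hsv() in the sketch above.
struct HueTone {
    int maxHue;        // inclusive upper bound of the hue band
    int pitchHz;       // tone frequency
    int delayMs;       // pause after the tone
    const char* name;  // label printed for debugging
};

static const HueTone kHueTones[] = {
    {1, 100, 0, "red"},
    {5, 300, 50, "purple"},
    {13, 500, 100, "orange"},
    {17, 1000, 180, "yellow"},
    {25, 1800, 230, "green"},
    {33, 2400, 300, "greenish blue"},
    {55, 3000, 400, "blue"},
};

// Returns the first band whose upper bound is at or above the hue,
// or nullptr when the hue is above every band (assumed: play nothing).
const HueTone* toneForHue(int hue) {
    const size_t count = sizeof(kHueTones) / sizeof(kHueTones[0]);
    for (size_t i = 0; i < count; ++i) {
        if (hue <= kHueTones[i].maxHue) {
            return &kHueTones[i];
        }
    }
    return nullptr;
}
```

On the board, the caller would pass hsv[0] to toneForHue() and only call tone() when the result is not null.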

Mini Assignment 9

- Nut allergy: When you live with people who have only just met you, it is hard for them to always remember your food allergies. If surfaces are not properly cleaned, some of the ingredients you are allergic to may have come into contact with them. Idea: produce a sound when nuts have touched specific areas.

- When you are stressed, the house should automatically produce calming sounds or lighting conditions that help you calm down and nudge you into short breaks from studying.

- Never get locked out by your flatmates: notify everyone once all flatmates are home, so that people know when to lock the door.

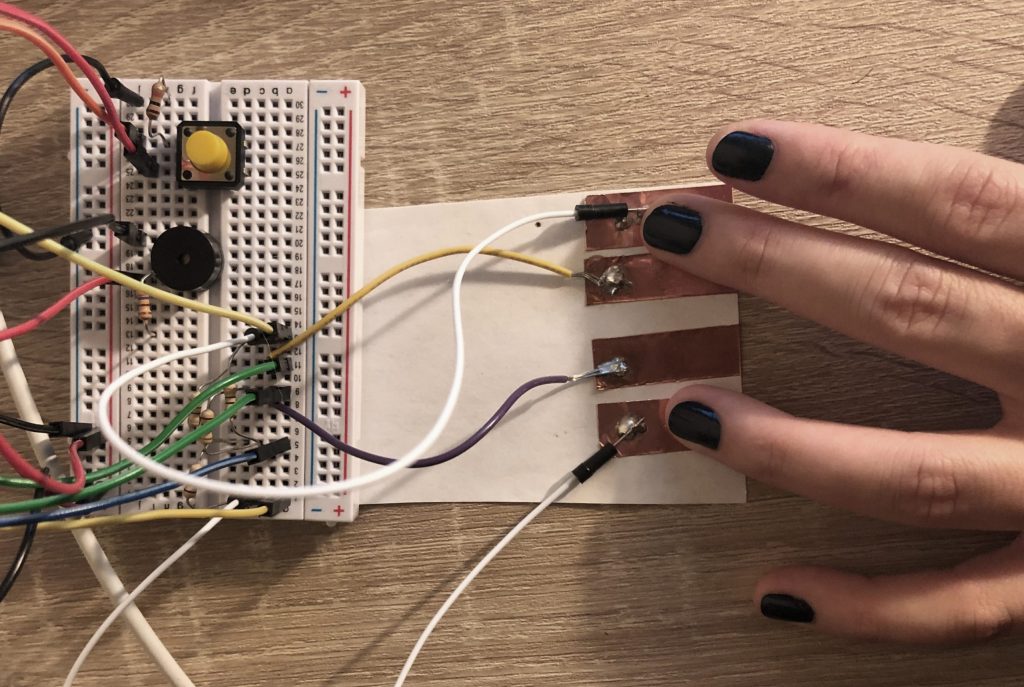

Capacitive μPiano: Cultivating an ear for music

Problem: Today, many children take music lessons. They learn to read notes and play them on different instruments. But is learning notes and scores by heart enough to cultivate an ear for music? Or to recognise notes both aurally and visually, and compose them into pleasant melodies that make sense?

Solution: Capacitive μPiano helps beginners do exactly that. You can repeat your favourite melody as many times as you want, until you finally grasp how to reproduce it. Each capacitive sensor represents a different note. Reading the melody becomes an auditory experience for the user, who previously relied on visual scores. This process helps new practitioners train an ear for music from the very beginning.

Demo:

https://www.youtube.com/watch?v=L1W8SxbK3Og

Code:

#include <CapacitiveSensor.h>

#include <pitches.h>

// Capacitive Sensors

CapacitiveSensor cs1 = CapacitiveSensor(6, 9);

bool cs1Touched = true; long cs1Val;

CapacitiveSensor cs2 = CapacitiveSensor(6, 7);

bool cs2Touched = true; long cs2Val;

CapacitiveSensor cs3 = CapacitiveSensor(6, 5);

bool cs3Touched = true; long cs3Val;

CapacitiveSensor cs4 = CapacitiveSensor(6, 8);

bool cs4Touched = true; long cs4Val;

// Speaker + Music

#define speakerPin 4

int melody1[] = {NOTE_E4, NOTE_G3, NOTE_G3, NOTE_C4, NOTE_G3, 0, NOTE_B3, NOTE_C4};

int noteDurations[] = {4, 8, 8, 4, 4, 4, 4, 4};

// Switch

#define switchPin 2

typedef struct switchTracker {

int lastReading; // last raw value read

long lastChangeTime; // last time the raw value changed

byte pin; // the pin this is tracking changes on

byte switchState; // debounced state of the switch

} switchTrack;

void initSwitchTrack(struct switchTracker &sw, int swPin) {

pinMode(swPin, INPUT);

sw.lastReading = digitalRead(swPin);

sw.lastChangeTime = millis();

sw.pin = swPin;

sw.switchState = sw.lastReading;

}

switchTrack switchInput;

volatile bool practiseTime = false; // volatile: toggled inside the changeMode() interrupt handler

void setup() {

Serial.begin(9600);

pinMode(speakerPin, OUTPUT);

initSwitchTrack(switchInput, switchPin);

attachInterrupt(digitalPinToInterrupt(switchPin), changeMode, RISING);

}

void loop() {

Serial.println(practiseTime);

if (practiseTime) {

practise();

} else {

playMelody();

delay(3000);

}

}

void changeMode() {

practiseTime = !practiseTime;

}

void practise() {

capacitiveSensor1();

capacitiveSensor2();

capacitiveSensor3();

capacitiveSensor4();

}

void capacitiveSensor1() {

cs1Val = cs1.capacitiveSensor(80); // resolution

if (cs1Touched) {

if (cs1Val > 1000) {

Serial.println(cs1Val);

cs1Touched = false;

tone(speakerPin, NOTE_E4);

}

}

if (!cs1Touched) {

if (cs1Val < 100) {

Serial.println(cs1Val);

noTone(speakerPin);

cs1Touched = true;

}

}

}

void capacitiveSensor2() {

cs2Val = cs2.capacitiveSensor(80); // resolution

if (cs2Touched) {

if (cs2Val > 1000) {

Serial.println(cs2Val);

cs2Touched = false;

tone(speakerPin, NOTE_G3);

}

}

if (!cs2Touched) {

if (cs2Val < 100) {

Serial.println(cs2Val);

noTone(speakerPin);

cs2Touched = true;

}

}

}

void capacitiveSensor3() {

cs3Val = cs3.capacitiveSensor(80); // resolution

if (cs3Touched) {

if (cs3Val > 1000) {

Serial.println(cs3Val);

cs3Touched = false;

tone(speakerPin, NOTE_C4);

}

}

if (!cs3Touched) {

if (cs3Val < 100) {

Serial.println(cs3Val);

cs3Touched = true;

noTone(speakerPin);

}

}

}

void capacitiveSensor4() {

cs4Val = cs4.capacitiveSensor(80); // resolution

if (cs4Touched) {

if (cs4Val > 1000) {

Serial.println(cs4Val);

cs4Touched = false;

tone(speakerPin, NOTE_B3);

}

}

if (!cs4Touched) {

if (cs4Val < 100) {

Serial.println(cs4Val);

noTone(speakerPin);

cs4Touched = true;

}

}

}

void playMelody() {

for (int thisNote = 0; thisNote < 8; thisNote++) {

int noteDuration = 1000 / noteDurations[thisNote];

tone(speakerPin, melody1[thisNote], noteDuration);

// to distinguish the notes, set a minimum time between them.

// the note's duration + 30% seems to work well:

int pauseBetweenNotes = noteDuration * 1.30;

delay(pauseBetweenNotes);

// stop the tone playing:

noTone(speakerPin);

}

}

boolean switchChange(struct switchTracker & sw) {

const long debounceTime = 100;

// default to no change until we find out otherwise

boolean result = false;

int reading = digitalRead(sw.pin);

if (reading != sw.lastReading) sw.lastChangeTime = millis();

sw.lastReading = reading;

// if time since the last change is longer than the required dwell

if ((millis() - sw.lastChangeTime) > debounceTime) {

result = (reading != sw.switchState);

// in any case the value has been stable and so the reported state

// should now match the current raw reading

sw.switchState = reading;

}

return result;

}
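The four capacitiveSensorN() functions above differ only in the sensor they read and the note they play, so the press/release logic can be captured once. The following host-side sketch shows that logic as a small state machine with the same two thresholds (above 1000 to press, below 100 to release). The type and function names are hypothetical, and the note frequencies are the values commonly defined for NOTE_E4, NOTE_G3, NOTE_C4 and NOTE_B3 in pitches.h, stated here as an assumption.

```cpp
// Event reported by one update of a touch key.
enum class KeyEvent { None, Pressed, Released };

struct TouchKey {
    int noteHz;    // frequency to play while the key is held
    bool pressed;  // current debounced state of the key
};

// Thresholds taken from the sketch above. The gap between them acts as
// hysteresis, so a reading hovering in between does not retrigger the note.
const long kPressThreshold = 1000;
const long kReleaseThreshold = 100;

// Feeds one raw capacitive reading into the key and reports what changed.
KeyEvent updateKey(TouchKey& key, long reading) {
    if (!key.pressed && reading > kPressThreshold) {
        key.pressed = true;
        return KeyEvent::Pressed;   // caller would start tone(speakerPin, key.noteHz)
    }
    if (key.pressed && reading < kReleaseThreshold) {
        key.pressed = false;
        return KeyEvent::Released;  // caller would call noTone(speakerPin)
    }
    return KeyEvent::None;
}

// The four keys of the instrument (frequencies assumed from pitches.h).
TouchKey keys[] = {
    {330, false},  // E4
    {196, false},  // G3
    {262, false},  // C4
    {247, false},  // B3
};
```

With this shape, practise() would reduce to a loop that reads each sensor and passes the value to updateKey(), and adding a fifth note would mean adding one row to the table.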

Assignment 8 – Sound is spatial

To me, sound is spatial, because it triggers my spatial modalities of perception, such as vision, touch and proprioception. Through these, a person perceives space and its properties, such as shapes, relations, textures and materiality, as well as their body’s relation to space and to particular objects. Therefore, when I hear a sound, I tend to visualise it, spatialise it and make kinaesthetic projections onto my body.

For example, when I hear workers building on a construction site, I focus particularly on the sounds their tools produce. These make me visualise materials being transformed; I try to understand the nature of the material itself, how rigid it is, what kind of texture it may have, and the kinds of visual transformations that take place when it collides with different tools.

Or when I hear the echo of a person walking or talking in a space, I close my eyes and visualise how spacious and tall the room is. When it rains, I spatialise the strength of the rain or the humidity of the environment. Finally, because of my dancing experience and the effect of neural mirroring on me, when I listen to music such as The Sleeping Beauty, I visualise famous dance patterns and sometimes sense how difficult they are to execute.

Ambient Sound?

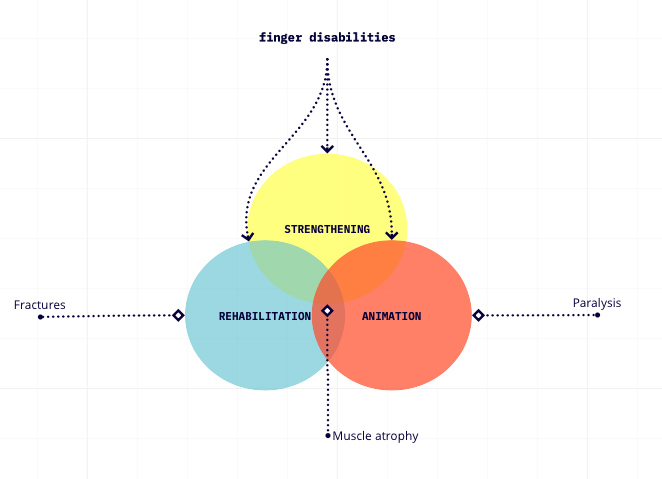

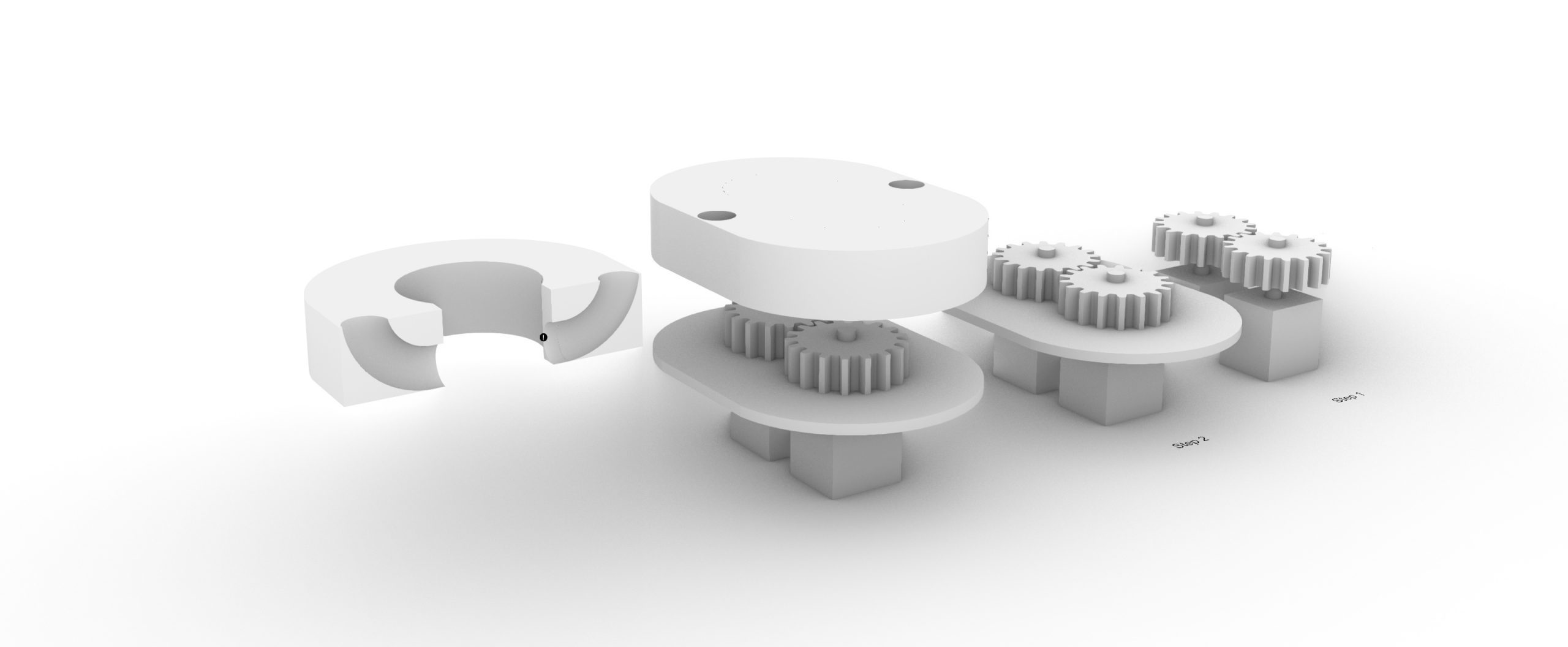

Portable RehabActuators

PROBLEM: People with finger-specific motor disabilities are often asked to take part in rehabilitation sessions, either in clinical settings or at home. Unfortunately, many of them (including me) do not carry out the prescribed finger-strengthening exercises, and as a result their injured fingers may never recover their original potential.

SOLUTION: RehabActuators is a portable, soft robotic wearable that uses tangible interaction to motivate patients to perform finger exercises such as bending and unbending. RehabActuators turns rehabilitation into a playful activity in which participants manipulate their own finger by interacting with the interface.

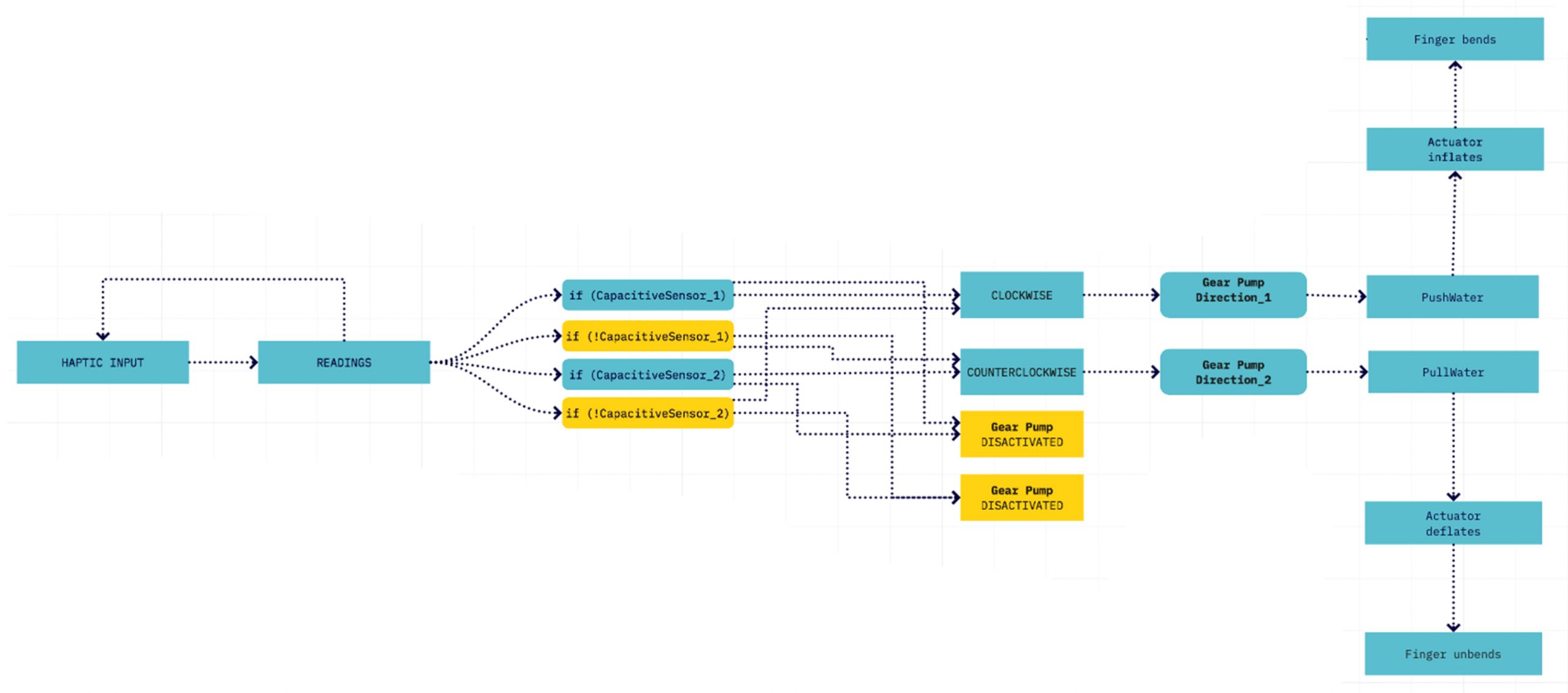

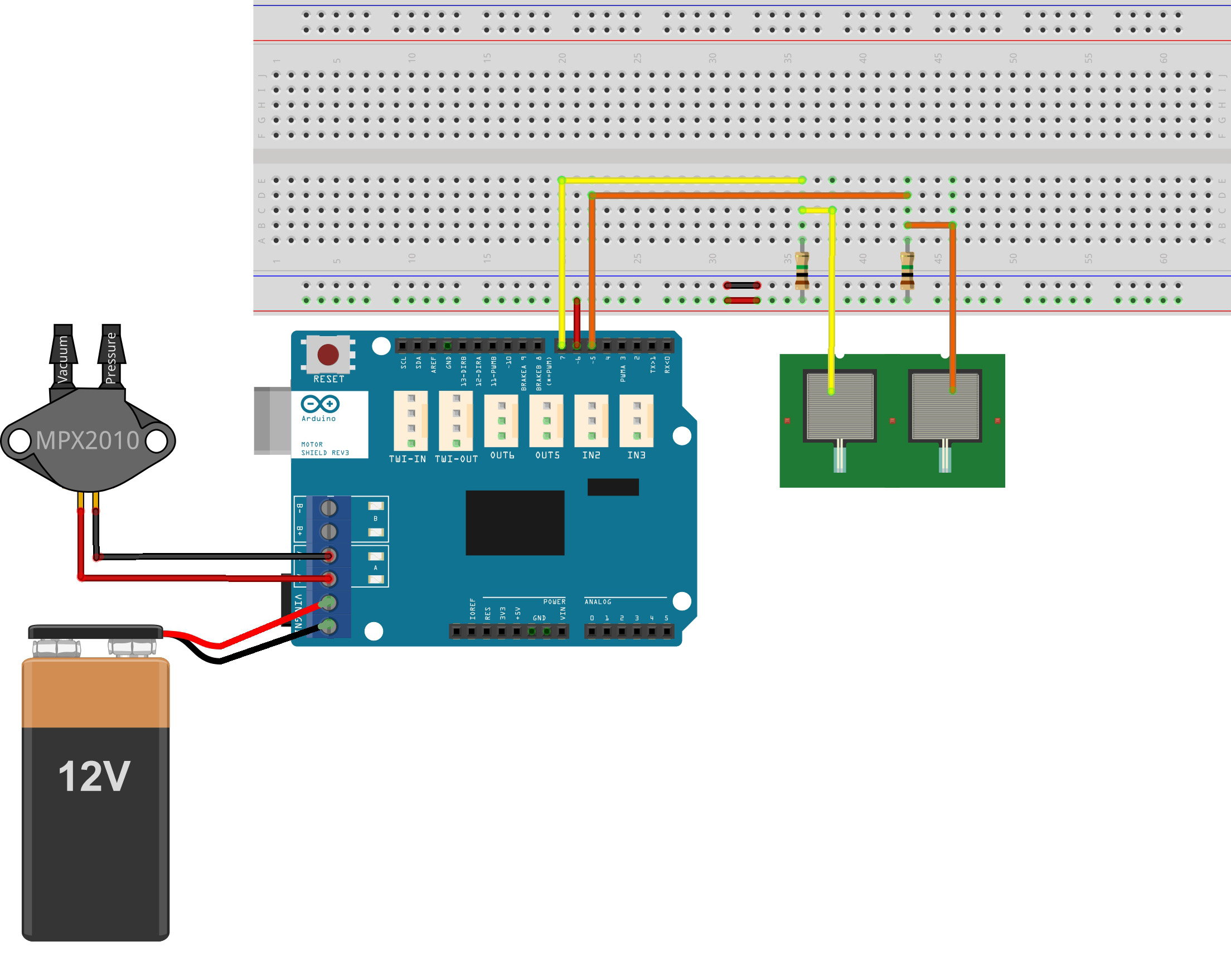

Computational Logic:

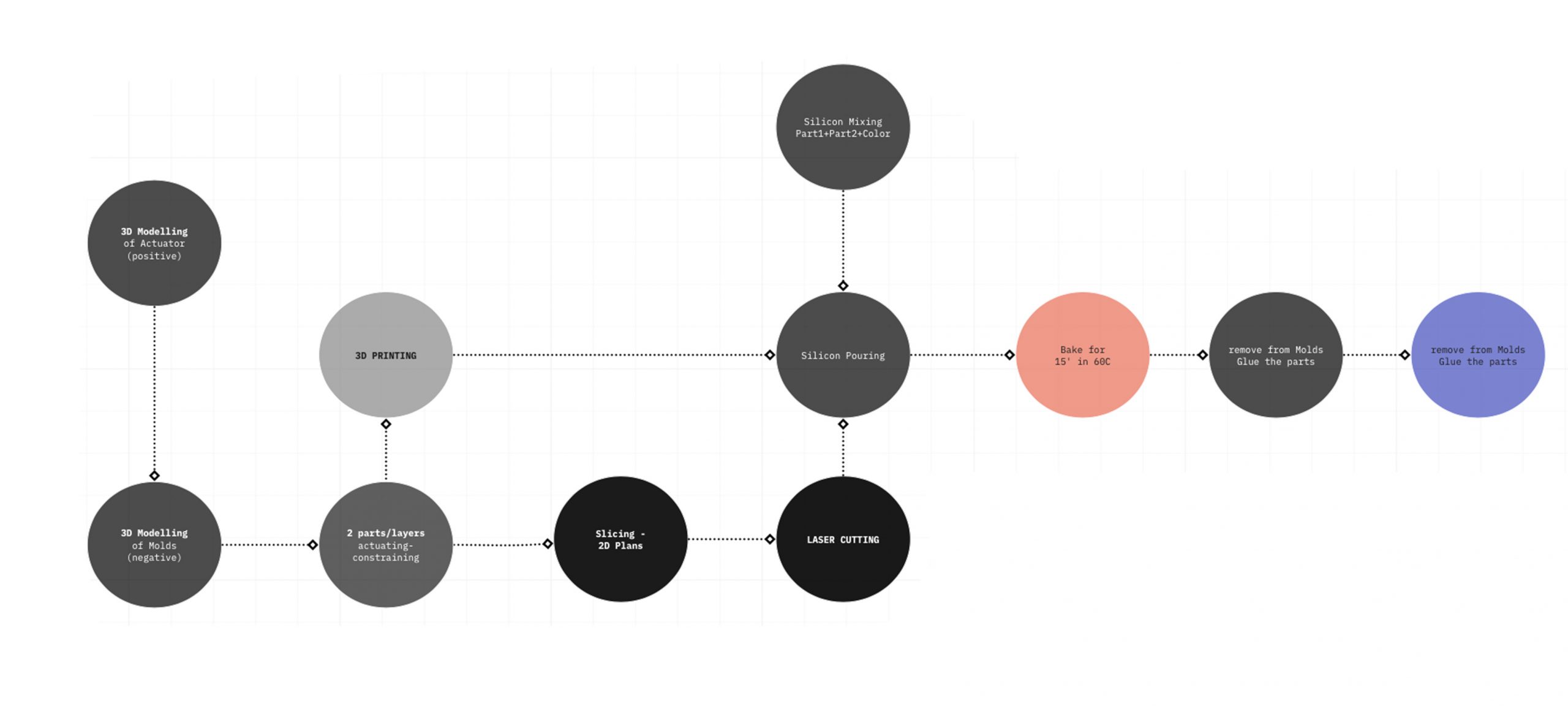

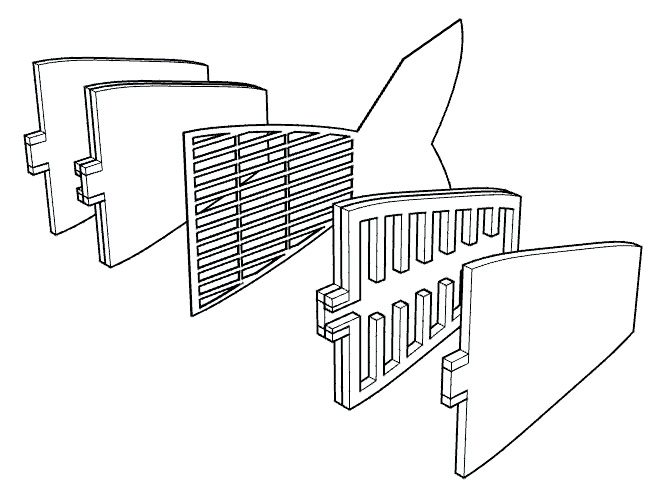

Told Design & Fabrication:

Mechanism Overview:

Control Manipulation:

Interaction Demonstration: https://drive.google.com/drive/folders/1ldiV7o1EZ_QfcC9cEiDaGpcplat2HtpA?usp=sharing

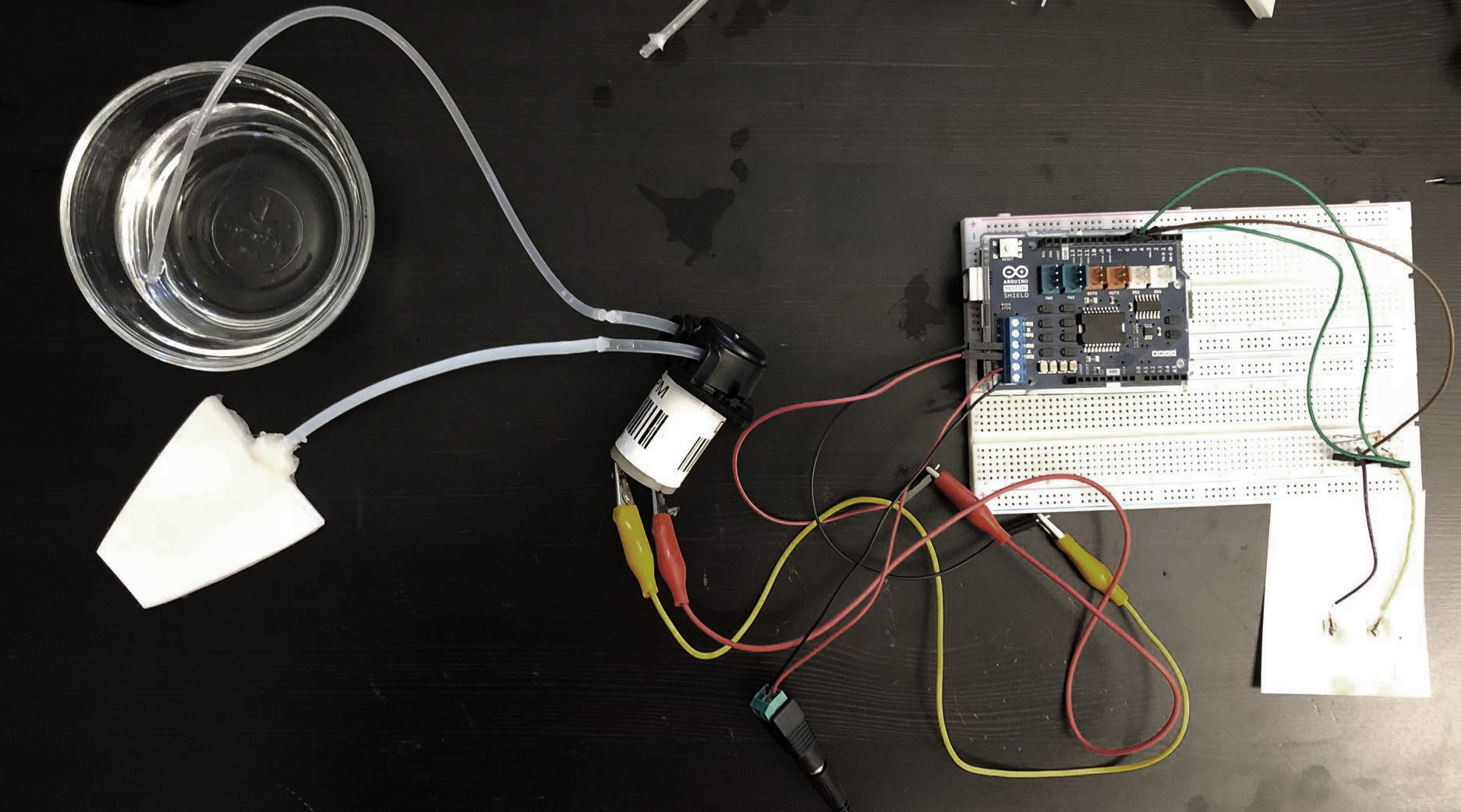

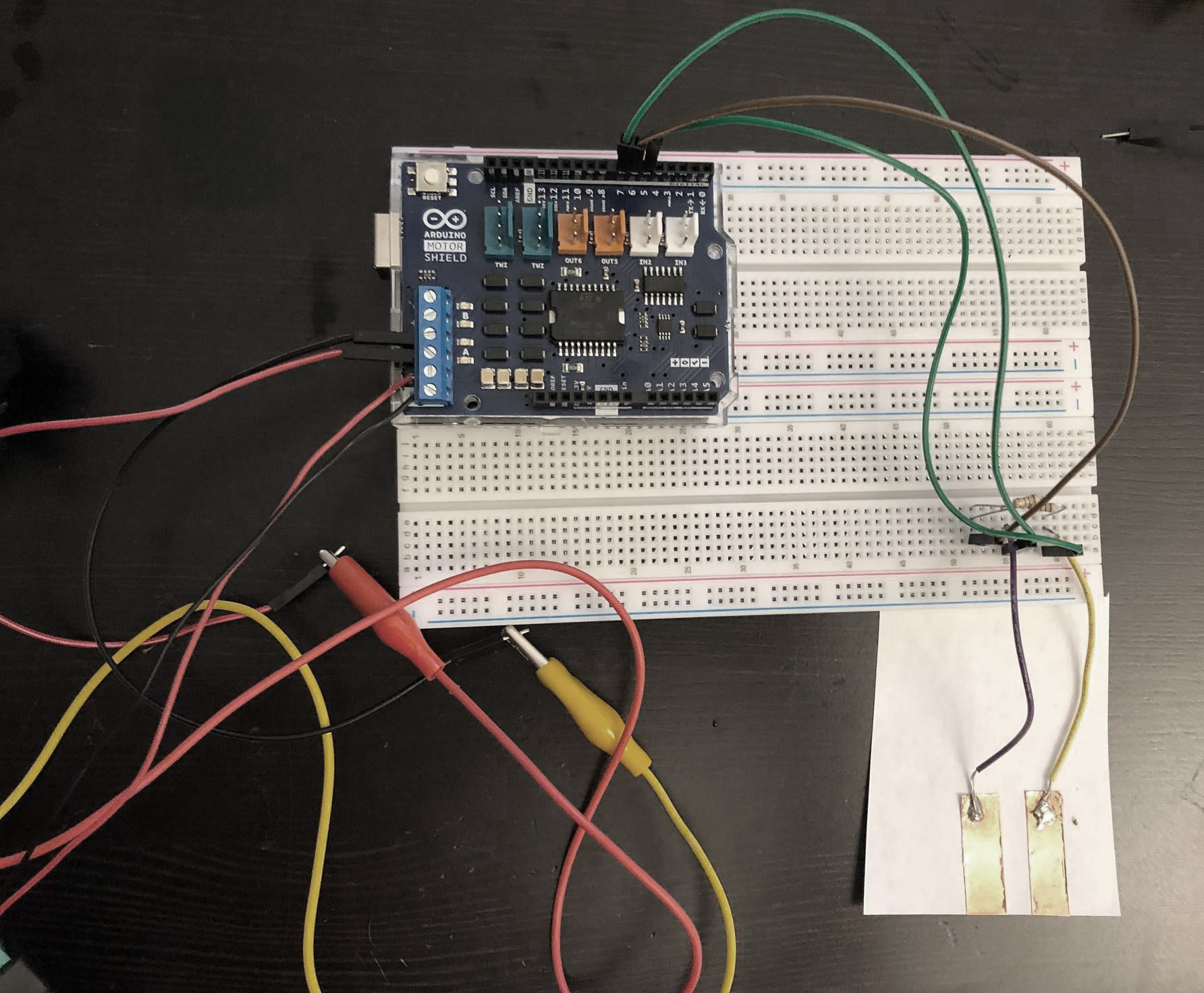

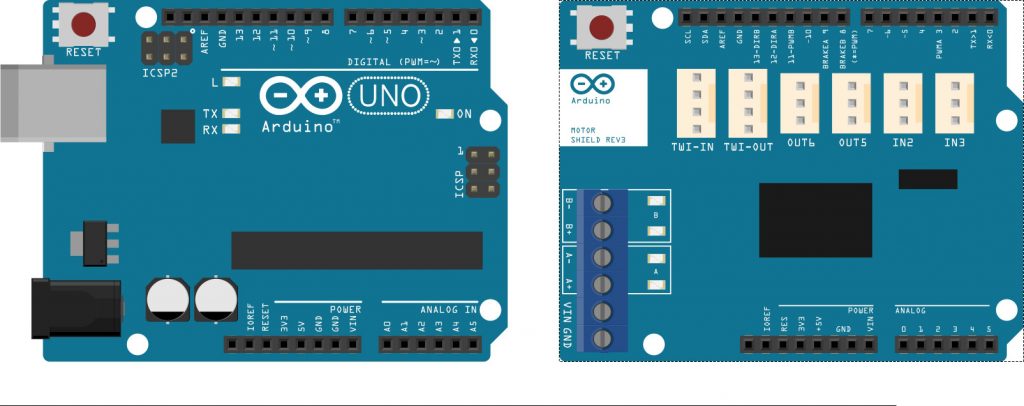

Arduino + Motor Shield REV3

Arduino Wiring:

-Copper tape for the two capacitive sensors

-Peristaltic liquid pump

Arduino Code:

#include <CapacitiveSensor.h>

// Capacitive Sensors

CapacitiveSensor csLeft = CapacitiveSensor(6, 7);

bool csLeftTouched = true;

long csLeftVal;

CapacitiveSensor csRight = CapacitiveSensor(6, 5);

bool csRightTouched = true;

long csRightVal;

// water pump

const int pumpPin = 13; const int speedPin = 11; const int brakePin = 8;

const int cw = HIGH;

const int ccw = LOW;

void setup() {

//Serial.begin(9600);

pinMode(pumpPin, OUTPUT); pinMode(speedPin, OUTPUT); pinMode(brakePin, OUTPUT);

}

void loop() {

capacitiveSensorLeft();

capacitiveSensorRight();

activatePump();

}

void capacitiveSensorLeft() {

csLeftVal = csLeft.capacitiveSensor(80); // 80: resolution

if (csLeftVal > 1000) {

csLeftTouched = true;

//Serial.println("left on");

} else if (csLeftVal < 100) {

csLeftTouched = false;

//Serial.println("left off");

}

}

void capacitiveSensorRight() {

csRightVal = csRight.capacitiveSensor(80); // resolution

if (csRightVal > 1000) {

csRightTouched = true;

//Serial.println("right on");

} else if (csRightVal < 100) {

csRightTouched = false;

//Serial.println("right off");

}

}

void activatePump() {

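// Evaluated once per loop() pass; the touch flags are refreshed by the sensor functions between passes.

if (csRightTouched == false && csLeftTouched == false) {

// no pad touched: engage the brake

digitalWrite(brakePin, HIGH);

} else if (csRightTouched == true && csLeftTouched == false) {

// right pad only: release the brake and pump clockwise

digitalWrite(brakePin, LOW);

digitalWrite(pumpPin, cw);

analogWrite(speedPin, 200); // 200 is the rotation speed

} else if (csRightTouched == false && csLeftTouched == true) {

// left pad only: release the brake and pump counter-clockwise

digitalWrite(brakePin, LOW);

digitalWrite(pumpPin, ccw);

analogWrite(speedPin, 200); // 200 is the rotation speed

}

// both pads touched: the pump keeps its current state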

}
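The mapping from the two touch pads to the pump can be separated from the pin writes and expressed as a pure decision function, which makes each combination explicit and testable away from the hardware. This is a host-side sketch with hypothetical names. The "Hold" case for both pads touched reflects the fact that the code above takes no action in that situation; whether that is the intended behaviour is an assumption.

```cpp
// What the pump should do for a given combination of touched pads.
enum class PumpCommand { Brake, Clockwise, CounterClockwise, Hold };

PumpCommand pumpCommandFor(bool leftTouched, bool rightTouched) {
    if (!leftTouched && !rightTouched) {
        return PumpCommand::Brake;             // nothing touched: stop the pump
    }
    if (rightTouched && !leftTouched) {
        return PumpCommand::Clockwise;         // right pad only
    }
    if (leftTouched && !rightTouched) {
        return PumpCommand::CounterClockwise;  // left pad only
    }
    return PumpCommand::Hold;                  // both pads: keep the current state
}
```

On the board, activatePump() would call this once per loop() pass and translate the result into writes to the brake, direction and speed pins.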

Hands-free manipulation of underwater soft robotics

My idea is to use motion/distance sensors to manipulate the locomotion patterns of a soft robotic fish tail. Using simple gestures, such as swiping left/right with different rotation angles or alternately feeding different values to two distance sensors, one could control the morphing of fluidic elastomer actuators, causing the tail to swim in different patterns (swim straight, turn right/left, big/small maneuver).

At a larger scale and at a later stage, this semi-autonomous fish tail could be designed and fabricated for people with motor impairments who have difficulty exploring their body’s motion underwater. With a personal trainer controlling their prosthetic tail externally, their body could achieve different speeds, turns and navigation in dolphin style.

The fish’s body is composed of two fluidic elastomer actuators (with chambers) separated by a constraint layer. The constraint layer restricts each actuator’s deformation, and as a result the tail bends.

The liquid is transferred from one chamber to the other by a gear pump, in which two DC motors drive the gears in different directions.
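One way the two distance sensors could be turned into swim patterns is to compare their readings: similar distances mean "swim straight", and the size of the difference selects a small or big maneuver. The sketch below is purely illustrative. The thresholds, the names, and the choice that the nearer hand sets the turn direction are all assumptions, not part of the original idea.

```cpp
#include <stdlib.h>

enum class Turn { Straight, Left, Right };

struct SwimCommand {
    Turn turn;
    bool bigManeuver;  // true for a wide turn, false for a small correction
};

const int kDeadBandCm = 3;  // assumed: differences this small count as "straight"
const int kBigTurnCm = 15;  // assumed: differences this large request a big maneuver

// Converts two hand distances (in cm) into a steering command.
// Assumption: the tail turns towards the side whose hand is closer.
SwimCommand swimCommandFor(int leftCm, int rightCm) {
    int diff = leftCm - rightCm;
    SwimCommand cmd;
    if (abs(diff) <= kDeadBandCm) {
        cmd.turn = Turn::Straight;
        cmd.bigManeuver = false;
        return cmd;
    }
    cmd.turn = (diff < 0) ? Turn::Left : Turn::Right;
    cmd.bigManeuver = abs(diff) >= kBigTurnCm;
    return cmd;
}
```

The resulting command would then decide which way the gear pump moves liquid between the two chambers, and for how long.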

Body recognition in HCI for diverse applications

A] Behance Portfolio Review Kinect Installation

An open-minded approach to natural user interface design turned a portfolio review into a memorable interactive event.

B] Stroke recovery with Kinect

The project aims to provide a low-cost home rehabilitation solution for stroke victims. Users will be given exercises that will improve their motor functions. Their activities will be monitored with Kinect’s scanning ability, and a program that helps keep track of their progress.

This allows patients to recover at home under private care or with family, rather than in a hospital environment. Their recovery levels can be measured and monitored by the system, and researchers believe the game-like atmosphere generated will help patients recover faster.

C] Kinect Sign Language Translator

This system translates sign language into spoken and written language in near real time. This will allow communication between those who speak sign languages and those who don’t. This is also helpful to people who speak different sign languages – there are more than 300 sign languages practiced around the world.

The Kinect, coupled with the right program, can read these gestures, interpret them and translate them into written or spoken form, then reverse the process and let an avatar sign to the receiver, breaking down language barriers more effectively than before.

D] Retrieve Data during a Surgery Via Gestures