It’s a legitimate story, because I wasn’t sure how we were supposed to do these:

1

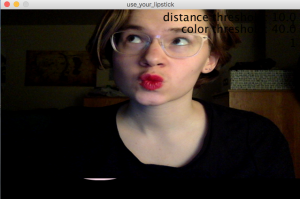

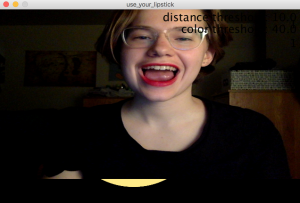

It was 00:07. That’s when I usually go for my run, because I don’t really like it when all of my neighbors can watch me try to exercise. That, and I never run into anyone or any cars or anything, which is always a bonus. I heard a huge screech at exactly 00:07.23, and I turned around really sharply, like my karate instructor taught me. That’s when I saw you. You saw me, and you started laughing maniacally before sprinting past me. You were all sweaty and your hair was a stringy mess, and I remember asking myself what you were doing, and then asking you. “Hey, you, what are you doing?” You looked harmless enough, but just in case, I took my fighting stance: one fist to guard, one ready to punch, knees apart. You pulled your earbuds out. “I’m running with Marie Antoinette.” Your voice sounded sweet. But then you put your earbuds back in and started screaming again. At 00:09 I lost sight of you. You had just ducked into the bushes off Main Street, nervously looking back over your shoulder a few times before you did.

3

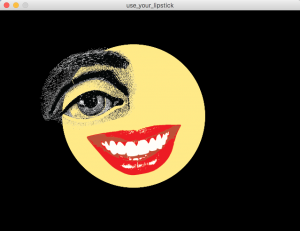

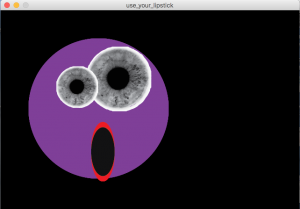

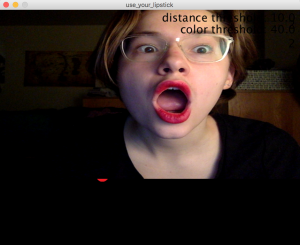

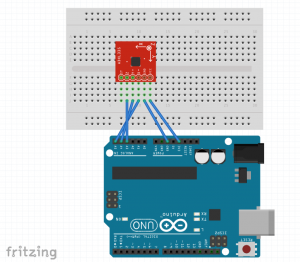

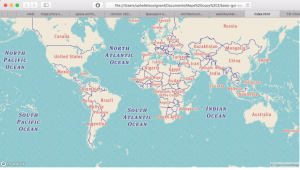

You don’t have to explain yourself. I saw your watch gadget. My friend Marc has one; he says it’s the best thing that’s ever happened to him, which is saying something. He’s definitely put on some muscle since he got it, and he says it’s better than playing a video game. He’s totally obsessed. He tried to suck me in, too. He thinks I’d be a natural, because I like history and stuff. I don’t know, the whole ghost thing sort of freaks me out. I’d rather just learn history from books. And I don’t really want the ghost of Benjamin Franklin telling me I need to go faster. Plus, I don’t know, I feel like you’d get fewer checkpoints in the country. There are more creepy things out there, but they’re sort of spread out, you know? It would be good for sprinters, but not for people who actually want to run long distances. If you’re in the city and you just walk by a skyscraper, you could get, like, five different ghosts right there. They’d all be some plain old minor building celebrity or whatever, not very interesting, but at least it’d be something. And they’d just talk to you instead of chasing you. City folk are lazier than you might think. Marc was saying he wanted to add one. Apparently there was this guy, Keith, who lived in his building like 50 years ago and loved birds. Local legend says he would squawk at the other residents in the halls, and I think Marc thinks it would be a funny joke for other runners. Like, if they run past his building, they run into Keith, who squawks at them if they’re not running fast enough. But Marc is too lazy to actually go ahead and get all the community votes to add him.

2

You stopped right in front of my house and screamed before running diagonally across the road, really fast. I think you said something like “I’ve got to escape the Leatherman ghost.” I almost called the police, but then I saw you suddenly stop and look at your wrist. It looked like you were dialing a watch, which sounds a little weird, but I don’t think much could’ve been weirder than the screaming bit I’d just seen you do. You kind of stopped making noise after you’d played around with your wrist. Anyway, you seemed to enjoy it, because you smiled before leaving my neighbor’s yard, like you were content about something. And you were talking to yourself in low tones. I don’t know, I felt kind of bad. I figured, so what if you’re a little crazy? No reason to put you in jail or anything. I haven’t seen many crazy people in my life; maybe you weren’t actually crazy. Everyone has weird days, right? And some people do believe in ghosts. I don’t know, they’re pretty scary. I’d scream if I thought one was following me.