BLOOM from Brooke Ley on Vimeo.

Video

I originally created these videos for an installation piece for Augmented Sculpture, which is where the opening picture is from. The video is found footage of cacti and mushroom timelapses, distorted through datamoshing. More information about the datamoshing method I use can be found at this link. I used I-frame destruction on the left and P-frame duplication on the right.
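To give a rough idea of what those two techniques do, here is a toy Python sketch. It does not touch real video files: it models a video as a list of labelled frames, where an I-frame holds a full picture and a P-frame only holds the changes from the frame before it. The function names and the frame model are made up for illustration, and this is not the tool or workflow I actually used.

```python
from typing import List, Tuple

# Toy model: each frame is (frame type, label). "I" = full picture,
# "P" = only the changes relative to the previous frame.
Frame = Tuple[str, str]


def destroy_iframes(frames: List[Frame]) -> List[Frame]:
    """Drop every I-frame except the first one.

    Without the later I-frames, the P-frames of a new shot get applied
    on top of the old shot's picture, which smears the two together.
    """
    out: List[Frame] = []
    seen_first_iframe = False
    for kind, label in frames:
        if kind == "I":
            if seen_first_iframe:
                continue  # skip this I-frame entirely
            seen_first_iframe = True
        out.append((kind, label))
    return out


def duplicate_pframe(frames: List[Frame], index: int, copies: int) -> List[Frame]:
    """Repeat the P-frame at `index` `copies` extra times.

    Replaying the same motion data over and over drags the picture
    further in the same direction each time.
    """
    if frames[index][0] != "P":
        raise ValueError("frame at index is not a P-frame")
    return frames[: index + 1] + [frames[index]] * copies + frames[index + 1 :]


clip = [("I", "cactus-0"), ("P", "cactus-1"), ("I", "mushroom-0"), ("P", "mushroom-1")]
print(destroy_iframes(clip))
print(duplicate_pframe(clip, 1, 2))
```

In a real file the frames live inside a container and a codec, so the actual editing is much fiddlier than this.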

Note of caution: Datamoshing is really annoying to do. I like this method the most because it gives you the most freedom over how the video is distorted. However, you have to use very old software, since you’re not supposed to be able to corrupt video files in this way. If you want to get into datamoshing, feel free to contact me for some tips and techniques I’ve found that help.

Audio

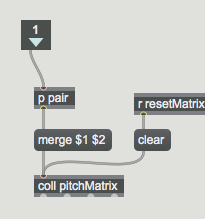

The original piece I used was a paulstretched version of this song by my friend from Pitt. For this project, I wanted to do to the audio what I did to the video: distort it in an artistic way. I used the Vinesauce ROM Corruptor, which is typically used for distorting ROM files for video game emulators. It was named after this channel, which plays corrupted video games for fun results. Since it works by corrupting bits, you can use this tool on more than just ROM files. I sent a WAV of the paulstretched track through the corruptor twice, using bitshifting both times. I edited the track a bit afterwards in Ableton Live to make it a little more listenable. I hope to experiment more with this tool in the future.
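For anyone curious what bit corruption on a WAV looks like in code, here is a minimal Python sketch. It is my own illustration, not the corruptor's actual algorithm: the function name, the "every Nth byte" rule, and the shift amount are all assumptions. It also assumes a plain PCM WAV with a 44-byte header, which is skipped so the file still opens.

```python
def corrupt_bytes(data: bytes, start: int = 44, every: int = 1000, shift: int = 1) -> bytes:
    """Shift the bits of every `every`-th byte left by `shift` places.

    `start` skips the header (assumed here to be 44 bytes, as in a
    simple PCM WAV) so the result is still recognized as a WAV file.
    """
    out = bytearray(data)
    for i in range(start, len(out), every):
        # Keep only the low 8 bits so the value still fits in one byte.
        out[i] = (out[i] << shift) & 0xFF
    return bytes(out)


def corrupt_file(src_path: str, dst_path: str, passes: int = 2) -> None:
    """Read a file, run it through corrupt_bytes `passes` times, and save it."""
    with open(src_path, "rb") as f:
        data = f.read()
    for _ in range(passes):
        data = corrupt_bytes(data)
    with open(dst_path, "wb") as f:
        f.write(data)
```

Calling `corrupt_file("track.wav", "track_corrupted.wav")` with hypothetical file names would mimic sending the track through twice. Lowering `every` corrupts more of the audio and gets harsh quickly.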