The piece of work I selected is “data.matrix” by Ryoji Ikeda, released in December 2005. When listening to the piece, its electronic quality comes to the forefront. It sounds almost as if it is made up of “beep boops” and tapping, with an added effect that really enhances its electronic energy. The piece also sounds closer to noise frequencies than to a traditional piece of music. Ikeda’s pieces are often compared to soundscapes, and that is apparent in data.matrix. Ikeda has had large-scale sound installations all across the world, including prominent work at the TWA Flight Center at JFK. The piece reads more as art than as music, and even though I was not able to find what algorithm Ikeda used for it, it seems clear that some kind of algorithm was involved: there is a clear order and organization to the piece that makes it feel quite mathematical and computational.

Tag: LookingOutwards-04

LO-04

I get to talk about my favorite coding platform, Max, again! I found a pretty interesting granular synthesis patch that lets you grab samples of the music as it plays and loop them back quickly. When you release the loop, playback is still locked to the set tempo (I am not sure whether the tempo is read algorithmically from the sound or entered by the person running the patch). This makes for some pretty cool audio effects. The creator demonstrates the patch with a sort of folk-world-choral piece made up mostly of vocals and drums/percussion, but it would be really interesting to use it on a multitrack recording of an orchestra or other multi-part ensemble, playing with individual parts of the music while the rest of the orchestration continues underneath. The video is from 2013, and the software has improved a lot since then, so I would love to try the patch in a newer version of Max and see how I could clean it up in presentation mode so that it could be used in a live performance.
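To make the "loop a sample but stay locked to tempo" idea concrete, here is a minimal Python sketch. It is not the patch from the video and it is not Max code; the function names (`beat_length_samples`, `stutter`) and the assumption that the tempo is given as a known BPM value are mine, for illustration only.

```python
def beat_length_samples(bpm: float, sample_rate: int = 44100) -> int:
    """Number of audio samples in one beat at the given tempo (assumed known)."""
    return round(sample_rate * 60.0 / bpm)


def stutter(samples: list, start: int, grain_len: int, hold_len: int) -> list:
    """Repeat a short grain for hold_len samples, then rejoin the original audio.

    The grain is samples[start:start + grain_len]. While the loop is "held",
    the grain repeats in place of the original audio. On release, playback
    resumes at the position the original would have reached by then, so the
    output has the same length as the input and stays aligned with the tempo.
    """
    grain = samples[start:start + grain_len]
    if not grain:
        raise ValueError("grain is empty; check start and grain_len")
    # Never hold past the end of the recording.
    hold_len = min(hold_len, len(samples) - start)
    held = [grain[i % len(grain)] for i in range(hold_len)]
    resume = start + hold_len
    return samples[:start] + held + samples[resume:]


if __name__ == "__main__":
    beat = beat_length_samples(120)   # one beat at 120 BPM
    audio = list(range(4 * beat))     # stand-in for four beats of audio
    # Loop a sixteenth-note grain for exactly one beat, starting on beat 2.
    out = stutter(audio, start=beat, grain_len=beat // 4, hold_len=beat)
    print(len(out) == len(audio))
```

Choosing `grain_len` and `hold_len` as fractions or multiples of one beat is what keeps the effect rhythmic; a real granular patch would also apply a short fade to each grain to avoid clicks, which this sketch leaves out.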

04-Blog Post

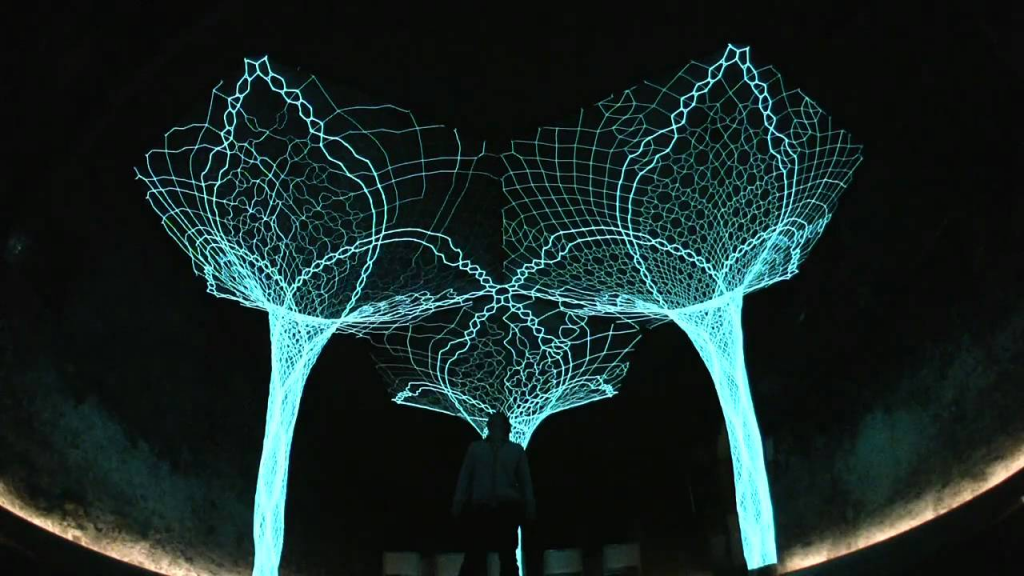

Sonumbra was made as an interactive space in 2006 by Loop.pH, and it responded to the movements of the audience. The project was not only beautiful to look at but also admirable in how it treated the aesthetic of the whole place, taking into account the many variables of a setting, including sound, placement, sizing, and lighting. I suppose the algorithms took in the movements of the visitors and mapped them onto the fiber lights that made up the structure, as well as onto computer-generated sound. The creators did an excellent job of bringing beauty to a structure that could otherwise have been rather chaotic or ugly-looking.


Blog 04: “Sound Machines”

By Ilia Urgen

Section B

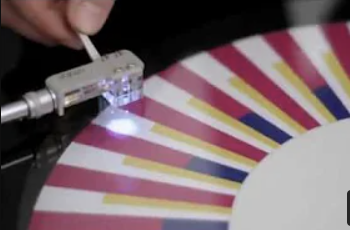

“A visual instrument to compose and control electronic music in a comprehensive and responsible way.” – MediaArtTube, January 28, 2012. I love how this modern audiovisual concept is based on a timeless design used throughout much of the 20th century: the record player.

I am truly fascinated and intrigued by this stunning piece of technology. According to the creator, Sound Machines consists of 3 units, each resembling a vinyl record player. Each unit can hold 3 tracks, just like traditional record players.

MediaArtTube, however, gives this classic design a 21st-century makeover. In a Sound Machine, the needle never touches a groove in the disc. Instead, signals received from the laser light of the “needle” are synced to a sequencer, which produces the sound output.

Sound Machines are definitely a cool way to mix various digitally transmitted tracks together, and I hope that we continue to see this technology used more widely in everyday life.

(YouTube link: https://www.youtube.com/watch?v=_gk9n-2lBb8)

LO 4 – Sound Art

I really like the albums made by TheFatRat, a music producer, because I really like his style and his unique use of special sound effects. (I’ll put a link for one of the songs he made (Unity) here) Most digital music producers use a DAW (digital audio workstation) to make their music, so I will briefly explain how that works. First, they lay down chords on specific tracks (which look like timelines) using pre-recorded sounds from different instruments. TheFatRat mostly uses pre-recorded game sound effects, usually electronic sounds from programmable sound generator chips. The producer places these sounds in order like building blocks and then arranges the resulting musical sentences so that they all fit together rhythmically. After arranging the notes, the producer has to adjust the audio level of each track (usually one track per instrument) so that everything sounds right together; this process is called the mix stage. In between the stages, the producer can easily add effects or change chords (for example, by layering notes on top of the base note), which keeps the music original and flexible to changes.
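The mix stage described above can be sketched in a few lines of Python. This is only an illustration of the idea of setting a level per track and summing the tracks, not how any particular DAW or producer does it; the function names (`db_to_gain`, `mix`) and the tiny sample lists are hypothetical.

```python
def db_to_gain(db: float) -> float:
    """Convert a fader level in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def mix(tracks: list, levels_db: list) -> list:
    """Scale each track by its level and sum the tracks sample by sample.

    tracks is a list of sample lists (one per instrument); levels_db gives
    one level in dB per track. Shorter tracks are treated as silent after
    they end.
    """
    if len(tracks) != len(levels_db):
        raise ValueError("need exactly one level per track")
    length = max((len(t) for t in tracks), default=0)
    out = [0.0] * length
    for track, db in zip(tracks, levels_db):
        gain = db_to_gain(db)
        for i, sample in enumerate(track):
            out[i] += gain * sample
    return out


if __name__ == "__main__":
    lead = [0.5, 0.5, -0.5, -0.5]   # stand-in for a chiptune lead
    drums = [1.0, 0.0, 1.0, 0.0]    # stand-in for a drum track
    # Keep the lead at full level and pull the drums down by 6 dB.
    print(mix([lead, drums], [0.0, -6.0]))
```

Levels are expressed in decibels here because that is how faders are usually labeled; lowering a track by 6 dB roughly halves its amplitude.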

anabelle’s blog 04

I’ve been a huge Vocaloid fan since middle school. Vocaloid is software used to simulate human singing, and it was originally created to help performers and producers add vocals to their music even if they did not have access to a real singer. However, Vocaloid extends beyond the software and includes “characters” for each voicebox/voicekit, with popular examples including Hatsune Miku and Kagamine Rin and Len. Initially released in 2004, after a project led by Kenmochi Hideki in Barcelona, the Vocaloid software continues to be updated and rereleased today, with numerous versions and iterations of the same characters that add new singing abilities. If they are popular enough, Vocaloids are also given 3D holograms that can hold real-life concerts in real-life venues (with really great turnout). I think the algorithms behind Vocaloid are fairly simple: a commissioned singer records the base notes for a character, and producers can then modify and edit those notes. What I love about Vocaloid is how each character is given vocal “limitations” that produce its own unique sound. For example, Teto is best suited to soft, low-energy ballads, and Kaito’s deeper voice sounds distorted in higher ranges.

Here’s an example of a Vocaloid concert (the turnout is actually crazy for these things):

Link to Vocaloid website (anyone can buy the software): https://www.vocaloid.com/en/
