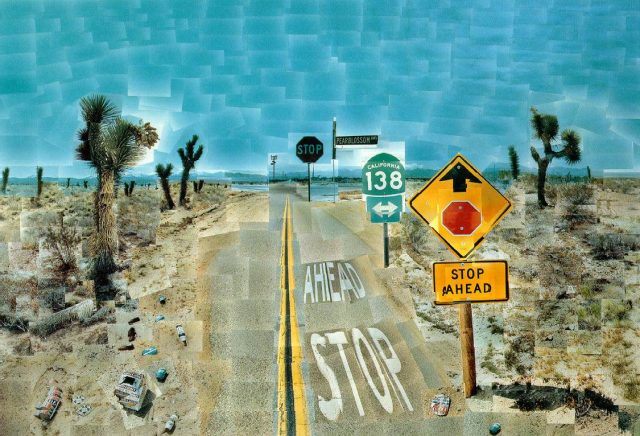

How can the medium of capture influence a typology?

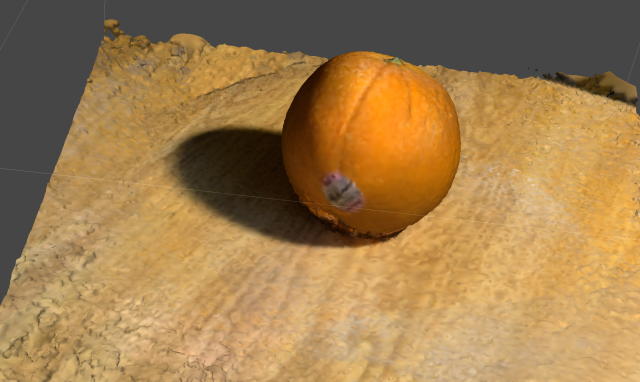

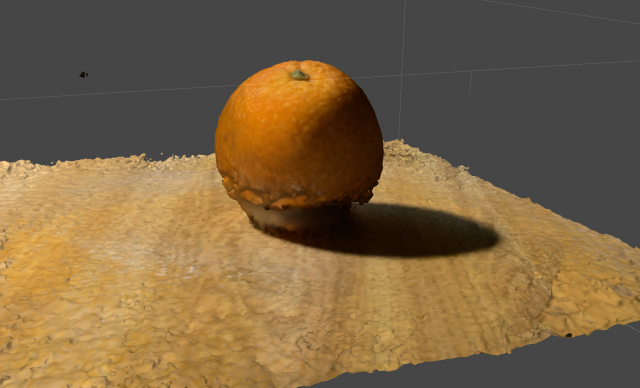

A typology is as much about how its subjects are captured as about what is captured. The Venus typology in the chapter is a good example: it is a collection of photographs of Venus, so it is about Venus and also about the way Venus has been captured, since the capture is what lets us see any representation of Venus at all. The medium of capture is necessarily part of the typology.

The medium is also part of the typology in a second sense: it determines what the typology includes. Photographing people's forearms produces a typology of the visible exterior of forearms, while X-raying them produces a typology of the bones inside. In this case the typology is dictated by the capturing device and by what it is able to "sense."

Is the medium objective? Objectivity is the separation of interpretation and bias from perception: pure perception, without any top-down influence. A capture method's perception is objective in this sense, but the choice of what it captures is not. The capture method was designed to do something, to have a particular kind of perception, and there is nothing objective about that design. The capture itself, however, is perfectly objective: a transformation of information without interpretation. Yet it exists within a system that was built for a purpose and is therefore biased in what it perceives.

Another way to think about this is through one aspect of human perception: seeing. There is seeing in the sense of light striking the rods and cones in the retina, and there is what is seen after that signal has been processed in the brain, shaped by the many top-down influences humans bring to it. In the first sense, seeing is the conversion of light into action potentials; the experience of seeing is something different, biased by other aspects of human cognition. I think we can separate the capture from the perception, the purely objective from the interpretive, even though they exist within the same system.