Project title: Pawterns

Description: An exploration in generating novel patterns created by running Image Segmentation on YouTube videos.

60-461/761: Experimental Capture

CMU School of Art / IDeATe, Spring 2020 • Profs. Golan Levin & Nica Ross

Catterns

A project to turn cats and dogs into tiled wallpapers.

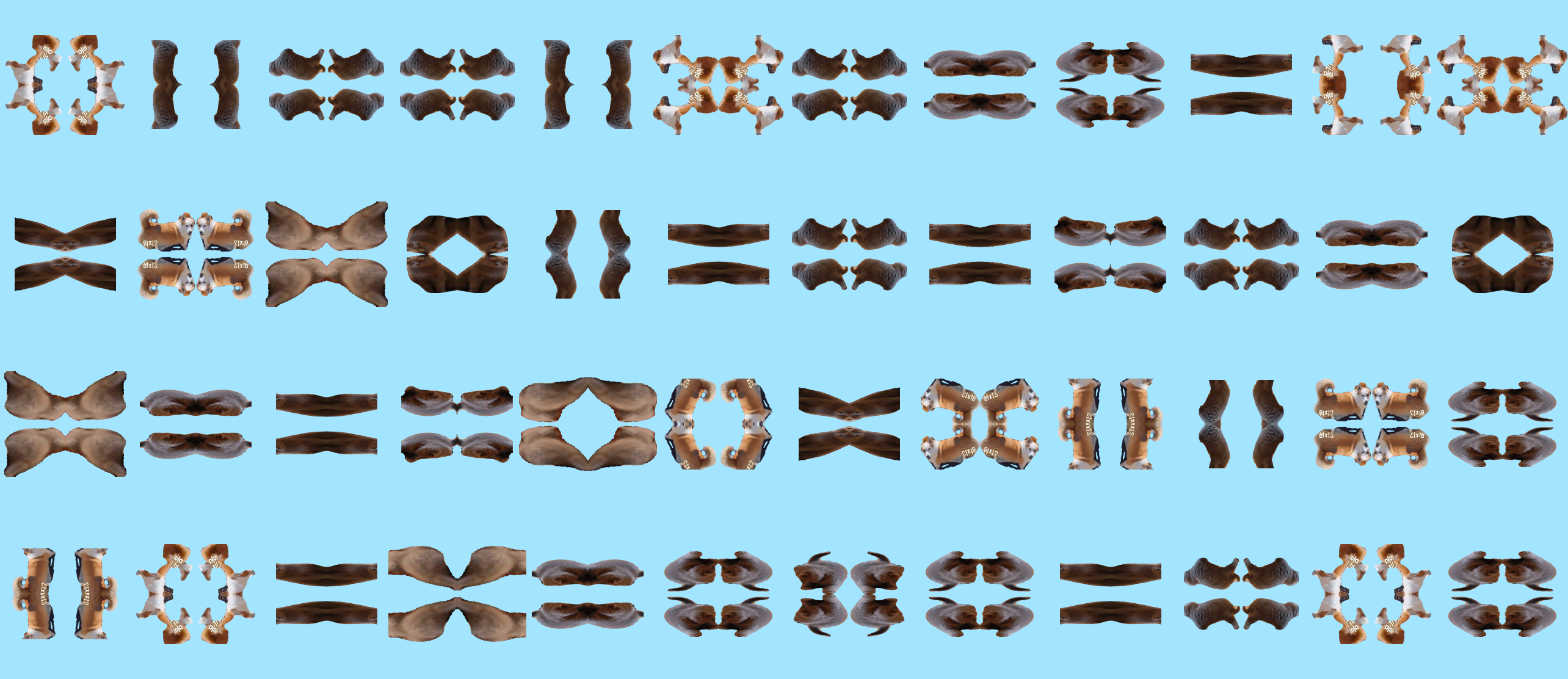

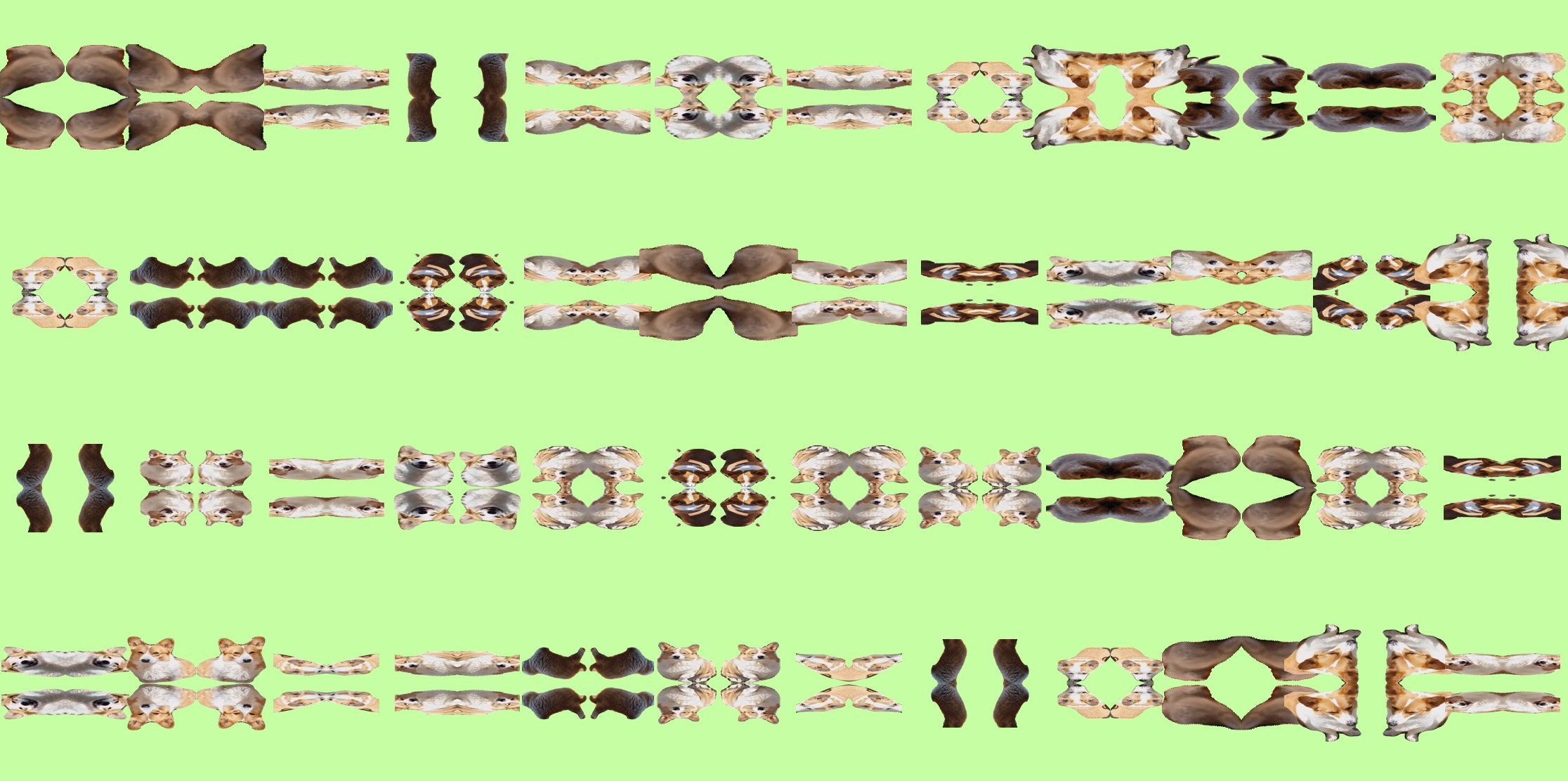

This project is a technical and artistic exploration in creating tiled wallpapers by using Detectron2 to segment cute animal videos. The project lives in a Google Colab notebook: paste in a YouTube video link, and the notebook pulls a random frame from the video and uses it to generate the collage. At the end of the process, the segmented images are assembled into the final media object.

Throughout the semester, I have been interested in the role of data, machine learning, and APIs in my artistic practice. This focus can be seen in my initial project for the class, which used WPRDC’s Parcels ‘N At database to explore small estates in Pittsburgh, and then locations with the address of 0. I then moved on to machine learning, using Monodepth2 to create a depth visualization of a location that does not exist.

Though I am proud of this work, I did fall short in a few areas. I had originally wanted to create a web interface and run this application as a user-facing tool, and I still intend to explore that possibility. I had also originally planned on having it take an ‘interesting’ frame from the video, but right now it picks one at random, meaning I occasionally have to re-roll the image. Overall, I consider the output interesting because it reads as a geometric pattern from a distance, while up close it becomes something novel.

Much of this project was spent combing through documentation to understand the input and output data formats in Numpy and Detectron2.

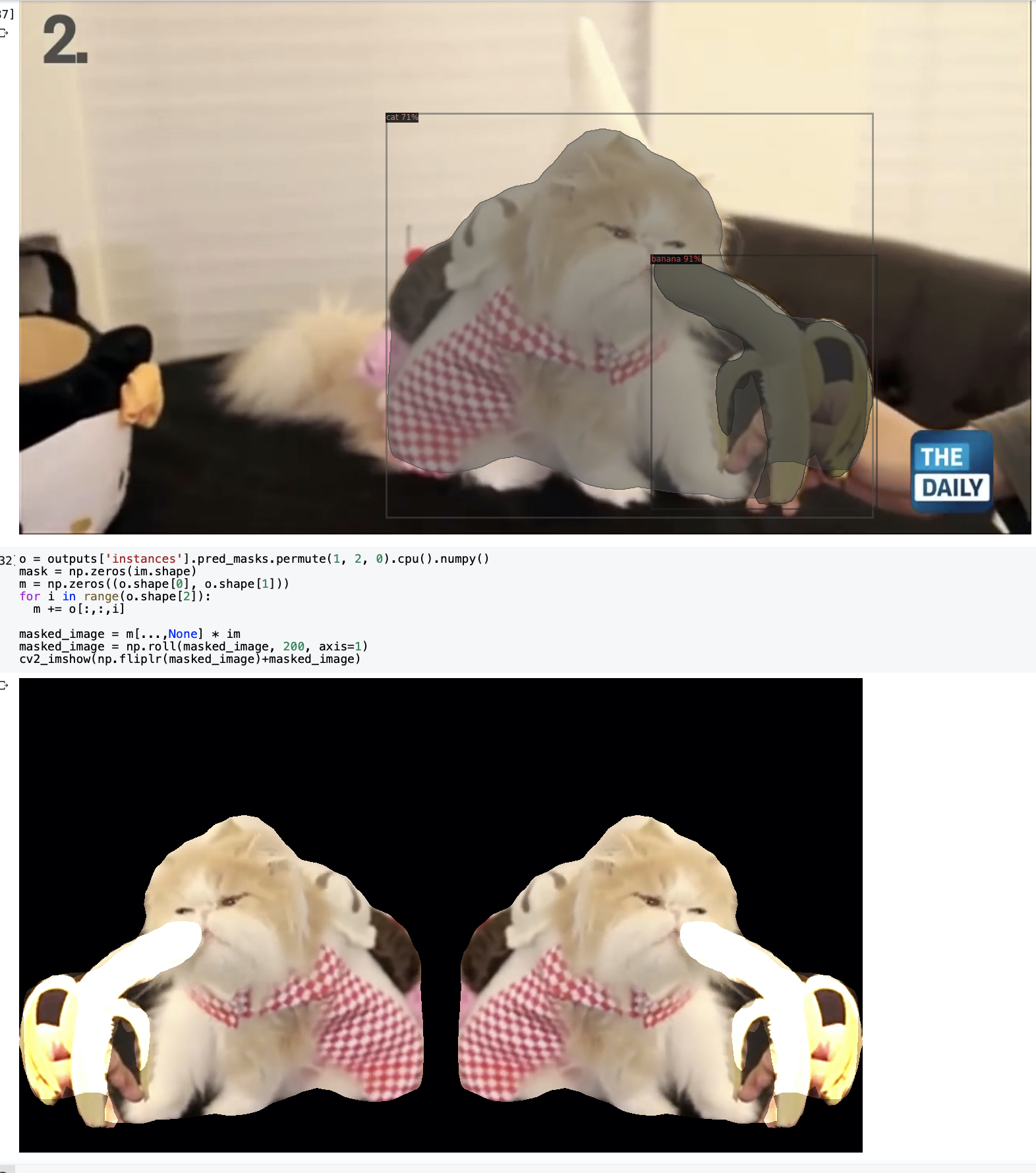

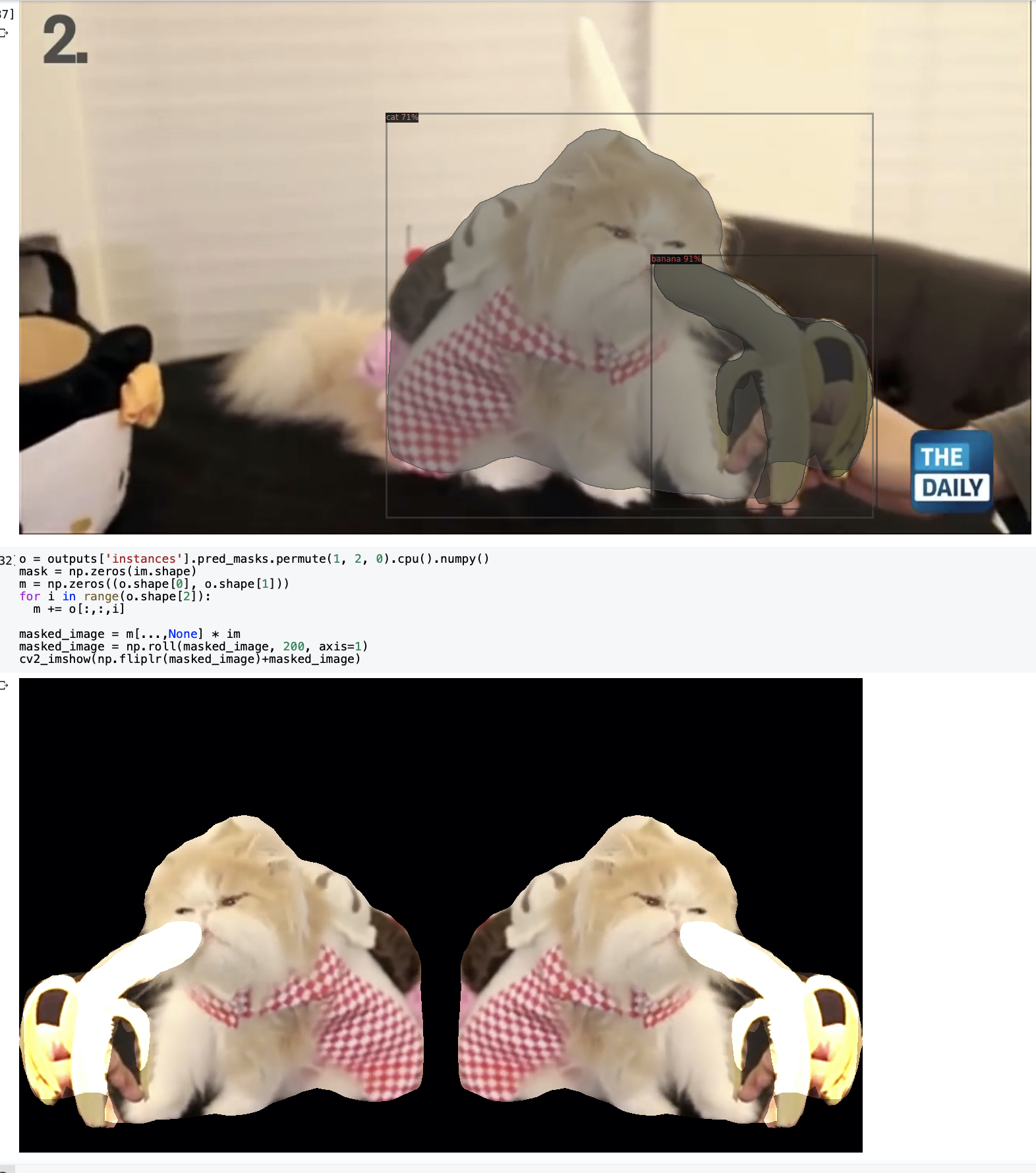

The input pipeline itself is simple enough: download a video from the supplied link with the youtube-dl Python library, run it through FFmpeg, then pull a frame and run Detectron2’s segmentation on it. Finally, take those results and use PIL to format them as an image.
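A minimal sketch of the frame-grabbing step, assuming the video has already been downloaded (for example with youtube-dl) to a local file and that its duration in seconds is known. The function name `random_frame_command` and the file names are hypothetical, not taken from the notebook; the flags are standard FFmpeg options (`-ss` to seek, `-frames:v 1` to write a single frame).

```python
import random
import shlex


# Hypothetical helper (not from the original notebook): builds, but does not run,
# an FFmpeg command that grabs one frame at a random timestamp.
def random_frame_command(video_path, duration_s, out_path="frame.png", rng=None):
    rng = rng or random.Random()
    # Stay a second away from the very end so the seek lands on a real frame.
    t = rng.uniform(0.0, max(duration_s - 1.0, 0.0))
    return [
        "ffmpeg",
        "-y",               # overwrite out_path if it already exists
        "-ss", f"{t:.2f}",  # seek to the random timestamp (placed before -i)
        "-i", video_path,
        "-frames:v", "1",   # write exactly one video frame
        out_path,
    ]


cmd = random_frame_command("video.mp4", duration_s=42.0, rng=random.Random(0))
print(shlex.join(cmd))
# To actually grab the frame: subprocess.run(cmd, check=True)
```

Re-rolling the image is then just calling the function again without a fixed seed. Putting `-ss` before `-i` lets FFmpeg seek in the input rather than decoding everything up to the timestamp.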

However, my experience with Python was more focused on web scraping, data visualization, and using it in conjunction with Houdini or Grasshopper. I did know a little bit about Numpy, which helped when getting started, but actually understanding the data turned out to be a process.

At first, I could only export images in black and white, with no alpha segmentation.

After some help from Connie Ye, I was able to understand more about how Numpy arrays pass data around, and started getting actual colorful outputs.

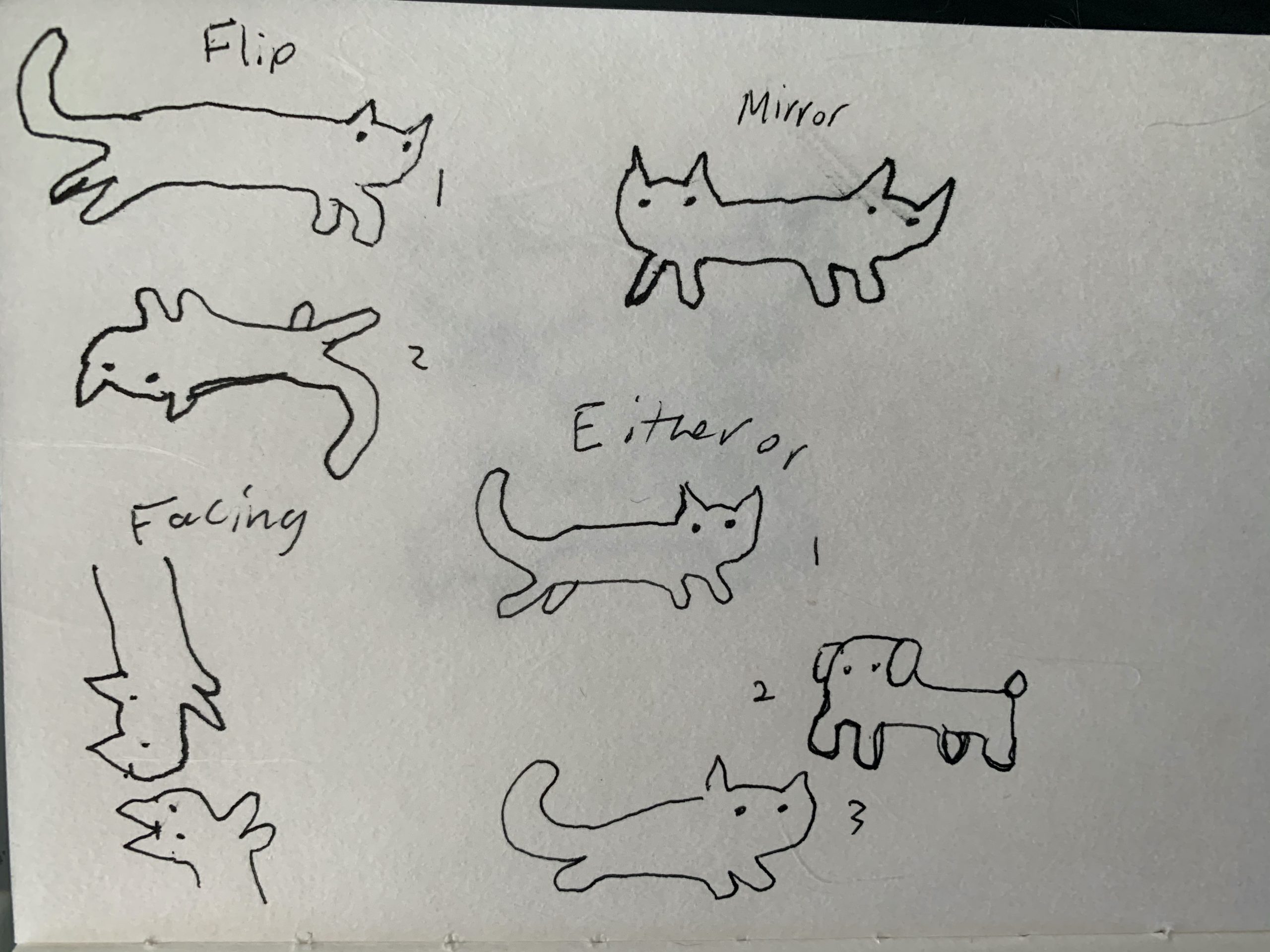

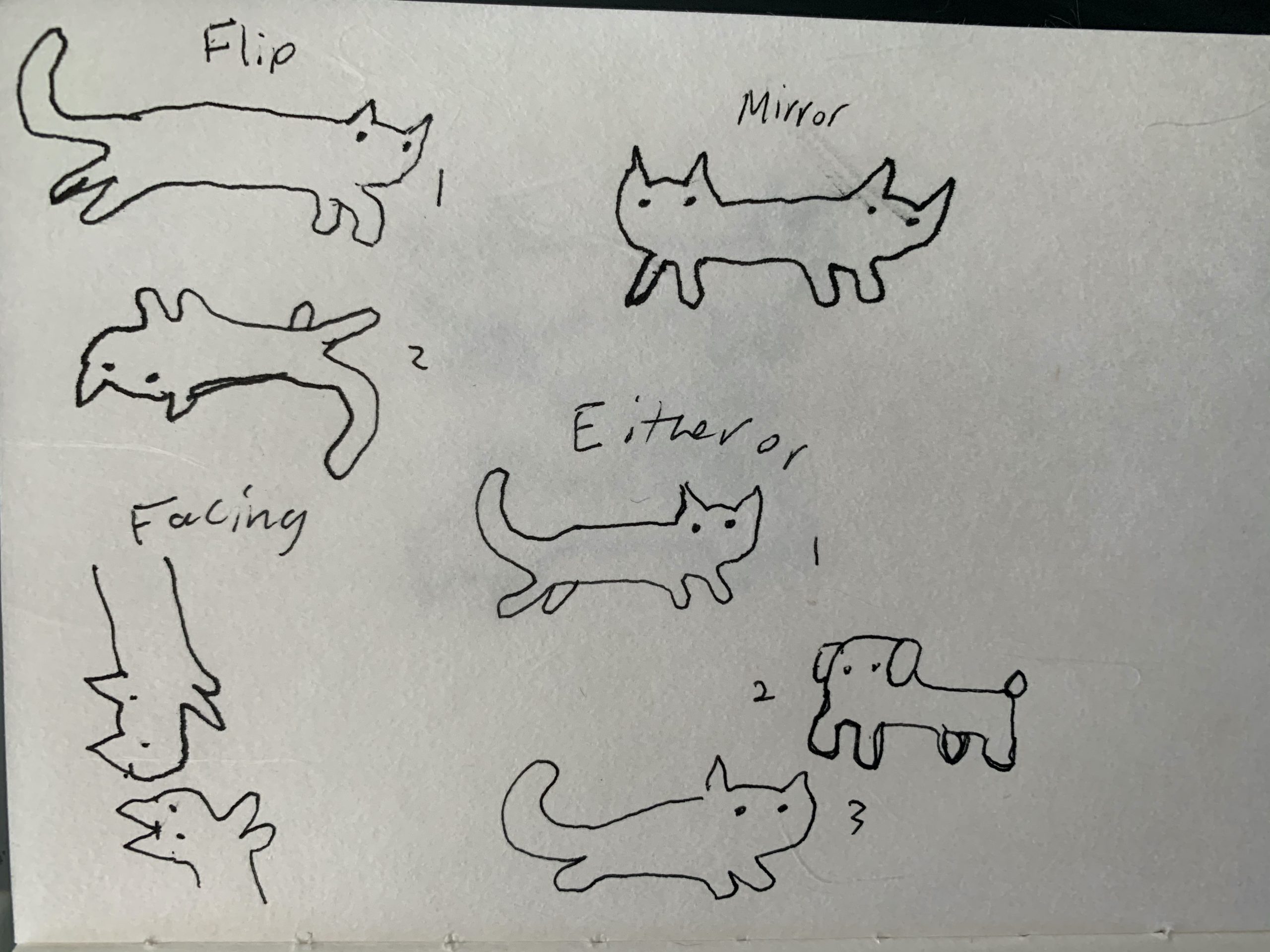
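One way to get from a segmentation mask to a colorful cut-out with real transparency, sketched in NumPy. It assumes the mask has already been converted to a boolean (H, W) array (for instance one slice of `outputs["instances"].pred_masks.cpu().numpy()` in Detectron2) and that the frame is an RGB `uint8` array of the same height and width. `mask_to_rgba` is a hypothetical helper, not part of Detectron2 or the original notebook.

```python
import numpy as np


# Hypothetical helper: turn a frame plus a boolean mask into an RGBA cut-out.
def mask_to_rgba(frame_rgb, mask):
    """frame_rgb: uint8 (H, W, 3); mask: bool (H, W), True on the object."""
    if frame_rgb.shape[:2] != mask.shape:
        raise ValueError("mask must match the frame's (H, W)")
    # Opaque (255) inside the mask, fully transparent (0) everywhere else.
    alpha = mask.astype(np.uint8) * 255
    # dstack treats the 2-D alpha as (H, W, 1): (H, W, 3) + (H, W, 1) -> (H, W, 4).
    return np.dstack([frame_rgb, alpha])
```

Saving the result with Pillow via `Image.fromarray(rgba).save("cutout.png")` keeps the alpha channel in the PNG. If the frame was loaded with OpenCV, it is in BGR order and would need `frame[..., ::-1]` before this step.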

At the same time, I began exploring how to format my output.

I also spent a lot of time browsing GitHub trying to understand how to read the segment classifications from Detectron’s output. As a last little bump in the road, after taking a day off from the project, I forgot that the image arrays are organized as (H, W, Color), and spent a while debugging improperly masked images as a result.
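The (H, W, Color) ordering matters as soon as a 2-D mask meets a 3-channel image. The sketch below, built around a hypothetical `crop_to_mask` helper, zeroes out the background and crops to the object’s bounding box. Adding a trailing axis (`mask[..., None]`) lets an (H, W) mask broadcast across the color channels. A mask accidentally built as (W, H) would raise a broadcasting error on a non-square frame, but on a square frame it would silently mask the wrong pixels.

```python
import numpy as np


# Hypothetical helper: mask out the background and crop to the object.
def crop_to_mask(image, mask):
    """image: (H, W, C) array indexed [row, column, channel] = [y, x, c];
    mask: bool (H, W)."""
    rows = np.any(mask, axis=1)  # which rows (y) contain the object
    cols = np.any(mask, axis=0)  # which columns (x) contain the object
    if not rows.any():
        raise ValueError("mask is empty")
    y0, y1 = np.flatnonzero(rows)[[0, -1]]
    x0, x1 = np.flatnonzero(cols)[[0, -1]]
    # mask[..., None] has shape (H, W, 1) and broadcasts over the color axis,
    # zeroing background pixels in every channel.
    masked = image * mask[..., None]
    return masked[y0:y1 + 1, x0:x1 + 1]
```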

After that it was relatively smooth sailing in working through the composition.

POST-REVIEW UPDATE:

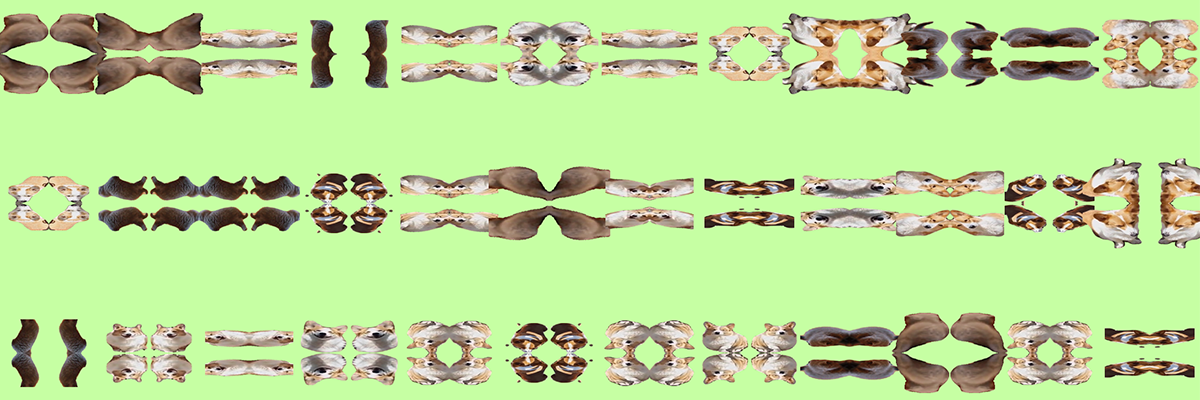

I thought about the criticism I got in the review, and realized I could make the patterns cuter mostly through changes in scale. Here are some of the successes.
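Since scale turned out to matter most, here is a minimal NumPy sketch of nearest-neighbour resizing for a cut-out, which keeps pixel edges crisp instead of blurring them. Pillow’s `Image.resize` with nearest-neighbour resampling would be the usual tool; `rescale_nearest` is a hypothetical helper shown only to make the index arithmetic explicit, not a description of how the notebook scales tiles.

```python
import numpy as np


# Hypothetical helper: nearest-neighbour resize of an (H, W, C) array.
def rescale_nearest(image, factor):
    h, w = image.shape[:2]
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    # For each output row/column, pick the source row/column it falls into.
    rows = (np.arange(new_h) * h / new_h).astype(int)
    cols = (np.arange(new_w) * w / new_w).astype(int)
    # Broadcasting (new_h, 1) against (1, new_w) gives an (new_h, new_w, C) result.
    return image[rows[:, None], cols[None, :]]
```

Because the channel axis is carried along untouched, this works the same for RGB frames and RGBA cut-outs, so the alpha channel is resized together with the colors.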

I’ve managed to segment with color and can also export the images with alpha data. The notebook only exports objects matching a given tag (using the COCO dataset’s class labels). The plan now is to bring the images into something like PIL and collage them there. I can also move towards being more selective about which frames are chosen.
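Exporting only objects with a given tag boils down to filtering the predicted instances by class. A minimal sketch, assuming the predictions have been moved to NumPy (in Detectron2, roughly `inst = outputs["instances"].to("cpu")` followed by `inst.pred_classes.numpy()`, `inst.pred_masks.numpy()` and `inst.scores.numpy()`) and that the class names come from the dataset metadata, e.g. `MetadataCatalog.get(cfg.DATASETS.TRAIN[0]).thing_classes`. The helper name `masks_for_tag` and the `min_score` threshold are hypothetical.

```python
import numpy as np


# Hypothetical helper: keep only the masks whose class name matches `tag`.
def masks_for_tag(pred_classes, pred_masks, scores, thing_classes, tag, min_score=0.5):
    """pred_classes: int (N,); pred_masks: bool (N, H, W); scores: float (N,);
    thing_classes: list mapping class index -> name (e.g. "cat", "dog")."""
    if tag not in thing_classes:
        raise ValueError(f"unknown tag: {tag!r}")
    target = thing_classes.index(tag)
    # Boolean selection along the instance axis keeps the (H, W) mask shape.
    keep = (pred_classes == target) & (scores >= min_score)
    return pred_masks[keep]
```

To cut out every matching animal at once, the selected masks can be merged with `np.any(masks, axis=0)` into a single (H, W) mask.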

Since my last post, I have begun sketching ideas about layout and tiling patterns for the images.
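As a starting point for tiling, here is a minimal sketch that computes where each tile would go in a simple grid or a “brick” layout, where every other row is shifted by half a tile. `tile_positions` is a hypothetical helper and the brick offset is only one example layout, not the one the final collages use.

```python
# Hypothetical helper: top-left (x, y) positions for a tiled wallpaper.
def tile_positions(canvas_w, canvas_h, tile_w, tile_h, offset_rows=True):
    positions = []
    for row, y in enumerate(range(0, canvas_h, tile_h)):
        # Odd rows are shifted right by half a tile when offset_rows is set.
        shift = tile_w // 2 if offset_rows and row % 2 == 1 else 0
        # A shifted row starts one tile further left so the left edge stays covered.
        x_start = shift - tile_w if shift else 0
        for x in range(x_start, canvas_w, tile_w):
            positions.append((x, y))
    return positions


print(tile_positions(100, 40, 50, 20))
```

Tiles that hang off the left or right edge are kept on purpose, so the pattern reads as continuous when the canvas is cropped; each (x, y) is simply where one cut-out would be pasted.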

I also managed to segment out content from a frame using Numpy!

I am mostly on track with my project. I managed to make a Colab notebook based on Detectron2’s tutorial notebook which has:

What I need to do next is:

I am still deciding on the style of the output collages.

For the end of the semester I’m thinking of using Detectron2 to create a novel tool for collage.

Right now, my idea is to take a YouTube link to one’s favorite cute animal video, use FFmpeg to pull frames from it, run Detectron2 to segment those frames, and finally mask the images (probably with GPU numpy). Then I hope to translate the cut-outs and arrange them into a collage.
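Translating cut-outs into a collage means pasting each RGBA image onto a shared canvas at some offset. Pillow can do this with `paste` or `alpha_composite`; the sketch below instead spells out the standard “over” blend by hand in NumPy, and clips cut-outs that hang off the canvas edge. `paste_rgba` is a hypothetical helper, not the project’s implementation.

```python
import numpy as np


# Hypothetical helper: alpha-composite an RGBA cut-out onto an RGB canvas in place.
def paste_rgba(canvas, cutout, x, y):
    """canvas: uint8 (H, W, 3); cutout: uint8 (h, w, 4); (x, y) = top-left corner,
    which may be negative or partly off-canvas."""
    H, W = canvas.shape[:2]
    h, w = cutout.shape[:2]
    # Overlapping region in canvas coordinates.
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + w, W), min(y + h, H)
    if x0 >= x1 or y0 >= y1:
        return canvas  # the cut-out lies entirely off the canvas
    # The matching region in cut-out coordinates.
    src = cutout[y0 - y:y1 - y, x0 - x:x1 - x].astype(np.float32)
    dst = canvas[y0:y1, x0:x1].astype(np.float32)
    alpha = src[..., 3:4] / 255.0  # (h', w', 1) broadcasts over the RGB channels
    blended = src[..., :3] * alpha + dst * (1.0 - alpha)
    canvas[y0:y1, x0:x1] = np.round(blended).astype(np.uint8)
    return canvas
```

Because the canvas is modified in place, a collage is just a loop of `paste_rgba` calls over a list of (cut-out, x, y) placements.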

My goal for next week is to take a segmented YouTube video and mask out the background as a test.

I spend most of my time on tutorial sites, picking apart code. This was my reading list last week.

My Amazon suggested purchases. There’s something funny about these small kitschy items compared to the expensive graphics card.

My rat persona made with the amazing Algorat Rat Maker.

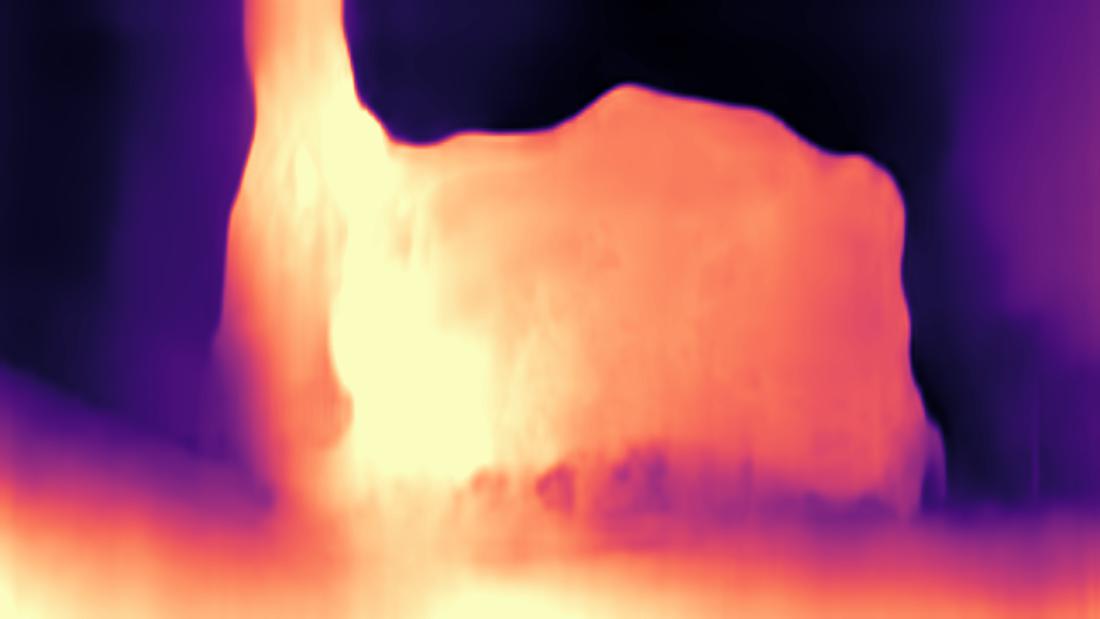

I ended up using Monodepth2, a machine learning library made by Niantic Labs and UCL.

I created a Google Colab notebook to make the software easier to use, and used it to convert part of a timelapse into this depth visualization:

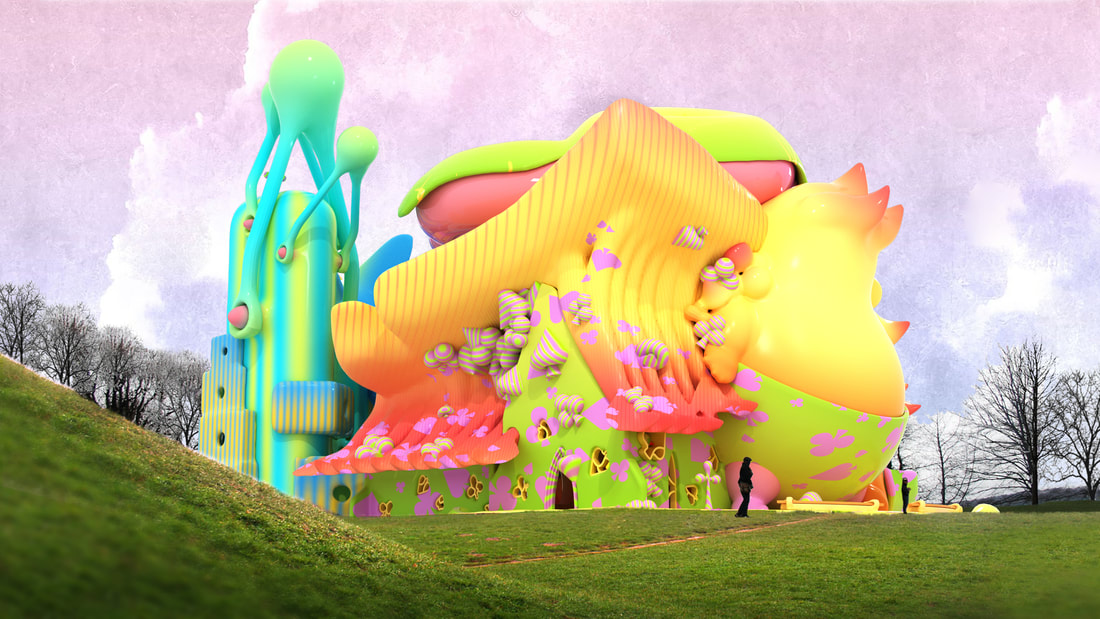

I also tried running the algorithm on a scene that doesn’t exist from SCI-Arc student Siyao Zheng’s thesis project.

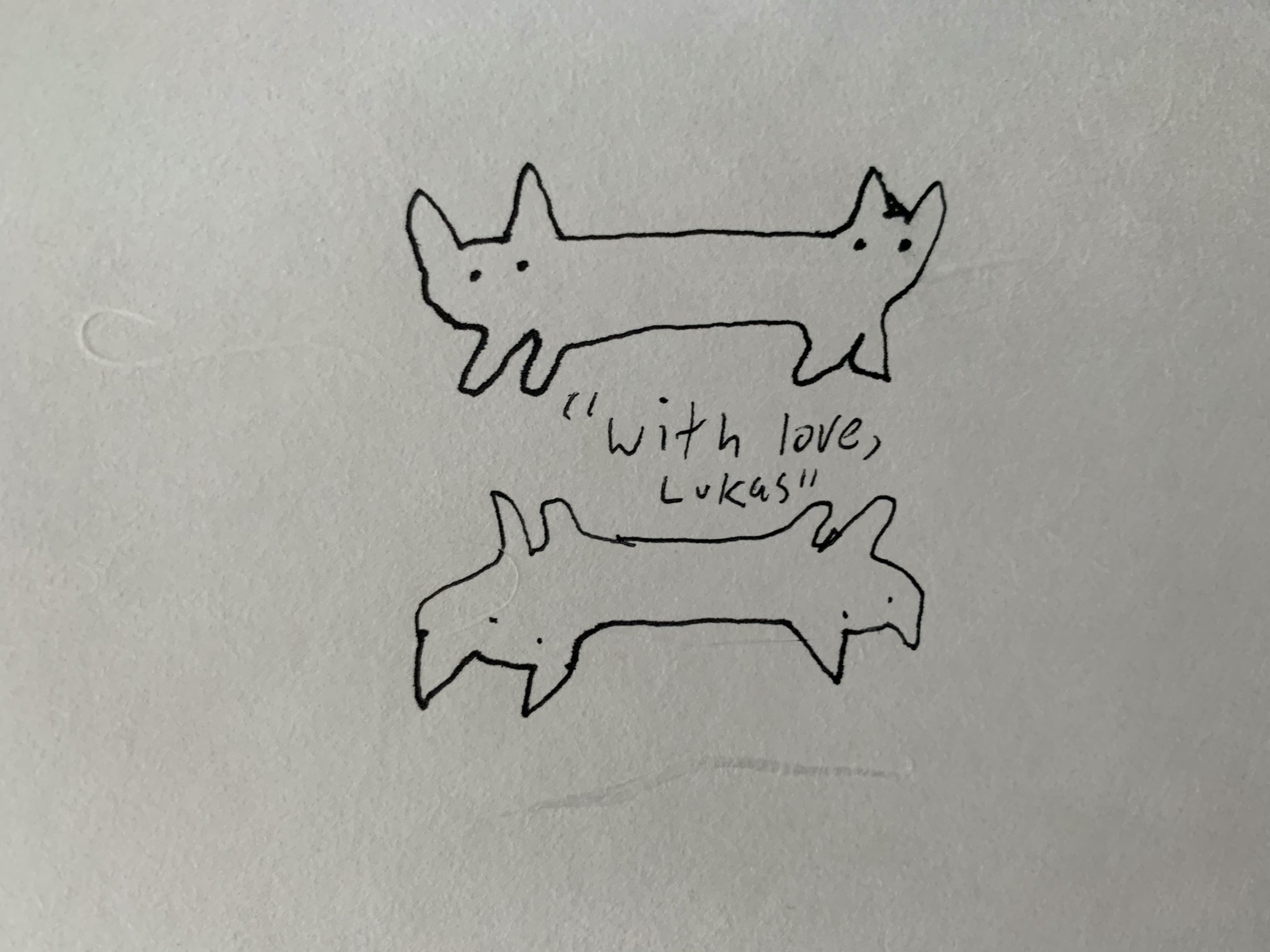
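Monodepth2 predicts a disparity (inverse depth) map, which has to be normalized before it can be viewed as an image. If I read its example script `test_simple.py` correctly, it clips at the 95th percentile and applies matplotlib’s magma colormap; the sketch below does a grayscale equivalent in NumPy. The function name and the percentile default are assumptions, not the library’s API.

```python
import numpy as np


# Hypothetical helper: map an (H, W) disparity map to an 8-bit grayscale image.
def disparity_to_gray(disp, vmax_percentile=95):
    disp = np.asarray(disp, dtype=np.float32)
    vmin = float(disp.min())
    # Clip at a high percentile so a few very near pixels don't wash out the scene.
    vmax = float(np.percentile(disp, vmax_percentile))
    if vmax <= vmin:
        return np.zeros(disp.shape, dtype=np.uint8)  # flat map: nothing to show
    norm = np.clip((disp - vmin) / (vmax - vmin), 0.0, 1.0)
    return (norm * 255).round().astype(np.uint8)
```

Brighter pixels are nearer, since disparity grows as depth shrinks; swapping the final step for a colormap gives the purple-to-yellow look of the visualizations above.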

I ended up calling my twin brother and following the prompt:

Ask your family to describe what you do.

I think there’s something sweet about this prompt: at its core, when family members describe you, they want to convey their love.

I tried to make some ambient visual accompaniment to this audio, but decided to just leave it as a sound clip after attempting a few iterations.

This post was based on an email to Golan.

I’m pretty interested in the tools of the creative tech trade, and there are a lot of tools that I’m interested in or have used that I can recommend as helpful for this course.