Catterns

A project to turn cats and dogs into tiled wallpapers.

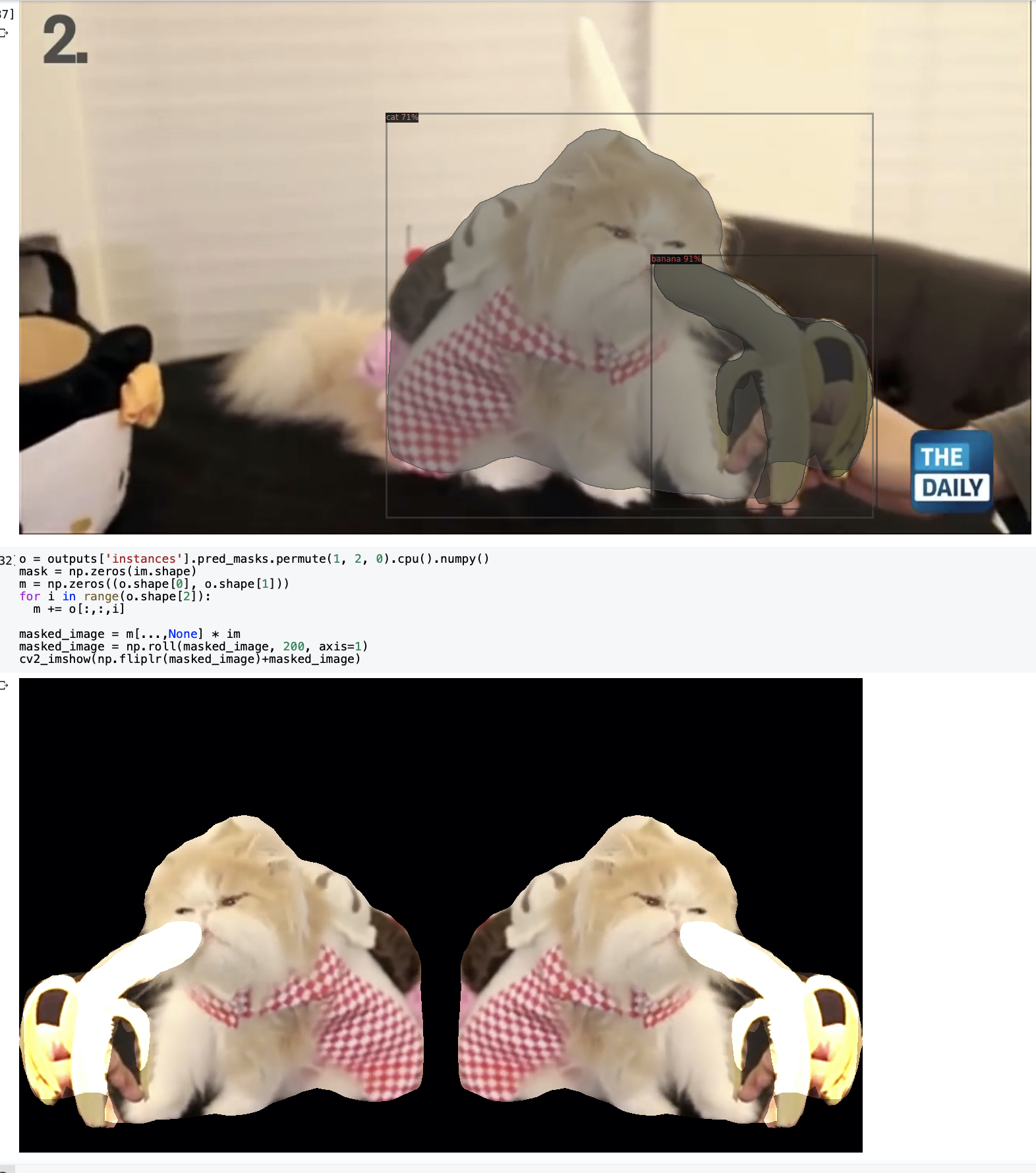

This project is a technical and artistic exploration of creating tiled wallpapers by using Detectron2 to segment frames from cute animal videos. The project lives in a Google Colab notebook: you paste in a YouTube link, the notebook pulls a random frame from the video, and that frame is used to generate the collage. At the end of the process, the segmented images are assembled into the final wallpaper image.

Throughout the semester, I have been interested in the role of data, machine learning, and APIs in my artistic practice. This focus can be seen in my initial project for the class, which used WPRDC’s Parcels ‘N At database to explore small estates in Pittsburgh, and then locations whose address is 0. I then moved on to machine learning, using Monodepth2 to create a depth visualization of a location that does not exist.

Though I am proud of this work, I did fall short in a few areas. I originally wanted to create a web interface and run this application as a user-facing tool, and I still intend to explore that possibility. I had also planned on having it pick an ‘interesting’ frame from the video, but right now it picks one at random, so I occasionally have to re-roll the image. Overall, I find the output interesting because it reads differently depending on distance: from far away the pattern reads as geometric, while up close it becomes novel.

Much of this project was spent combing through documentation to understand the input and output data formats of NumPy and Detectron2.

The input pipeline itself is simple enough: use the youtube-dl Python library to download a video from the supplied link, run it through FFmpeg, pull a frame, and run Detectron2’s segmentation on it. Finally, take those results and use PIL to format them as an image.
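The download-and-grab step can be sketched as below. This is a hedged illustration rather than the notebook's code: it shells out to the youtube-dl, ffprobe, and ffmpeg command-line tools through `subprocess` instead of calling the youtube-dl Python library, and the helper names (`download_cmd`, `duration_cmd`, `random_frame_cmd`, `grab_random_frame`) and filenames `video.mp4` and `frame.png` are hypothetical. Like the current project, it picks a uniformly random timestamp.

```python
from __future__ import annotations

import random
import subprocess


def download_cmd(url: str, out_path: str = "video.mp4") -> list[str]:
    """youtube-dl invocation: -f picks the best mp4 format, -o sets the filename."""
    return ["youtube-dl", "-f", "mp4", "-o", out_path, url]


def duration_cmd(video_path: str) -> list[str]:
    """ffprobe invocation that prints only the container duration in seconds."""
    return [
        "ffprobe", "-v", "error",
        "-show_entries", "format=duration",
        "-of", "default=noprint_wrappers=1:nokey=1",
        video_path,
    ]


def random_frame_cmd(video_path: str, duration: float, out_path: str = "frame.png",
                     rng: random.Random | None = None) -> list[str]:
    """ffmpeg invocation that seeks to a random time and writes exactly one frame."""
    rng = rng or random.Random()
    # Stay a second away from the end, where a seek can land past the last frame.
    t = rng.uniform(0.0, max(duration - 1.0, 0.0))
    return ["ffmpeg", "-y", "-ss", f"{t:.3f}", "-i", video_path,
            "-frames:v", "1", out_path]


def run(cmd: list[str]) -> str:
    """Run a command, raise on failure, and return its stdout as text."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


def grab_random_frame(url: str) -> str:
    """Download the video, measure it, and save one random frame (hypothetical helper)."""
    run(download_cmd(url))
    duration = float(run(duration_cmd("video.mp4")).strip())
    run(random_frame_cmd("video.mp4", duration))
    return "frame.png"
```

In a Colab cell where the tools are installed, calling `grab_random_frame(url)` again is the "re-roll". Building each command in its own function makes it possible to check the arguments without downloading anything.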

However, my Python experience was mostly in web scraping, data visualization, and using it alongside Houdini or Grasshopper. I knew a little NumPy, which helped me get started, but actually understanding the data took some work.

At first, I could only export images in black and white, without any alpha (transparency) masking.

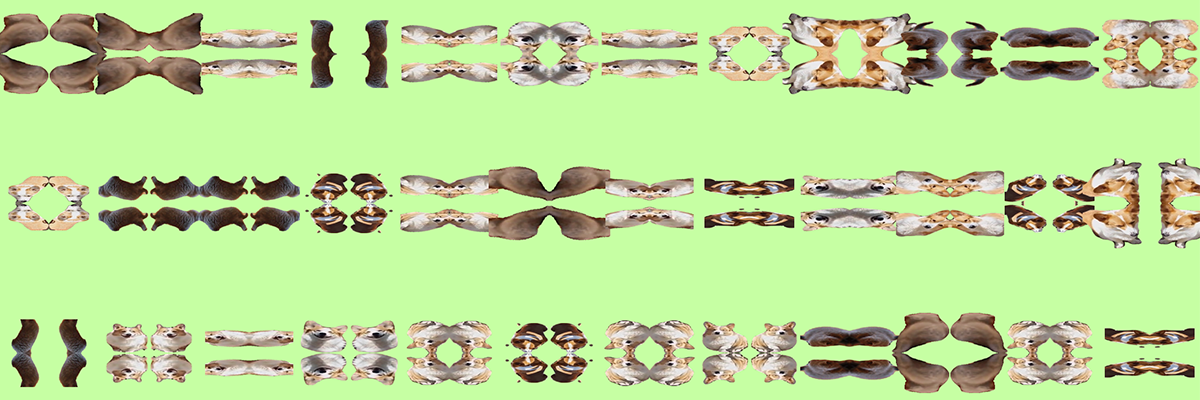

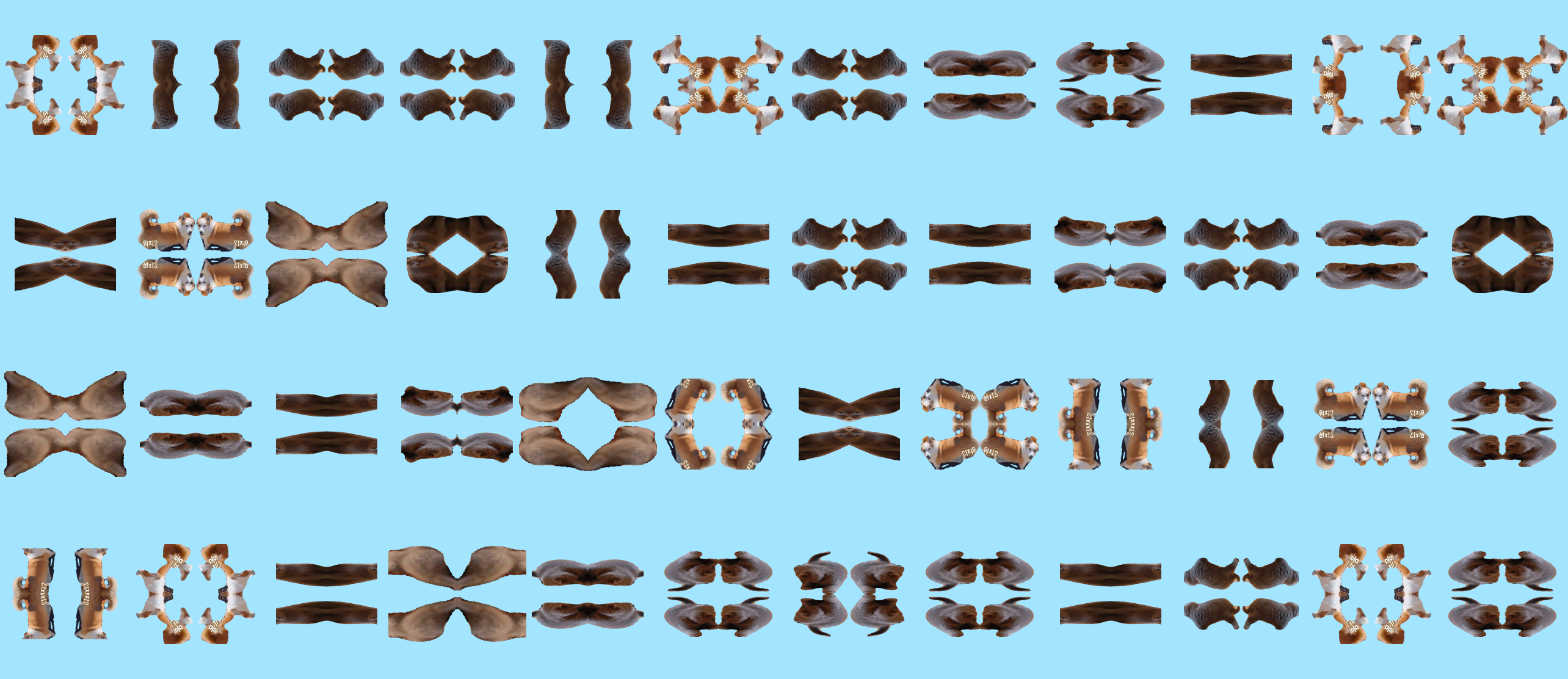

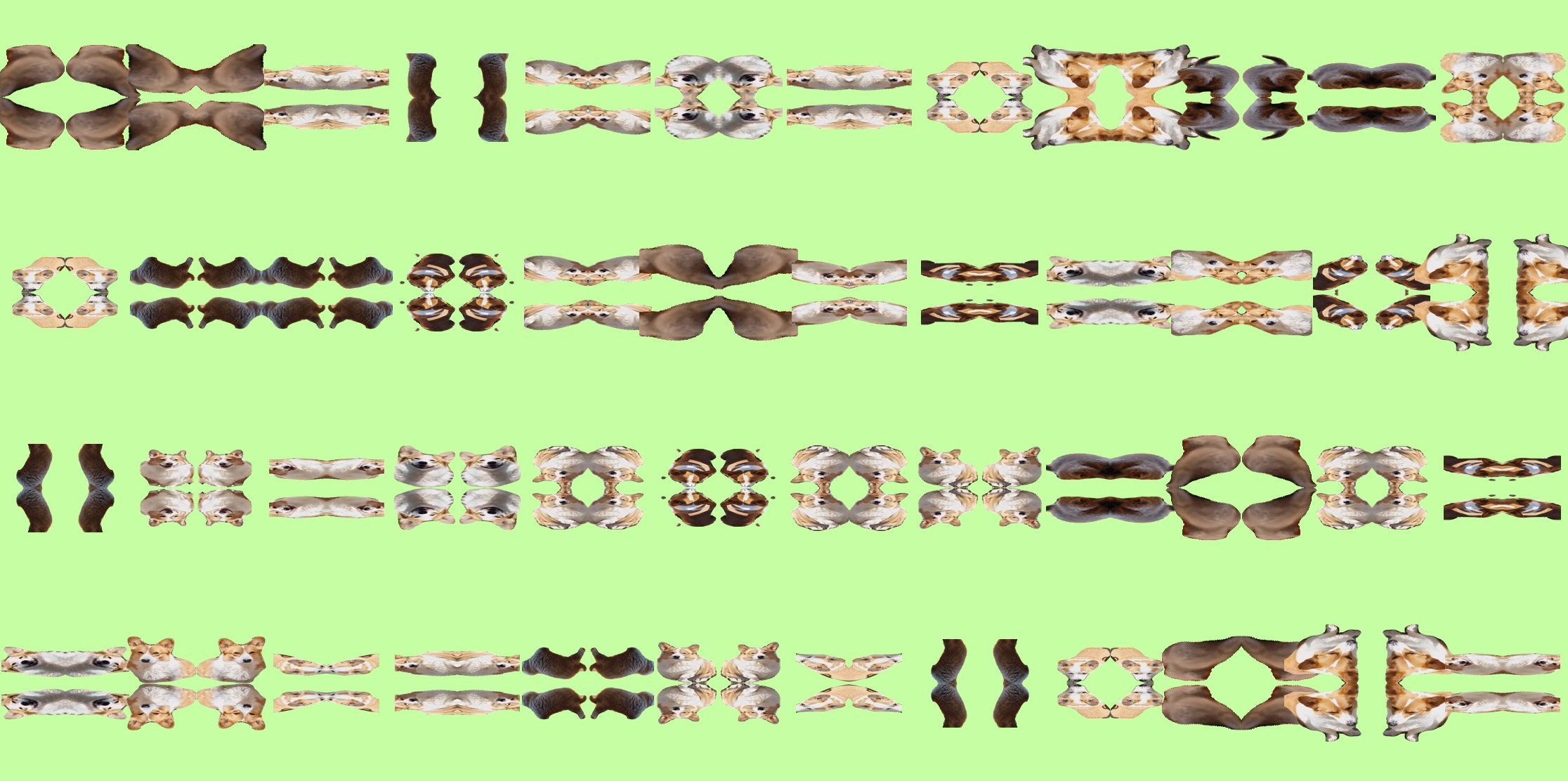

With some help from Connie Ye, I came to understand more about how NumPy arrays pass data around, and started getting actual colorful outputs.
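One plausible cause of black-and-white output is saving the boolean segmentation mask itself instead of using it to cut the animal out of the frame. The sketch below assumes an `(H, W, 3)` uint8 frame and an `(H, W)` boolean mask from Detectron2: it keeps the frame's colour and turns the mask into an alpha channel. The name `cutout_rgba` and the BGR default are assumptions; Detectron2's `DefaultPredictor` expects BGR input by default, which is what OpenCV's `cv2.imread` produces.

```python
import numpy as np


def cutout_rgba(frame: np.ndarray, mask: np.ndarray, bgr: bool = True) -> np.ndarray:
    """Combine an (H, W, 3) uint8 frame and an (H, W) boolean mask into (H, W, 4) RGBA."""
    if frame.shape[:2] != mask.shape:
        raise ValueError(f"frame {frame.shape} and mask {mask.shape} differ in H, W")
    # BGR frames (OpenCV / DefaultPredictor convention) are flipped to RGB for PIL.
    rgb = frame[..., ::-1] if bgr else frame
    # Opaque where the animal is, fully transparent everywhere else.
    alpha = np.where(mask, 255, 0).astype(np.uint8)
    # dstack treats the (H, W) alpha as (H, W, 1) and appends it as the 4th channel.
    return np.dstack([rgb, alpha])
```

The result can be passed to PIL with `Image.fromarray(rgba)`, which infers RGBA mode from the four uint8 channels, and saved as a PNG to keep the transparency.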

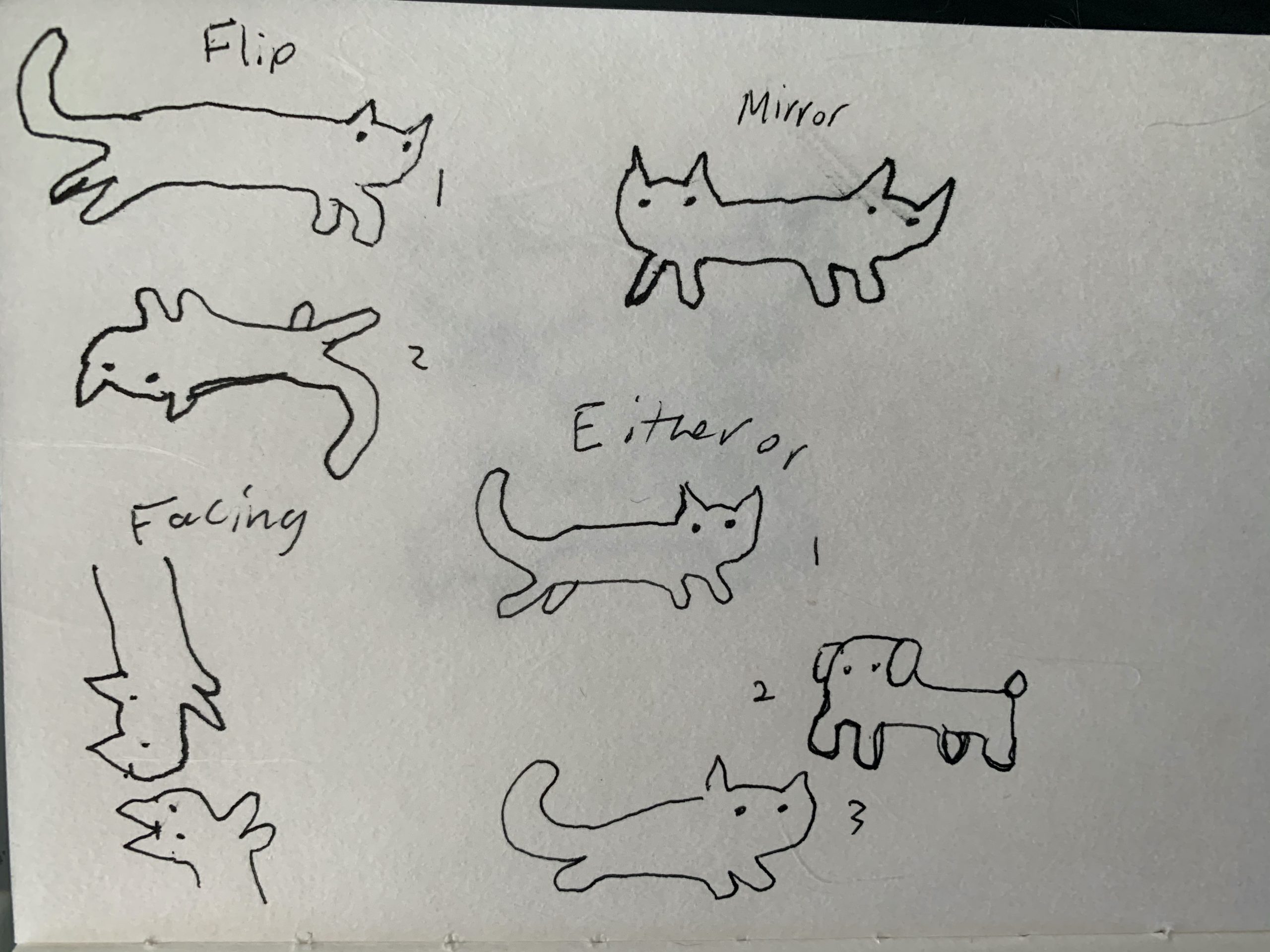

At the same time, I began exploring how to format my output.

I also spent a lot of time browsing GitHub to understand how to read each segment’s class from Detectron2’s output. As a last little bump in the road, after taking a day off from the project, I forgot that the image arrays are laid out as (H, W, Color), and spent a while debugging improperly masked images as a result.
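Reading each segment's class might look like the sketch below. It assumes a COCO-trained Mask R-CNN from the Detectron2 model zoo, whose `outputs["instances"]` carries `pred_classes`, `pred_masks`, and `scores`; these can be moved to NumPy with `inst = outputs["instances"].to("cpu")` followed by `.numpy()` on each field. Indices 15 (cat) and 16 (dog) are the 0-based COCO thing classes; the helper names and the 0.5 score cutoff are hypothetical. The crop also shows the (H, W, C) layout in practice: axis 0 is height, axis 1 is width.

```python
from __future__ import annotations

import numpy as np

# 0-based COCO thing-class indices used by Detectron2's COCO model-zoo models.
COCO_CAT, COCO_DOG = 15, 16


def animal_masks(pred_classes: np.ndarray, pred_masks: np.ndarray,
                 scores: np.ndarray, min_score: float = 0.5,
                 wanted: tuple[int, ...] = (COCO_CAT, COCO_DOG)) -> list[np.ndarray]:
    """Return the (H, W) boolean masks of confident cat/dog instances, best first."""
    keep = np.isin(pred_classes, wanted) & (scores >= min_score)
    order = np.argsort(-scores[keep])  # highest score first
    kept = pred_masks[keep]            # (N_kept, H, W)
    return [kept[i] for i in order]


def crop_to_mask(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Crop an (H, W, C) image to the bounding box of an (H, W) mask."""
    rows = np.flatnonzero(mask.any(axis=1))  # axis 0 is height (y)
    cols = np.flatnonzero(mask.any(axis=0))  # axis 1 is width (x)
    if rows.size == 0:
        raise ValueError("empty mask")
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
```

Swapping the two axes in `crop_to_mask` is exactly the kind of mistake that produces a crop of the wrong region without raising an error on square-ish frames.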

After that, working through the composition was relatively smooth sailing.
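As one example of a layout, the sketch below repeats a finished tile into a grid and offsets every other row by half a tile, a "brick" arrangement that reads as a regular geometric pattern from a distance. It is an illustration using a hypothetical `tile_wallpaper` helper, not the composition the project actually uses, and it assumes the tile is already an `(h, w, C)` array.

```python
import numpy as np


def tile_wallpaper(tile: np.ndarray, rows: int, cols: int, offset: bool = True) -> np.ndarray:
    """Repeat an (h, w, C) tile into a rows x cols grid, shifting odd rows by half a tile."""
    w = tile.shape[1]
    strip = np.tile(tile, (1, cols, 1))  # one row of tiles: (h, cols * w, C)
    out_rows = []
    for r in range(rows):
        # Every other strip is rolled sideways by half a tile width; np.roll wraps
        # around, so the strip stays seamless because it is already periodic.
        shift = w // 2 if (offset and r % 2 == 1) else 0
        out_rows.append(np.roll(strip, shift, axis=1))
    return np.concatenate(out_rows, axis=0)  # (rows * h, cols * w, C)
```

Setting `offset=False` gives a plain square grid, which makes it easy to compare the two layouts.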

POST-REVIEW UPDATE:

I thought about the criticism I received in the review and realized I could make the patterns cuter mostly through changes in scale. Here are some of the successes:
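A scale change of this kind can be sketched as resizing each cutout relative to the tile cell it sits in. The hypothetical `place_in_cell` helper below assumes an RGBA cutout array: it resizes the cutout with nearest-neighbour indexing so its longer side is `scale * cell` pixels, then centres it on a transparent square. The numbers are illustrative, not the values used in the project.

```python
import numpy as np


def resize_nearest(img: np.ndarray, new_h: int, new_w: int) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W, C) array using index arithmetic."""
    h, w = img.shape[:2]
    ys = np.arange(new_h) * h // new_h  # source row for each output row
    xs = np.arange(new_w) * w // new_w  # source column for each output column
    return img[ys[:, None], xs[None, :]]


def place_in_cell(cutout: np.ndarray, cell: int, scale: float) -> np.ndarray:
    """Scale a cutout so its longer side is scale * cell, centred on a transparent cell."""
    if not 0 < scale <= 1:
        raise ValueError("scale must be in (0, 1]")
    h, w = cutout.shape[:2]
    factor = scale * cell / max(h, w)
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    small = resize_nearest(cutout, new_h, new_w)
    # Zeros everywhere means fully transparent for an RGBA cutout.
    canvas = np.zeros((cell, cell, cutout.shape[2]), dtype=cutout.dtype)
    top, left = (cell - new_h) // 2, (cell - new_w) // 2
    canvas[top:top + new_h, left:left + new_w] = small
    return canvas
```

A smaller `scale` leaves more empty space around each animal, while a larger one packs them edge to edge; PIL's `Image.resize` would give smoother edges than nearest-neighbour sampling.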