360 Ings (April 2020)

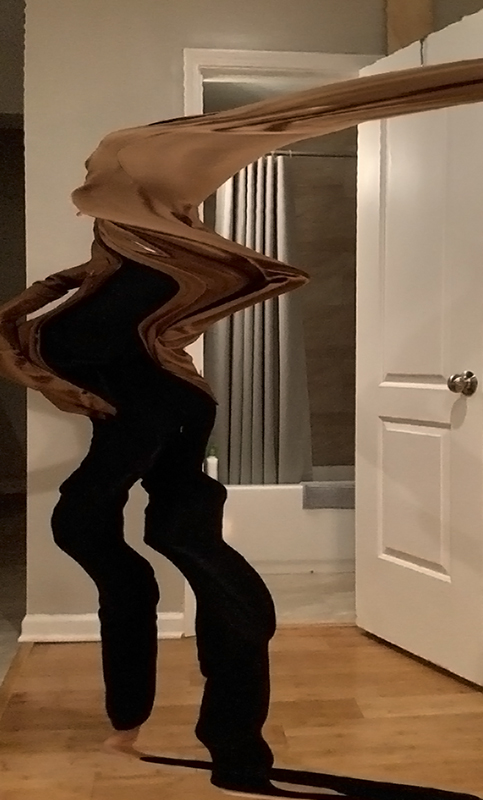

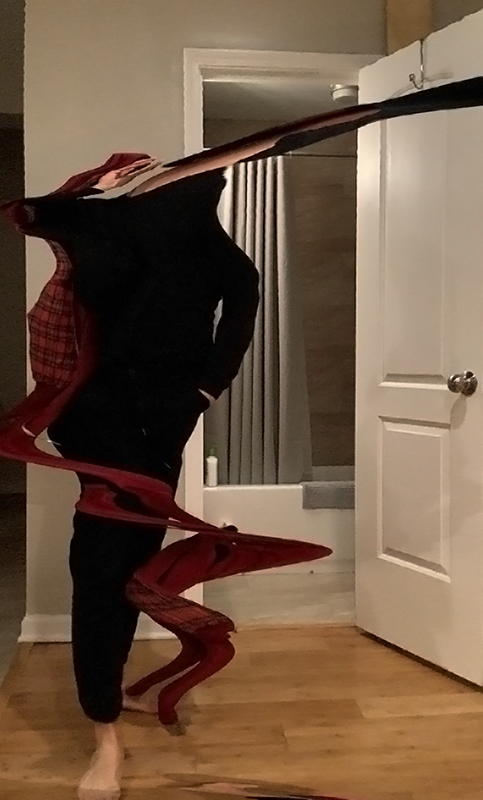

A 360° video performance of hectic and frisky actions and gestures overlapped in the domestic space.

60-461/761: Experimental Capture

CMU School of Art / IDeATe, Spring 2020 • Profs. Golan Levin & Nica Ross


The stay-at-home order has enabled the transfer of our outdoor activities and operations into the domestic space. What used to happen outside our walls now happens infinitely repeated, in a continuous performance that contains each of our movements.

Returning home indefinitely produces an unsettling sense of oddness. For some people, this return will feel just as it does for the man in Chantal Akerman’s ‘Le déménagement,’ who stands bewildered by the asymmetry of his apartment. For me, it represents an infinite loop of actions that keeps me in motion from one place to another: a perpetual suite of small happenings similar to the piano pieces that the composer Henry Cowell titled ‘Nine Ings’ in the early 20th century, all gerunds:

Floating

Frisking

Fleeting

Scooting

Wafting

Seething

Whisking

Sneaking

Swaying

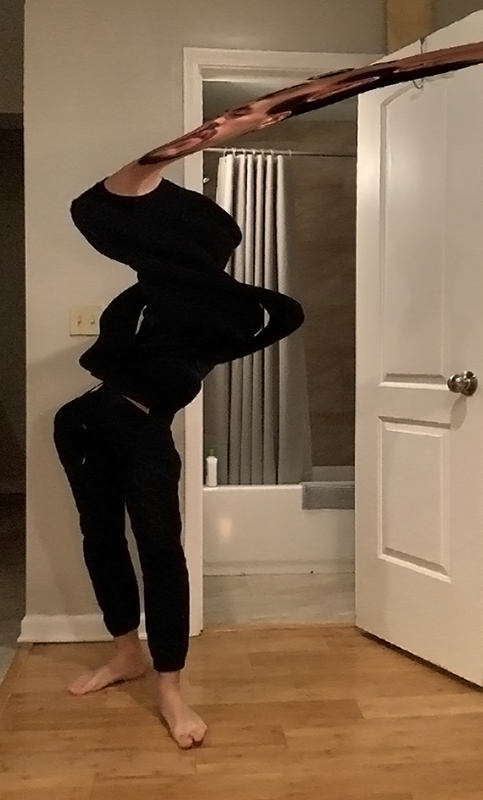

In ‘360 Ings,’ those small gestures and their variations are recorded across the full 360 degrees that a spherical camera can capture, isolated and cropped automatically with a machine learning algorithm, and edited together to multiply the self of enclosed living.

An interactive 360° version is available on YouTube.

I used the Xiaomi Mi Sphere 360 camera, which records 3.5K video, so the video files were expected to be quite large. For that reason, I had to keep the performance under 5 minutes (approx. 4,500 frames at 15 fps). Planning time and space was fundamental.

The footage is then remapped and stitched into a panoramic image using the software provided by the manufacturer.
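To make the idea of remapping more concrete: 360° video is commonly stored as an equirectangular panorama, where the horizontal pixel position encodes longitude and the vertical position encodes latitude. The manufacturer's stitching software is not documented here, so the sketch below is only an illustration under that assumption. It converts a 3D viewing direction into pixel coordinates, using a hypothetical axis convention (+z forward, +x right, +y up).

```python
import math


def direction_to_equirect(x: float, y: float, z: float, width: int, height: int):
    """Map a 3D viewing direction to (u, v) pixel coordinates in an
    equirectangular panorama of size width x height.

    Hypothetical convention: +z is forward, +x is right, +y is up, and
    longitude 0 (straight ahead) lands at the horizontal center of the image.
    """
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0:
        raise ValueError("direction must be non-zero")
    lon = math.atan2(x, z)        # -pi..pi, 0 = straight ahead
    lat = math.asin(y / norm)     # -pi/2..pi/2, positive = up
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v
```

For a 3840 x 1920 panorama, looking straight ahead lands at the center (1920, 960), and looking straight up lands on the top row.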

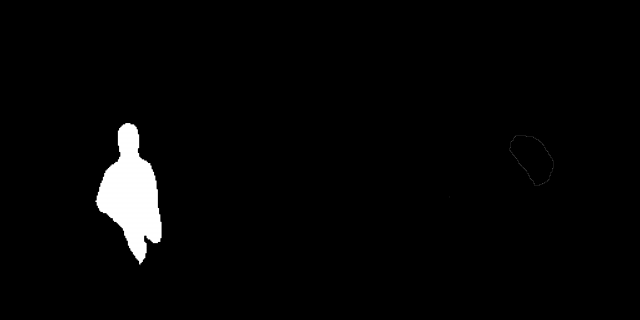

After recording, I used a machine learning method to subtract the background of each image and produce an alpha matte channel with crisp but smooth edges, so I could rotoscope myself in every frame. Trying many of these algorithms was essential. This website provides a good collection of papers and contributions on this technique. Initially, I tried Mask R-CNN and Detectron2 to segment the regions of the image where a person was detected, but they did not return adequate results.
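For comparison, the simplest form of background subtraction, which the learned methods above improve on, is a plain difference against a clean plate of the empty room. The sketch below is that naive baseline, not Mask R-CNN, DeepLabV3, or Background Matting. The `low` and `high` thresholds are arbitrary, hypothetical values. Shadows, camera noise, and clothing colors close to the background all break this approach, which is why a learned matting network was needed.

```python
import numpy as np


def difference_matte(frame: np.ndarray, background: np.ndarray,
                     low: float = 10.0, high: float = 40.0) -> np.ndarray:
    """Soft alpha matte from the per-pixel color distance between a frame
    and a clean background plate (both H x W x 3, uint8).

    Distances <= low become alpha 0 (background), distances >= high become
    alpha 1 (foreground), with a linear ramp in between to soften edges.
    """
    diff = frame.astype(np.float32) - background.astype(np.float32)
    dist = np.linalg.norm(diff, axis=-1)   # Euclidean RGB distance per pixel
    alpha = (dist - low) / (high - low)
    return np.clip(alpha, 0.0, 1.0)
```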

Deep Labelling for Semantic Image Segmentation (DeepLabV3) produces good results, but the edges of the object were not clear enough to rotoscope me in every frame. A second algorithm was needed to succeed at this task, and this is exactly what a group of researchers at the University of Washington are currently working on. ‘Background Matting’ uses a deep network with an adversarial loss to predict a high-quality matte by judging the quality of the composite with a new background (in my case, that background was my empty living room). This method runs on PyTorch and TensorFlow with CUDA 10.0. For more instructions on how to use the code, see their GitHub site (the authors are very helpful and quick to respond).
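The composite mentioned above is the standard alpha-compositing equation, C = alpha * F + (1 - alpha) * B, where F is the extracted foreground, alpha is the predicted matte, and B is the new background. The sketch below implements only that equation; it says nothing about how the network predicts F and alpha. It assumes float images in the range [0, 1].

```python
import numpy as np


def composite(foreground: np.ndarray, alpha: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Standard alpha compositing: C = alpha * F + (1 - alpha) * B.

    foreground, background: H x W x 3 float arrays in [0, 1]
    alpha: H x W float array in [0, 1]
    """
    a = alpha[..., np.newaxis]   # broadcast the matte over the color channels
    return a * foreground + (1.0 - a) * background
```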

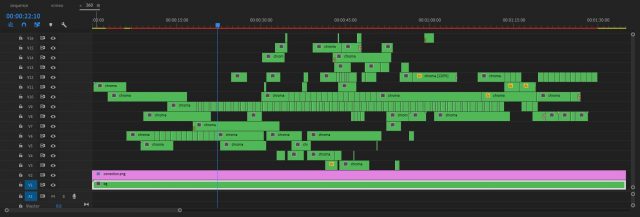

With the new composites, I edited every single action in time and space using Premiere. The paths of my movements often crossed, so I had to cut and reorganize single actions into multiple layers.
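Layering several matted actions over the empty room amounts to applying the "over" operation repeatedly, from the bottom layer to the top. The edit itself was done in Premiere; the sketch below only shows the underlying arithmetic, assuming each clip provides a foreground and a matte as float arrays in [0, 1]. Layer order matters: where two paths cross, the later layer in the list hides the earlier one.

```python
import numpy as np


def stack_layers(background: np.ndarray, layers) -> np.ndarray:
    """Composite matted layers over a background plate, bottom to top.

    background: H x W x 3 float array in [0, 1]
    layers: list of (foreground, alpha) pairs; later pairs sit on top.
    """
    out = background.astype(np.float64).copy()
    for fg, alpha in layers:
        a = alpha[..., np.newaxis]       # matte broadcast over RGB
        out = a * fg + (1.0 - a) * out   # "over": new layer above current result
    return out
```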

Finally, using Insta360 Studio, I produced a flat version of the video (Vimeo version), controlling the camera properties and the transitions between different points of view.

Source code: https://github.com/senguptaumd/Background-Matting

I plan to continue working on my Person In Time project, a 360 video performance that will simultaneously show all my movements, actions, and possibly affections over a 24-hour quarantine day.

The stay-at-home order has enabled the transfer of our outdoor activities and operations into the domestic space. What used to happen outside our walls now happens virtually throughout our homes. Our house is, therefore, the control room for the production, consumption, and biopolitics of our lives (P. Preciado, 2020). This project aims to complete the cycle by signifying the surveillance of our bodies by public and private institutions, now inside the new country demarcated by our partitions.

My main goal for the rest of the semester will be to speculate on the deformation of time along the spatial axis of my home in different ways:

I will use a fixed 360 camera to record my whole living space at once. Then, I will use a background subtraction algorithm (possibly this one) to rotoscope myself in various clips and edit a final video piece from all the different fragments. Although the performance will contain unhomely activities and distorted views of the anxious experience of enclosed living, I will avoid choreographing and beautifying the movements, in order to produce a pseudo-surrealist documentary.

References:

Readings:

I spent a few days looking for lossy images of myself (avatars, Google results), but after an intense effort to erase my online history, I no longer keep many examples from the good old Internet times… To understand how I could work with these lossy images, I first researched how JPEG images are generated and compressed, and I found the website FotoForensics, which explains different methods for analyzing images in search of digital manipulation and retouching. One of them, Error Level Analysis (ELA), ‘permits identifying areas within an image that are at different compression levels.’ Original images straight from a camera normally have a high level of detail and contrast, which produces higher ELA values (brighter colors), especially along edges:

‘JPEG compression attempts to create patterns in the color values in order to reduce the amount of data that needs to be recorded, thereby reducing the file size. In order to create these patterns, some color values are approximated to match those of nearby pixels.’ The resulting image usually has less contrast (edges are less defined), and therefore ELA produces a darker image.

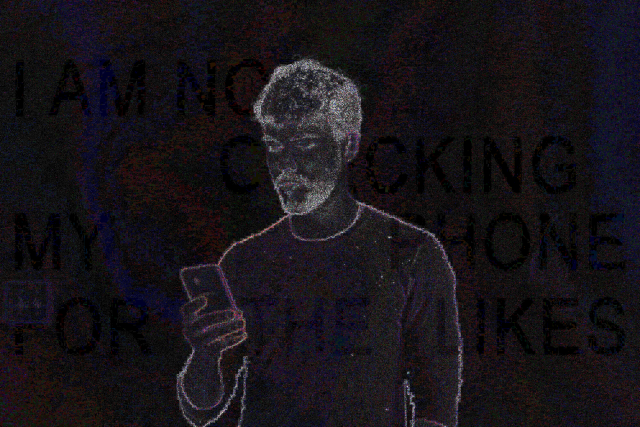

When I edited the image by removing the background and placing a radial gradient made of two very similar colors, ELA revealed a ‘visible separation between the luminance and chrominance channels as a blue/purple/red coloring called rainbowing’. Under ELA, unusually uniform areas can indicate image manipulation.
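The basic idea behind ELA can be sketched in a few lines: re-save the image as a JPEG at a known quality and amplify the difference between the original and the re-saved copy. FotoForensics' exact implementation is not described in this post, so this is an approximation under assumptions: the quality of 90 and the 10x amplification are hypothetical choices, and Pillow is used for the JPEG round trip.

```python
import io

from PIL import Image, ImageChops


def error_level_analysis(image: Image.Image, quality: int = 90, scale: float = 10.0) -> Image.Image:
    """Re-save an image as JPEG at a known quality and return the amplified
    per-pixel difference between the original and the re-saved copy."""
    original = image.convert("RGB")
    buffer = io.BytesIO()
    original.save(buffer, format="JPEG", quality=quality)
    buffer.seek(0)
    resaved = Image.open(buffer).convert("RGB")
    diff = ImageChops.difference(original, resaved)
    # Differences are usually only a few levels, so scale them up to be visible.
    return diff.point(lambda value: min(255, int(value * scale)))
```

Regions that have already been compressed many times at similar settings change little on re-save and appear dark. Freshly edited or high-detail regions change more and appear bright.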

After all this testing, I thought this analysis could also be reversed to hide messages in areas that have been digitally manipulated, subtly enough not to be perceived in the JPEG image itself. Here, I used text as a mask in Photoshop and then applied a Gaussian blur to that area of pixels.
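The Photoshop step (text used as a mask, then a Gaussian blur restricted to it) can be approximated in code with Pillow. This is a hypothetical reconstruction, not the file used here. The text position, the default bitmap font, and the blur radius are all assumptions, and whether ELA later reveals the message depends on the radius and JPEG quality, which this sketch does not verify.

```python
from PIL import Image, ImageDraw, ImageFilter


def blur_under_text(image: Image.Image, text: str, position=(10, 10), radius: float = 2.0) -> Image.Image:
    """Blur only the pixels covered by `text`, mimicking a text mask plus a
    Gaussian blur in an image editor."""
    base = image.convert("RGB")
    mask = Image.new("L", base.size, 0)                    # black: keep original pixels
    ImageDraw.Draw(mask).text(position, text, fill=255)    # white: use blurred pixels
    blurred = base.filter(ImageFilter.GaussianBlur(radius))
    # Take pixels from `blurred` where the mask is white, else from `base`.
    return Image.composite(blurred, base, mask)
```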

See also ‘steganography,’ a method that hides the bits of a file in the background noise of images such as JPEGs. It is used to conceal conversations or documents inside image files: Steganography in contemporary cyberattacks
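The simplest steganographic scheme, least-significant-bit (LSB) embedding, shows the principle: each bit of the hidden message replaces the lowest bit of one pixel value, changing it by at most 1. This is an illustrative sketch on a lossless pixel array (for example, one loaded from a PNG). Raw LSB data does not survive JPEG recompression, and JPEG-oriented tools generally embed in the compressed DCT coefficients instead. The reader must also know the message length in advance, which is an assumption of this sketch.

```python
import numpy as np


def embed_bits(pixels: np.ndarray, message: bytes) -> np.ndarray:
    """Hide `message` in the least significant bits of a uint8 pixel array."""
    bits = np.unpackbits(np.frombuffer(message, dtype=np.uint8))
    flat = pixels.flatten()   # flatten() copies, so the input is left untouched
    if bits.size > flat.size:
        raise ValueError("image too small for this message")
    # Clear each target pixel's lowest bit, then set it to the message bit.
    flat[:bits.size] = (flat[:bits.size] & 0xFE) | bits
    return flat.reshape(pixels.shape)


def extract_bits(pixels: np.ndarray, length: int) -> bytes:
    """Read back `length` bytes from the least significant bits."""
    bits = pixels.flatten()[:length * 8] & 1
    return np.packbits(bits).tobytes()
```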

Some experiments posing in slit-scan mode, using the Poloska app.
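Classic slit-scan photography samples one narrow slit from each moment in time and lays those slits side by side, so one image axis becomes time. How the Poloska app implements it is not known here. The sketch below is the generic column-per-frame version, assuming a list of same-sized video frames as arrays.

```python
import numpy as np


def slit_scan(frames, column=None):
    """Build a slit-scan image: take the same one-pixel-wide column from each
    frame and place the columns side by side, so the horizontal axis becomes time.

    frames: sequence of H x W x 3 arrays of identical shape.
    column: which column to sample (defaults to the center column).
    """
    frames = list(frames)
    if not frames:
        raise ValueError("need at least one frame")
    if column is None:
        column = frames[0].shape[1] // 2
    slices = [frame[:, column, :] for frame in frames]   # each slice is H x 3
    return np.stack(slices, axis=1)                       # result is H x N x 3
```

Anything that stays still under the slit becomes a horizontal streak, while a body moving past it is stretched or compressed depending on its speed.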

I am very interested in how Dirk Koy’s Fixed series relocates the subject of a video narrative by keeping a moving object centered in the frame. Following his method, I thought it would be interesting to track the position of moving elements along the facade of a building, such as construction cranes, window-washing climbers, or furniture lifts. This new perspective could create the impression of the whole city being pushed upwards. Here, I made a short clip based on a YouTube video.
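Once a tracked position is available for each frame, centering it is a simple translation of the image. The sketch below assumes the per-frame (x, y) point comes from elsewhere, for example hand-placed keyframes or an object tracker; Dirk Koy's own process is not described here. Areas uncovered by the shift are filled with black.

```python
import numpy as np


def center_on_point(frame: np.ndarray, point) -> np.ndarray:
    """Translate `frame` so that `point` (x, y) lands at the image center.
    Uncovered areas are filled with zeros (black)."""
    h, w = frame.shape[:2]
    x, y = point
    dx, dy = w // 2 - x, h // 2 - y
    out = np.zeros_like(frame)
    # Source ranges that remain inside the frame after shifting by (dx, dy).
    src_x0, src_x1 = max(0, -dx), min(w, w - dx)
    src_y0, src_y1 = max(0, -dy), min(h, h - dy)
    if src_x0 < src_x1 and src_y0 < src_y1:
        out[src_y0 + dy:src_y1 + dy, src_x0 + dx:src_x1 + dx] = \
            frame[src_y0:src_y1, src_x0:src_x1]
    return out
```

Applied frame by frame with a crane's tracked position, the crane stays fixed at the center while the rest of the city appears to move around it.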

360 soundscape of a meal

A 360 video and audio composition that isolates every sound recorded during a meal (self-produced and peripheral sounds) and pairs each with its decontextualized visual representation (a mask will partially cover the video footage, uncovering only the area that produces the sound).
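In an equirectangular 360 frame, "the area that produces the sound" corresponds to a circular region on the sphere around the sound's direction. A rectangle in the flat image would be wrong because the projection distorts shapes near the poles and wraps around at the left and right edges. This sketch assumes the direction of each sound (longitude and latitude in degrees) is already known, for example annotated by hand. The pixel-to-angle convention and the radius are hypothetical.

```python
import numpy as np


def spherical_cap_mask(width: int, height: int, lon_deg: float, lat_deg: float,
                       radius_deg: float) -> np.ndarray:
    """Boolean mask for an equirectangular frame that is True within
    `radius_deg` (great-circle angle) of the direction (lon_deg, lat_deg)."""
    # Longitude/latitude at each pixel center (assumed convention:
    # left edge = -180 deg, top edge = +90 deg).
    lon = (np.arange(width) + 0.5) / width * 2 * np.pi - np.pi
    lat = np.pi / 2 - (np.arange(height) + 0.5) / height * np.pi
    lon, lat = np.meshgrid(lon, lat)   # both H x W
    lon0, lat0 = np.radians(lon_deg), np.radians(lat_deg)
    # Spherical law of cosines: angle between each pixel's direction and the source.
    cos_angle = (np.sin(lat) * np.sin(lat0) +
                 np.cos(lat) * np.cos(lat0) * np.cos(lon - lon0))
    angle = np.arccos(np.clip(cos_angle, -1.0, 1.0))
    return angle <= np.radians(radius_deg)
```

A direction at longitude 180° produces a mask split across the left and right edges of the frame, which is the expected behavior for a seam in a 360 image.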

A dining visual score

Inspired by Sarah Wigglesworth’s dining tables, I would like to explore the conversation between the objects used during a meal. For this, I will track the 3D positions and movements of cutlery, plates, utensils, and glasses during a meal for one or more people. Afterward, I will create a video composition with 3D animated objects that act by themselves, video fragments of the people spatially placed where they were seated, and audio pieces of the performance.

A meeting of conversational fillers

As a non-native English speaker, I have noticed lately that when I am very tired, my mind can detach from a conversation, and suddenly the only things I can hear are repetitive words and conversational fillers. I would like to reproduce that experience in the same way as my first idea, capturing a 360 video of decontextualized ‘uh’, ‘um’, ‘like’, ‘you know’, ‘okay’, and so on.
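Finding the fillers to cut could start from a word-level transcript with timestamps. The input format below, a list of (word, start_seconds, end_seconds) tuples, is an assumption; many speech-to-text services can report word timings, but no specific service is implied. The filler vocabulary is also hypothetical.

```python
FILLERS = {"uh", "um", "like", "okay"}
FILLER_PHRASES = {("you", "know")}


def filler_segments(words):
    """Return (start, end, text) for each filler in a word-level transcript.

    words: list of (text, start_seconds, end_seconds) tuples (assumed format).
    """
    segments = []
    i = 0
    while i < len(words):
        text = words[i][0].lower().strip(".,?!")
        # Check two-word fillers first so "you know" is kept as one clip.
        if i + 1 < len(words):
            nxt = words[i + 1][0].lower().strip(".,?!")
            if (text, nxt) in FILLER_PHRASES:
                segments.append((words[i][1], words[i + 1][2], f"{text} {nxt}"))
                i += 2
                continue
        if text in FILLERS:
            segments.append((words[i][1], words[i][2], text))
        i += 1
    return segments
```

Because this matching ignores context, it would also catch "like" used as a verb, so the resulting clips would still need a manual pass.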

‘Remnant of affection’ is a typology machine that explores the thermal footprint of our affective interactions with other beings and spaces.

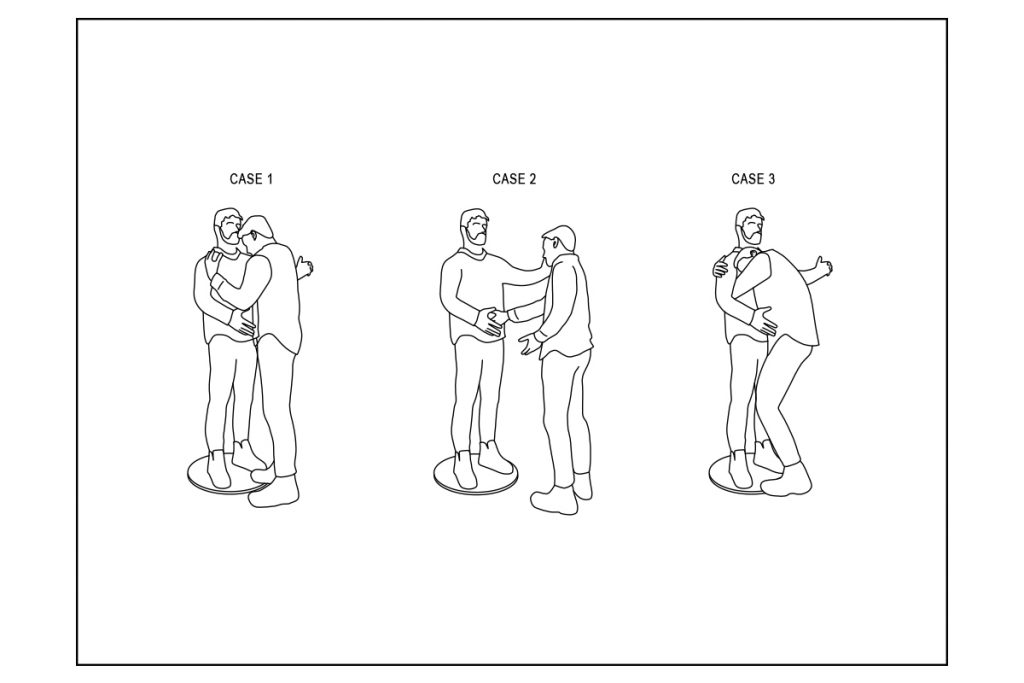

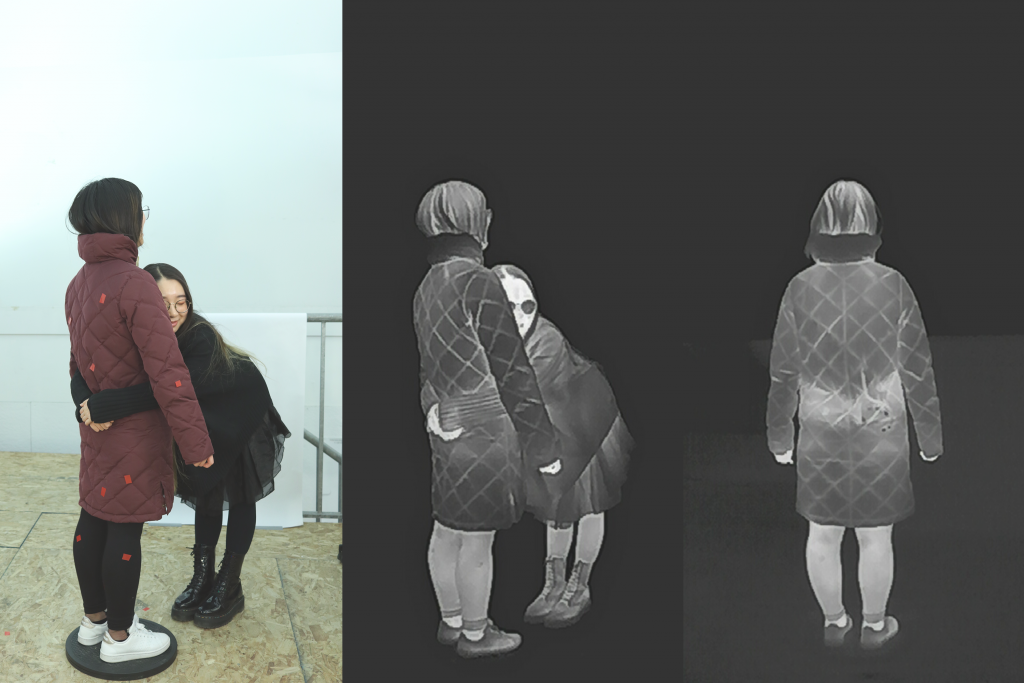

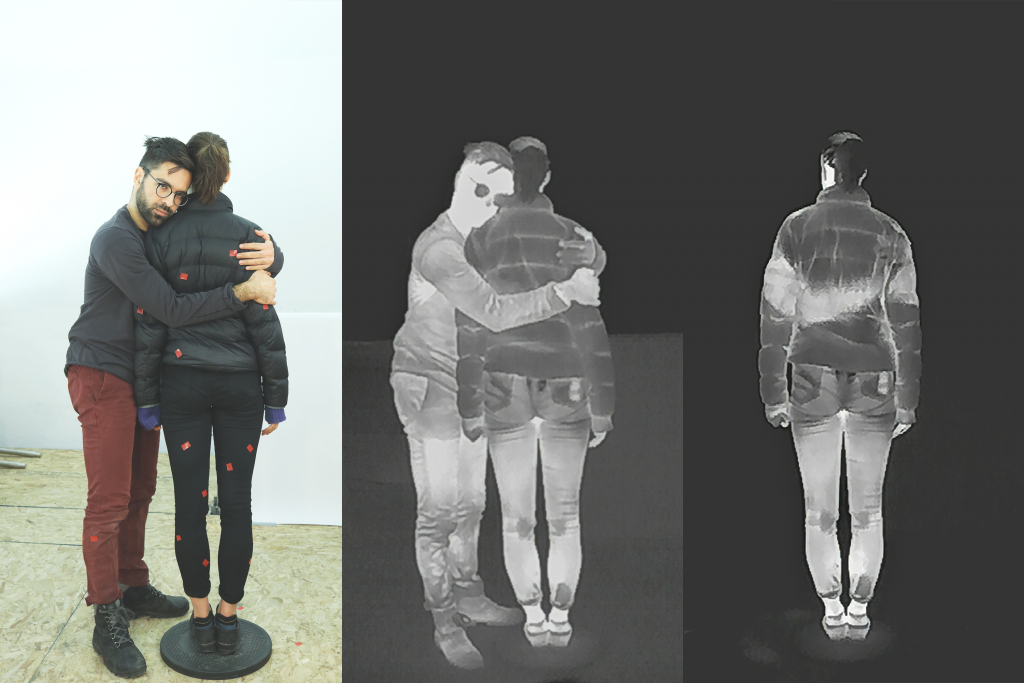

For this study, I have focused on one of the most universal shows of affection: a hug. Polite, intimate, or comforting; passionate, light, or quick; one-sided, from the back, or while dancing. Hugs have been widely explored compositionally, either from the perspective of an external viewer or through the personal descriptions of the people who take part in them (‘Intimacy Machine,’ Dan Chen, 2013). In contrast, this machine explores affection in the very place where it happens: the surface of interaction. Echoing James J. Gibson, the action of the world is on the surface of matter, the intermediate layer between two different media. Apart from a momentary exchange of pressure, a hug is also a heat transfer that leaves a warm remnant on the surface that was hugged. Using a thermal camera, I was able to see the radiant heat from the contact surface that shaped the hug and reconstruct a 3D model of it.

The Typology Machine

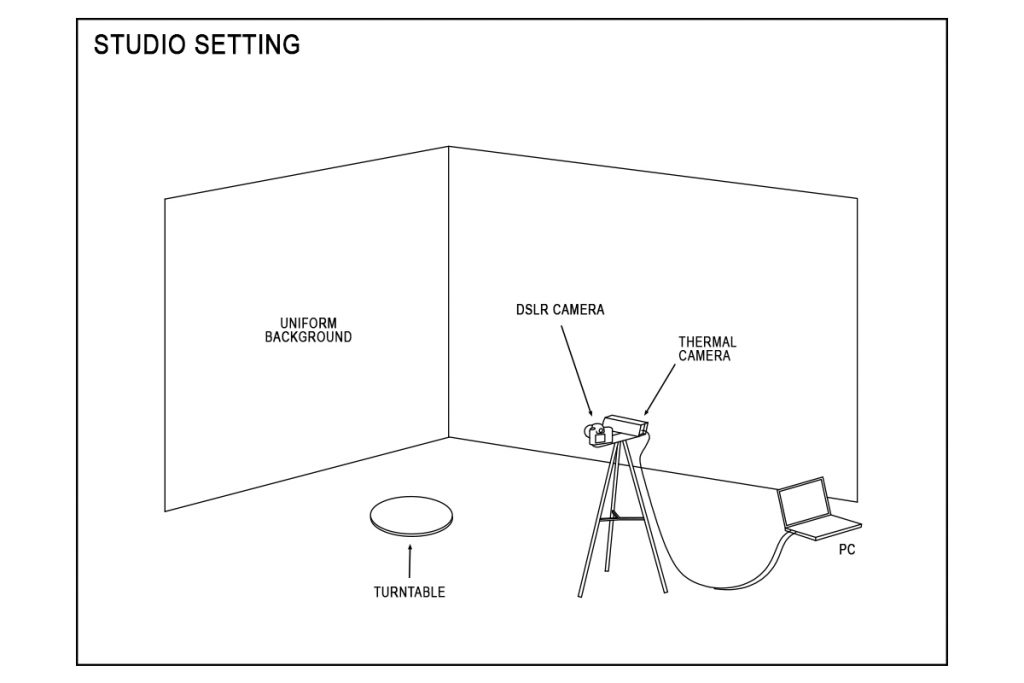

Reconstructing the thermal exchange of a hug required a fixed studio setting with a controlled background, because of the lighting conditions and the unwieldy camera rig needed for a photogrammetry workflow. After dropping the use of mannequins as ‘uncanny’ subjects of interaction in the Lighting Room at Purnell Center, the experiment was performed on the second floor of the CodeLab at MMCH.

First, I asked different pairs of volunteers whether they would like to take part in the experiment. Although a hug is bidirectional, one of the individuals had to take the role of the ‘hugger,’ standing aside from the capture, while the other played the role of the ‘hug’ retainer. The retainer had to wear a coat to mask their own body heat and retain a more contrasted thermal footprint (coats usually contain synthetic materials, such as polyester, with a higher thermal effusivity that stores a greater thermal load in a dynamic thermal process). Immediately after the hug, I took several pictures of the interaction with two cameras at the same time: a color DSLR with a fixed lens and a thermal camera (Axis Q19-E). They were connected to my laptop via USB and Ethernet respectively, so I could view the images in real time on an external monitor and store the files directly on my computer.

Camera alignment

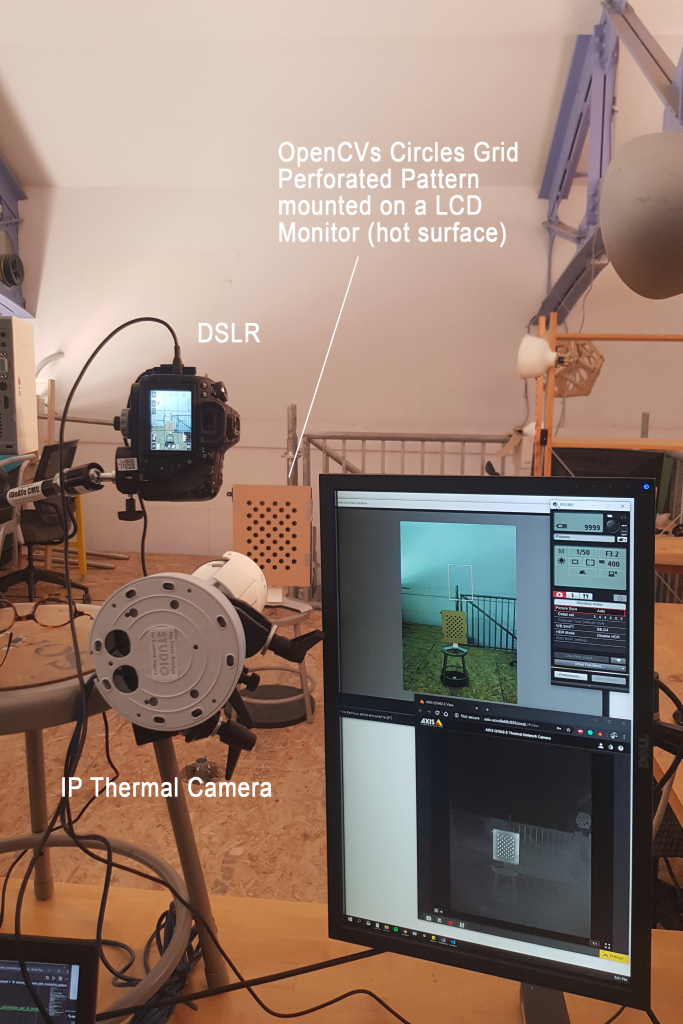

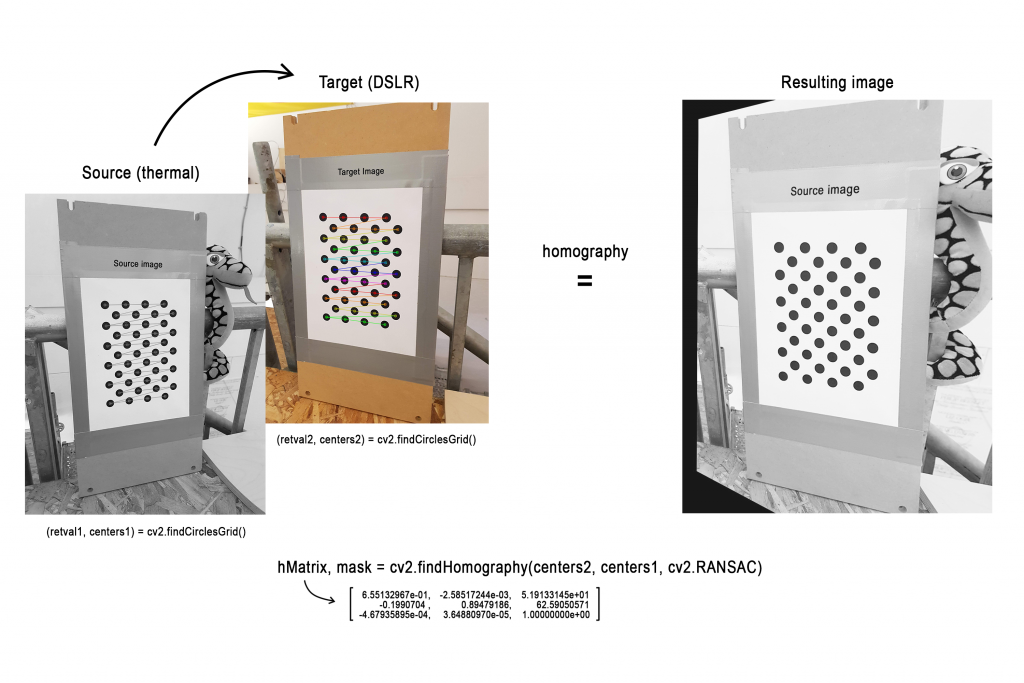

One of the big challenges of the experiment was calibrating both cameras so that the thermal image could be placed as a texture on top of the high-resolution DSLR photograph. To solve this problem, I laser-cut an OpenCV circles grid pattern into an acrylic sheet. The sheet showed every circle as a black shadow to the color camera and, placed on top of an old LCD screen that radiated a lot of heat, simultaneously showed every circle as a hot spot to the thermal camera.

OpenCV then finds the centers of those circles in both images, and from these matching points it calculates the homography matrix that transforms one image into the other.
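In OpenCV, detection and estimation are typically done with `cv2.findCirclesGrid` (once per camera image) followed by `cv2.findHomography` on the two sets of centers. To show what the estimation step computes, here is a NumPy sketch of the direct linear transform (DLT), the textbook way to solve for a homography from four or more point pairs. It omits the coordinate normalization and robust outlier rejection (e.g. RANSAC) that `cv2.findHomography` can add, so it is an illustration rather than a replacement.

```python
import numpy as np


def estimate_homography(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Estimate the 3x3 homography H with dst ~ H @ src using the
    direct linear transform (DLT). src, dst: N x 2 arrays, N >= 4."""
    if len(src) < 4 or len(src) != len(dst):
        raise ValueError("need at least 4 matching point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=np.float64))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]   # fix the arbitrary scale so that H[2, 2] == 1
```

Here `src` would be the circle centers found in the thermal image and `dst` the matching centers in the DSLR image, in the same grid order.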

All subsequently captured images were transformed with the same homography. The Python code for this camera alignment can be found here.
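Because both cameras stay fixed after calibration, the same matrix can warp every new thermal frame into the DSLR's pixel grid. In OpenCV this is `cv2.warpPerspective(src, M, dsize)`. The NumPy sketch below shows the same idea with inverse mapping and nearest-neighbour sampling: for each output pixel, it looks up where that pixel came from in the thermal image. The function name and the choice of nearest-neighbour sampling are illustrative assumptions.

```python
import numpy as np


def warp_perspective(image: np.ndarray, H: np.ndarray, out_shape) -> np.ndarray:
    """Warp `image` into an output of size out_shape = (height, width) using
    the homography H (which maps image coordinates to output coordinates).
    Inverse mapping with nearest-neighbour sampling; unmapped pixels are 0."""
    out_h, out_w = out_shape
    ys, xs = np.mgrid[0:out_h, 0:out_w]
    dest = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])   # 3 x N homogeneous
    src = np.linalg.inv(H) @ dest                                  # back to source coords
    with np.errstate(divide="ignore", invalid="ignore"):
        sx = np.rint(src[0] / src[2])
        sy = np.rint(src[1] / src[2])
    valid = (np.isfinite(sx) & np.isfinite(sy) &
             (sx >= 0) & (sx < image.shape[1]) & (sy >= 0) & (sy < image.shape[0]))
    out = np.zeros((out_h, out_w) + image.shape[2:], dtype=image.dtype)
    out_flat = out.reshape(out_h * out_w, *image.shape[2:])   # view into `out`
    out_flat[valid] = image[sy[valid].astype(int), sx[valid].astype(int)]
    return out
```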

Machine workflow

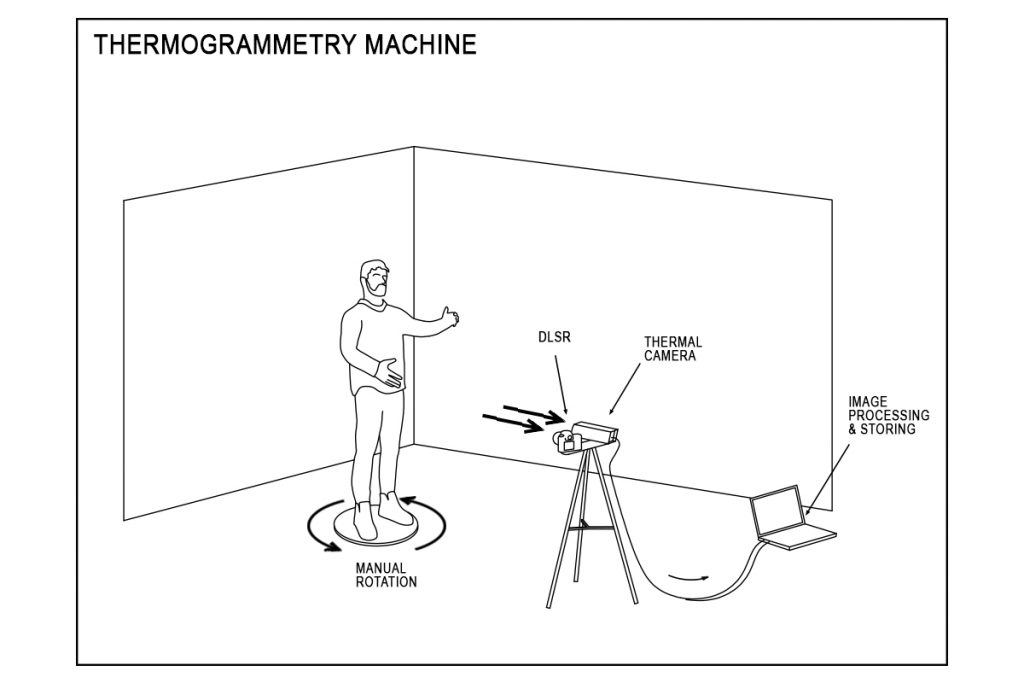

In the end, I was the typology machine: since the lazy Susan was not motorized, I had to spin the subject by hand to obtain a series of around 30 color and thermal images per hug.

I also had to place little pieces of red tape on the subject’s clothes to help the photogrammetry software build a mesh of the subject from the color images (the tape is invisible to the thermal camera).

Thermogrammetry 3d reconstruction

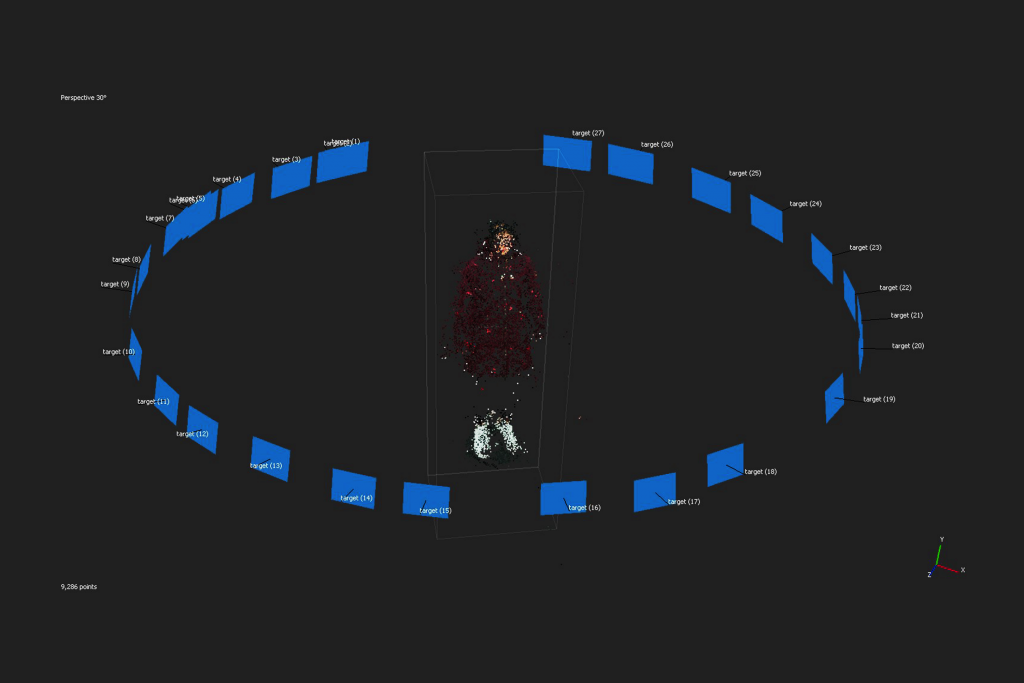

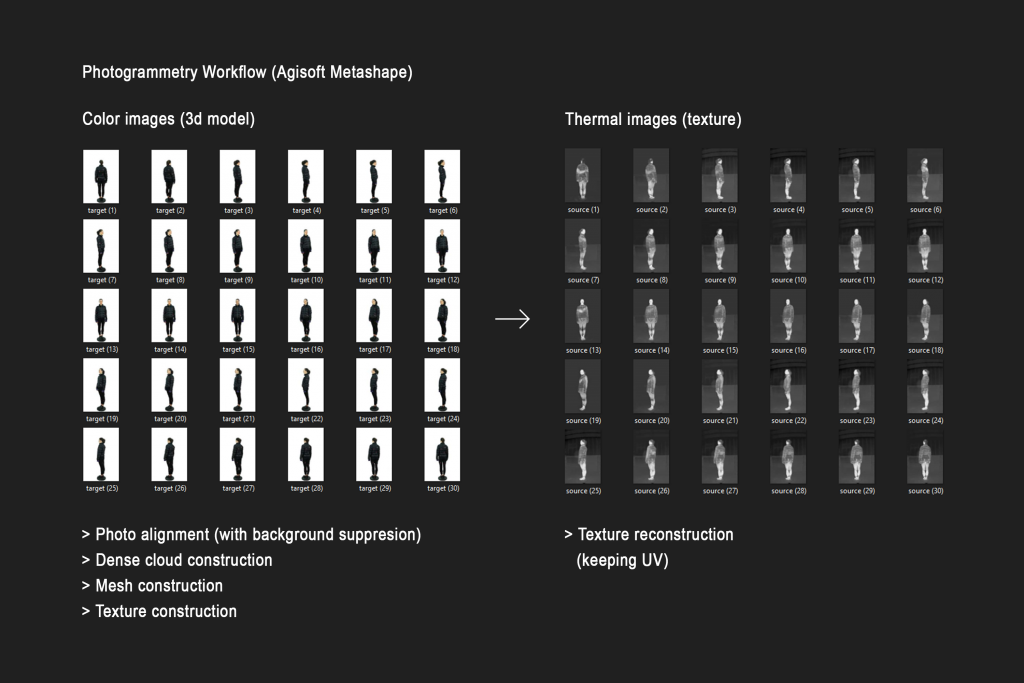

Applying the principles of photogrammetry in Agisoft Metashape, and following Claire Hentschker’s steps, I built a 3D mesh of the hugged person.

Since the thermal images do not have enough features for the software to build a coherent texture, I first used the color images for this task. Then I replaced the color images with the thermal ones and reconstructed the texture of the model using the same UV layout as the color images.

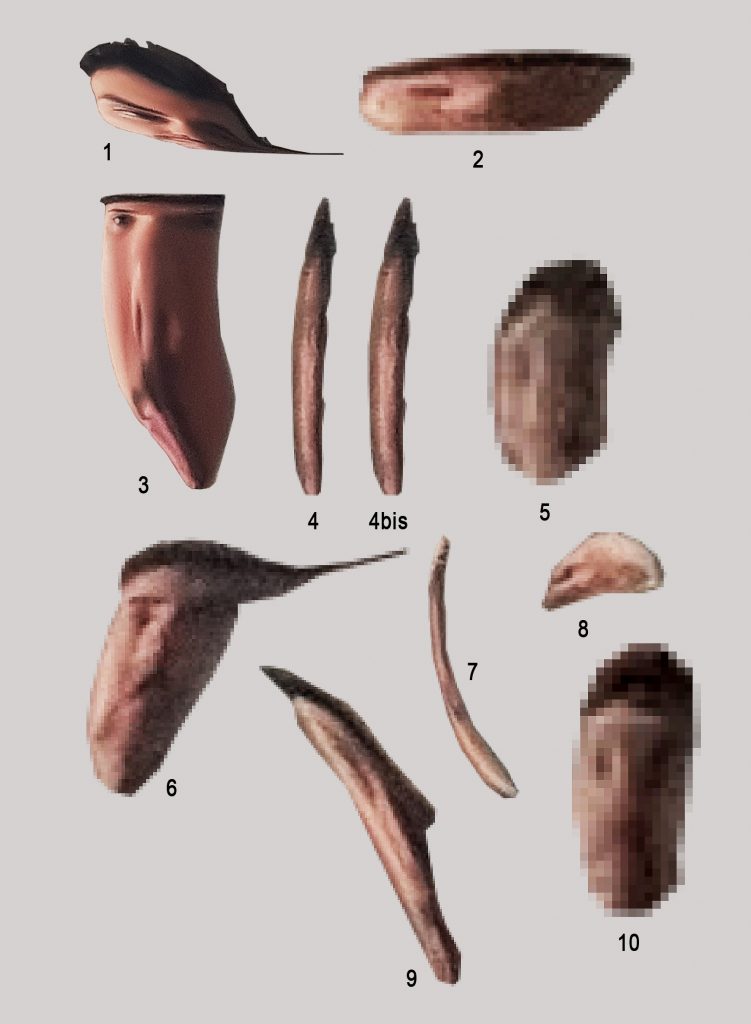

Finally, the reconstruction of each hug was processed in Premiere and Cinema 4D to enhance the contrast of the hug against the rest of the image. Here is the typology:

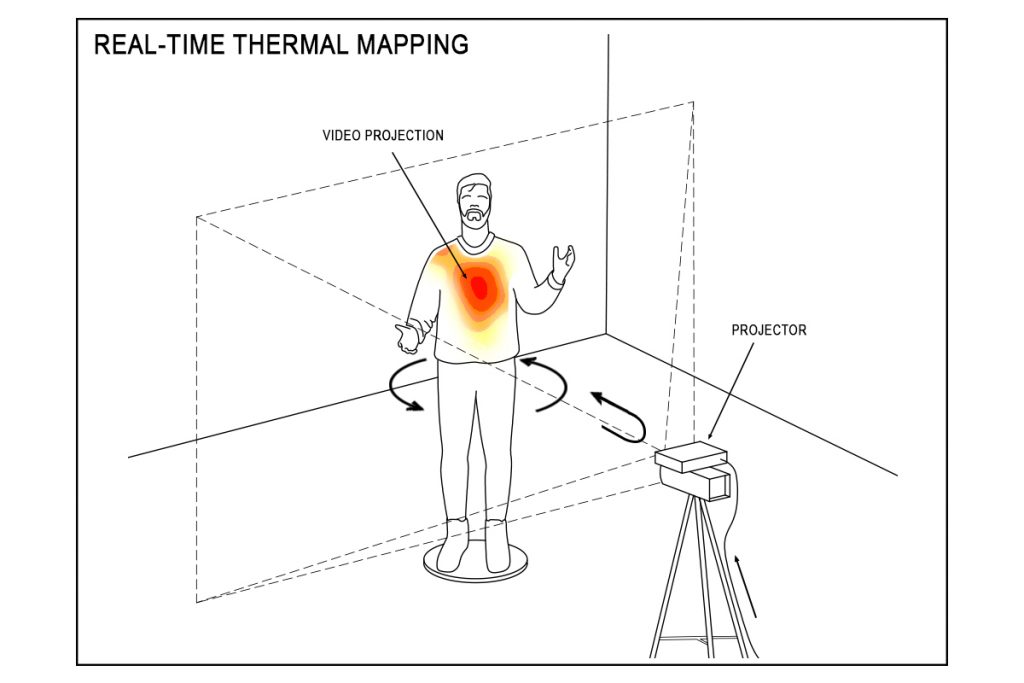
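The contrast enhancement was done in Premiere and Cinema 4D. For batch-processing thermal frames outside those tools, one common option is a percentile-based linear stretch, sketched below. The 2nd and 98th percentile cut-offs are hypothetical defaults, and this is not a reconstruction of the editing-software settings.

```python
import numpy as np


def contrast_stretch(image: np.ndarray, low_pct: float = 2.0, high_pct: float = 98.0) -> np.ndarray:
    """Linearly stretch intensities so the low/high percentiles map to 0 and 255."""
    lo, hi = np.percentile(image, [low_pct, high_pct])
    if hi <= lo:
        return image.copy()   # flat image: nothing to stretch
    scaled = (image.astype(np.float64) - lo) / (hi - lo) * 255.0
    return np.clip(scaled, 0, 255).astype(np.uint8)
```

Using percentiles rather than the absolute minimum and maximum keeps a few very hot or very cold pixels from compressing the range of the actual hug footprint.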

A real-time machine

My initial approach to visualizing the remnant heat of human interaction was to project a thresholded image of the heat footprint onto the very surface of the subject. The video signal was processed with an IP camera filter, which turned the IP stream into a webcam video signal, and with TouchDesigner, which reshaped the image and edited the colors.
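The thresholding step itself is simple; the live version ran in TouchDesigner. This NumPy sketch assumes a grayscale thermal frame in which brighter means warmer (a "white-hot" palette). The threshold and the projection color are hypothetical parameters. Everything below the threshold is set to black, which a projector renders as no light, so only the warm footprint is lit on the subject.

```python
import numpy as np


def heat_projection(thermal: np.ndarray, threshold: int, color=(255, 80, 0)) -> np.ndarray:
    """Turn a grayscale thermal frame (2D uint8, brighter = warmer, assumed)
    into an RGB image that is `color` where the frame exceeds `threshold`
    and black elsewhere."""
    mask = thermal > threshold
    out = np.zeros(thermal.shape + (3,), dtype=np.uint8)
    out[mask] = color   # light only the warm footprint
    return out
```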

Initially, this involved using a mannequin to extract a neat heat boundary. Although I did not use this setup in the end, because hugging an inanimate object is so disturbing, I discovered new affordances for exploring thermal mapping.

I hope to explore this technique further in the future.