- Track the flow field of people’s movement and apply its motion vector to other videos of people moving. (Inspiration)

- Put people in a dark room with a projector that maps onto anything that moves.

- Capture a series of people’s The Graduate Ending moments.

Author: Lukas

Small Spaces

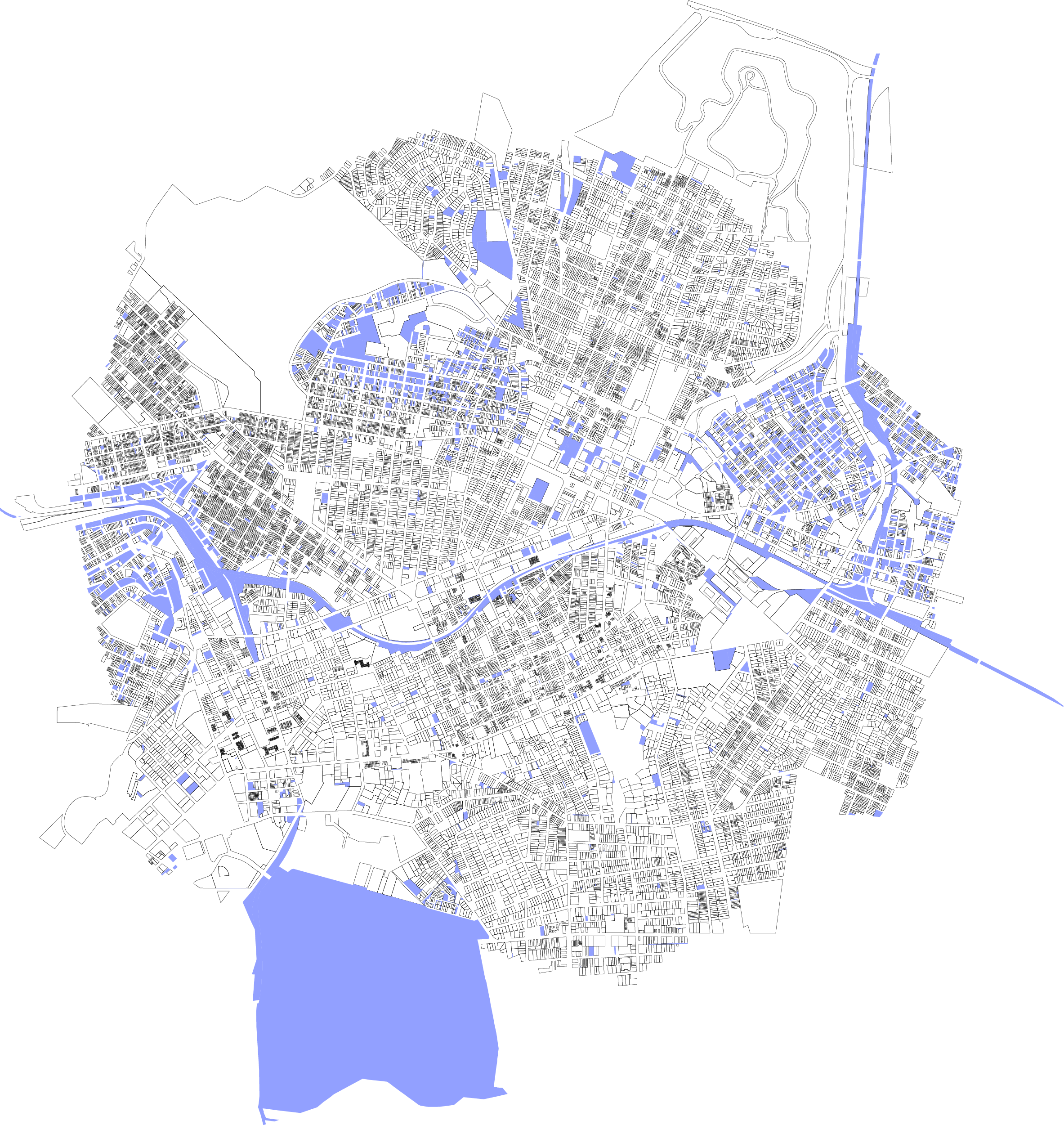

Small Spaces is a survey of micro estates in the greater Pittsburgh area as an homage to Gordon Matta-Clark’s Fake Estates piece.

Through this project, I hoped to build on an existing typology defined by Gordon Matta-Clark when he highlighted the strange results of necessary and unnecessary tiny spaces in New York City. These spaces are interesting because they push the boundary between object and property, and their charm lies in their inability to hold any substantial structure. It’s interesting that these plots even exist: one would assume some of the properties not owned by the government would be swallowed up into surrounding or nearby properties, but instead they have slipped through bureaucracy and zoning law.

To begin, I worked from the WPRDC Parcels N’ At database and filtered estates using a maximum threshold of fifty square feet of lot area. After picking through the data, I was left with eight properties. I then used D3.js and an Observable notebook to begin parsing the data and finding the specific corner coordinates of these plots.

At this point I reached the problem of representation. The original plan was to go to these sites, chalk out the boundaries, and photograph them. As I looked through the spaces, I realized many of them were larger than the lot area listed in the database. They were still small spaces, but large enough that the chalking approach would be awkward, especially since some sit on grass plots and one is a literal alleyway. Frustrated, I went to the spaces and photographed them, but I felt that the photos failed to capture what makes these spaces so charming. Golan suggested taping outlines of these spaces on the floor of a room to show that they could all fit, which would definitely capture some of the charm, but would also lose all of the context. In an attempt to get past this block, I made a Google map site to show off these plots, hoping to find inspiration by exploring them from above. Talking with Kyle led to a series of interesting concepts for how to treat these plots. One idea was to take a plot under my own care.

What seemed to be a good fit was drone footage zooming out, so that one can see these plots and their context. By the time I reached this conclusion, Pittsburgh had become a rainy gloom, so as a temporary stand-in, I made a video using Google Earth (as I was not able to get into Google Earth Cinema). Overall, I would call this project successful in capturing and exploring the data, but it falls short in representation. I hope to continue exploring how best to represent these spaces and to convey, through visual expression, why I find them so fascinating.
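
The filtering step described above (keeping only parcels at or below fifty square feet of lot area) can be sketched roughly as follows. This is a minimal sketch in Python rather than the D3.js notebook used for the project. The field names `parcel_id` and `lot_area_sqft` and the sample records are hypothetical placeholders, not the actual WPRDC schema or data, and skipping zero or missing areas is an assumption about how gaps in the data might be handled.

```python
# Hypothetical parcel records: field names and values are placeholders,
# not the real WPRDC schema or data.
SAMPLE_PARCELS = [
    {"parcel_id": "A", "lot_area_sqft": 12.0},
    {"parcel_id": "B", "lot_area_sqft": 4500.0},
    {"parcel_id": "C", "lot_area_sqft": 50.0},
    {"parcel_id": "D", "lot_area_sqft": None},  # missing area
    {"parcel_id": "E", "lot_area_sqft": 0.0},   # zero area, treated as a data gap
]

# Threshold taken from the writeup: fifty square feet of lot area.
MAX_LOT_AREA_SQFT = 50.0


def filter_small_parcels(parcels, max_area=MAX_LOT_AREA_SQFT):
    """Return parcels whose listed lot area is positive and at most max_area."""
    small = []
    for parcel in parcels:
        area = parcel.get("lot_area_sqft")
        if area is None:
            continue  # assumption: skip records with no listed area
        if 0 < area <= max_area:
            small.append(parcel)
    # Smallest plots first, to make picking through the results easier.
    return sorted(small, key=lambda p: p["lot_area_sqft"])


small_parcels = filter_small_parcels(SAMPLE_PARCELS)
print([p["parcel_id"] for p in small_parcels])
```

Because the listed lot area can differ from the real footprint, as I found on site, a filter like this only produces candidates that still need to be checked by hand.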

POST CRITIQUE FOLLOW UP:

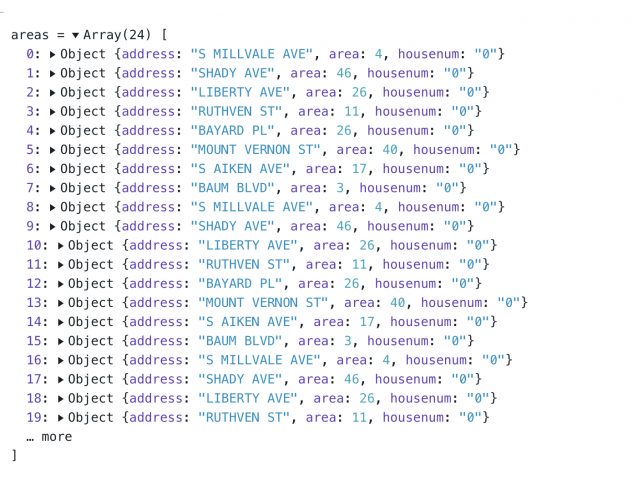

Kyle and I had discussed earlier the question of which lots have an address of zero, which is an interesting one given that all of these spaces bear that address. I decided to make a small change to my filter script to find out the answer. Below is the result. Feel free to check out the Observable notebook where I filter this data.

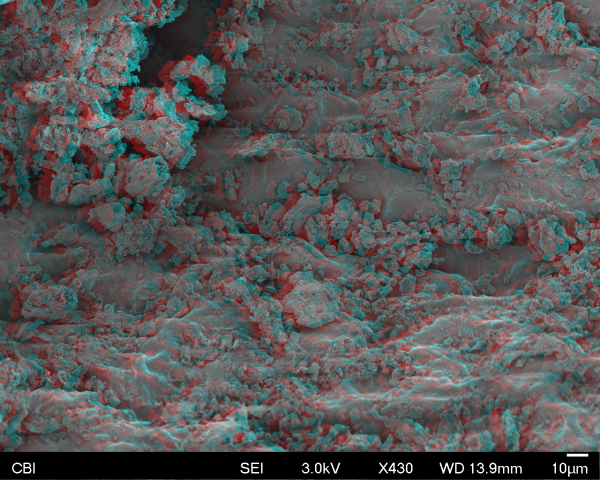

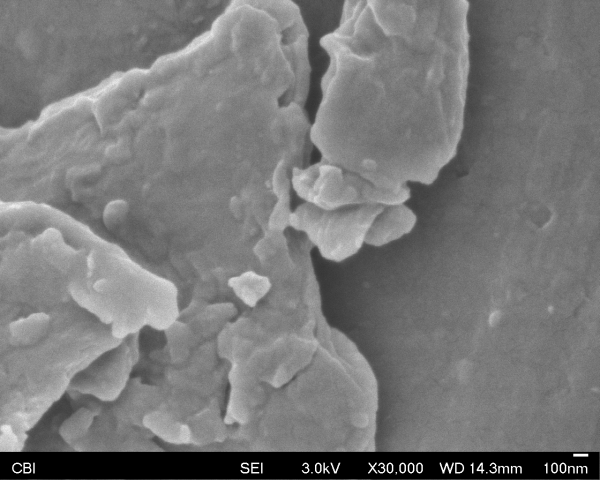
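
The change to the filter described above, from a lot-area threshold to an address of zero, could look something like the sketch below. It is written in Python rather than in the Observable notebook, and the field name `house_number` and the sample records are hypothetical placeholders rather than the real database columns or values.

```python
# Hypothetical parcel records: field names and values are placeholders.
SAMPLE_PARCELS = [
    {"parcel_id": "A", "house_number": "0"},
    {"parcel_id": "B", "house_number": "123"},
    {"parcel_id": "C", "house_number": ""},     # blank address
    {"parcel_id": "D", "house_number": 0},      # numeric zero
    {"parcel_id": "E", "house_number": "12A"},  # not a plain number
]


def has_zero_address(parcel):
    """Return True when the parcel's house number parses to zero."""
    raw = parcel.get("house_number")
    if raw is None:
        return False
    text = str(raw).strip()
    if not text:
        return False  # assumption: blank is "no address", not zero
    try:
        return int(text) == 0
    except ValueError:
        return False  # values like "12A" are not treated as zero


zero_lots = [p for p in SAMPLE_PARCELS if has_zero_address(p)]
print([p["parcel_id"] for p in zero_lots])
```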

SEM

Typology Machine Proposal

I propose, as an homage to Gordon Matta-Clark’s Fake Estates project, a survey of land plots in Pittsburgh that are under 20 feet. These plots are fascinating as they push and question the boundary between architecture and object scales, and begin to explore societal requirements that result in such spaces.

I have already begun researching this topic, and have found a series of these plots.

Postphotography

Nonhuman photography, as described in the article, is the ability of a sensor to capture data that would normally be invisible or inconspicuous to a human. An example of this nonhuman photography would be an MRI scanner, which reveals the insides of a human in slices of volume. A less practical example is Benedikt Groß’s “Who Wants to Be a Self-Driving Car?”, which explores what the data that self-driving cars parse looks like. Photography from these nonhuman conditions becomes interesting in that it can enhance data that we would never notice, or obscure data that would normally dominate one’s understanding. These images are useful as a lens of understanding, but also as a generator of artistic ideas.

Photography and Observation

Capture’s influence on typology and objectivity is a debated topic. Some lean to the side of “the camera cannot lie,” while others say that the camera “does not do justice” to its subject. To capture, one generally uses tools. The more complex these tools become, the greater the chance that bias is built into the captured data. One can look at Kodak and Shirley Cards as an example of bias even in how a photograph represents its own capture. Furthermore, in the context of art or design, a typology displayed is the result of some curatorial decision. Even to show every photo, recording, or point captured is a value judgement about the data, which is subject to the bias of the curator. To say one captured some datum is to say that datum was processed through a series of filters, logical and mechanical, which inherently changes the perception of the subject.

That’s not to say capture is not useful or reliable. Many tools are predictable and allow for scientific research. MRIs, X-rays, thermostats, barometers, and other capture tools reliably produce similar enough data each iteration that we feel comfortable letting them inform our decisions.

In the context of typologies, there is a certain ego to curating a selection of data and proclaiming that one has discovered or identified some special category. Who is anyone to proclaim that someone is a hero, or that this is a house, while this is a home?

Photogrammetry

Claire’s workshop was incredible. I made a 3D scan of Connie and my plush Dinosaur, Wobble.

Then I got meshes of me and Tatyana to turn us into sweater people using KeyShot.

Response To Steven

I really appreciate Steven’s description of Marmalade Type by Rus Khasanov. Specifically, Steven discusses how art can use the rigor and understanding of science as a means to create visually interesting pieces. The train of exploration Steven described is a wonderful example of how one can begin by asking standard scientific questions, such as “what is birefringence?”, and use the response as a generator for a project. I would be interested to see to what extent the images were edited and color corrected, and I am curious how much it matters whether someone stays true to the science of the project, given its art context.

Other projects viewed:

Escape Route

Dirk Koy is an incredible visual artist, and I could have mentioned a number of their pieces. One particularly relevant to the course is Escape Route, a video in which the viewer flies through a 360-degree photogrammetric scan of portions of Basel. As one flies through, the city itself deforms through a series of effectors, such as vertex displacement, and shifts between different visualizations of the data, such as mesh lines, photo color, or depth. Throughout, a catchy soundtrack meant to evoke a spy movie escape scene plays in the background.

This piece is impressive partially due to the sheer size of the capture, which I feel is worth mentioning. What become particularly relevant to the course are the choices that Dirk makes about visualization. There exists the objective Basel, the captured Basel, and the Basel that is visualized at a given moment, including the displaced geometric version.

The Camera, Transformed by Machine Learning

Since the advent of the camera, the interaction between user and device has evolved into a complex series of choices that allow for artistic opportunities. One can take advantage of sensor options, such as in long exposure photography. While exploring, one may run into the limitations of a sensor, which range from the technical, such as the maximum distance of a depth camera, to the phenomenal. The influx of machine learning tools allows the relationship between operator and camera to change. Limits such as a regular photograph being flat are surpassed with relative ease. The question of what the photographer is collecting becomes a focus. Is it an unprocessed data set to be used? Furthermore, a Duchampian relationship with AI becomes apparent, raising the question of whether the photographer is the AI or the one who frames the camera the AI uses, and of the degree of collaboration between the two.