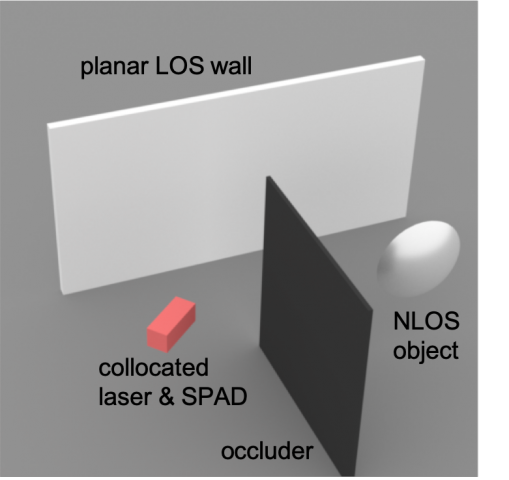

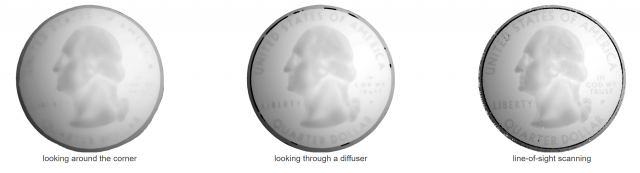

A group from the CMU Robotics Institute and a couple of other universities is working on a project that captures visual data from outside the camera's line of sight. The method records the light that falls on a wall the camera can see, then uses that information to reconstruct an image of an object hidden behind an obstructing surface. This works because a hidden object still reflects and absorbs light, and that changes the pattern of light that reaches, and bounces off, the visible wall. (You can read more about it here if you’re interested.)
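
To make the idea a bit more concrete, here is a toy sketch in Python. It is my own illustration and not the researchers' actual algorithm. It assumes the hidden scene is a row of patches, that each patch lights up each spot on the wall with a simple distance-based falloff, and that there is no noise. The names `transport_matrix`, `wall_brightness`, and `solve` are hypothetical helpers made up for this example.

```python
def transport_matrix(n, gap=1.0):
    """A[j][i]: how strongly hidden patch i lights wall spot j.

    Assumption: a toy falloff of 1 / (gap^2 + offset^2), not a real light model.
    """
    return [[1.0 / (gap ** 2 + (j - i) ** 2) for i in range(n)] for j in range(n)]


def wall_brightness(A, hidden):
    """Forward model: what the camera sees on the wall for a given hidden scene."""
    return [sum(a * h for a, h in zip(row, hidden)) for row in A]


def solve(A, y):
    """Recover the hidden scene from wall brightness by Gaussian elimination."""
    n = len(y)
    # Augmented matrix [A | y], copied so the inputs are not modified.
    M = [row[:] + [y_k] for row, y_k in zip(A, y)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        known = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - known) / M[r][r]
    return x


hidden = [0.0, 1.0, 0.3, 0.0]           # brightness of each hidden patch (made up)
A = transport_matrix(len(hidden))
observed = wall_brightness(A, hidden)   # the only thing the camera can measure
recovered = solve(A, observed)          # estimate of the hidden scene
```

In this idealized setup `recovered` matches `hidden` up to floating-point error. A real system has to deal with noise, unknown geometry, and far more unknowns than this, which is a big part of what makes the research hard.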

I find the project really interesting because it challenges a basic assumption about what a camera can do: that it can only capture what is within its line of sight. One potential application is driving, where a system like this could let drivers see around corners at intersections and avoid crashes.