Due to my frustration with my output in this class, art in general, and life, I am definitely behind schedule with my final project. Ultimately, I’ve decided to switch directions and work on something that may be less interesting as a capture method, but is more meaningful to me personally (and will hopefully be more meaningful to the viewer as well).

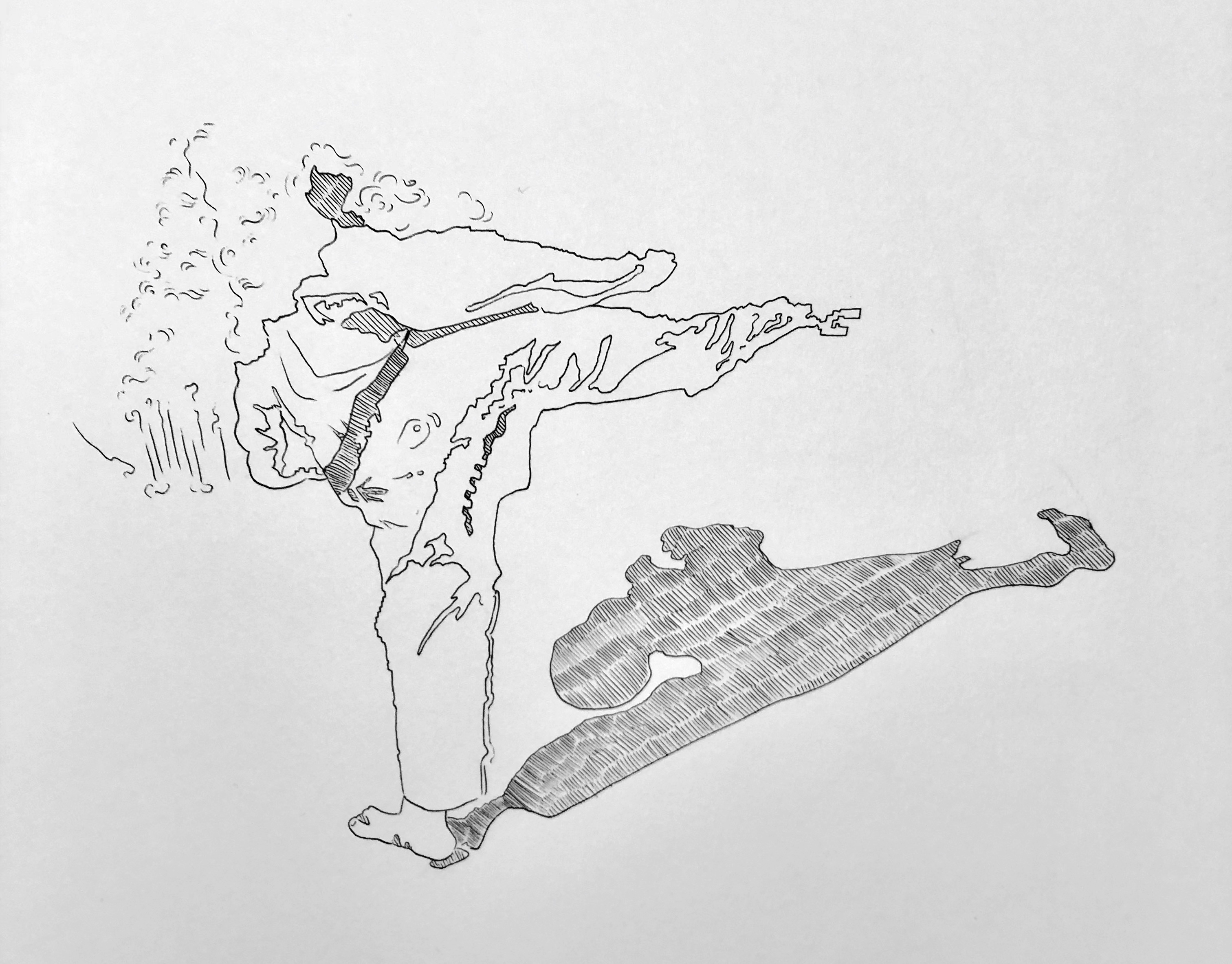

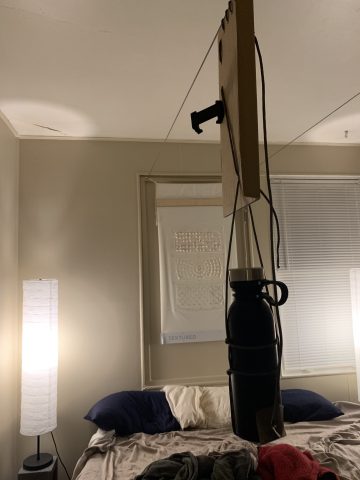

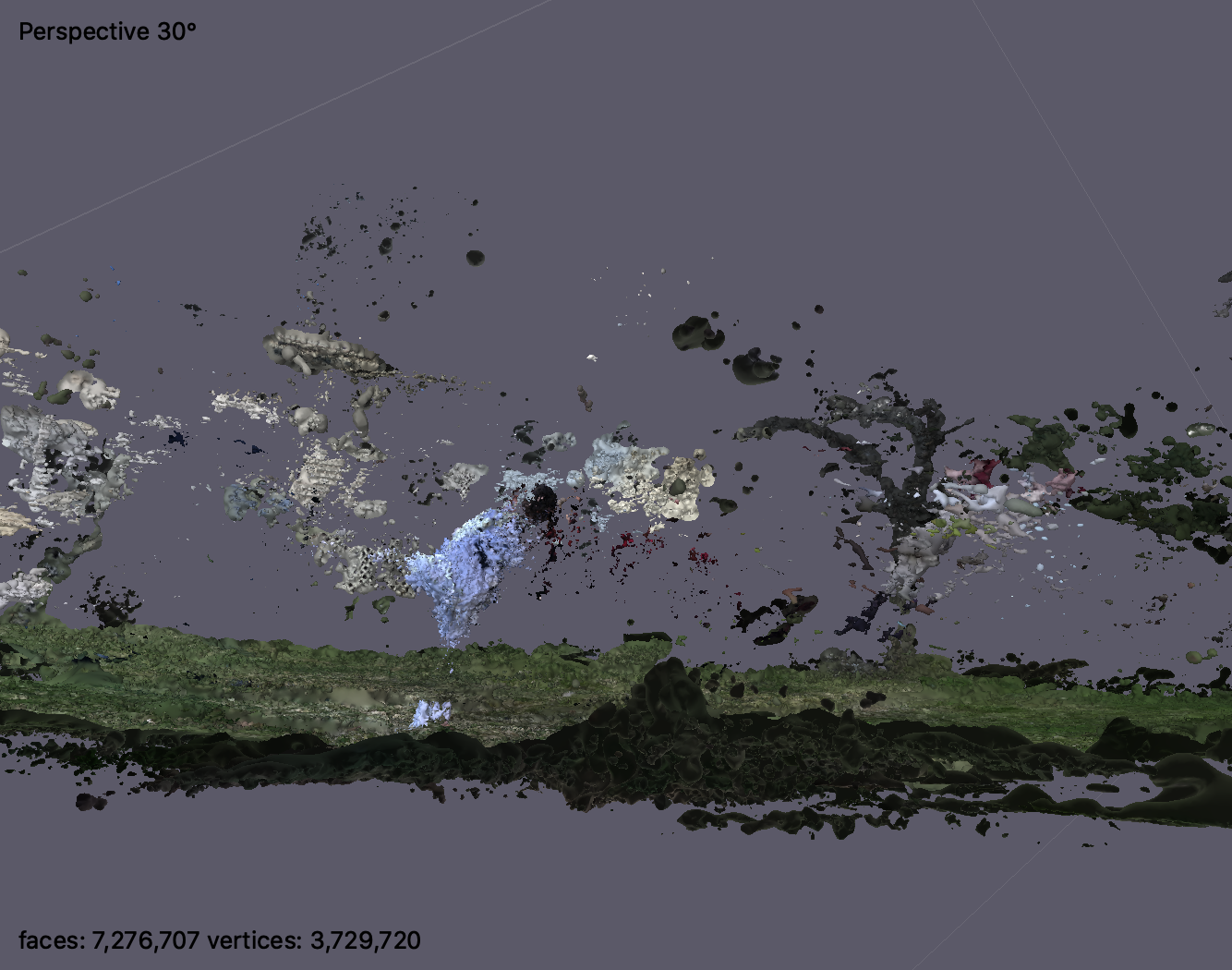

Over the past couple of weeks, I had my roommate Katy pose several times for Tae Kwon Do photogrammetry. We tried it with 5 people (the entire isolation pod) taking photos from different angles, but this led to background noise that reduced Katy herself to a sad blob. This is where I was last Thursday; since then, I’ve worked on three further plans.

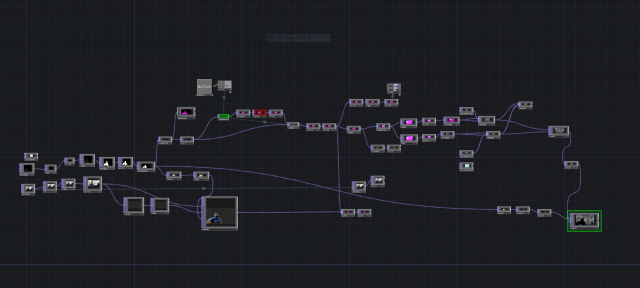

Plan A: A few days later we tried again with two photographers, which was a lot better but still not perfect. The model of Katy’s body lacked detail and the nunchucks she was holding never rendered correctly. Even running Metashape on her gaming GPU with 16GB of RAM, there was not enough memory to attempt a higher-quality mesh. I’m considering modeling in the nunchucks myself using Maya.

Plan B: I tried extracting frames from a 20-second video of Katy holding a kick, which gave me over a thousand high-quality images (I had to learn FFmpeg to do this, and command-line tools are scary). Metashape thought long and hard about these, but it cut off Katy’s head. Overall, this process has been frustrating because I’ve made decent photogrammetry of my own face before, so I don’t know what I’m doing wrong this time. Maybe the lighting was optimal in the STUDIO but not here, or maybe Katy was shifting her balance slightly, which can’t really be helped.
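
For anyone else scared of the command line, here is a minimal sketch of how this kind of frame extraction could be scripted from Python. It assumes `ffmpeg` is installed and on the PATH. The function names, file names, and the `fps` value are all placeholders for illustration, not necessarily the settings used for the video above. The optional `fps` argument samples fewer frames per second, which might be one way to hand the photogrammetry software a smaller image set.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_command(video, out_dir, fps=None):
    """Build an ffmpeg argument list that writes video frames as JPEGs.

    All names here are hypothetical; only the ffmpeg flags are real.
    """
    cmd = ["ffmpeg", "-i", str(video)]
    if fps is not None:
        # The fps filter resamples the video so fewer frames are written.
        cmd += ["-vf", f"fps={fps}"]
    # -qscale:v 2 requests high-quality JPEGs (lower numbers mean better quality).
    # frame_%04d.jpg numbers the output files frame_0001.jpg, frame_0002.jpg, ...
    cmd += ["-qscale:v", "2", str(Path(out_dir) / "frame_%04d.jpg")]
    return cmd


def extract_frames(video, out_dir, fps=None):
    """Create the output folder and run ffmpeg; raises if ffmpeg fails."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_ffmpeg_command(video, out_dir, fps), check=True)
```

Calling `extract_frames("kick.mp4", "frames", fps=5)` on a 20-second clip would write roughly 100 images instead of every frame. Leaving `fps` out keeps every frame of the video.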

Plan C: The content I am interested in working with is my old family videos and photos. I want to create a virtual space representing what my little brother’s room would have looked like in early 2008. I’ve been experimenting with the Colab notebook for 3D photo inpainting, which produces awesome images, but the actual 3D objects it produces are fairly useless height maps. Therefore, the space will incorporate two techniques. First, I will ask my parents to take photogrammetry-style pictures of my brother’s old stuffed animal dog. No matter how fucked up the model is, I will use it. In fact, it’ll be better if it’s a complete mess. Second, I’ll incorporate illustration by attempting to redraw the rest of his stuffed animals and the rug he had, which had train track graphics on it. Overall, I hope this combination of 3D and 2D elements will make for an interesting virtual space.