I have an incredible friend in the costume design program, Julie Scharf, and she is an artwork in herself. She is incredibly dedicated to her vintage clothing collection, the history and practice of performance costume, and queer imagery in the entertainment industry, and since seventh grade, she has not worn the same outfit twice.

She, as a stylist, and I, as a photographer, and both of us as queer artists, have partnered on an indefinitely long project: a critical photographic anthology of queer costume. This is not nearly a detailed enough description of it, but the idea is still in development and we don’t want to reveal too much about it yet.

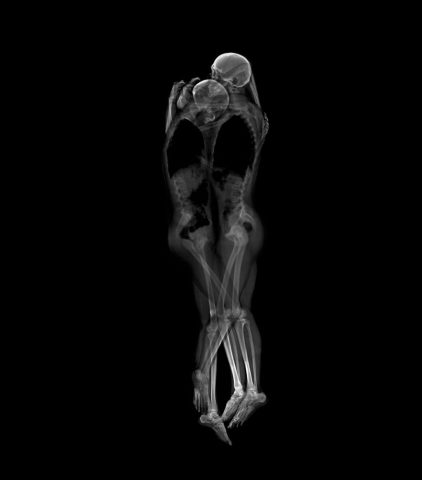

However, for about a month now, I have been photographing Julie’s outfits and her accompanying performances on many days of the week. Some of my favorites so far:

I would like to use the Person In Time project to create a work that would contribute to this larger project. The relevant “time” component here is that we are documenting Julie over time, which is in itself based on the historical timelines of costume, queerness, and performance. Julie and I are interested in expressing our ideas non-traditionally (media more queer than photography), so ExCap provides a perfect opportunity to start.

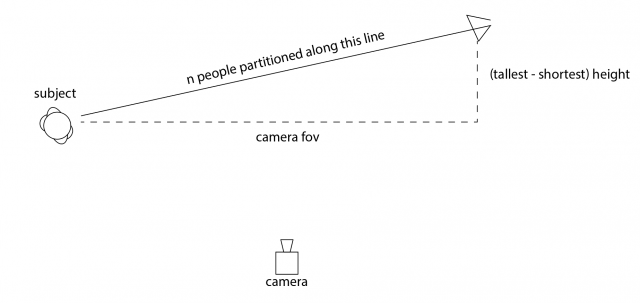

First, I would be most excited to computationally create my own slit-scan camera and take strange images of Julie and her outfits with it. This was inspired by Golan’s description of my last project as slit-scanned spatiotemporal sculptures. I wasn’t exactly sure what he meant by “slit-scanned”, so I looked it up and I am absolutely obsessed with it. Slit-scanning is essentially a long-exposure photography technique, except instead of layering entire frames taken over time on top of each other, only a thin slit of each frame is captured, and the slits are stitched together chronologically, left to right. This is the photography of time, not space. The images below are just a few of the incredibly beautiful applications of this technique.

Since I’d be making the camera myself, I see the potential for a lot of experiments as well: I could order the slits left to right, as is normally done, but I could also go right to left, up and down, and randomly, to name a few.
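As a rough sketch of how the slit ordering could work in code (not a finished camera), here is a dependency-free Python version. Everything in it is an assumption for illustration: the function name `slit_scan`, the `order` and `seed` parameters, and the idea that frames arrive as nested lists of pixels (in practice they would more likely be arrays read from a video file or webcam). It samples the same vertical column from every frame and lays those columns side by side, so the horizontal axis of the result is time.

```python
import random


def slit_scan(frames, slit_x=None, order="left_to_right", seed=0):
    """Build one image from a sequence of frames, one vertical slit per frame.

    frames: list of frames; each frame is a list of rows, each row a list of pixels.
    slit_x: column sampled from every frame (hypothetical default: the center column).
    order:  how the slits are arranged in the output image.
    """
    if not frames:
        raise ValueError("need at least one frame")
    height = len(frames[0])
    width = len(frames[0][0])
    if slit_x is None:
        slit_x = width // 2

    # positions[i] is the index of the frame whose slit lands in output column i.
    positions = list(range(len(frames)))
    if order == "right_to_left":
        positions.reverse()
    elif order == "random":
        random.Random(seed).shuffle(positions)
    elif order != "left_to_right":
        raise ValueError(f"unknown order: {order}")

    # Each output row collects that row's slit pixel from every chosen frame.
    return [[frames[t][y][slit_x] for t in positions] for y in range(height)]


# Tiny synthetic "video": frame t is a 2x4 image filled with the value t.
frames = [[[t] * 4 for _ in range(2)] for t in range(3)]
image = slit_scan(frames, order="right_to_left")
```

The output is as tall as one frame and as wide as the number of frames. A top-to-bottom variant would sample a row instead of a column and stack the rows vertically; that is left out here to keep the sketch short.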

My second idea is to create a video like Kylie Minogue’s Come Into My World. I don’t know how I’d be able to do this easily without the precision of the robot arm, so I guess I’d program the robot arm to film videos of Julie doing different performances in an exact circle and layer them on top of each other.

Finally, I also think it would be interesting to document Julie’s outfits with photogrammetry instead of regular photography, perhaps suspending them from the ceiling with string (which I could remove in post-production) to get 3D versions of this: