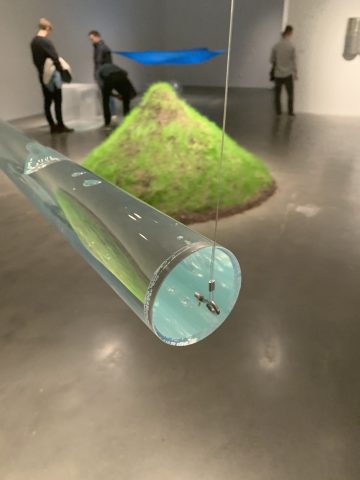

I was recently at the New Museum in New York City. I had been drawn there by the work of Hans Haacke, specifically his kinetic and environmental art. The museum had devoted one large space to the pieces in question: the room was filled with undulating fabric, a balloon suspended in an airstream, acrylic prisms filled to varying degrees with water, and more. Each of these pieces represented a unique physical system that was dependent on the properties of the physical space it was curated in or the viewers who interacted with it. One specific piece that caught my eye was a clear acrylic cylinder filled, almost completely, with water, and suspended from the ceiling. It was in essence a pendulum with water sloshing around inside. I found myself intrigued by the way the water moved and the air bubbles oscillated as the larger system moved. There’s a highly complex relationship between the dynamics of the fluid and those of the pendulum.

Connecting this back to experimental capture: how can we capture and highlight this kind of complex dynamic system? What if a robot-driven camera followed the motion of the watery pendulum? What if you used a really big camera that highlighted the act of the robot capturing the pendulum? If you curated the robot, the pendulum, and the captured video together, how would that change the piece?

I get excited by art that can spur ideas and inspiration in me, and that’s why I got excited about Hans Haacke’s kinetic art.

Link to New Museum Show: https://www.newmuseum.org/exhibitions/view/hans-haack