A Collection of Liquids Going from Cold to Hot, then (if possible) Hot to Cold.

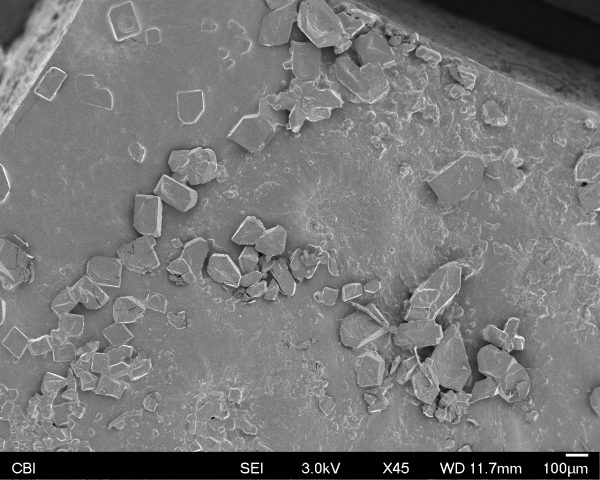

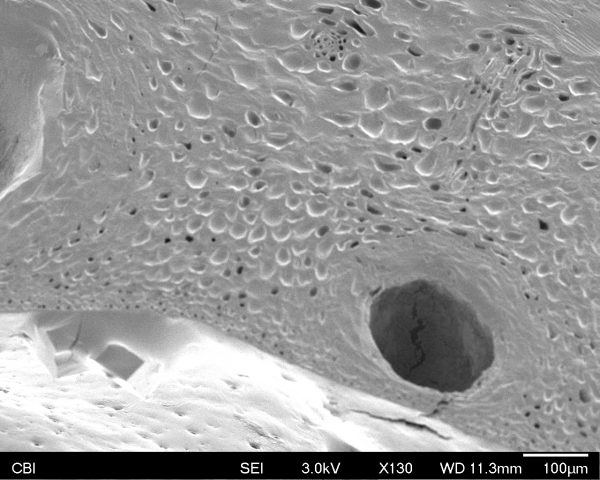

Sink Test

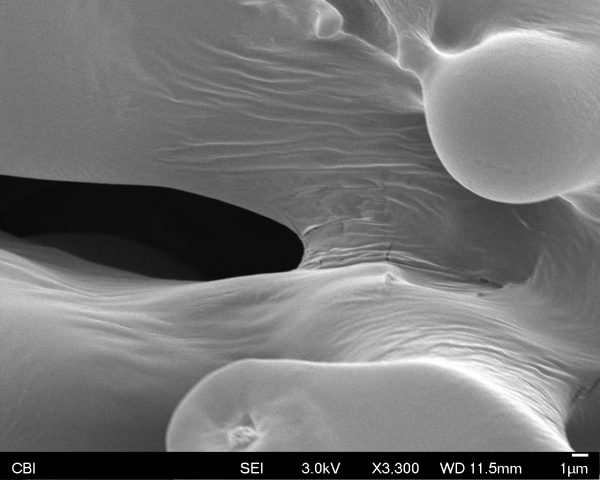

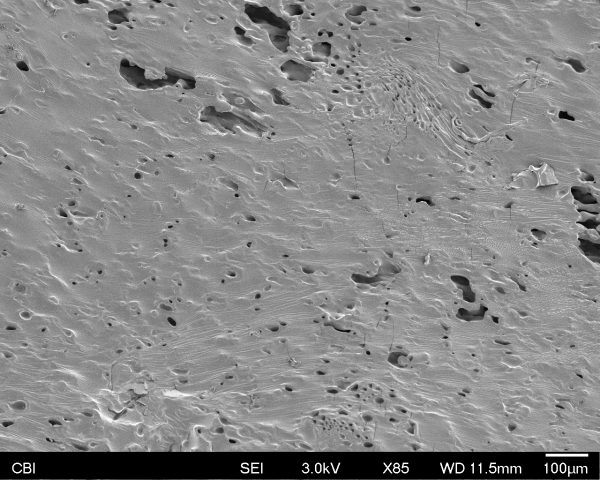

Shower Test

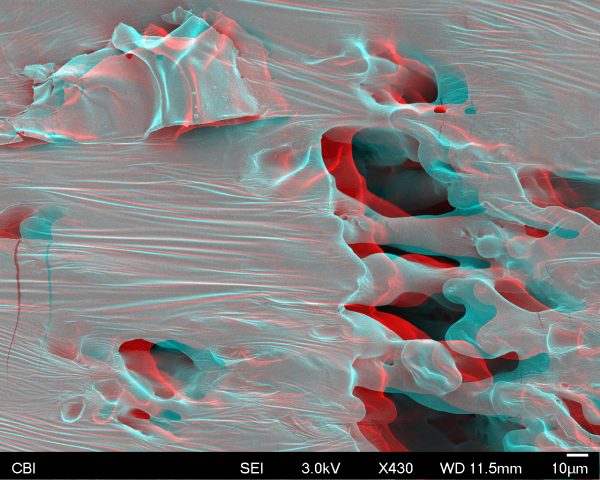

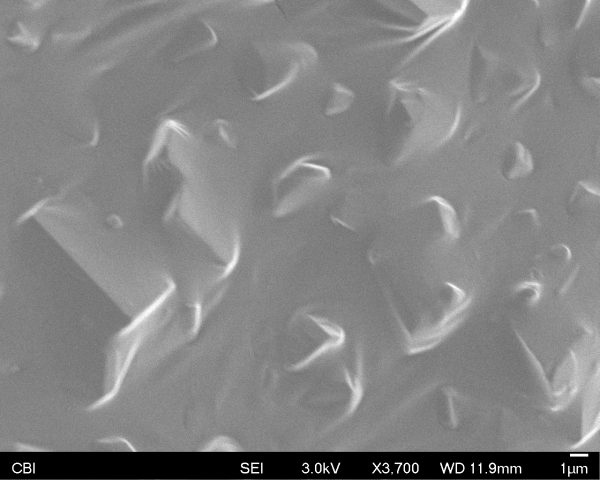

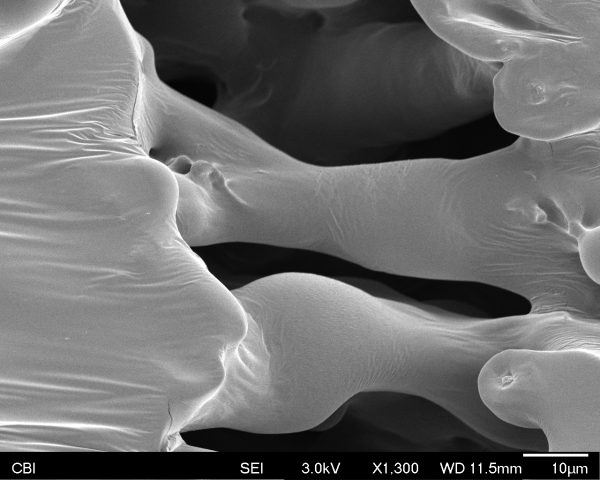

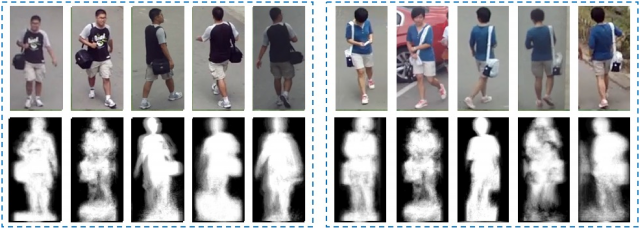

For my typology project, I am going to capture the thermal changes of liquids going from hot to cold, and then (if possible) cold to hot. I am interested in capturing not just the liquids, but also the surrounding environments that change in response to them. I feel like I often make art projects that have a specific (political) aim in mind from the outset. For this project, I will try a different approach and use the time to document a phenomenon I found interesting while playing around and experimenting with this heat camera. The FLIR E30bx Thermal Camera will be the capture device. Everything I am capturing is clear and 'invisible' without this type of imaging. I will let this inquiry drive my project instead of a more preconceived idea.

Questions I have:

- Is this remotely interesting? Why do this?

- How can I narrow or expand the scope of my inquiry?

- A few technical/aesthetic questions:

    - In my examples above I am using timelapse. How are you responding to that? Is something being missed in the slow change of the sides? I have the original versions on my computer and will also show them for comparison during our discussion.

    - What about showing the heat information on the image?

    - The capture is only 160×120 pixels, and I am capturing it by recording the live stream on my computer. Is the above imagery too grainy? Should I keep the images smaller?

- How am I going to display my typology project?

Places/things I will film:

- a variety of sinks

- a variety of showers

- ice melting

- water boiling

- rivers/streams at sunset/sunrise

- coffee dispensing from a large, shop-size dispenser

- hoses

- public and private locations of the above.

- park faucet

- eyewash station

- water fountain

- What about more gaseous situations, like steam? Or car exhaust? Do these 'count'?

- OK, some of the ideas toward the end of this list are just water dispensers, and their change in temperature might be very small (if visible at all). Should I include these? This relates to the question above about narrowing or expanding my scope.

Some related things I found inspiring/interesting/surprising:

- Linda Alterwitz, Signatures of Heat, Canine

- Picturing heat loss in buildings for ecological purposes

- Seeing air pollution with thermal imaging.

- Thermal weapon sights: hunting hogs (graphic)