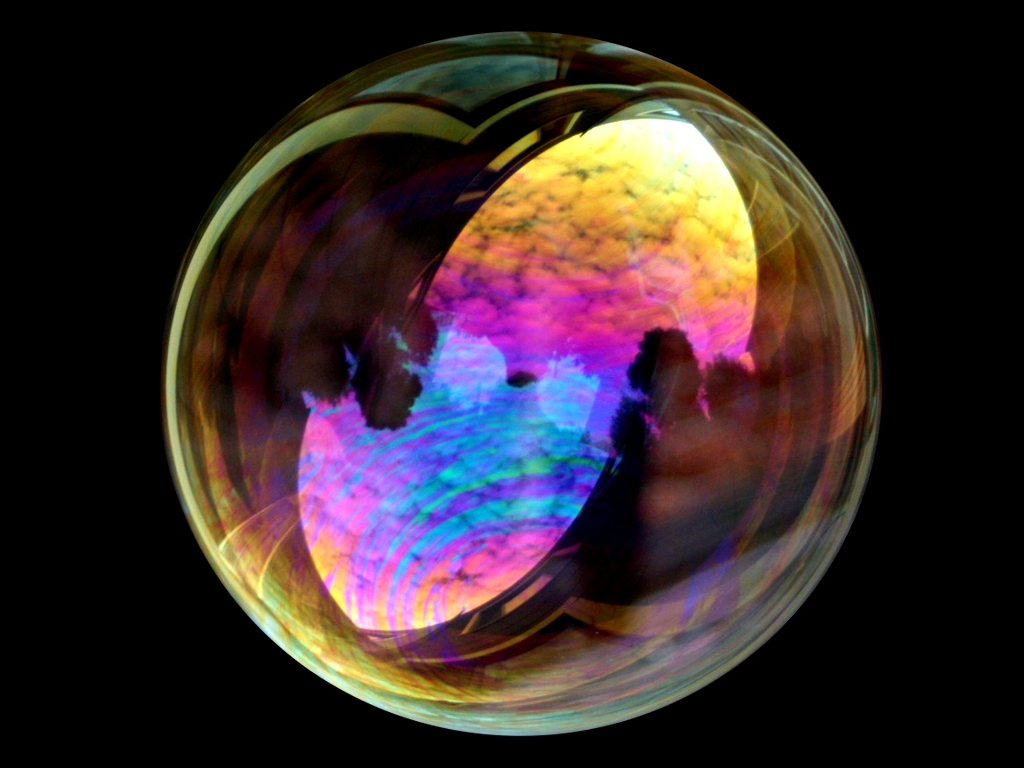

Photographing bubbles with a polarization camera reveals details we don't see with our bare eyes, including strong abstract patterns, many of which look like faces.

I wanted to know what bubbles look like when photographed with a polarization camera: how do they scatter polarized light? I became interested in this project after learning that polarization cameras exist, and I wanted to see how the world looks when viewed purely through the polarization of light. The idea to photograph bubbles came from something I think I misunderstood while digging around on the internet. For some reason I was under the impression that soap bubbles do particularly weird things with polarized light, which turns out to be incorrect: they do interesting things, but nothing especially unusual.

To dig into this question, I photographed bubbles under different lighting conditions with a polarization camera, varying my setup until I found something that gave interesting results. As I captured images, I played around with two variables: the polarization of the light shining on the bubbles (unpolarized, linearly polarized, or circularly polarized), and the position of the light (right next to the camera, or to the left of the camera, shining perpendicular to the camera's line of sight).

I found that placing the light next to the camera with a circular polarization filter produced the cleanest results. Placing the light perpendicular to the camera caused way too much variation in the backdrop, which made a ton of visual noise. The linear polarization filter washed the image out a little, and unpolarized light again made the background a bit noisy (though not as noisy as placing the light perpendicular to the camera).

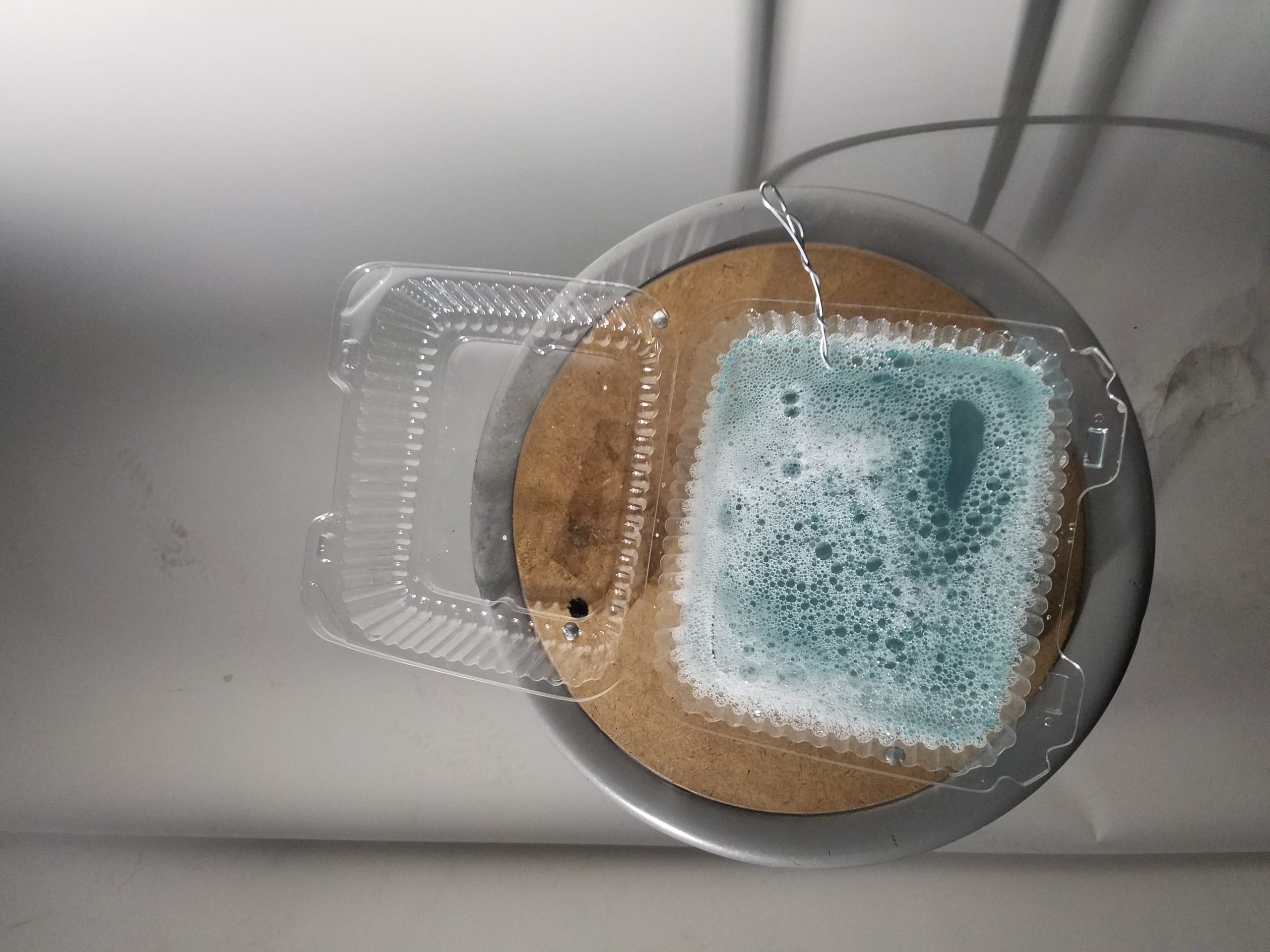

The photo setup I ended up using: the polarization camera, a light with a circular polarization filter, and my bubble juice.

My bubble juice (made from dish soap, water, and some bubble stuff I bought online that's pretty much just glycerin and baking powder).

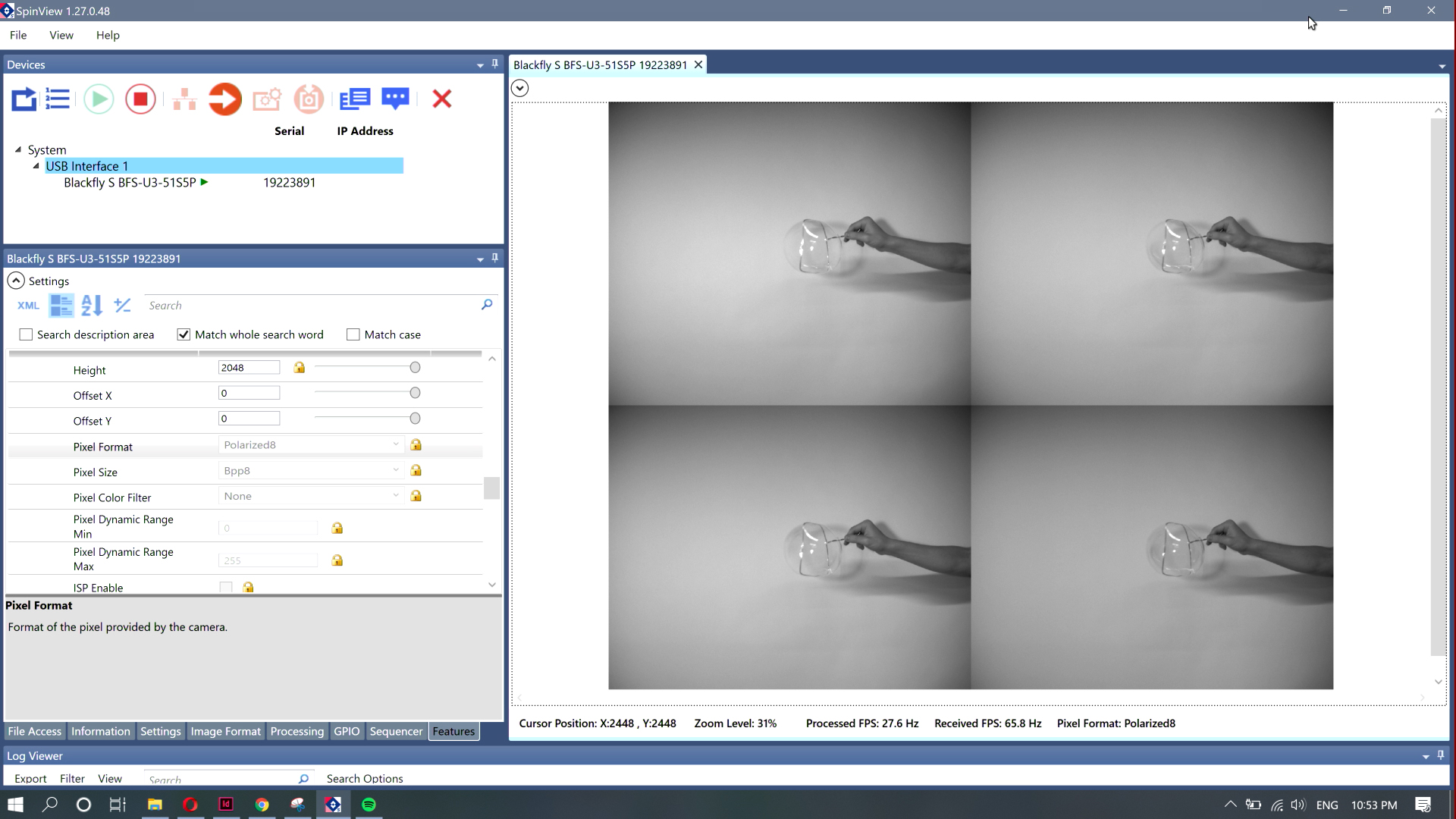

I recorded a screen capture of the camera's demo capture software running on my computer (I didn't have enough time to actually work with the camera's SDK). I viewed the camera output as a four-plane image showing what each of the camera's four subpixel orientations was capturing (light polarized at 90, 0, 45, and 135 degrees).
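Polarization cameras of this kind typically place a grid of tiny polarizing filters over the sensor, repeating in 2×2 blocks so that each block holds one pixel at each of the four angles; separating the four planes then amounts to taking every other pixel in each direction. The sketch below assumes that layout. The specific arrangement in `MOSAIC_LAYOUT` (90° and 45° on top, 135° and 0° on the bottom) is a hypothetical placeholder to check against your own sensor's documentation, and `split_mosaic` is an illustrative name, not part of any camera SDK.

```python
# Hypothetical 2x2 superpixel layout: (row offset, column offset) -> polarizer angle in degrees.
# This arrangement is an assumption; check the datasheet for your camera's sensor.
MOSAIC_LAYOUT = {(0, 0): 90, (0, 1): 45, (1, 0): 135, (1, 1): 0}


def split_mosaic(raw, layout=MOSAIC_LAYOUT):
    """Split a raw polarization mosaic (a list of pixel rows) into four
    half-resolution planes, returned as a dict keyed by polarizer angle."""
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("mosaic dimensions must be even")
    planes = {angle: [] for angle in layout.values()}
    for r in range(0, height, 2):
        # Collect one output row per angle from this pair of sensor rows.
        rows = {angle: [] for angle in layout.values()}
        for c in range(0, width, 2):
            for (dr, dc), angle in layout.items():
                rows[angle].append(raw[r + dr][c + dc])
        for angle, row in rows.items():
            planes[angle].append(row)
    return planes


# A 2x4 mosaic holds two superpixels side by side.
planes = split_mosaic([[1, 2, 3, 4],
                       [5, 6, 7, 8]])
print(planes[90], planes[0])  # [[1, 3]] [[6, 8]]
```

Because each plane keeps one pixel out of every 2×2 block, it has half the width and half the height of the full sensor image.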

An image of my arm I captured from the screen recording.

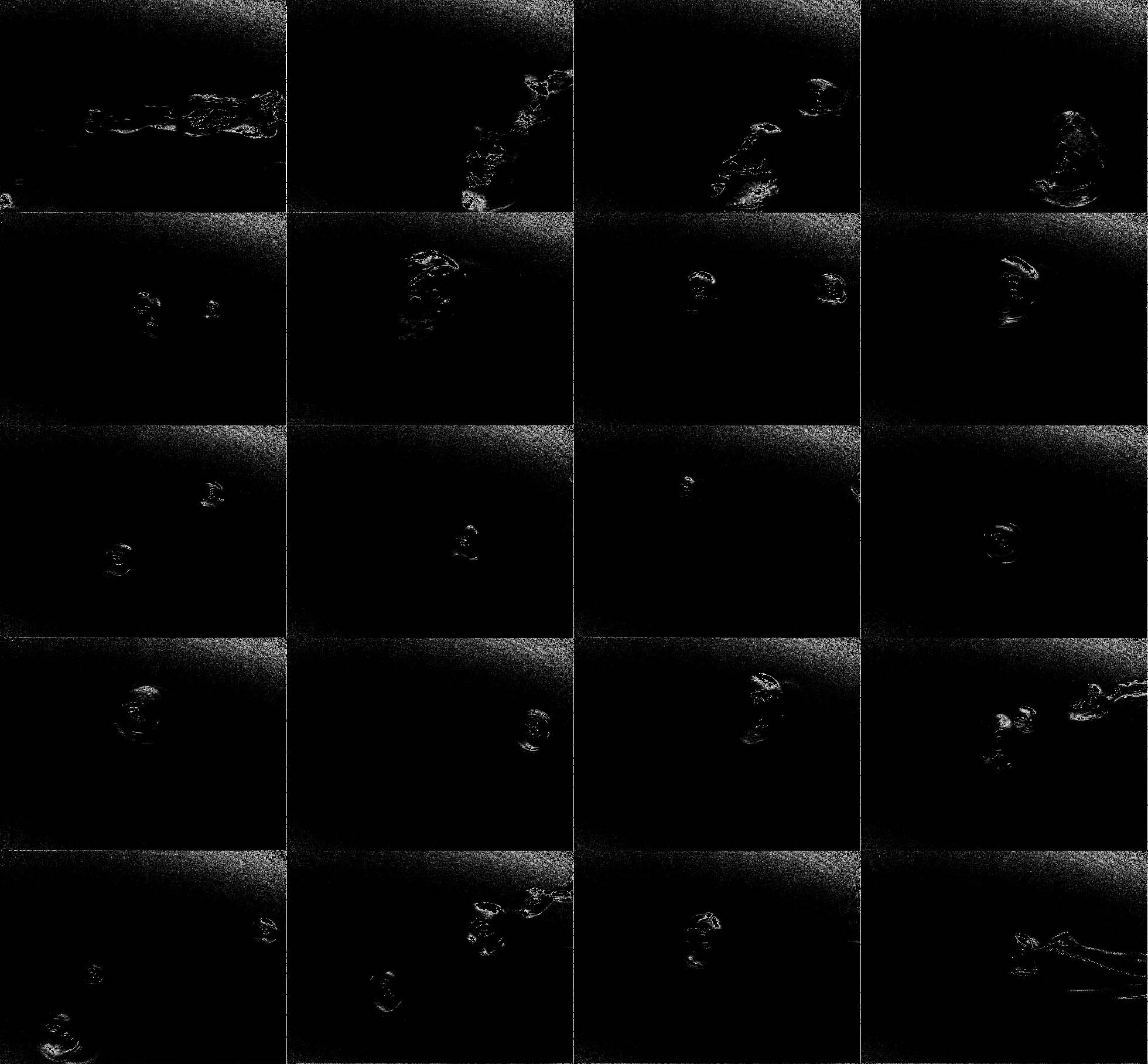

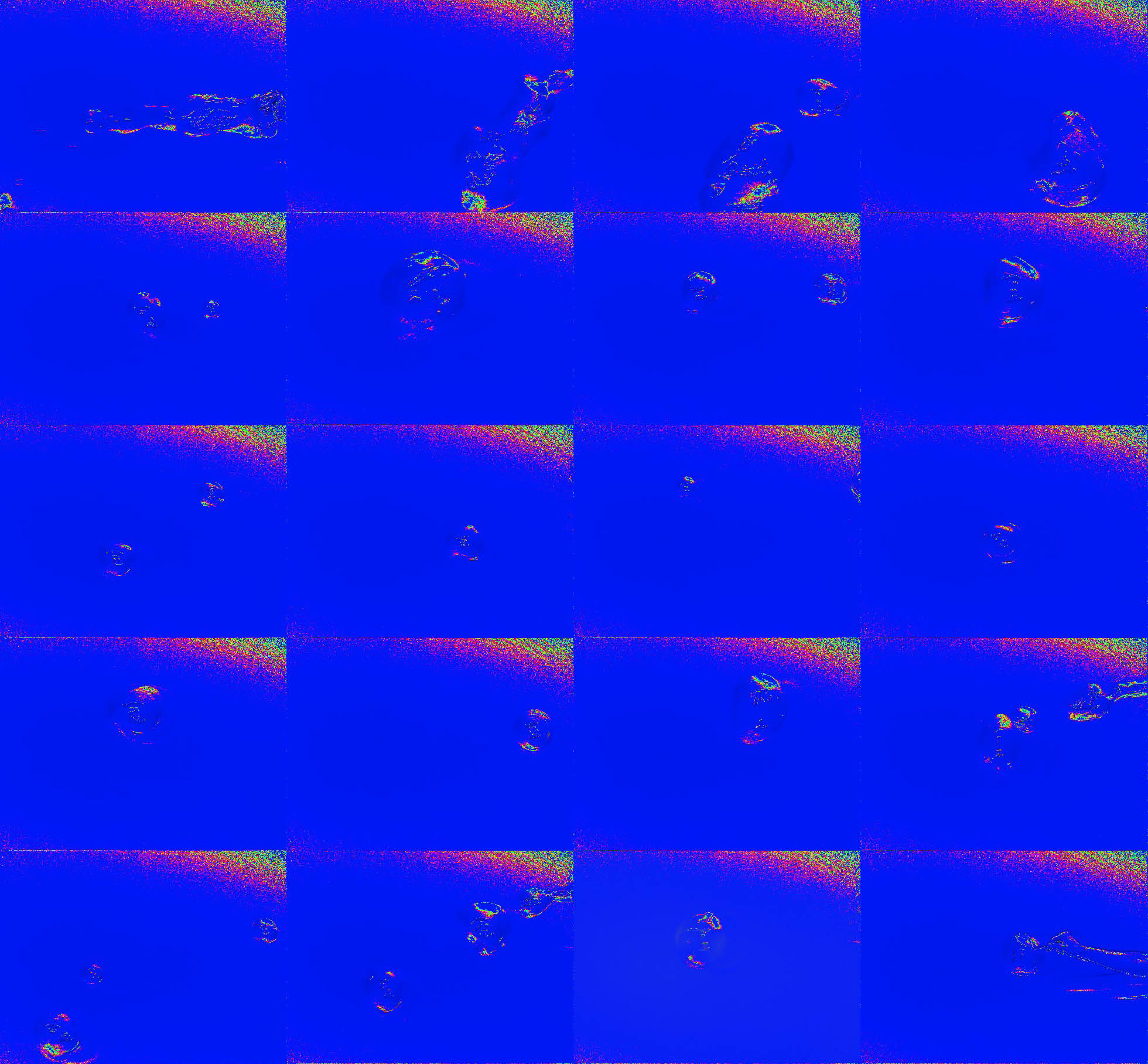

I grabbed shots from that recording and ran them through a Processing script I wrote that cut out each of the four planes and used them to generate three images: an angle of polarization image (a black-and-white image mapped to the direction the polarized light is oriented), a degree of polarization image (a black-and-white image showing how strongly polarized the light is at each point), and a combined image (using the angle of polarization for the hue, and mapping the saturation and brightness to the degree of polarization).
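The original Processing script isn't reproduced here, so the following is only a sketch of one common way to do these steps, written in Python with plain lists so it needs nothing beyond the standard library. It assumes the screenshot is a 2×2 tiled frame, and the quadrant order it uses (90° top-left, 0° top-right, 45° bottom-left, 135° bottom-right) is a hypothetical guess based on the order listed above. It derives the degree of linear polarization (DoLP) and angle of linear polarization (AoLP) from the linear Stokes parameters; `crop_quadrants`, `polarization_pixel`, and `render` are illustrative names.

```python
import colorsys
import math


def crop_quadrants(frame):
    """Cut a 2x2 tiled frame (a list of pixel rows) into four equal tiles.

    The tile-to-angle assignment below is a hypothetical guess; check it
    against an actual capture before trusting the output.
    """
    half_h, half_w = len(frame) // 2, len(frame[0]) // 2

    def tile(r0, c0):
        return [row[c0:c0 + half_w] for row in frame[r0:r0 + half_h]]

    return {90: tile(0, 0), 0: tile(0, half_w),
            45: tile(half_h, 0), 135: tile(half_h, half_w)}


def polarization_pixel(i0, i45, i90, i135):
    """Return (dolp, aolp) for one pixel: dolp in [0, 1], aolp in radians in [0, pi)."""
    s0 = (i0 + i45 + i90 + i135) / 2.0  # total intensity
    s1 = i0 - i90                        # horizontal minus vertical
    s2 = i45 - i135                      # 45 degrees minus 135 degrees
    if s0 <= 0:
        return 0.0, 0.0  # black pixel: no usable polarization information
    dolp = min(1.0, math.hypot(s1, s2) / s0)     # clamp noisy values above 1
    aolp = (0.5 * math.atan2(s2, s1)) % math.pi  # atan2 keeps the quadrant
    return dolp, aolp


def render(planes):
    """Build DoLP and AoLP grayscale images (0-255) plus a combined RGB image
    that uses AoLP as hue and DoLP as both saturation and brightness."""
    height, width = len(planes[0]), len(planes[0][0])
    dolp_img, aolp_img, combined = [], [], []
    for y in range(height):
        d_row, a_row, c_row = [], [], []
        for x in range(width):
            dolp, aolp = polarization_pixel(planes[0][y][x], planes[45][y][x],
                                            planes[90][y][x], planes[135][y][x])
            d_row.append(round(dolp * 255))
            a_row.append(round(aolp / math.pi * 255))
            r, g, b = colorsys.hsv_to_rgb(aolp / math.pi, dolp, dolp)
            c_row.append((round(r * 255), round(g * 255), round(b * 255)))
        dolp_img.append(d_row)
        aolp_img.append(a_row)
        combined.append(c_row)
    return dolp_img, aolp_img, combined


# A 2x2 frame gives one pixel per tile; these values describe fully horizontal (0 degree) polarized light.
frame = [[0, 200],
         [100, 100]]
dolp_img, aolp_img, combined = render(crop_quadrants(frame))
print(dolp_img, aolp_img, combined)  # [[255]] [[0]] [[(255, 0, 0)]]
```

Using DoLP for both saturation and brightness means weakly polarized regions fade toward black, so only strongly polarized areas show up as bright color in the combined image.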

Degree of polarization image of my arm.

Angle of polarization image of my arm.

Combined image of my arm.

Editing the images I had collected turned out to be more challenging than I had anticipated. If I were doing this properly, I would have captured the footage with the SDK instead of screen recording the demo software, since the screen recording left me with incredibly low-resolution results. I also suspect the math I used to compute the degree and angle of polarization was a little off: my images looked quite different from what I saw when I viewed the same conditions with the demo software's built-in degree and angle presets. (I had captured the raw four-channel view instead of those presets because it gave me the most freedom to construct different representations after the fact.)
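For reference, a widely used way to get these quantities from four intensities measured at 0°, 45°, 90°, and 135° goes through the linear Stokes parameters. This is the standard textbook formulation, not a reconstruction of the math in the script used here:

$$
\begin{aligned}
S_0 &= \tfrac{1}{2}\left(I_{0} + I_{45} + I_{90} + I_{135}\right) \\
S_1 &= I_{0} - I_{90} \\
S_2 &= I_{45} - I_{135} \\
\mathrm{DoLP} &= \frac{\sqrt{S_1^2 + S_2^2}}{S_0} \\
\mathrm{AoLP} &= \tfrac{1}{2}\,\operatorname{atan2}\!\left(S_2,\, S_1\right)
\end{aligned}
$$

Two easy places for this kind of calculation to drift are using a plain arctangent of $S_2/S_1$ instead of $\operatorname{atan2}$ (which loses the quadrant, folding all angles into a 90° range) and dropping the factor of $\tfrac{1}{2}$ in the angle. Pixel values taken from a screen recording may also not be linear in the light reaching the sensor, which would skew the ratio in the degree of polarization.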

While I got some interesting results, including strange outline patterns in the degree of polarization (DoLP) shots of the bubbles that I wasn't at all expecting, they were not as interesting or engaging as they could have been. I think I was mainly limited by the amount of time I could dedicate to this project. With more time, I would have run more photo sessions, experimented with even more lighting variations, and, most importantly, used the SDK to capture the data as accurately as possible. I imagine a significant amount of data was lost as the images were compressed and resized on their way from the camera to the screen, to the recorded video, to still images, and finally to the output of the Processing script; doing all of this in a single script built on the camera SDK could have avoided that loss.