Warning: This post contains flashing images.

Disclaimer: I do not own any of the footage used; it is the property of the film studios listed below:

The Godfather (1972) – Paramount Pictures, Raging Bull (1980) – United Artists, The Matrix (1999) – Warner Bros., Whiplash (2014) – Sony Pictures Classics, Jaws (1975) – Universal Pictures

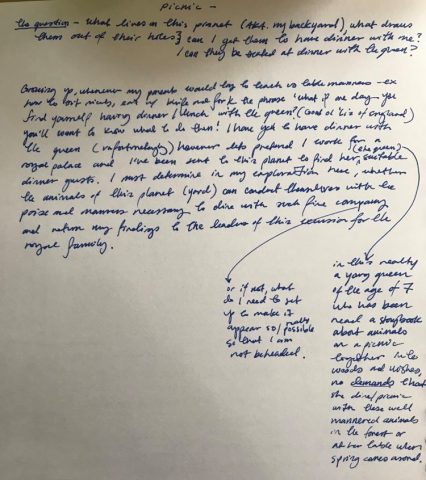

For my Typology Machine I wanted to explore Walter Murch’s theory (proposed in his book In the Blink of an Eye) that if a film captures its audience, viewers will blink roughly in unison. Building on this, I asked a series of questions:

1.) Will the audience blink in unison?

2.) Does the genre or content of a film affect the rate at which people blink?

3.) Are there specific moments or edits on which you would expect viewers to blink?

4.) To what extent can the frames missed during blinks summarise the scene being watched?

How did I do it?

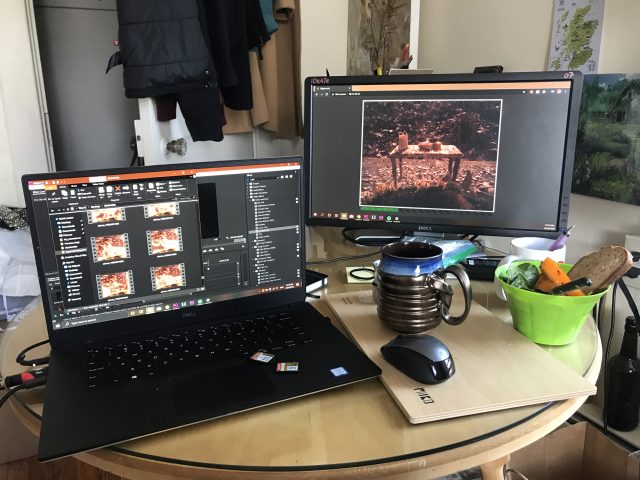

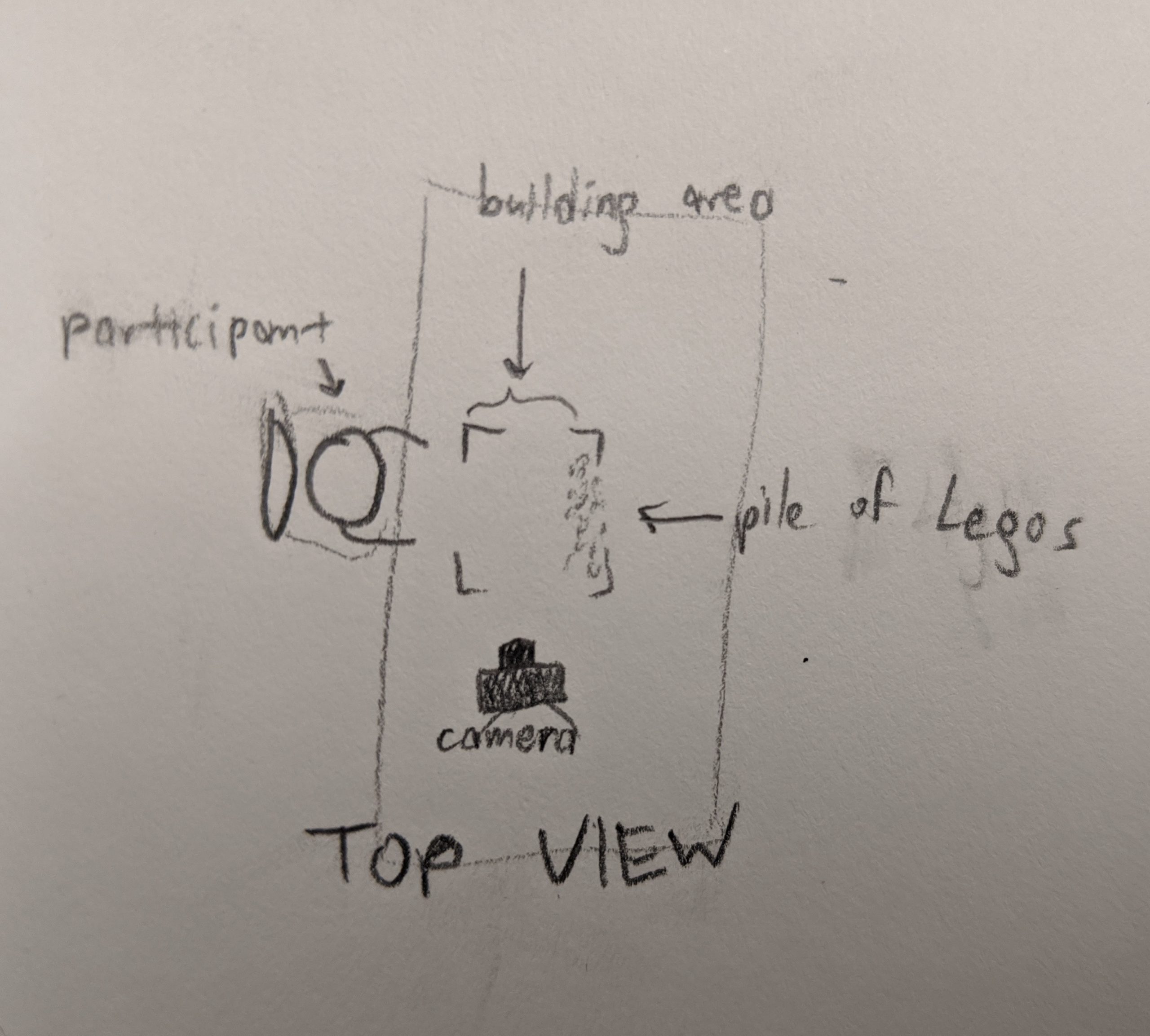

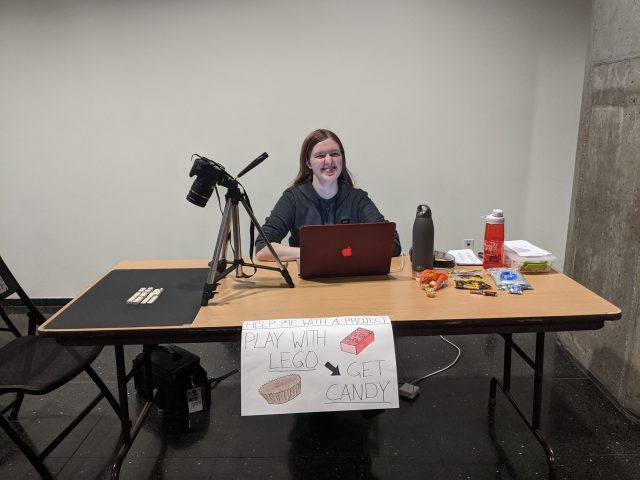

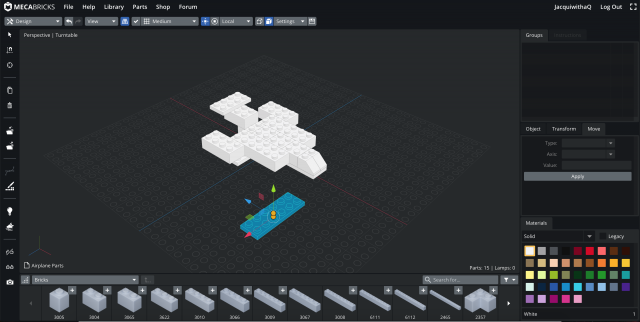

Using Kyle McDonald’s BlinkOSC, I used the setup below (just in a different space):

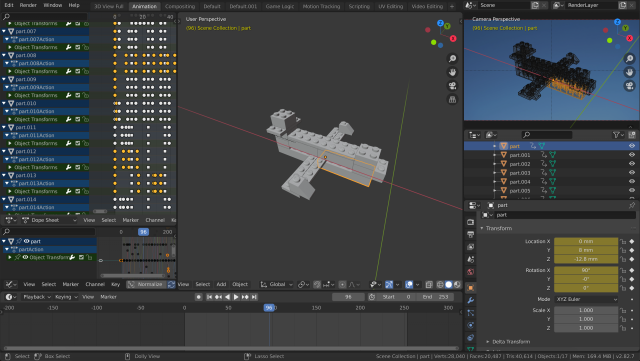

I had four participants watch the footage for me. All four sessions were recorded in the same room. I sat on the same side of the table as each participant, about 3 feet away, so that they couldn’t see my screen but I could still see the monitor and make sure the face capture was stable. I modified BlinkOSC so that whenever the receiver detected a blink, it saved a PNG of the frame on screen at the instant the viewer blinked. On top of this, I ran a second version of the receiver that overlaid a red screen whenever someone blinked, and I screen-recorded it.
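The receiver modification comes down to one piece of bookkeeping: turning the moment of a blink into the frame that was on screen. As a minimal sketch, assuming the receiver knows how many seconds into the clip a blink happened and that the clip plays at a constant frame rate (the 24 fps default and both function names are assumptions, not BlinkOSC's own code), the frame index is the elapsed time multiplied by the frame rate, rounded down.

```python
import math


def frame_index_at(blink_time_s: float, fps: float = 24.0) -> int:
    """Return the 0-based index of the frame on screen at blink_time_s.

    Hypothetical helper: assumes a constant frame rate and that the
    blink time is measured from the start of the clip.
    """
    if blink_time_s < 0 or fps <= 0:
        raise ValueError("blink time must be >= 0 and fps must be > 0")
    return math.floor(blink_time_s * fps)


def frames_for_blinks(blink_times_s, fps: float = 24.0):
    """Map every blink timestamp to the frame that would be saved as a PNG."""
    return [frame_index_at(t, fps) for t in blink_times_s]


if __name__ == "__main__":
    # Placeholder timestamps in seconds, not data from the sessions.
    print(frames_for_blinks([0.5, 2.0, 3.25]))  # prints [12, 48, 78]
```

Rounding down picks the frame that was already showing when the blink began, rather than the next one.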

I had the viewers watch 5 clips in this order:

1.) The opening sequence of The Godfather (1972) (WHY? This was edited by Walter Murch, so as to test Murch’s theory on his own work)

2.) The montage sequence from Raging Bull (1980) (WHY? Raging Bull is widely considered one of the best-edited films ever, according to a survey by the Motion Picture Editors Guild. I chose this montage sequence because it is a very specific kind of editing, but also one that is familiar to most people: the home video.)

3.) The bank sequence from The Matrix (1999) (WHY? A very action-heavy scene with destruction of both people and the environment)

4.) The ‘Not Quite My Tempo’ sequence from Whiplash (2014) (WHY? Not only a very emotionally heavy scene, but also a scene from a contemporary movie lauded for its editing)

5.) The shark reveal jump-scare from Jaws (1975) (WHY? A jump-scare, and another film lauded for its editing)

They watched these with short breaks in between (time for me to set up the receiver for the next scene, and for the viewers to emotionally reset).

The Results

Firstly: Will the audience blink in unison?

The short of it: no.

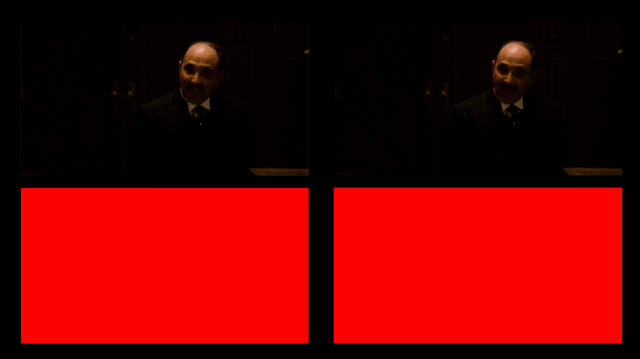

At no point in any of the five clips did all four people blink together; there were only a couple of instances of two people blinking at once. I found these by editing the recordings into a four-channel video and scrubbing through for every instance of overlapping blinks. [The videos are too large for this website but they will live here for interested parties.]
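The overlap search above was done by eye. If each viewer's blinks had also been logged as timestamps, the same search could be automated. The sketch below is hypothetical: it assumes a list of blink times in seconds per viewer, and it treats blinks that start within `window_s` of each other as simultaneous (the 0.2 s window is an arbitrary assumption, not a measured value).

```python
def group_blinks(blinks, window_s=0.2):
    """Group blinks from different viewers that start within window_s of each other.

    blinks: dict mapping a viewer label to a list of blink times (seconds).
    Returns a list of (start_time, viewers) for groups with 2+ distinct viewers.
    """
    # Flatten every viewer's blinks into one time-sorted list of events.
    events = sorted((t, viewer) for viewer, times in blinks.items() for t in times)
    groups = []
    i = 0
    while i < len(events):
        start = events[i][0]
        viewers = set()
        j = i
        # Collect every event that starts inside the window opened at `start`.
        while j < len(events) and events[j][0] - start <= window_s:
            viewers.add(events[j][1])
            j += 1
        if len(viewers) >= 2:
            groups.append((start, sorted(viewers)))
            i = j  # skip past this group so it is not counted twice
        else:
            i += 1
    return groups


if __name__ == "__main__":
    # Placeholder data for four viewers, not the recorded sessions.
    demo = {"A": [1.0, 5.0], "B": [1.1, 9.0], "C": [3.0], "D": [5.15]}
    found = group_blinks(demo)
    print(found)
    print("all four together:", any(len(v) == 4 for _, v in found))
```

Checking whether any group contains all four viewers answers the "unison" question directly; the window size is the main design choice and would need tuning against the recordings.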

Godfather:

Raging Bull:

The Matrix:

Whiplash:

Jaws:

Does Genre affect blinking?

To crunch the numbers:

For The Godfather I had an average of 109 blinks over a 6:20 (380 s) clip = 0.29 blinks per second

For Raging Bull I had an average of 48 blinks over a 2:34 (154 s) clip = 0.31 blinks per second

For The Matrix I had an average of 79 blinks over a 3:18 (198 s) clip = 0.40 blinks per second

For Whiplash I had an average of 80 blinks over a 4:21 (261 s) clip = 0.31 blinks per second

For Jaws I had an average of 17 blinks over a 1:24 (84 s) clip = 0.20 blinks per second
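Each rate is simply the average blink count divided by the clip length in seconds. A small sketch that redoes the arithmetic from the stated counts and mm:ss durations (the helper names are my own):

```python
def mmss_to_seconds(mmss: str) -> int:
    """Convert a 'minutes:seconds' string such as '6:20' to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# Average blinks per viewer and clip length, as stated in the post.
CLIPS = {
    "The Godfather": (109, "6:20"),
    "Raging Bull": (48, "2:34"),
    "The Matrix": (79, "3:18"),
    "Whiplash": (80, "4:21"),
    "Jaws": (17, "1:24"),
}


def blink_rates(clips):
    """Return blinks per second for each clip."""
    return {name: blinks / mmss_to_seconds(length) for name, (blinks, length) in clips.items()}


if __name__ == "__main__":
    for name, rate in blink_rates(CLIPS).items():
        print(f"{name}: {rate:.2f} blinks/s")
```

Running it prints each rate to two decimal places; for Jaws, for example, 17 blinks over 84 s comes out at about 0.20 blinks per second.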

From this I can draw the somewhat tenuous conclusion (I still need to do the proper statistics) that action does indeed cause us to blink more.
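One simple way to start on the proper statistics is a chi-square test of whether every clip shares a single underlying blink rate (a Poisson homogeneity test). This is a sketch under strong assumptions: it treats blinks as independent Poisson events, ignores differences between viewers, and should be given the total blink count per clip summed over all viewers, not the per-viewer averages. The function names are hypothetical; 9.488 is the standard chi-square critical value for 4 degrees of freedom (five clips minus one) at the 5% level.

```python
# Chi-square critical value for df = 4 (five clips) at alpha = 0.05.
CRITICAL_DF4_ALPHA05 = 9.488


def chi_square_equal_rates(counts, durations_s):
    """Pearson chi-square statistic for H0: all clips share one blink rate.

    counts: total blinks per clip, summed over viewers.
    durations_s: clip lengths in seconds (only their proportions matter).
    """
    if not counts or len(counts) != len(durations_s):
        raise ValueError("need one duration per count")
    pooled_rate = sum(counts) / sum(durations_s)
    stat = 0.0
    for observed, duration in zip(counts, durations_s):
        expected = pooled_rate * duration  # blinks expected if every clip had the pooled rate
        stat += (observed - expected) ** 2 / expected
    return stat


def rates_differ(counts, durations_s, critical=CRITICAL_DF4_ALPHA05):
    """True if equal rates are rejected; the default critical value assumes five clips."""
    return chi_square_equal_rates(counts, durations_s) > critical
```

With a different number of clips the degrees of freedom change, so the critical value must change too (or `scipy.stats.chi2.sf(stat, df)` can give a p-value directly). A test that also models viewer-to-viewer variation would be more defensible with only four participants.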

Are there specific moments or edits you expect a viewer to blink on?

I would hazard a tenuous no.

The ‘jump-scare’ from Jaws:

The chair throwing scene from Whiplash:

In this second case it is harder to pin down an exact moment, but the lack of a communal response (at most two people blinking at the same time) suggests that there is no definitive moment when everyone blinked.

Summarising the scenes?

Now we get into a much more subjective domain. The GIFs below are made from all the collected blink frames.

We can consider an individual’s sense of what was missed:

or

The shared, communal experience of what is missed. (These currently play at different speeds, but I will re-edit them later.)

On top of this, we can also play these frames back at the original frame rate, which on one level explores notions of linearity and indeed how our eyes function.

Then there is the combined collection of what each viewer missed, which I feel opens up a potentially interesting discussion: comparing what we collectively view through what each of us missed.

Going further:

1.) After recording all my content, I had the stark realisation that all of the clips I chose were predominantly filled with men. Thelma Schoonmaker (Raging Bull) and Verna Fields (Jaws) were the main editors of their respective films, but I don’t feel this is enough to balance it out. A more varied and inclusive range of content is a must going forward.

2.) This also suggests that different kinds of drama might be worth looking at, i.e. how different kinds of emotion might affect how we blink.

3.) I feel more abrupt jump-scares, and horror as a genre overall, would be worth looking at. The jump-scare itself has evolved a fair amount over time, so contemporary horror might be worth comparing to older horror.

4.) All of my clips were watched individually on a monitor and then compared. While this is more indicative of how technology has changed the way we watch film today, I feel the group aura that is part of watching a film in a cinema is worth looking at. This would entail a much larger-scale capture, and most likely a re-orientation of the technology I use.

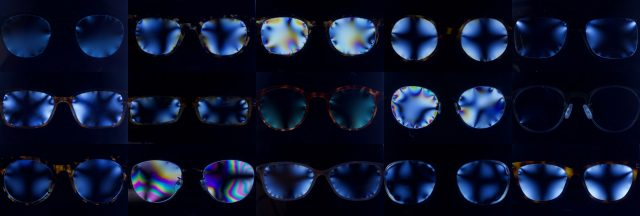
