When a sonogram is used as a device for portraiture, what nuances in the motion of the heart can be seen across people? When people encounter a sonogram-enabled view of their heart, it’s usually in a scary, small, cold room. Our relationship with that view is a clinical one: terrifying and disembodying.

However, there is value in re-encountering the heart outside of a medical context. Contact with hidden parts of the body can become, as opposed to an experience of fear, one of joyful exploration. We can observe our hearts moving with rhythm and purpose; a thing of beauty. Conversely, we can appreciate the weird (and meaty) movement that is (nearly) always there with us. A collection of hearts helps us explore the diversity in this motion.

Check out the interactive —> cathrynploehn.com/heart-sonogram-typology

Toolkit: Wireless ultrasound scanner, by Sonostar Technologies Co., Adobe After Effects, Ml5.js

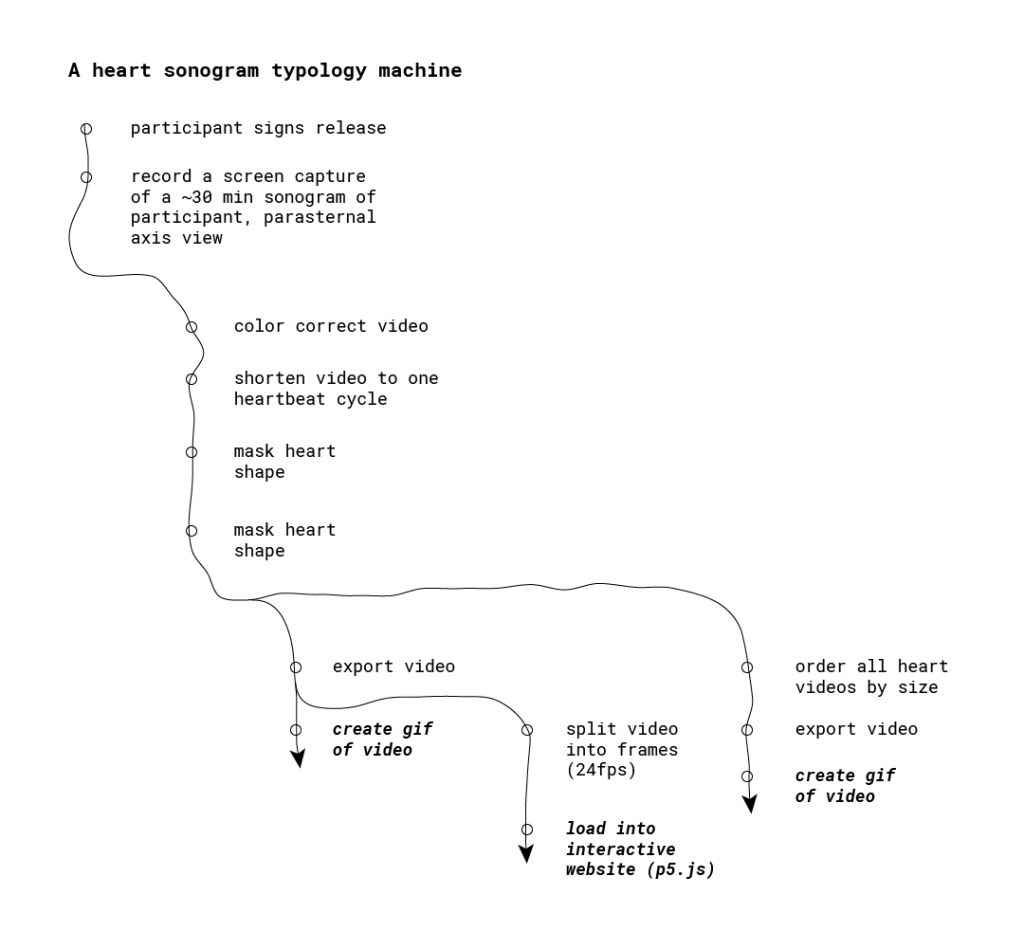

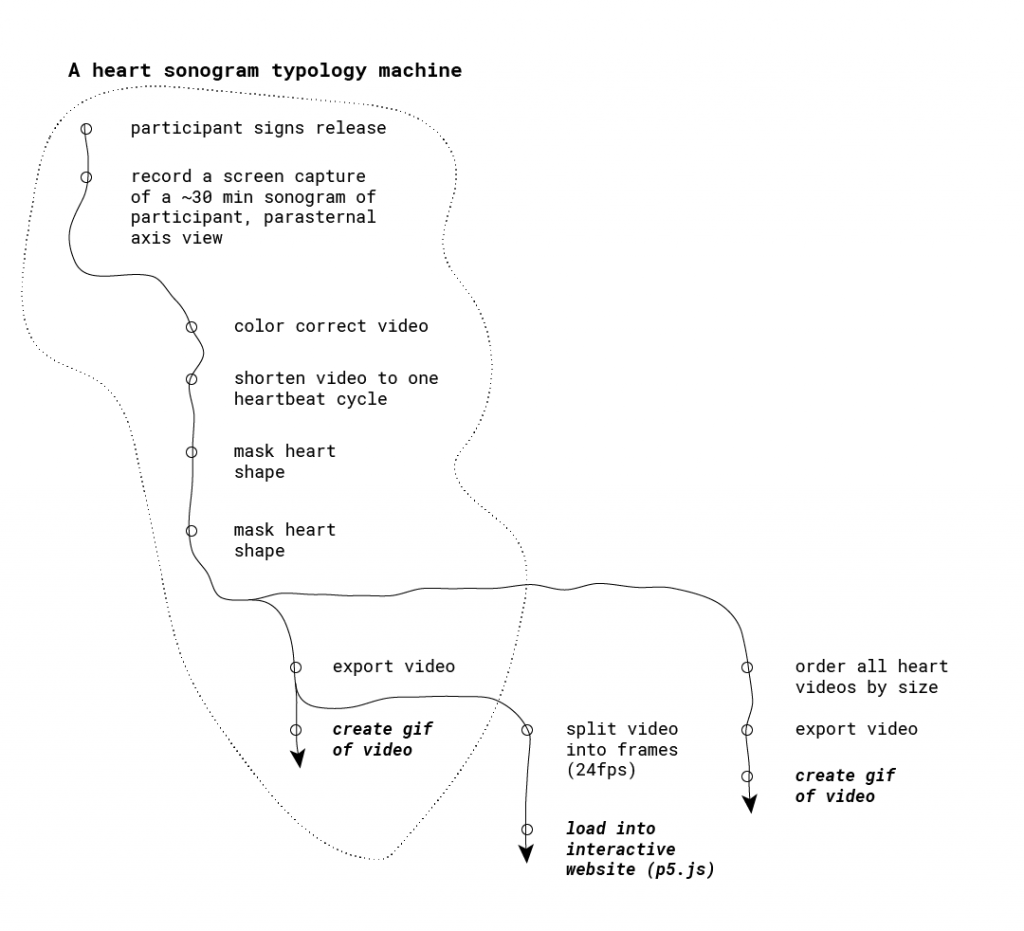

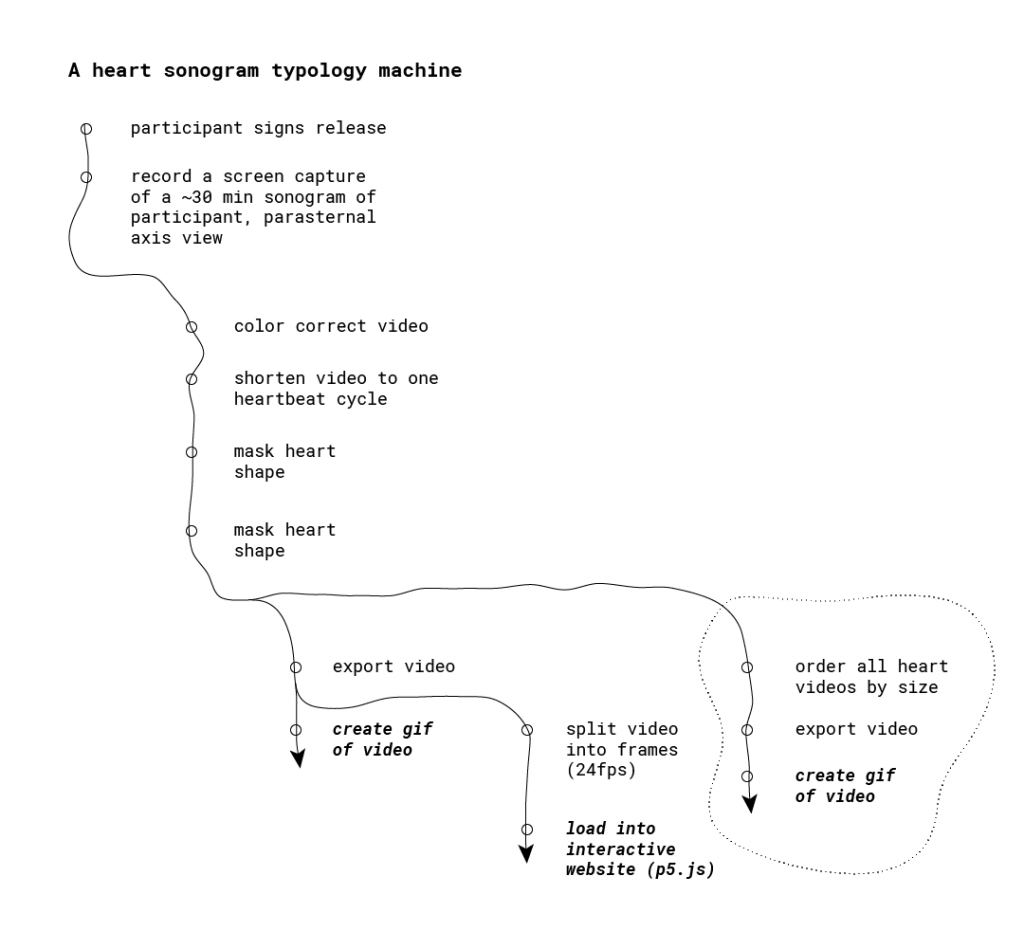

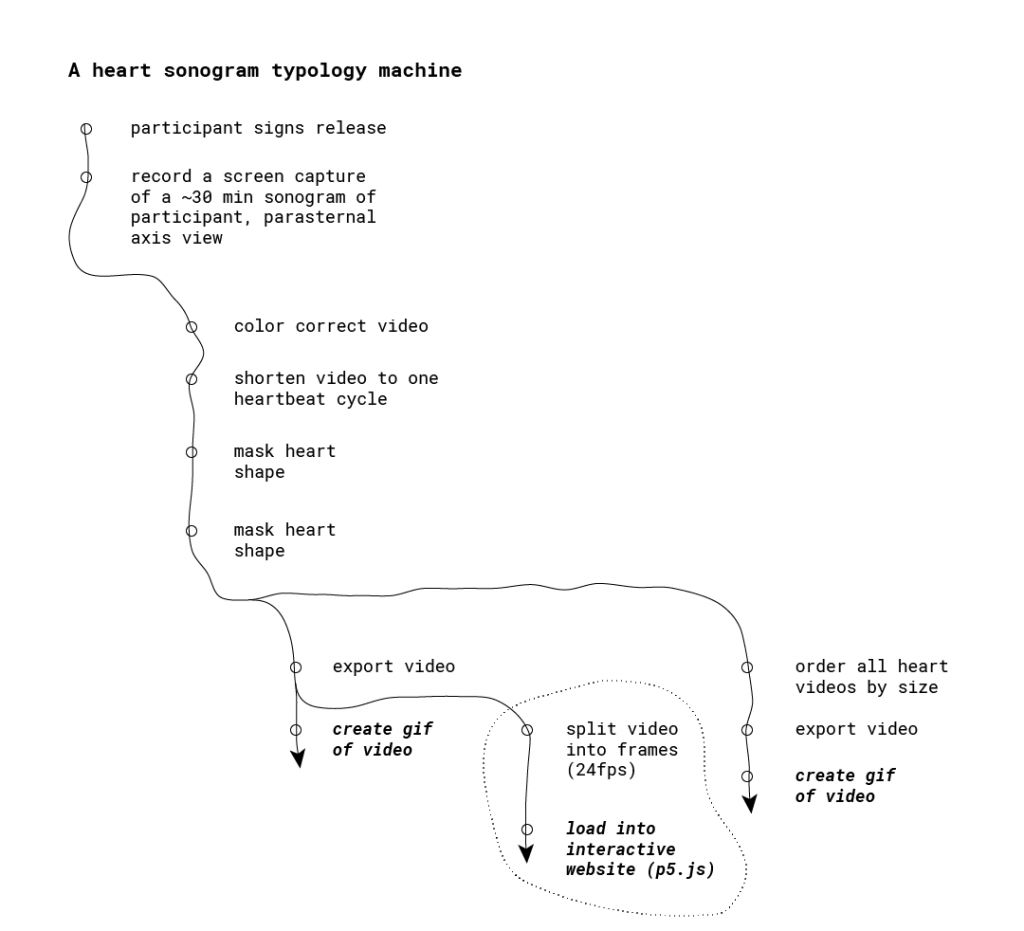

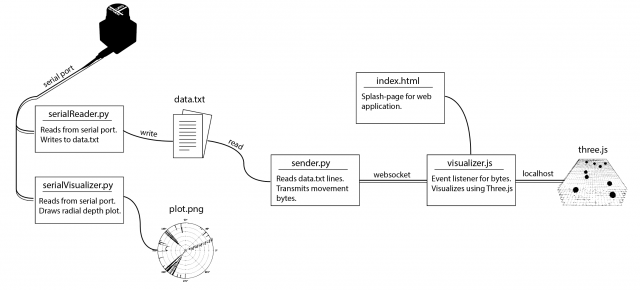

Workflow

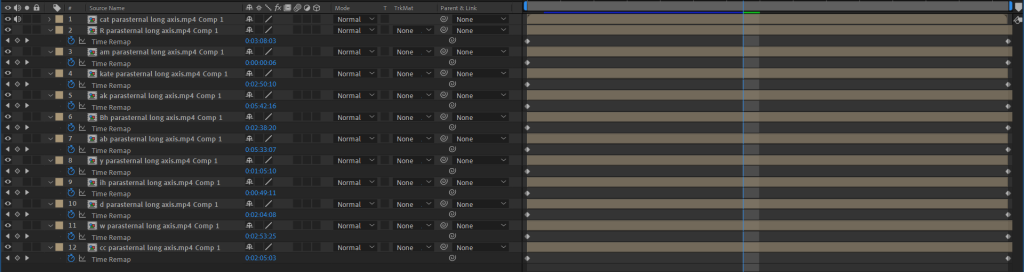

I created three deliverables for this project, using the following machine:

In developing this process, I encountered the following challenges:

Legibility. Learning how to operate a sonogram and make sense of the output was a wild ride. I spent several hours experimenting with the sonogram on myself. Weirdly, after this much time with the device, I became accustomed to interpreting the sonogram imagery. In turn, I had to consider how others might not catch on to the sonogram images; I might need to provide some handholds.

Consistency. Initially, I was interested in a “typology of typologies,” in which people chose their own view of their heart to capture. I was encouraged instead to consider the audience and the lack of legibility of the sonograms. I asked myself what was at the core of this idea: our relationship with the body. The magic lay not in the act of choosing an esoteric/illegible sonogram view, but in the new context of the sonogram. Further, I realized it’d be more compelling to make the sonograms themselves legible, to explore the motion of the heart across different bodies. That’s where the playfulness could reside.

Reframing the relationship with our body. Feedback from peers centered around imbuing the typology with playfulness, embracing the new context I was bringing to the sonogram. Instead of a context of diagnosis and mystery, I wondered how to frame the sonogram images in a more playful way. One approach was to shy away from labeling and to embrace interactivity. Hence, I created an interactive way to browse the typology.

Once I focused on a simple exploration of the motion of the heart, the process of capture and processing of the sonograms became straightforward, allowing me to explore playful ways of interacting with the footage.

Deliverables

Looping GIF of single hearts

First, I produced a looping video for each person’s sonogram. Some considerations at this stage included:

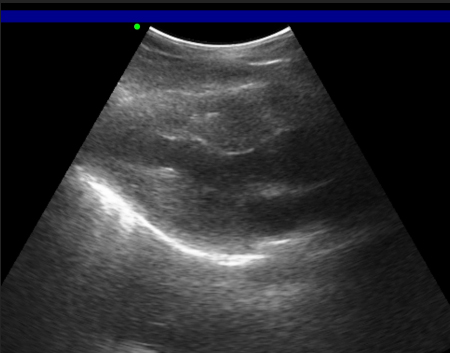

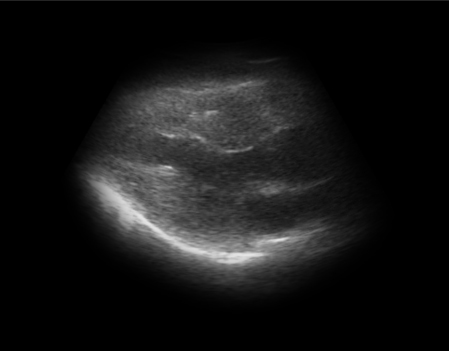

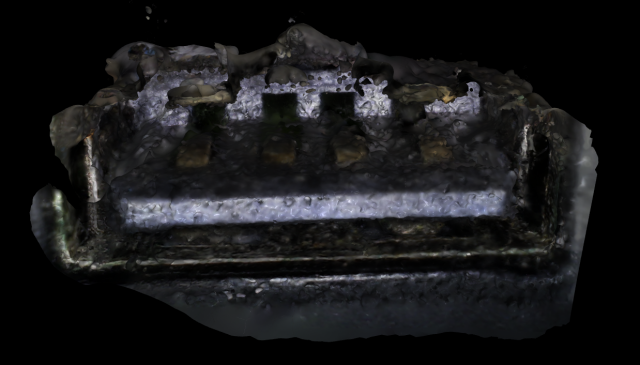

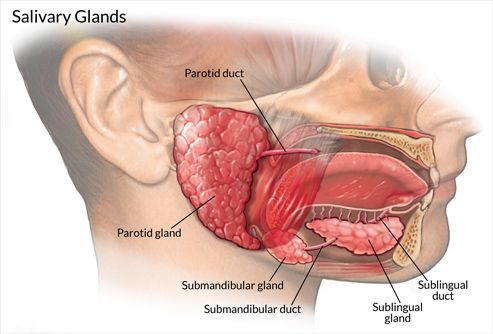

Using a consistent view. In capturing each sonogram, I positioned the sonogram to capture the parasternal long axis view. This view shows all four chambers of the heart, is easy to access with the sonogram, and reveals a silhouette of the heart.

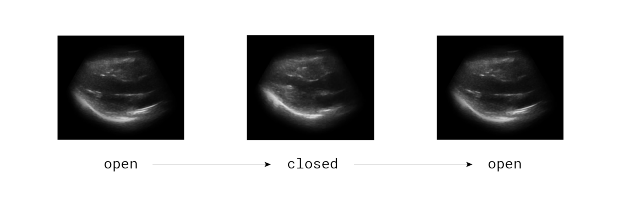

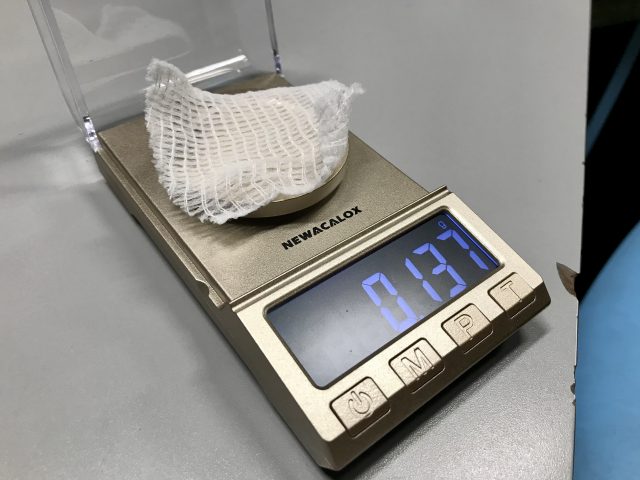

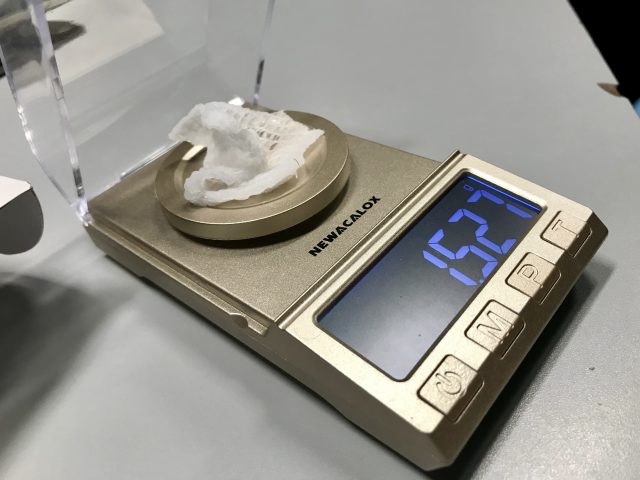

Cropping the video length to one beat cycle. One key to observing motion is to get a sense of it through repetition. In order to create perfectly looping GIFs, I cropped the video length to exactly one beat cycle. Each beat cycle consists of an open-closed-open heart, so I scrolled through the footage frames, searching for the points where the heart was maximally expanded.

Masking the sonogram to just the heart. Feedback made it clear that the sonogram shape was a little untoward. Meanwhile, for some, the idea of labeling the chambers of the heart made the sonograms feel sterile. For these reasons I masked the sonogram footage to the silhouette of the heart. The silhouette provided some needed legibility without the need to label the heart. Further, it was easier to compare hearts to one another by size.
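The cropping step above was done by hand, scrolling frame by frame. As a rough illustration of the same idea, here is a minimal sketch that picks out one beat cycle automatically. It assumes a hypothetical `areas` array holding one number per frame (for example, the pixel area of the heart silhouette), which is not something the project actually measured. Real footage would likely need smoothing before a simple peak search like this is reliable.

```javascript
// Find one beat cycle in a series of per-frame heart sizes.
// `areas` is a hypothetical input: one number per video frame,
// larger meaning a more expanded heart.
function findBeatCycle(areas) {
  const peaks = [];
  for (let i = 1; i < areas.length - 1; i++) {
    // A peak is larger than the frame before it and at least as large
    // as the frame after it, i.e. a moment of maximal expansion.
    if (areas[i] > areas[i - 1] && areas[i] >= areas[i + 1]) {
      peaks.push(i);
    }
  }
  // Two peaks are needed to bracket an open-closed-open cycle.
  if (peaks.length < 2) return null;
  // `end` is exclusive, so the loop does not show the open frame twice.
  return { start: peaks[0], end: peaks[1] };
}

// Usage: crop a made-up series to its first full cycle.
const exampleAreas = [5, 8, 10, 7, 4, 6, 9, 11, 8, 5];
const cycle = findBeatCycle(exampleAreas);
const croppedAreas = exampleAreas.slice(cycle.start, cycle.end);
```

Ending the crop one frame before the next peak is what makes the loop seamless: the last frame flows back into the first rather than repeating it.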

Here’s the final product of that stage:

Looping GIF of all hearts, ordered by size

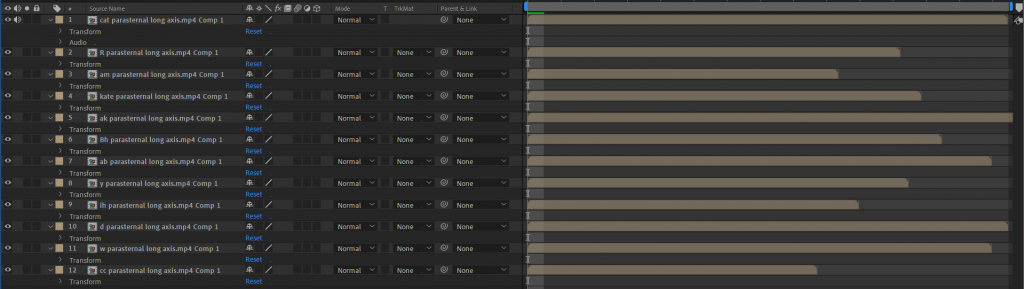

Next, it was time to compare hearts to one another. As I put together a looping video of all the sonograms, I considered the following:

Ordering by size. One cool pattern that emerged was the vast difference in heart size across people. Mine (top left) was coincidentally the smallest (and most Grinch-like).

Scaling all heart cycles to a consistent length. At the time of sonogram recording, each person’s heart rate was different. As I was seeking to explore differences in motion, not heart rate, I scaled all heart cycles to the same number of frames.

Differences in motion. With all other variables held consistent, neat differences in heart motion emerge. Compare the dolphin-like movement of the heart in the top row, second column to the jostling movement of the heart in the top row, fourth column.
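The scaling step described above was done in After Effects. For readers who want to reproduce it in code, here is a minimal sketch of the same idea: stretching or squeezing each person’s beat cycle to a shared frame count by nearest-frame lookup. The function name and the choice of nearest-frame sampling (rather than frame blending) are assumptions made for illustration.

```javascript
// Resample one beat cycle to a fixed number of frames.
// `frames` can be any array: image handles, frame numbers, and so on.
function resampleCycle(frames, targetLength) {
  if (frames.length === 0 || targetLength <= 0) return [];
  const out = [];
  for (let i = 0; i < targetLength; i++) {
    // Map output position i onto the source cycle. The index never
    // reaches frames.length, so the loop's end does not repeat its start.
    const sourceIndex = Math.floor((i * frames.length) / targetLength);
    out.push(frames[sourceIndex]);
  }
  return out;
}

// Usage: a fast heart (4 frames per beat) and a slow heart (6 frames
// per beat) can both be brought to a shared length.
const stretched = resampleCycle([0, 1, 2, 3], 8);
const squeezed = resampleCycle([0, 1, 2, 3, 4, 5], 3);
```

With every cycle at the same length, playing all the loops side by side keeps the beats in sync, so any remaining differences come from the motion itself rather than from heart rate.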

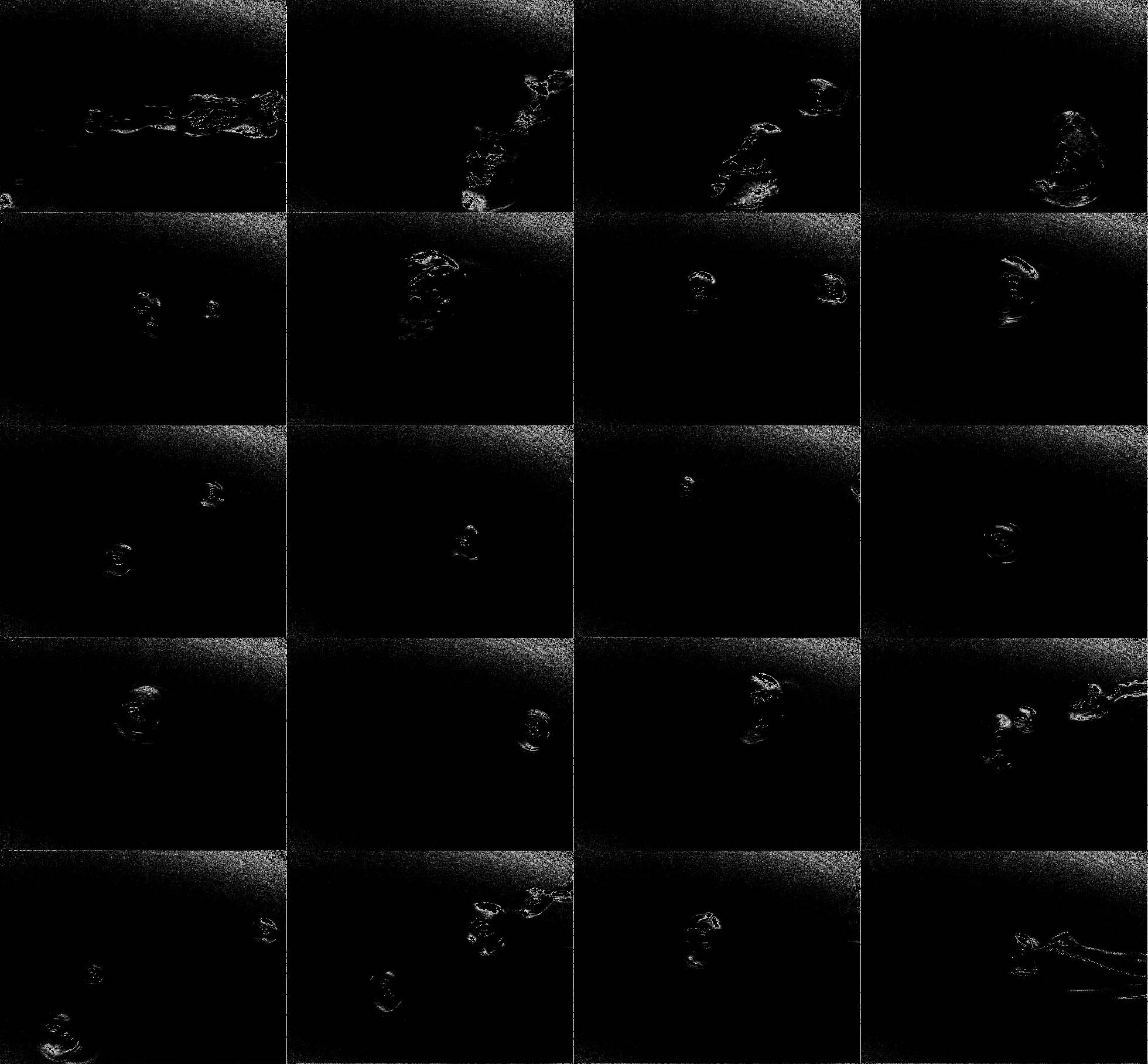

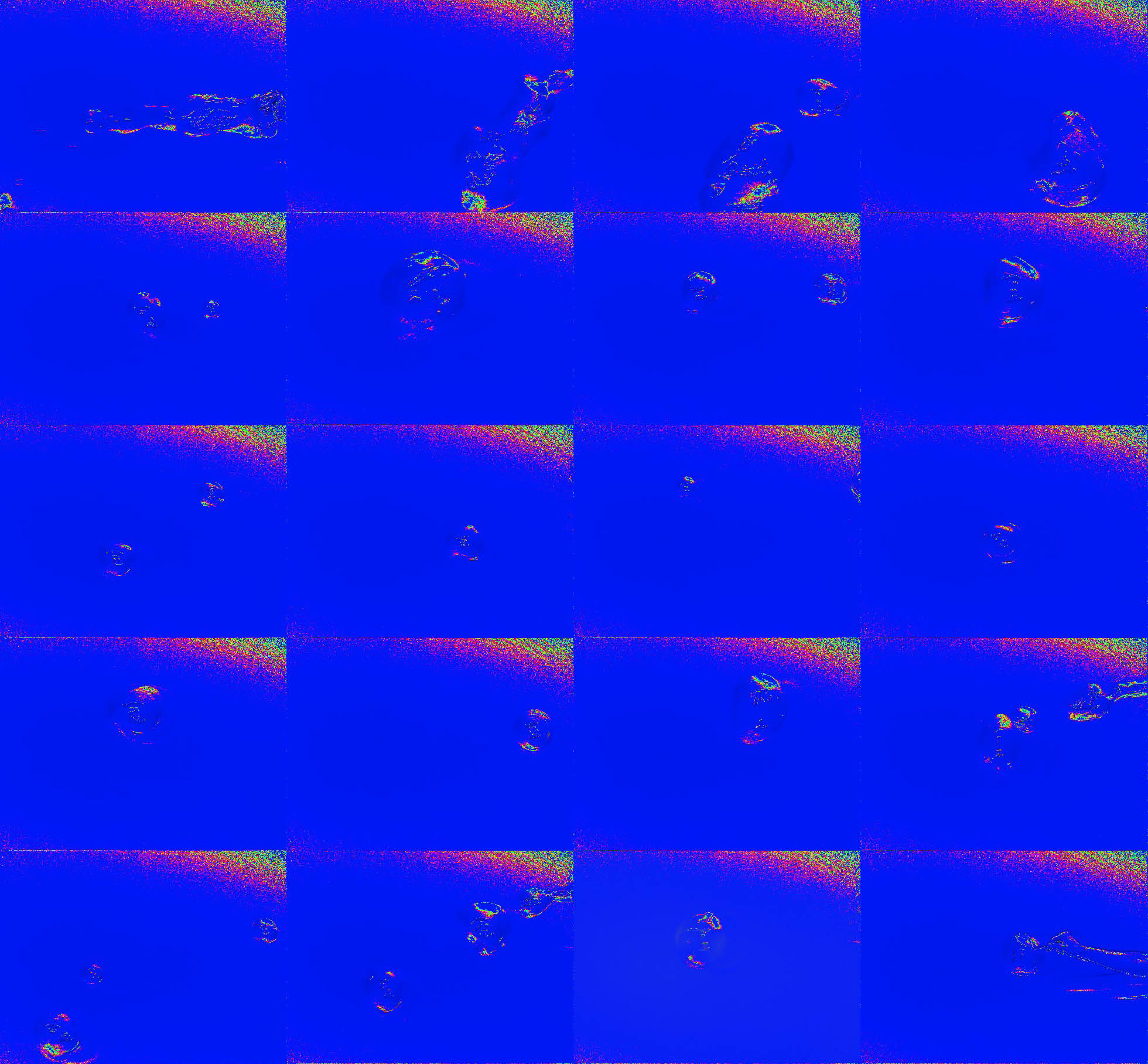

All heart sonograms, synchronized by beat cycle, ordered from left to right and top to bottom by size.

An interactive to explore heart motion

A looping video does the trick for picking out differences in motion. Still, I wondered whether more nuanced and (hopefully) embodied ways of exploring a heart’s pumping movement existed.

As I edited the footage in After Effects, I found myself scrolling back and forth through frames, appreciating the movement of the heart. The scroll through the movement was compelling, allowing me to speed up/slow down movement. An alternative gesture came to mind: a hand squishing a perfume pump.

The “squishing” hand gesture was inspired by these scenes from The Matrix Reloaded, in which Neo resurrects Trinity by manually pumping her heart (I think):

Perhaps because portraiture via sonogram is heavily discouraged by the FDA, sonogram-based inspirations were sparse.

So, after briefly training a neural net (with Ml5; creating a regression to detect hand movements using featureExtractor) with an open/closed hand gesture, you can physically pump the hearts with your hand on this webpage.

I also tried classification with featureExtractor and KNNClassifier, although it seemed to choke the animation of the hearts. The movement can also be activated by scrolling, which is neat when you change speed.
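To make the two interaction modes concrete, here is a minimal sketch of how a hand-gesture value or a scroll position could be turned into a frame of the heart loop. This is not the code behind the webpage. It assumes the regression outputs roughly 1 for an open hand and 0 for a closed one, that each loop starts fully expanded and is most contracted at its midpoint, and that the names `frameForOpenness`, `frameForScroll`, and `pixelsPerFrame` are hypothetical.

```javascript
// Clamp a value into the range [0, 1].
function clamp01(x) {
  return Math.min(1, Math.max(0, x));
}

// Map hand openness (assumed: 1 = open hand, 0 = closed fist) to a frame.
// Frame 0 is the expanded heart; the midpoint frame is assumed to be the
// most contracted, so closing the hand "squeezes" the heart.
function frameForOpenness(openness, frameCount) {
  const squeeze = 1 - clamp01(openness);
  const midpoint = Math.floor(frameCount / 2);
  return Math.round(squeeze * midpoint);
}

// Map a scroll offset in pixels to a looping frame index, so scrolling
// faster plays the beat faster and scrolling back reverses it.
function frameForScroll(scrollY, pixelsPerFrame, frameCount) {
  const step = Math.floor(scrollY / pixelsPerFrame);
  // The double modulo keeps the index valid for negative offsets too.
  return ((step % frameCount) + frameCount) % frameCount;
}
```

Keeping the mapping as plain functions, separate from the model, means the same heart loop can be driven by either input: the prediction callback or a scroll listener only has to supply a number.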

A note about tools

Hearts repeat the same basic movement in our bodies ad infinitum (or at least until we die). Thus, a looping presentation seemed natural. In terms of producing the video loops, After Effects and Photoshop were natural choices for manipulating the sonogram screen capture and creating GIFs.

Still, I’m exploring ways to incorporate gesture (and other more embodied modes) in visualizing this data. An obvious result of this thought is to allow a more direct manipulation of the capture with the body (in this case, the hand “pumping” the heart). Other tools (like Leap Motion) exist for this purpose, but the accessibility of Ml5 and the unique use of its featureExtractor made it a feasible path to explore.

Final thoughts

In general, I’m ecstatic that I was able to get discernible, consistent views of the heart with this unique and esoteric device. Opportunities remain to:

Explore other devices for interaction with the heart. Though my project focused on making the viewing of the data accessible to anyone with a computer, device-based opportunities for exploring the sonogram data are plentiful. For example, a simple pressure sensor embedded into a device might provide an improved connection to the beating hearts.

Gather new views of the heart with the sonogram. What about the motion of the heart can be observed through those new angles?

Explore the change in people’s relationship to their bodies. Circumstances prevented me from gathering people’s reactions to their hearts in this new context. Whereas this project focused on the motion of the heart itself, I would like to incorporate the musings of participants as another layer in this project.

Explore other medical tools. Partnerships with institutions that have MRI or other advanced medical tools for viewing the body would be interesting. MRI in particular is quite good at imaging heart motion.

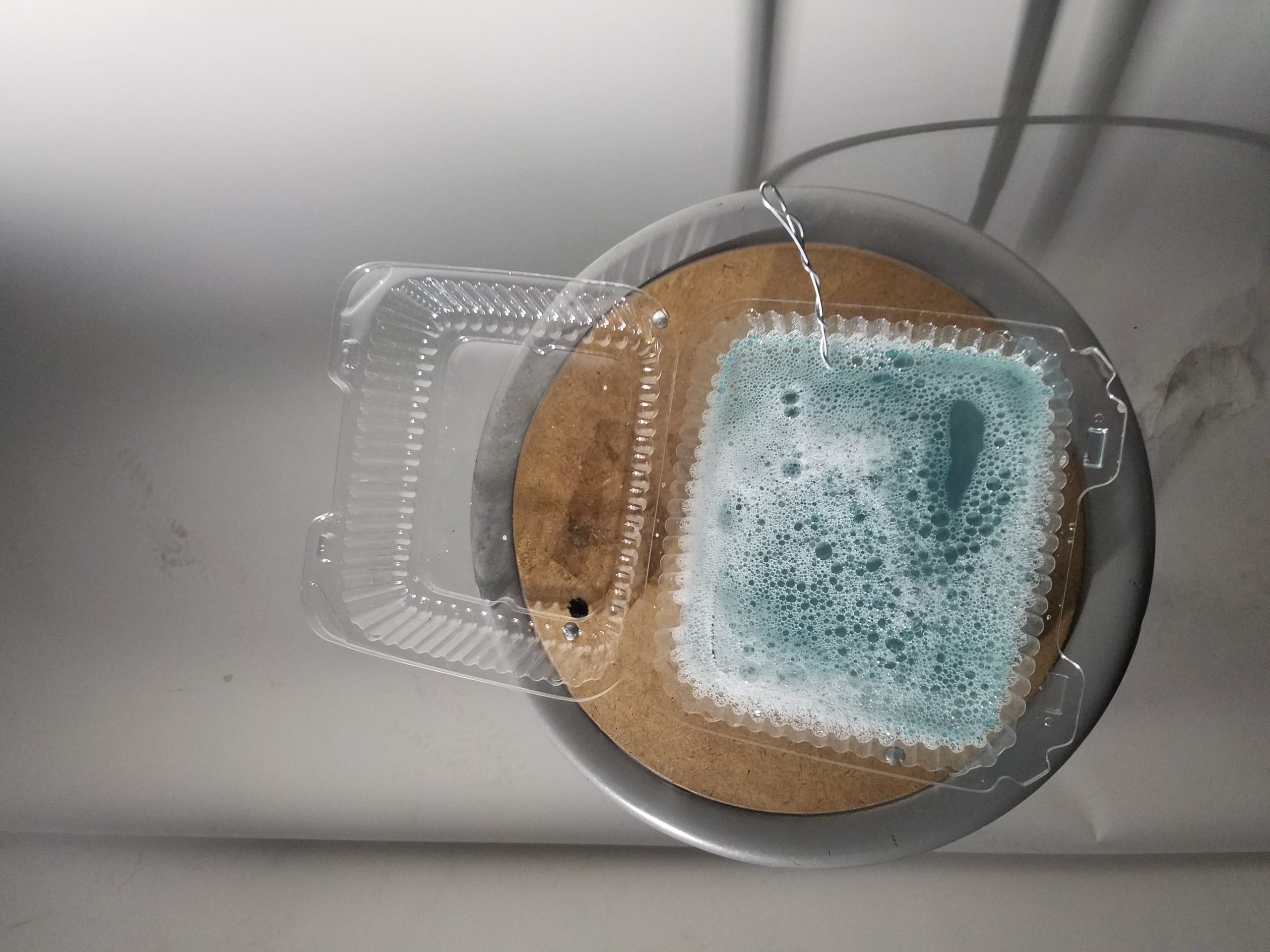

The photo setup I ended up using with the polarization camera, light with circular polarization filter, and my bubble juice.

My bubble juice (made from dish soap, water, and some bubble stuff I bought online that’s pretty much just glycerin and baking powder)

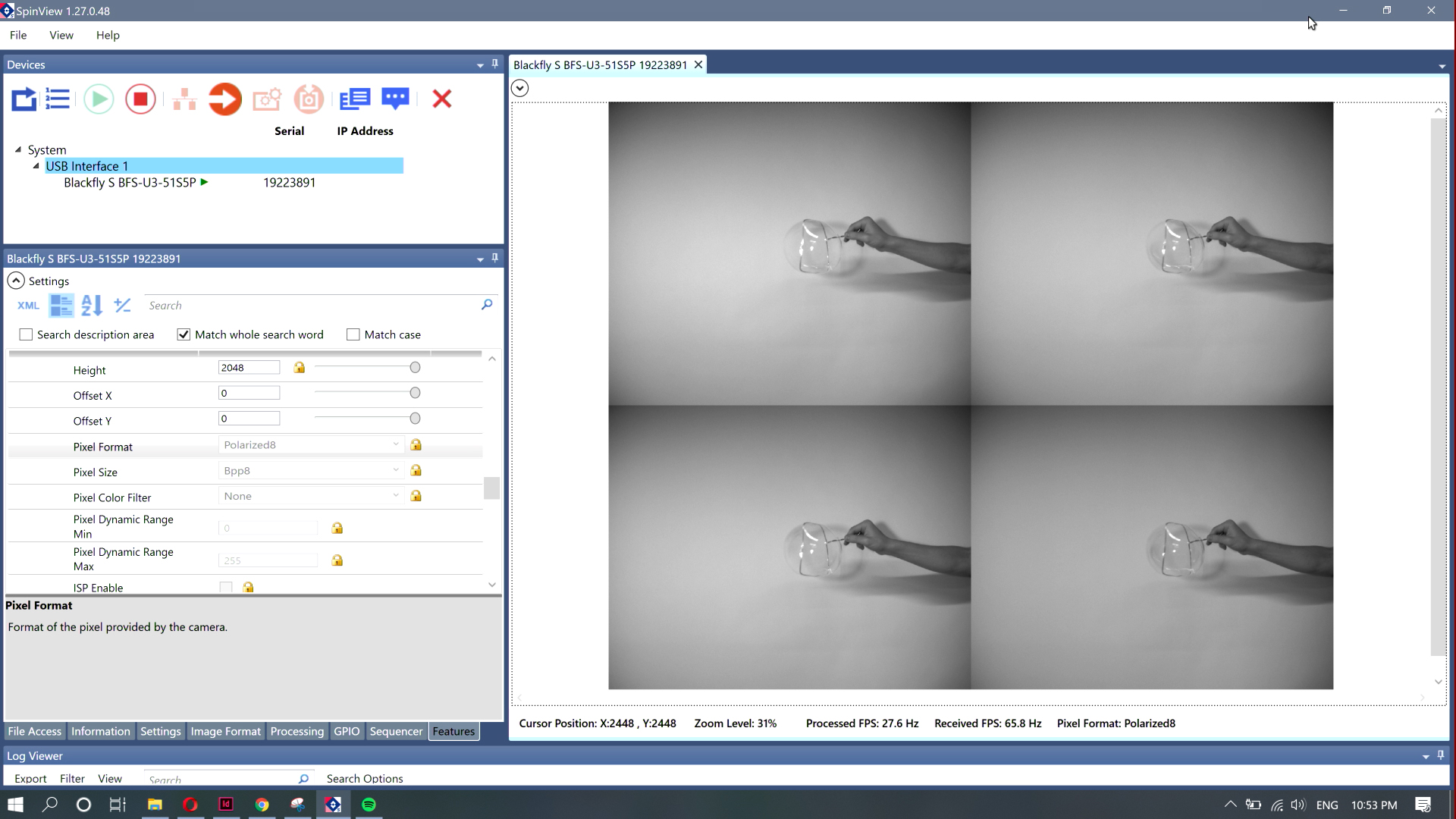

An image of my arm I captured from the screen recording.