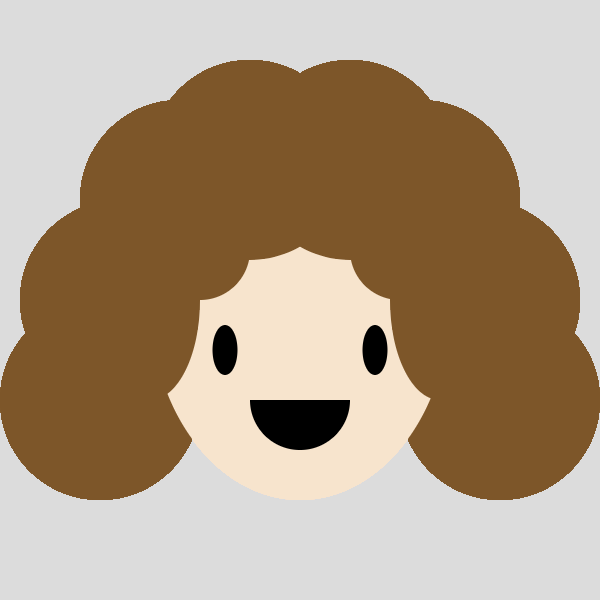

function setup() {

createCanvas(400, 600);

background(0,186,247);

}

function draw() {

fill(70,44,26);

arc(200, 150, 200, 200, PI, TWO_PI);

fill(70,44,26);

arc(200, 125, 200, 200, PI, TWO_PI);

fill(233,168,139);

ellipse(200,150,175,175);

stroke(70,44,26);

fill(70,44,26);

rect(125,60,150,30);

fill(70,44,26);

rect(225,120,22.5,5);

fill(70,44,26);

rect(152,120,22.5,5);

fill(255,255,255);

ellipse(165,140,17.5,17.5);

fill(255,255,255);

ellipse(235,140,17.5,17.5);

fill(35,163,102);

ellipse(235,140,10,10);

fill(35,163,102);

ellipse(165,140,10,10);

fill(0);

ellipse(165,140,5,5);

fill(0);

ellipse(235,140,5,5);

fill(239,139,129);

arc(200, 200, 40, 40, TWO_PI, PI);

fill(0);

arc(200, 202.5, 30, 30, TWO_PI, PI);

fill(241,215,212);

arc(200, 202.5, 10, 10, TWO_PI, PI);

fill(241,215,212);

arc(210, 202.5, 10, 10, TWO_PI, PI);

fill(241,215,212);

arc(190, 202.5, 10, 10, TWO_PI, PI);

fill(255,175,145);

arc(200, 175, 15, 30, PI, TWO_PI);

fill(0);

arc(197.5, 175, 4, 4, PI, TWO_PI);

fill(0);

arc(202.5, 175, 4, 4, PI, TWO_PI);

fill(46,56,66);

arc(200, 637.5, 200, 800, PI, TWO_PI);

}
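The eye code above repeats the same three circles on each side of the face, mirrored around x = 200. One possible way to cut that repetition is sketched below. The helper names `mirrorX`, `drawEye`, and `drawEyes` are hypothetical and not part of the original sketch, and the `p` parameter is assumed to be any object that exposes p5's `fill()` and `ellipse()`, such as the instance passed to the callback in p5 instance mode.

```javascript
// Hypothetical helpers, not part of the original sketch.
// `p` is assumed to expose p5's fill() and ellipse().

// Reflect an x coordinate across the vertical centre line of the face.
function mirrorX(x, centerX = 200) {
  return 2 * centerX - x;
}

// Draw one eye as three concentric circles: white, green iris, black pupil.
function drawEye(p, x, y) {
  p.fill(255, 255, 255);
  p.ellipse(x, y, 17.5, 17.5);
  p.fill(35, 163, 102);
  p.ellipse(x, y, 10, 10);
  p.fill(0);
  p.ellipse(x, y, 5, 5);
}

// Draw both eyes, deriving the right eye from the left eye's position.
function drawEyes(p, leftX = 165, y = 140) {
  drawEye(p, leftX, y);
  drawEye(p, mirrorX(leftX), y);
}
```

This draws each eye completely before moving to the next, rather than layer by layer as in the sketch above. Since the two eyes do not overlap, the picture should come out the same.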

I found this self-portrait very challenging, particularly creating hair that looked even remotely human, but I had a lot of fun trying to draw myself.
