prompt: “nike muji spacecraft collaboration rendered with houdini”

noise seed: 05

iteration: 100

result:

Colab was easy enough to get running, though I didn't get the diffusion to work (I think because it requires a starting image rather than starting from noise?). It's really interesting to watch the images emerge over the iterations. My favorite outcomes came around iteration 100 or 150, when the image had just started to materialize (perhaps because my prompt lent itself well to textures, it didn't take long to get there). Beyond that the image kept being refined, but after a point the difference between successive iterations was hardly discernible.

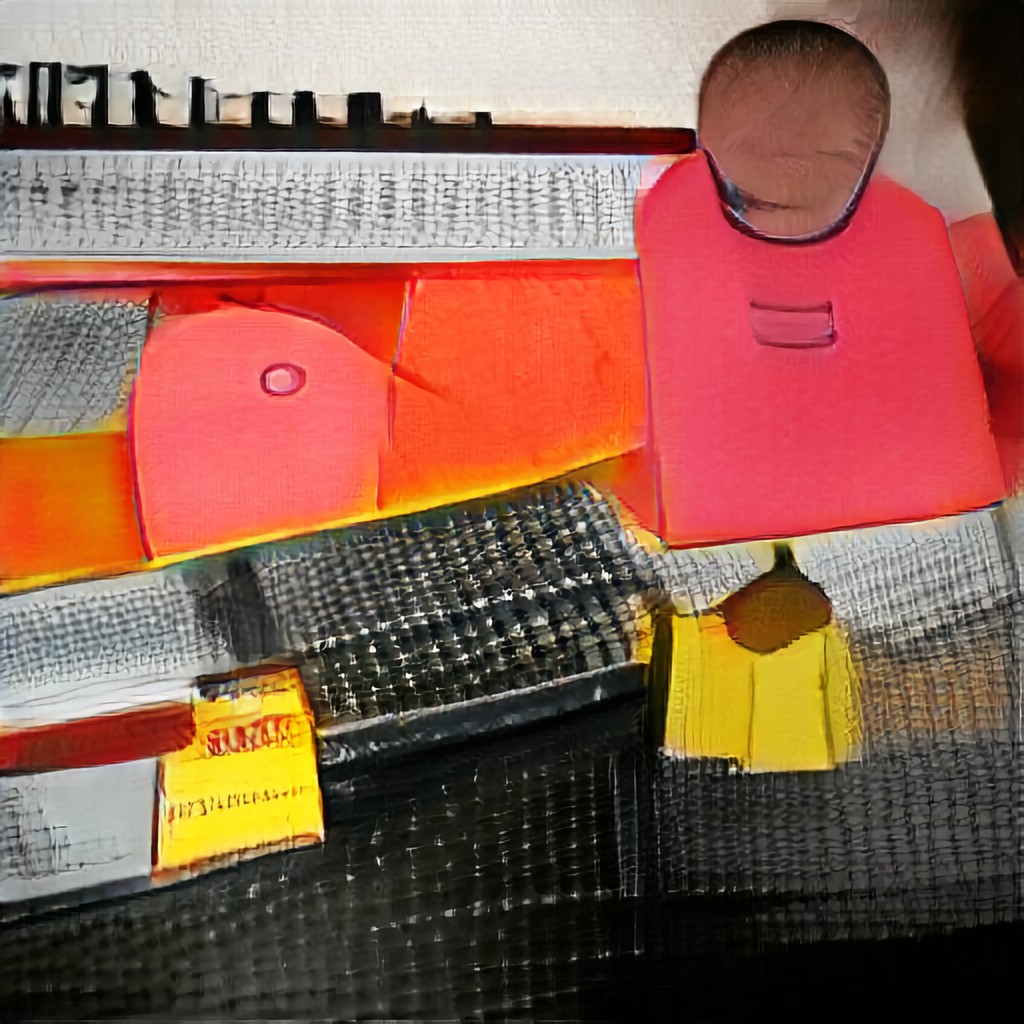

prompt: “nike muji spacecraft collaboration rendered with houdini”

noise seed: 2046

iteration: 50

init image: dragonfly-sliderule_2.jpeg (from Artbreeder)

result:

prompt: nike:30 | muji:30 | spacecraft:10 | rendered:15 | houdini:5 | color:10
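The weighted prompt above splits into separate `text:weight` pieces. Here's a minimal sketch of how I understand that kind of string gets read, assuming the notebook splits on `|` and treats the number after the last `:` in each piece as that piece's weight (defaulting to 1 when there's no number). As far as I can tell, each piece then becomes its own CLIP text target whose loss is scaled by its weight. `parse_weighted_prompt` and `relative_shares` are hypothetical helpers for illustration, not functions from the notebook, and this simplified version ignores any extra per-prompt options a given notebook might support.

```python
from typing import Dict, List, Tuple


def parse_weighted_prompt(prompt: str) -> List[Tuple[str, float]]:
    """Split 'text:weight | text:weight' into (text, weight) pairs.

    Assumption: pieces are separated by '|', and the number after the
    last ':' in each piece is its weight; no number means weight 1.0.
    """
    pairs = []
    for piece in prompt.split("|"):
        piece = piece.strip()
        if not piece:
            continue  # skip empty pieces, e.g. from a trailing '|'
        text, sep, weight = piece.rpartition(":")
        if sep:
            try:
                pairs.append((text.strip(), float(weight)))
                continue
            except ValueError:
                pass  # non-numeric suffix: treat the whole piece as text
        pairs.append((piece, 1.0))
    return pairs


def relative_shares(pairs: List[Tuple[str, float]]) -> Dict[str, float]:
    """Express each weight as a fraction of the total weight."""
    total = sum(w for _, w in pairs)
    return {text: w / total for text, w in pairs}


if __name__ == "__main__":
    prompt = "nike:30 | muji:30 | spacecraft:10 | rendered:15 | houdini:5 | color:10"
    pairs = parse_weighted_prompt(prompt)
    print(pairs)
    print(relative_shares(pairs))
```

Since my weights add up to 100, each one reads directly as a percentage of the total emphasis: "nike" and "muji" each get 30%, "houdini" only 5%.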

noise seed: 2046

iteration: 50

result: