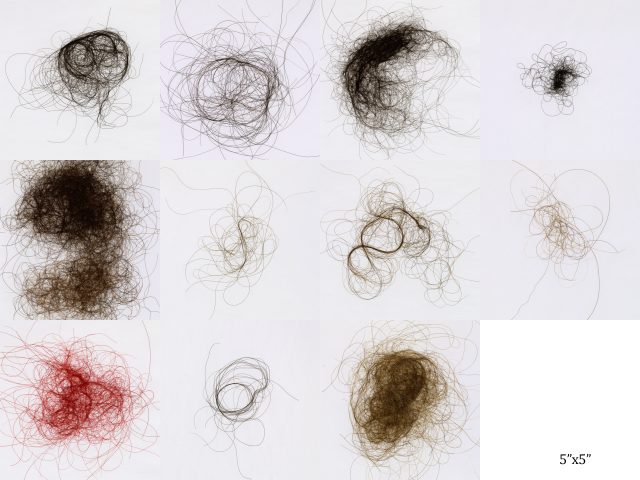

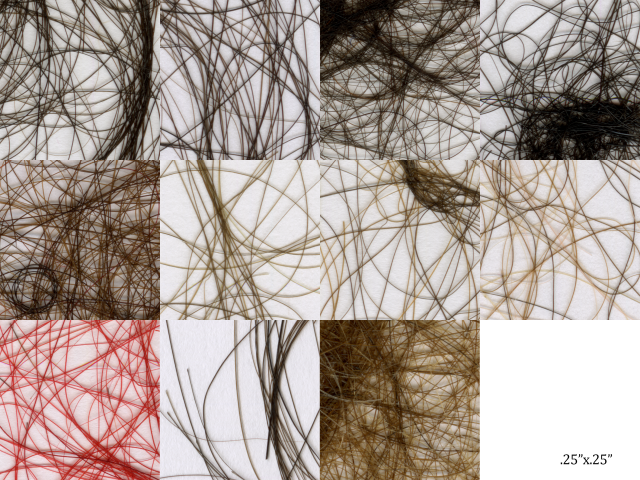

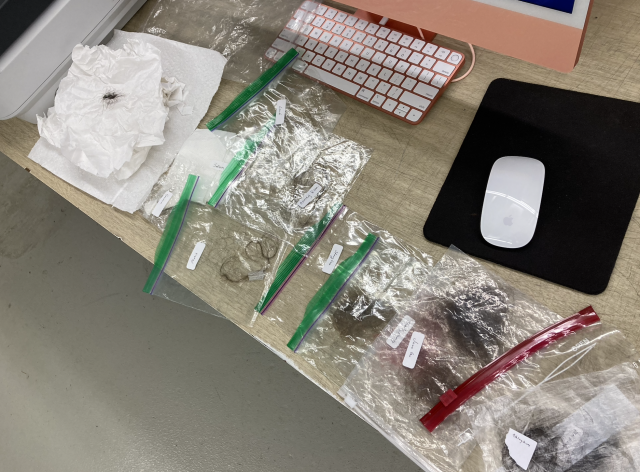

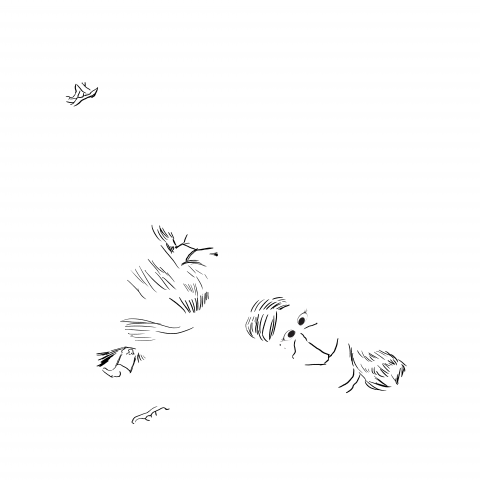

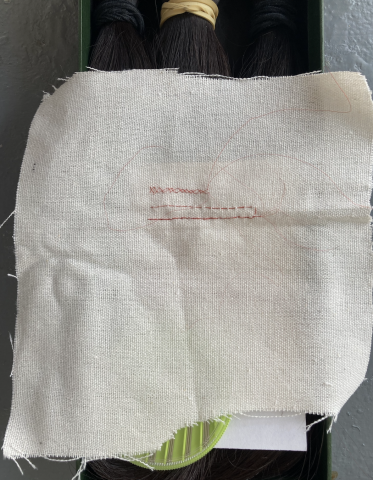

For my final, I revisited my goal of capturing hair. I aimed to use photogrammetry in video form (also called “volumetric” or “4D” video) to try to capture moving hair. The many unknowns going into the project were part of what attracted me. I didn’t know how I was going to obtain many cameras, how I could set up a rig, how I could do the capturing process, or how I could process the many images taken by the many cameras into 3D models that could become frames. I wasn’t even sure if I’d get a good, bad, or unintelligible result. Still, I wanted the chance to do a project that was actually experimental and about hair.

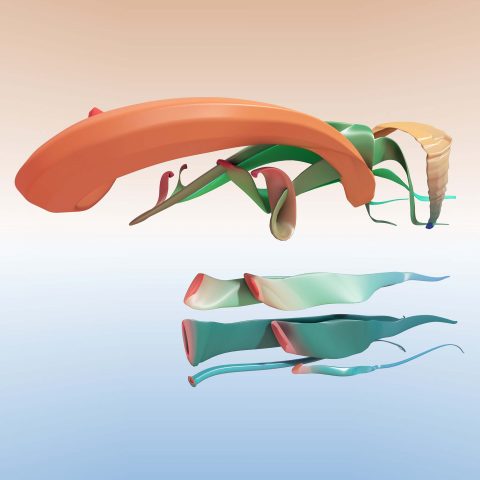

In preparation for proposing this project, I looked into the concept of hair movement. What I found were mostly technical art papers on hair simulation (e.g., this paper talks about obtaining hair motion data through clip-in hair extensions). Artistically, though, I found the pursuit of perfectly matching “real” hair through simulations a bit boring. I want the whimsy of photography and the “accuracy” of 3D models at the same time.

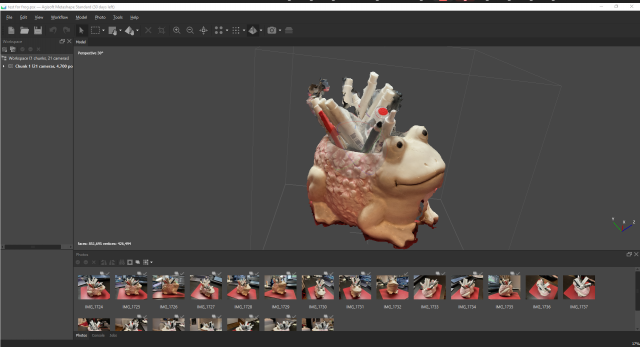

My process started with an exploration of the photogrammetry software Agisoft Metashape, whose standard version comes with a very useful 30-day free trial. I experimented with taking pictures and videos to get the hang of the software. My goal here was to find the smallest number of photos (and therefore cameras) needed to create a cohesive model. It turns out that number is somewhere just below 20 for a little less than 360-degree coverage.

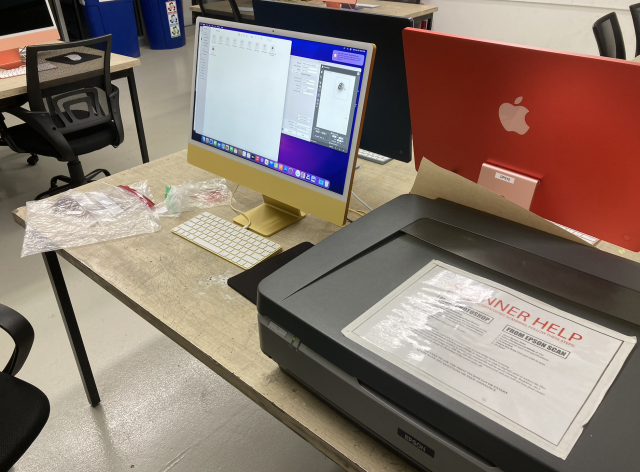

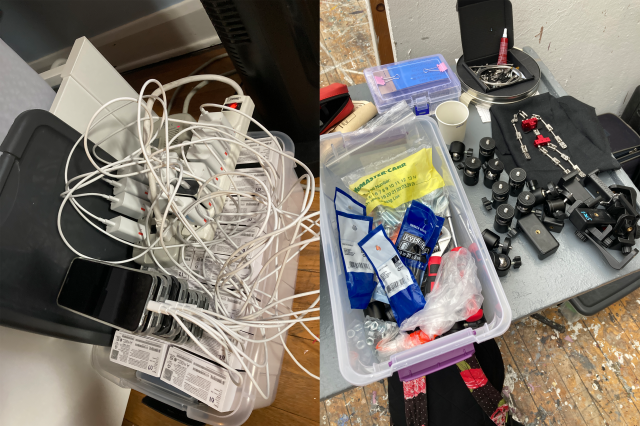

I was able to borrow 18 Google Pixel phones (all of which had 1/8-speed, 240 fps slow motion), 18 camera mounts, a very large LED light, several phone holders, a few clamps, and a bit of hardware from the Studio. I was then able to construct a hack-y photogrammetry setup.
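To get a feel for how much data a rig like this produces, here is a rough back-of-the-envelope sketch. It assumes the 1/8-speed mode means 240 fps capture played back at 30 fps, and the 2-second clip length is a made-up example, not a measurement from my shoot.

```python
# Rough data-volume estimate for the phone rig.
# Assumptions: 240 fps capture, 1/8-speed playback, 18 phones (from the post);
# the clip length passed to image_count() is purely illustrative.
CAPTURE_FPS = 240
SLOWDOWN = 8
NUM_CAMERAS = 18


def playback_fps(capture_fps=CAPTURE_FPS, slowdown=SLOWDOWN):
    """Frame rate of the slowed-down video the phone plays back."""
    return capture_fps / slowdown


def image_count(seconds, capture_fps=CAPTURE_FPS, cameras=NUM_CAMERAS):
    """Return (frames per camera, total images) for a clip of real-time motion."""
    per_camera = round(seconds * capture_fps)
    return per_camera, per_camera * cameras


if __name__ == "__main__":
    print(playback_fps())       # 30.0
    print(image_count(2.0))     # (480, 8640)
```

Even a couple of seconds of real motion turns into thousands of images, since every captured frame on every phone becomes one photo for the photogrammetry software.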

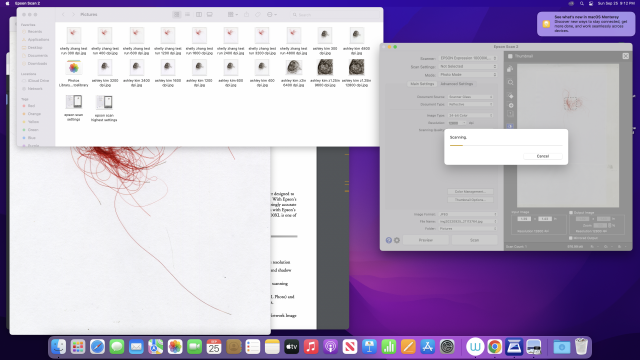

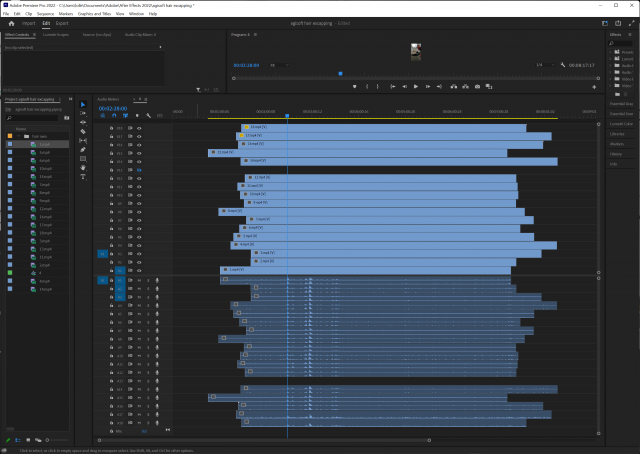

Since the photogrammetry rig seemed pretty sound, the next step was to try using video. After filming a sample of hand movements, manually aligning the footage, and exporting each video as a folder of JPEGs, I followed the “4D processing” Agisoft write-up. This, no joke, took over 15 hours (and I didn’t even get to making the textures).
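The export step can be scripted. Below is a minimal sketch that assumes ffmpeg is the export tool (it is not necessarily what I used) and that the manual alignment has already produced a start offset in seconds for each camera. The file names, the offsets, and the `frames/` folder layout are all hypothetical.

```python
from pathlib import Path


def frame_export_command(video, out_dir, start_offset_s=0.0, fps=None):
    """Build an ffmpeg command that dumps one video into numbered JPEGs.

    start_offset_s skips the part of the clip before the shared sync point.
    fps, if given, resamples the output; by default every frame is kept.
    """
    cmd = ["ffmpeg", "-ss", f"{start_offset_s:.3f}", "-i", str(video)]
    if fps is not None:
        cmd += ["-vf", f"fps={fps}"]
    # -qscale:v 2 asks for high-quality JPEG output.
    cmd += ["-qscale:v", "2", str(Path(out_dir) / "frame_%05d.jpg")]
    return cmd


def build_all(offsets, out_root):
    """One command per camera; each camera gets a folder named after its video."""
    commands = []
    for video, offset in sorted(offsets.items()):
        out_dir = Path(out_root) / Path(video).stem
        commands.append(frame_export_command(video, out_dir, offset))
    return commands


if __name__ == "__main__":
    # Hypothetical offsets found by lining the clips up by eye.
    offsets = {"cam01.mp4": 0.0, "cam02.mp4": 0.125}
    for cmd in build_all(offsets, "frames"):
        # The output folders must exist before these commands are actually run.
        print(" ".join(cmd))
```

The sketch only prints the commands, so the offsets can be checked before committing to a long export.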

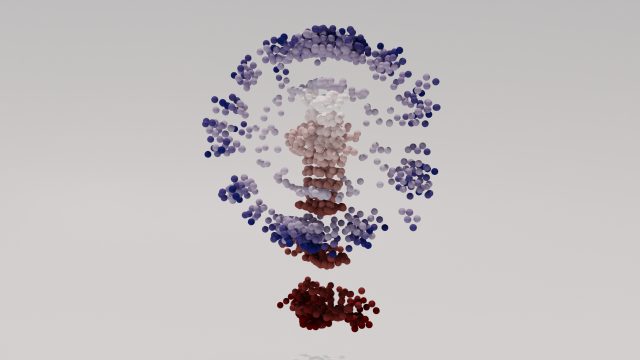

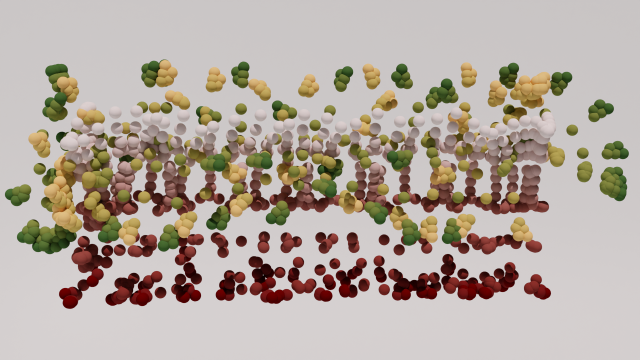

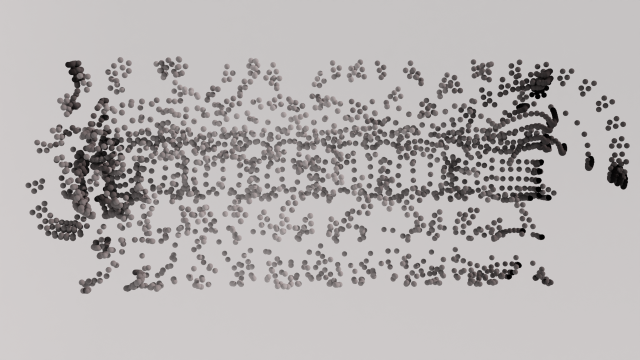

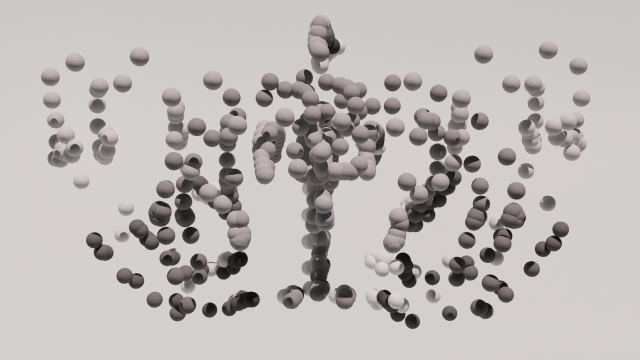

Aligning the photos took a few minutes (I was very lucky with this); generating a sparse point cloud took a bit over an hour; generating the dense point cloud took four hours; and generating the mesh took over 10 hours. I didn’t dare try to generate the texture at that point because I was running out of time. I discovered here that I’d made a few mistakes:

- I forgot that the setup I made is geared towards an upright, centered object, not hands, so this test was not the best one to start with

- Auto focus :c

- Auto exposure adjustment :c

- Overlap between neighboring photos should really be about 70% or more

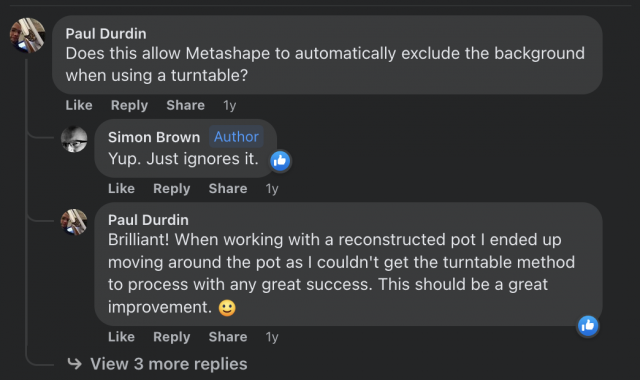

- and “exclude stationary tie points” is an option that should only be checked if using a turntable

So, what next? Cry? Yes :C But I also tried to wrangle the hair footage I have into at least a sliver of volumetric capture within the time I had.

I think that in a more complete, working, long form, I’d like for my project to live in Virtual Reality. Viewing 3D models on a screen is nice but I think there is a fun quality of experience in navigating around virtual 3D objects. Also, I guess my project is all about digitization: taking information from the physical world and not really returning.