Slurp detection gone wrong

I (tried) making a slurp detector.

What is this?

This is (intended) to be a command line slurp detection tool that would automatically embed captions into the video whenever a slurp sound appears. It would also produce an srt file, which would give you the time stamps of every slurp that occurred in the video.
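For reference, here is a minimal sketch of what the srt half of that could look like. It assumes the slurps have already been detected somehow and arrive as (start, end) pairs in seconds; the function names and the `[slurp]` caption text are made up for illustration and are not part of any existing tool.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as the HH:MM:SS,mmm timestamp that srt files use."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def slurps_to_srt(slurps, caption="[slurp]"):
    """Build srt text from (start_seconds, end_seconds) pairs.

    The pairs are assumed to come from a slurp detector that does not
    exist yet; here they are just plain numbers.
    """
    blocks = []
    for index, (start, end) in enumerate(sorted(slurps), start=1):
        # Each srt cue is: a running index, a time range, then the caption.
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{caption}\n"
        )
    # Cues are separated by a blank line.
    return "\n".join(blocks)


if __name__ == "__main__":
    print(slurps_to_srt([(1.0, 1.5), (12.25, 13.0)]))
```

Writing the returned string to a `.srt` file next to the video would be enough for most players to show the captions.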

Why is this interesting?

I particularly thought the idea of capturing just slurps was quite humorous and in line with my previous project with PersonInTime. Additionally, preexisting audio datasets, the most well known being AudioSet, do have labels for classifying human sounds. However, their corpus of human sounds only contains digestive sounds pertaining to mastication, biting, gargling, stomach-grumbling, burping, hiccuping and farting. None of these are slurping, which I argue is the most important human digestive sound in the world. I feel like this is wasted potential, as many (many) people would benefit from being able to detect their slurps.

Where will/does/would this live?

This would live as a GitHub repository/tool available for all to detect their slurps.

What does this build off of?

This work was primarily inspired by Sam Lavigne’s videogrep tool, which generates an srt file using vosk and makes supercuts of user-inputted phrases on videos. Audio classification tools have also been around, with some famous ones being TensorFlow’s YAMNet and OpenAI’s recent release, Whisper.

This work is a continuation of thoughts I had while completing my experiments for PersonInTime. In particular, I was thinking a lot about slurps when I fed my housemates all those noodles, which organically led to this idea.

Self-Evaluation

Where did you succeed, what could be better, what opportunities remain?

I failed in almost every way possible, since the slurp detector has yet to be made. Perhaps the way I’ve succeeded is by gathering research and knowledge about the current landscape of both captioning services on GitHub and the actual exportable models used on Teachable Machine.

The thing itself

My slurp dataset, which I will use to make my slurp detector

The Process

I started this process by first just trying to replicate Sam Lavigne’s process with vosk, the same captioning tool used in videogrep. However, I soon realized that vosk was only capturing the English words spoken, and none of the other miscellaneous sounds emitted by me and my friends.

So then I realized that in order to generate captions, I had to generate my own srt file. This should be easy enough, I foolishly thought. My initial plan was to use Google’s Teachable Machine to smartly create a quick but robust audio classification model based on my preexisting collection of slurps.
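To make the "generate my own srt file" step a bit more concrete, here is a rough sketch of the glue between a classifier and the caption timings. It assumes a hypothetical model that outputs one slurp probability per fixed-length audio window; the function name, the threshold and the gap tolerance are all illustrative guesses, not something I actually built.

```python
def scores_to_intervals(scores, hop_seconds, threshold=0.5, max_gap_seconds=0.25):
    """Turn per-window slurp probabilities into merged (start, end) intervals.

    scores[i] is the (hypothetical) classifier's slurp probability for the
    window covering [i * hop_seconds, (i + 1) * hop_seconds).
    """
    intervals = []
    for i, score in enumerate(scores):
        if score < threshold:
            continue  # not a slurp, skip this window
        start, end = i * hop_seconds, (i + 1) * hop_seconds
        # Extend the previous interval if this window starts close enough to it,
        # so one long slurp does not become a dozen tiny captions.
        if intervals and start - intervals[-1][1] <= max_gap_seconds:
            intervals[-1] = (intervals[-1][0], end)
        else:
            intervals.append((start, end))
    return intervals


if __name__ == "__main__":
    fake_scores = [0.1, 0.9, 0.8, 0.2, 0.1, 0.7]
    print(scores_to_intervals(fake_scores, hop_seconds=0.5))
```

Each resulting (start, end) pair would then become one cue in the srt file.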

My first setback was stubbornly refusing to record slurps with my microphone. At first I was extremely averse to that idea because I had already collected a large slurp-pository from my other videos from PersonInTime, so why should I have to do this extra work of recording more slurps?

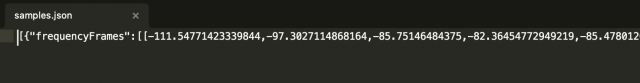

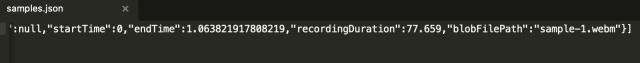

Well, naive me was unaware that Teachable Machine prevents you from uploading audio datasets that aren’t encoded using their method, which is basically a webm of the recording plus a json file stored in a compressed folder. Then, I thought to myself, it shouldn’t be too hard to trick Teachable Machine into thinking my slurp-set was actually recorded on the website, just by converting the file into a webm and providing the appropriate tags in the json file.

This was my second mistake, since what the heck were frequencyFrames? The other labels were pretty self-explanatory, but the only documentation I could find about frequencyFrames was this one TensorFlow general discussion post where some guy was also trying to upload his own audio data to Teachable Machine. The code posted in that discussion didn’t work, and I may have tried to stalk the questioner’s GitHub to see if they posted the complete code (they did not). The person who responded mentioned the same data for training with the speech_commands dataset, which was not what I wanted since it did not have slurps in it.

So after looking at this and trying to figure out what it meant, I decided my brain was not big enough at the time and gave up.

I decided to record on Teachable Machine in the worst way possible: recording with my laptop microphone while blasting my slurps-llection, in hopes that the slurps would be recorded. I did this with the aim of getting something running. I still had the naiveté that I would have enough time to go back and train a better model, using some tool other than Teachable Machine. (I was wrong.)

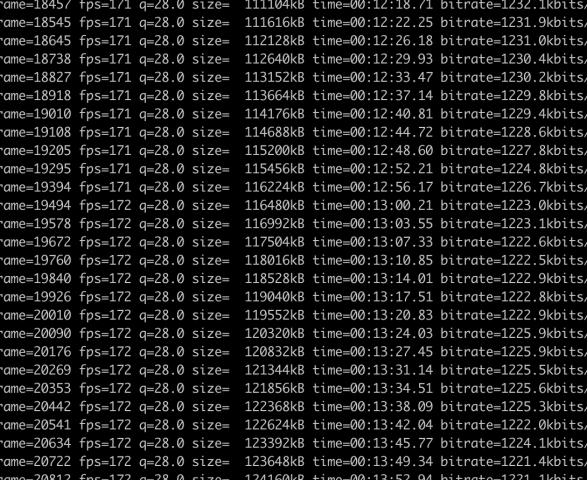

So I finished training the model on Teachable Machine, and it worked surprisingly well given the quality of the meta-recorded dataset. However, I didn’t realize I was about to encounter my third mistake. Teachable Machine audio models only export as TF.js or TFLite and not as Keras, which was the model type that I was most used to handling. I had also already found an srt captioning tool on GitHub that I wanted to base my modified tool off of (this one, auto-subtitle, uses Whisper oooo), but it was in Python, and I thought the easiest way to combine the tools would be to load the model with plain TensorFlow. Determined to work with something I was at least somewhat familiar with, I tried converting the TF.js layers model into a TF saved model. To do this, I used the tfjs-converter that TensorFlow already provides for situations like these. After some annoying brew issues, I converted the model to Python. Which is where I’d encounter my fourth mistake.

What I didn’t realize while I was converting my model was that Teachable Machine’s audio component is based on the model topology of the TensorFlow.js Speech Commands model, which, as the name implies, is built on top of TensorFlow.js. There isn’t any documentation on non-JavaScript implementations or workarounds, which ultimately dissuaded me from testing whether my converted Keras model would even work.

At this point, I thought, maybe I shouldn’t even be using Teachable Machine at all? (Which was probably what I should’ve done in the first place, since the amount of time I’ve spent trying to work around unimplemented things would’ve been enough to train an audio classification model.) I looked at RunwayML’s audio tools. Currently, their selection is very limited (four tools: noise reduction, silence gap deletion, caption production and real-time captioning), and it wasn’t exactly what I wanted either. I encountered the same problem as I did with vosk: it only recorded words.

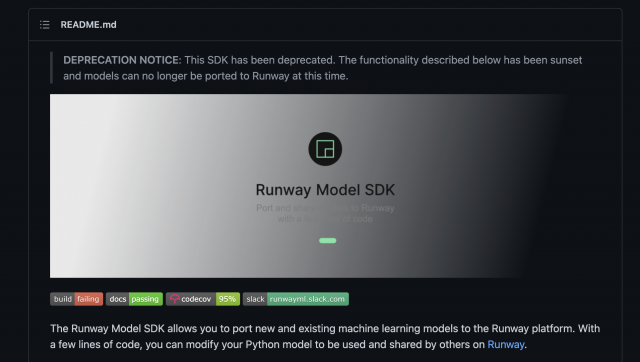

At this point I turn to the almighty Coding Train videos to give me some more insight, and from there I learn about RunwayML-SDK, where you should be able to port your own trained models into their built-in tools. Wow, perfect! me thinks with excess zealotry. For when I hath opened the github repo, what did I see?

This repository was archived + deprecated LAST MONTH (November 8th 2022).

After a whimsical look at some p5.js (ml5 SoundClassifiers default to the microphone, and I could find no documentation on other audio input sources, either on their website or in the code itself), I was back at square one.

Although there are many captioning repositories written in Python, there are almost none written in JavaScript. The most I could find was this one, api.video, which is a fancier fork of another repository that is basically a wrapper for a transcription service, Authot (interesting name choice). They just send the video over to Authot, get the vtt file and return it as is.

At this point I realize that I’m back to square one. I initially wanted to use Teachable Machine because of personal time constraints; I thought it’d be an easier way for me to get the same final product. But given the kind of probing I had to do (I was completely in the dark about most of these things before this project), it was not worth it. Next steps for me would be to just train an audio classification model from scratch (which isn’t bad, I just allocated my time to the wrong issues) and take my captioning from there in Python. For now, I have my tiny slurp dataset. :/