This post was based on an email to Golan.

I’m pretty interested in the tools of the creative tech trade, and there are a lot of them, some I’ve used and some I’m curious about, that I can recommend as helpful for this course.

60-461/761: Experimental Capture

CMU School of Art / IDeATe, Spring 2020 • Profs. Golan Levin & Nica Ross

360 soundscape of a meal

A 360 video and audio composition isolating every sound recorded during a meal (both self-produced and peripheral sounds) alongside its decontextualized visual representation: a mask will partially cover the video footage, uncovering only the area that produces each sound.
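A minimal sketch of how such a mask could decide which pixels to uncover, assuming the 360 video is stored as an equirectangular frame (azimuth across the width, elevation down the height) and that the direction of each sound is already known, for example from a spatial microphone. The window size and the function names are hypothetical, not part of the proposal.

```python
def pixel_to_angles(x: float, y: float, width: int, height: int):
    """Equirectangular frame: x spans azimuth -180..180, y spans elevation +90 (top)..-90 (bottom)."""
    azimuth = x / width * 360.0 - 180.0
    elevation = 90.0 - y / height * 180.0
    return azimuth, elevation


def is_uncovered(x: float, y: float, width: int, height: int,
                 src_az: float, src_el: float,
                 half_w_deg: float = 15.0, half_h_deg: float = 15.0) -> bool:
    """True if the pixel lies inside the (hypothetical) window around the sound source."""
    az, el = pixel_to_angles(x, y, width, height)
    # Wrap the azimuth difference into [-180, 180) so the window works across the frame seam.
    d_az = (az - src_az + 180.0) % 360.0 - 180.0
    return abs(d_az) <= half_w_deg and abs(el - src_el) <= half_h_deg
```

Working in angles rather than pixels keeps the window the same apparent size for any video resolution, and the wrap-around handles a sound that sits right on the left/right edge of the unwrapped frame.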

A dining visual score

Inspired by Sarah Wigglesworth’s dining tables, I would like to explore the conversation between the objects used during a meal. To do this, I will track the 3D positions and movements of cutlery, plates, utensils, and glasses during a meal for one or more people. Afterwards, I will create a video composition with 3D-animated objects that act by themselves, video fragments of the people spatially placed where they were seated, and audio pieces of the performance.

A meeting of conversational fillers

As a non-native English speaker, I have lately noticed that when I am very tired my mind can detach from a conversation, and suddenly the only things I can hear are repetitive words and conversational fillers. I would like to reproduce that experience in the same way as my first idea, capturing a 360 video of decontextualized ‘uh’, ‘um’, ‘like’, ‘you know’, ‘okay’, and so on.

Grillboard

A billboard on your teeth.

The Grillboard will be a set of customizable grillz that allows the wearer to interchange the cap on each tooth to create various statements and phrases. Using dental photogrammetry, a 3D model of the mouth will be made and used for the base grill. This grill will be all black. From that grill model, white caps will be made to be inserted over the black grill. The facade of each cap will have a cut-out letter; for each exposed tooth (~16) there will be 26 (A-Z) interchangeable letters.

Alternatively or in addition, multiple grillz will be made to create a scrolling text stop motion animation.
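The numbers above can be sketched quickly: with ~16 exposed teeth and 26 letters each, a full set needs 16 × 26 = 416 caps. For the scrolling stop motion, each frame is one grill showing a 16-letter window that slides across the message. This sketch assumes a hypothetical blank cap (shown as a space) for padding before and after the text; the proposal only mentions A-Z caps.

```python
TEETH = 16     # approximate number of exposed teeth, from the proposal
ALPHABET = 26  # A-Z caps per tooth
BLANK = " "    # hypothetical blank cap used for padding (an assumption)


def caps_needed(teeth: int = TEETH, alphabet: int = ALPHABET) -> int:
    """Caps required so that every exposed tooth can show every letter."""
    return teeth * alphabet


def scroll_frames(message: str, teeth: int = TEETH) -> list:
    """One string per stop-motion frame (one grill each), text scrolling right to left."""
    padded = BLANK * teeth + message.upper() + BLANK * teeth
    return [padded[i:i + teeth] for i in range(len(padded) - teeth + 1)]


# Example with a 4-tooth grill to keep the output short.
for frame in scroll_frames("hi", teeth=4):
    print(repr(frame))
```

A message of n letters therefore needs n + teeth + 1 grills to scroll fully on and off the mouth.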

https://www.dazeddigital.com/beauty/head/article/42357/1/grills-maker-juanita-grillz

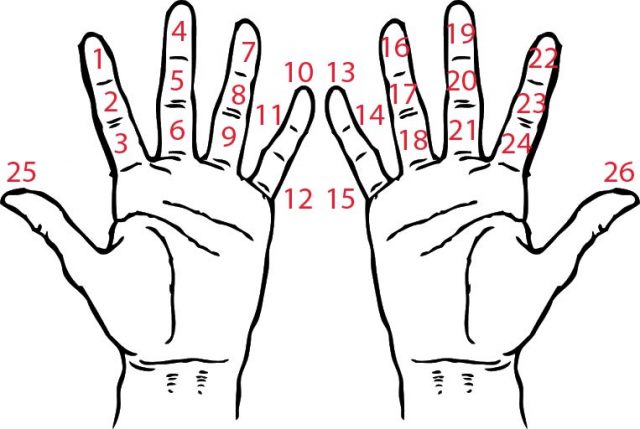

Slime Language

A communication system that crosses the English alphabet with sign language, a Ouija board, tattoos, and gang signs.

Slime Language is a system that uses the phalanx sections of the fingers (proximal, middle, and distal) to plot the English alphabet on the hand. By pivoting and pointing with the thumb, the user can form words and sentences, creating silent, performative communication between individuals. The placement of each letter is determined by its frequency in the language and its accessibility on the hand.
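One way to sketch the placement rule: rank letters by how often they appear in a sample text, rank hand positions by how easy they are to reach, and pair them in order. The position names and their ranking below are hypothetical; the proposal does not say how many positions the hand layout has, so letters beyond the available positions are simply returned as unassigned.

```python
from collections import Counter
import string


def rank_letters(corpus: str) -> list:
    """All 26 letters, most frequent first (ties broken alphabetically)."""
    counts = Counter(c for c in corpus.lower() if c in string.ascii_lowercase)
    return sorted(string.ascii_lowercase, key=lambda c: (-counts[c], c))


def assign_letters(corpus: str, positions: list):
    """Pair frequent letters with accessible positions (positions listed easiest first)."""
    ranked = rank_letters(corpus)
    mapping = dict(zip(ranked, positions))
    unassigned = ranked[len(positions):]
    return mapping, unassigned


# Hypothetical accessibility ranking for three phalanx sections.
layout, leftover = assign_letters("eeee ttt aa o",
                                  ["index-distal", "index-middle", "index-proximal"])
print(layout)
```

Frequencies could come from any representative English text, such as the letter-frequency tables linked below.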

https://www.rypeapp.com/most-common-english-words/

https://www3.nd.edu/~busiforc/handouts/cryptography/letterfrequencies.html

https://www.britannica.com/topic/code-switching

CFA hallway in the Wean Physics department:

I want to set up a number of Kinects or motion detectors in the CFA art hallway to track the movement of people, as well as some microphones to capture ambient sounds. In real time, I want to send this information to an array of LED lights in the Wean physics hallway and have the lights respond to the movement in CFA as well as to the captured, distorted sounds. By doing this, I am creating a ghostly image of the people in one location in another.
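A minimal sketch of the real-time mapping, assuming the sensors report each person's position as a distance along the CFA hallway and that the Wean LEDs form a single strip running the length of the hallway. Each update fades every LED and then lights the LEDs under the people currently detected, so moving figures leave a short ghost trail. The decay value and names are hypothetical, and the sound side is left out.

```python
from typing import List, Sequence


def update_leds(brightness: List[float], positions_m: Sequence[float],
                hallway_length_m: float, decay: float = 0.8) -> List[float]:
    """Return new LED brightness values (0..1) for one frame."""
    n = len(brightness)
    # Fade the previous frame so people leave a fading trail behind them.
    faded = [b * decay for b in brightness]
    for x in positions_m:
        # Clamp each position to the hallway, then scale it into the LED index range.
        x = min(max(x, 0.0), hallway_length_m)
        idx = min(int(x / hallway_length_m * n), n - 1)
        faded[idx] = 1.0
    return faded
```

Calling this once per sensor frame and sending the result to the LED controller gives the basic effect; the audio could later modulate `decay` or overall brightness.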

Blood pressure monitor:

I want to monitor the blood pressure of a CMU freshman, sophomore, junior, and senior and create different vests that respond to their blood pressure. If their blood pressure rises, the vest will tighten around the individual and play high-tempo music. If their blood pressure decreases, the vest will loosen and the music will change to something more relaxing and soothing.
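The vest's behavior can be sketched as a simple control rule that compares each new reading to the wearer's own baseline. The 5 mmHg deadband and the use of systolic pressure are assumptions chosen for illustration; the deadband keeps the vest from reacting to every small fluctuation.

```python
from dataclasses import dataclass


@dataclass
class VestCommand:
    action: str  # "tighten", "loosen", or "hold"
    music: str   # "high tempo", "relaxing", or "unchanged"


def vest_response(systolic_mmhg: float, baseline_mmhg: float,
                  deadband_mmhg: float = 5.0) -> VestCommand:
    """Decide what the vest does for one reading (hypothetical thresholds)."""
    delta = systolic_mmhg - baseline_mmhg
    if delta > deadband_mmhg:
        return VestCommand("tighten", "high tempo")
    if delta < -deadband_mmhg:
        return VestCommand("loosen", "relaxing")
    # Readings inside the deadband leave the vest and music as they are.
    return VestCommand("hold", "unchanged")
```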

Climbers, one finger holds:

I have no idea yet what I want to do with this one; I just think it is crazy that people can do this.

Here are a few ideas I’m mulling over for the project. I’m really interested in exploring the unique dynamics and kinematics of the human body. Though there are some interesting ideas listed below, I don’t feel that my final framing is among them.

3 Ideas I had for the project:

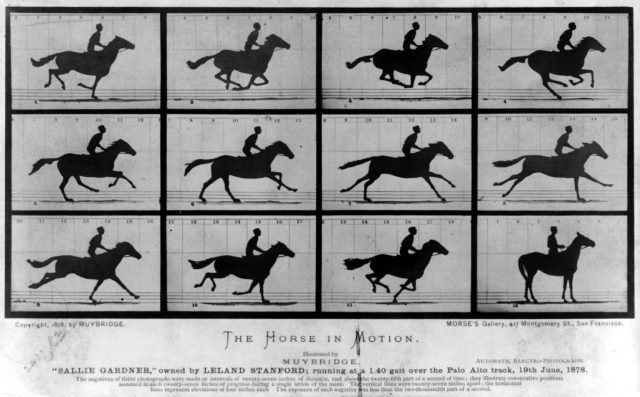

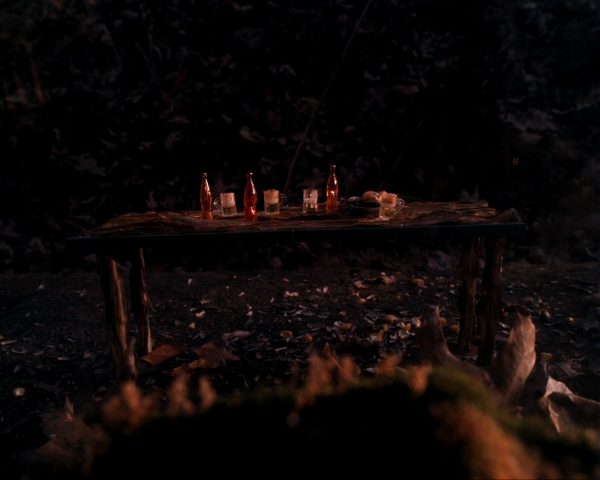

My typology machine is a system for capturing ‘human speed’ videos of squirrels in my backyard interacting with sets that allow their behaviors to be anthropomorphized in a humorous and uncanny manner. It was important to me that the sets and the animals’ interactions felt natural but off, playing with the line between reality and fiction.

I used the Edgertronic high-frame-rate camera to capture this footage, and over the course of 4 weeks I trained the squirrels in my yard to repeatedly come to a specific location for food.

Initial questions: What lives in my backyard? What brings them out of their holes? And can I get them to have dinner with me?

Inspiration

Typology

Project link: https://www.lumibarron.com/sciuridaes

instagram @sciuriouser_and_sciuriouser

Process

Bloopers: https://www.lumibarron.com/copy-of-sciuridaes

Sets Images:

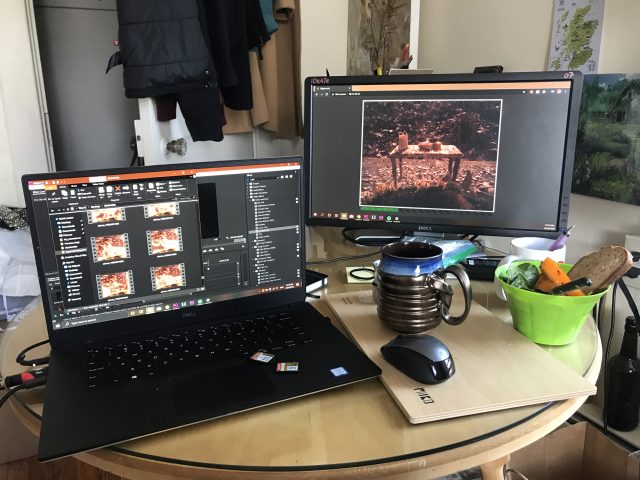

Set Up:

Observation window setup: a 100 ft Ethernet cable runs from the camera in the greenhouse to a laptop, and the image is projected onto an external monitor for a larger view, so that other work can be done while waiting for the squirrels to make their way to the table.

An initial table setup, from when I was still attempting to get the squirrels to sit in chairs. I soon realized that this was not feasible: squirrels are incredibly stretchy.

Challenges:

One of the greatest challenges arose from the Edgertronic’s limited recording time and the speed at which it processes footage and saves it to the memory card. At full resolution (1280x1280) and 400 fps, the longest possible recording time was 12.3 seconds, and processing this footage then took just over 5 minutes. This heavily limited the amount of footage and interaction I could capture: often, by the time the video had finished saving, the squirrel was long gone with much of my tiny china in tow.

I ended up setting the capture time to a shorter duration, approx. 2 seconds. This made for a significantly faster processing time and more opportunities to capture multiple shots of a squirrel’s interaction with the set. However, it also meant that if a recording was triggered too soon or too late, the interaction I was looking for was often cut short or missed entirely. With this camera, a motion-tracking trigger system would not have been a useful tool for this project.
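The trade-off above is easy to put in numbers. The frame counts follow directly from duration × frame rate. The playback length assumes the footage is played back at 30 fps, and the processing estimate assumes processing time scales linearly with frame count, using the ~5 minutes (300 s, a lower bound) reported for a full 12.3 s clip. Both are assumptions, not camera specifications.

```python
def capture_stats(record_s: float, capture_fps: int = 400, playback_fps: int = 30):
    """Frames recorded and seconds of slow-motion footage at the playback rate (assumed 30 fps)."""
    frames = round(record_s * capture_fps)
    return frames, frames / playback_fps


def est_processing_s(frames: int, ref_frames: int = 4920, ref_s: float = 300.0) -> float:
    """Linear estimate scaled from the full-length clip (an assumption about the camera)."""
    return ref_s * frames / ref_frames


print(capture_stats(12.3))   # full-length clip
print(capture_stats(2.0))    # shortened clip
print(est_processing_s(800))
```

Under these assumptions a full clip is 4,920 frames (about 164 s of footage at 30 fps), while a 2 s clip is 800 frames (about 27 s) and would process in under a minute, which is why shorter clips gave more chances per squirrel visit.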

Another limitation was the dependency on very bright light to get good images with high-frame-rate recording. Clouds, indirect sunlight, shadows from trees, and too little sunlight all significantly darkened the images. I was not able to find a setup for the external light that both kept it safe from the elements for an extended time and successfully lit the set. Footage had to be taken within a limited time window, and luck with the weather played a very large part in the success of the images.
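The need for bright light follows from the frame rate: each frame's exposure can last no longer than one frame interval, so at 400 fps the shutter is open for at most 1/400 s. The sketch below compares that with a conventional 1/60 s exposure (the reference shutter speed is an assumption for comparison) and expresses the difference in photographic stops, where each stop halves the light.

```python
import math


def stops_lost(ref_shutter_s: float, fps: float) -> float:
    """Stops of light lost versus a reference exposure when shooting at `fps`."""
    max_shutter_s = 1.0 / fps  # exposure cannot exceed one frame interval
    return math.log2(ref_shutter_s / max_shutter_s)


print(round(stops_lost(1 / 60, 400), 2))
```

Roughly 2.7 stops means the set needs about 6.7 times as much light for the same exposure, so losing direct sun quickly makes the footage too dark.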

Initial ideation:

Project Continued

This is a project I will continue to work on throughout the semester for as long as the camera is available. The squirrel feed has recently attracted deer, and I would like to start playing with sets for different backyard creatures at different frame rates (from insects to chipmunks, groundhogs, and deer). I have enjoyed this project immensely, and I have set up a system that lets me keep up with my other work while observing the animals.

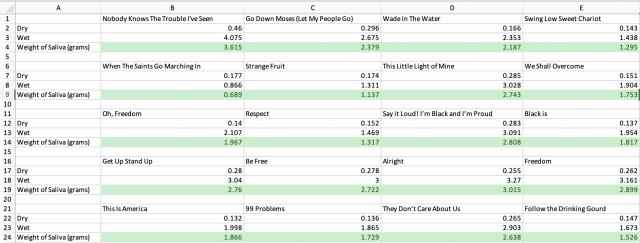

Salivation of Salvation is an experimental capture of the amount of saliva produced while performing songs that were created during periods of black oppression to uplift people or bring attention to wrongdoing. The 20 songs span from 1867 to 2018, through American slavery, the Jim Crow era, black power movements, the civil rights movement, and the Black Lives Matter movement. The chosen songs include the genres of slave songs, gospel, jazz, pop, and hip hop. Inspired by Reynier Leyva Novo’s The Weight of History, Five Nights, “Salivation of Salvation” combines black DNA with black history.

Featured Songs:

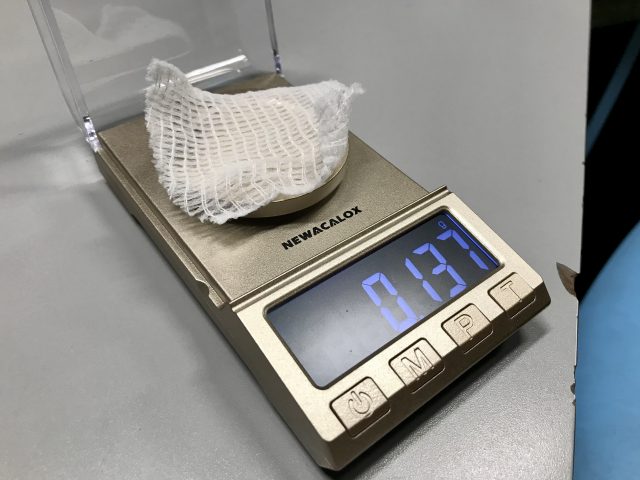

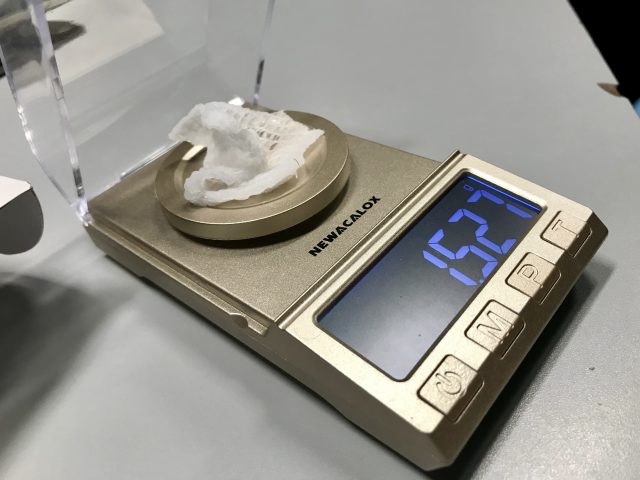

Salivation of Salvation is based on the study of sialometry, the measurement of saliva flow. Sialometry uses stimulated and unstimulated techniques to produce results from pooling, chewing, and spitting. Although the practice is more commonly used to investigate hypersalivation (which would be easier to measure due to the excess of non-absorbed liquid), I realized I would need a more sensitive system to record the normal rate of saliva production.

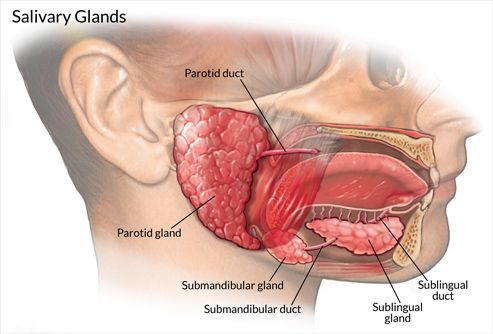

In the mouth there are three pairs of major salivary glands: the sublingual, submandibular, and parotid glands. The sublingual glands are the smallest and sit on the floor of the mouth; they secrete constantly. The submandibular glands lie in the submandibular triangle of the neck, below the floor of the mouth, and also produce saliva constantly. The parotid glands are the largest; they sit in front of and just below the ears, and their ducts open into the mouth near the upper molars. These glands secrete mainly in response to stimulation.

The majority of saliva gets absorbed into the gums and tongue, and the remaining fluid rolls back down the throat. This makes the tongue the target area for my project, since it is the pathway to the throat and is in contact with the gums.

The system I developed was an artificial tongue that could be weighed dry and then weighed wet; the difference between the two values is the weight of the saliva collected. This was done simply and effectively by laying a strip of gauze that covered the entire tongue (the foreign object also helped trigger the parotid glands). The gauze was weighed with a jewelry scale that displays weight to the milligram. Converting weight to volume was simple because saliva has nearly the same density as water, so 1 mg corresponds to about 1 µl (and 1 g to about 1 ml). The recorded amount was then transferred into 5 ml vials by drooling, which had to be done after the measurements were taken.
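The weighing step reduces to one subtraction and one division. This sketch assumes a saliva density of 1.0 g/ml, which is close to water; since 1 g/ml equals 1 mg/µl, dividing the collected mass in milligrams by the density gives the volume in microliters. The function name is hypothetical.

```python
def saliva_volume_ul(dry_mg: float, wet_mg: float, density_g_per_ml: float = 1.0) -> float:
    """Volume of saliva absorbed by the gauze, in microliters."""
    mass_mg = wet_mg - dry_mg
    if mass_mg < 0:
        raise ValueError("wet weight must not be less than dry weight")
    # 1 g/ml == 1 mg/µl, so mg divided by density gives µl directly.
    return mass_mg / density_g_per_ml


print(saliva_volume_ul(250.0, 1250.0))  # 1 g of saliva collected
```

A 1,000 mg gain is therefore about 1,000 µl, or 1 ml, so a full 5 ml vial corresponds to roughly 5 g of saliva.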

The purpose of Salivation of Salvation was to make a tangible representation of history. Saliva holds our genetic composition, and by tying this constantly self-producing liquid to a song that marked a specific moment, an object that is both generally and personally “historical” is created. Patterns of history also emerged in the collected data. Below is a list of observations:

Reference:

https://www.hopkinssjogrens.org/disease-information/diagnosis-sjogrens-syndrome/sialometry/

In this project I explored the emergent textures of my worn-out stuff: an overused toothbrush, a pill bottle that’s been in my bag for way too long, a charging cord that’s slowly busting out of its casing. I explored the textures of these things through a robot-controlled photogrammetry system (robo-grammetry).

I began this project with the following project statement:

“I interact with a myriad of objects in a single day; oftentimes these are things I carry with me at all times, such as my pencil, sketchbook, or a bone folder. What patterns might emerge if I sample each of these objects and put them on display?”

As I worked on this project my goals for and understanding of it developed. This project has become an exploration of the unique signature of wear and degradation I leave on some of my things.

This project emerged from my learning goal of gaining familiarity with the Studio for Creative Inquiry’s Universal Robots arm. I was able to make the robot step through a spherical path, staying oriented on one point in a working frame, while an Arduino-controlled shutter release triggered a camera in sync with the robot’s motion. The robot script was laid out so that I could calibrate the working frame to the plane of my cutting mat. The goal of all this was to create highly standardized photogrammetric compositions.

My capture machine was a robotically guided camera that took 144 distinct photos of a sampled object while stepping through a spherical path.
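A spherical path like this can be sketched as a grid of camera positions on a hemisphere above the object, each paired with the direction from the camera back to the object so the lens stays aimed at one point. The 12 × 12 grid (which gives 144 poses), the 10° to 80° elevation range, and the function name are assumptions; converting each position and direction into the arm's own pose format is left out.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def spherical_path(radius: float, n_azimuth: int = 12, n_elevation: int = 12,
                   min_elev_deg: float = 10.0, max_elev_deg: float = 80.0,
                   target: Vec3 = (0.0, 0.0, 0.0)) -> List[Tuple[Vec3, Vec3]]:
    """Camera positions on a hemisphere, each with a unit vector pointing at the target."""
    poses = []
    for i in range(n_elevation):
        # Evenly spaced elevation rings between the minimum and maximum angle.
        frac = i / max(n_elevation - 1, 1)
        elev = math.radians(min_elev_deg + (max_elev_deg - min_elev_deg) * frac)
        for j in range(n_azimuth):
            az = 2 * math.pi * j / n_azimuth
            x = target[0] + radius * math.cos(elev) * math.cos(az)
            y = target[1] + radius * math.cos(elev) * math.sin(az)
            z = target[2] + radius * math.sin(elev)
            # Every point is exactly `radius` from the target, so dividing by it normalizes.
            look = ((target[0] - x) / radius, (target[1] - y) / radius, (target[2] - z) / radius)
            poses.append(((x, y, z), look))
    return poses


path = spherical_path(0.3)  # e.g. a 30 cm radius around the object on the cutting mat
print(len(path))
```

Keeping every photo at the same distance and aimed at the same point is what makes the resulting models directly comparable.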

An Arduino opened the camera’s shutter every 3 seconds, while the robot moved to a new point every 3 seconds. The two processes were synced at the beginning of every capture.
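Because the shutter and the robot run on two separate 3-second clocks, any small timing error in one of them accumulates over a capture, which is why syncing at the start of every capture matters. This sketch shows the arithmetic; the 10 ms per-step error is a hypothetical value chosen only to show the effect.

```python
def capture_timing(n_photos: int = 144, interval_s: float = 3.0,
                   per_step_error_s: float = 0.0):
    """Total capture time and accumulated clock drift between shutter and robot."""
    total_s = n_photos * interval_s
    drift_s = n_photos * per_step_error_s
    return total_s, drift_s


print(capture_timing())                        # ideal clocks
print(capture_timing(per_step_error_s=0.01))   # hypothetical 10 ms error per step
```

A full capture takes 432 s (7.2 minutes); with a 10 ms error per step, the shutter would be off by about 1.44 s by the last photo, nearly half an interval, enough to fire while the arm is still moving.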

The goal of this project was to capture the textures of my everyday objects and see what might emerge from that capture. Somewhere along the line, my focus moved to the identifiable signs of wear and degradation on the objects I interact with. Photogrammetry allowed me to capture these textures in a unique, three-dimensional way, and the robot arm allowed for extreme standardization of the capture.

Full library of models can be accessed here.

With respect to my technical and learning goals, this project was a success.

In terms of content and articulation, this project feels unfinished. I spent much of it focusing on the capture system, and only in the last week have I been able to see the output of the machine. This system has the potential to capture the distinct degradation of people’s things, and I believe a larger set of models would reveal more about how people consider and interact with their stuff. This typology might be more meaningful if I had captured more objects.

This project was difficult because at moments it felt like a self-portrait of my neurotic tendencies (the typology machine) and of my neglected, worn-down objects (the captured objects).

As stated in the project proposal, this project was inspired by a sequence of photos in the film 20th Century Women. The photos are a typology of one character’s things: lipstick, a bra, her camera, etc. Through the composition and curation of these objects, a unique portrait emerges. My goal in this project was to use my own objects to create a self-portrait.

In addition, this project’s commitment to standardization connects to the use of cameras and capture in the scientific process.