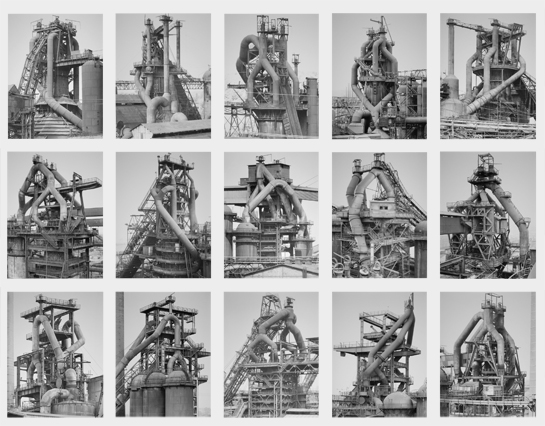

I work on livecoded music pretty much every day. Given its academic origins, I figured I’d check out the recent literature to find something for this post. I stumbled upon an inspiring paper by Francesco Ardan Dal Rì and Raul Masu, who designed two different systems for visualizing TidalCycles patterns and audio characteristics in real time. Livecoding visualizers aren’t new. What’s new (to me at least) is how they introduce the visualizer as a “score” and investigate its potential value to the performer.

One visualizer is focused on composition structure, and the other on sound characteristics. I’ve been interested in making such a system myself for some time because, in addition to helping the performer conceptualize what they’re doing, it gives an audience something more engaging to stare at than someone standing at a laptop for half an hour.

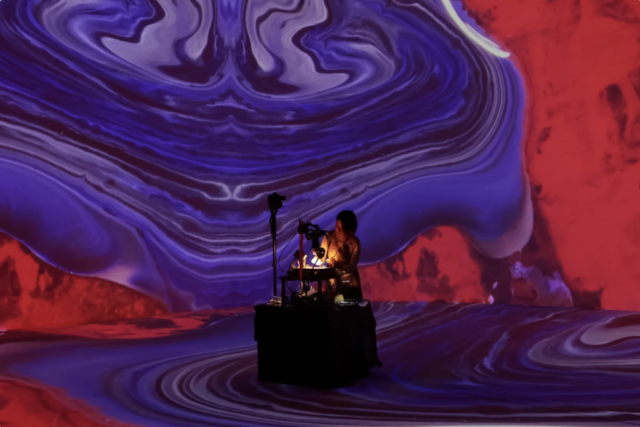

I find this latter function interesting, because few electronic instruments require the same kind of tactile and gestural engagement as traditional instruments, making for a fundamentally different (and generally duller) physical presence on stage. Audio-reactive live visuals solve the “something to look at” problem, but they rarely solve the “I get to see what they’re doing” problem. I’d consider this paper’s efforts much closer to an actual solution, as the visuals are tied directly to pattern events in a comprehensible way. That they don’t touch on audience interpretation of the visualizers feels like a bit of a missed opportunity, but that investigation would probably have diluted the focus, and it deserves its own paper.

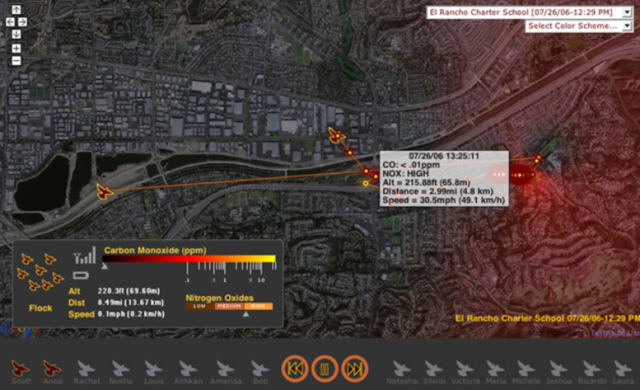

Visualization “Time_X” – focuses on pattern events over time

Visualization “Time_Z” – focuses on parameters of pattern events

As far as critiques go, none of the content jumps out as lacking. They give a thorough review of existing tools and literature, and provide solid evidence and arguments for the value of their visualization tool. My biggest critique is the lack of supporting media. I could not find the code used, nor could I find any videos of the visualizations in action. At the very end, they do mention “the systems presented in this paper have been developed using FLOSS, and will be release in creative common,” but they didn’t link to it in the footnotes, which feels like a massive oversight. A fair amount was written about how the different visualizations influenced composing and what limitations were discovered during use, so it feels strange to me that they wouldn’t want to give the tool life past the paper and let others experiment with and iterate directly on what they’ve successfully argued is worth exploring.

Many papers are referenced, but two main influences are established: Thor Magnusson’s “Threnoscope”, an environment and visualizer best suited to drone music, and Ivan Abreu’s “Didactic pattern visualizer”, which the Time_X visualization seems like a very direct extension of. I’d dig much deeper, but this post is already far past 150 words, so I’d just encourage checking the tools out yourself. They’re both well documented.

References:

– https://nime.pubpub.org/pub/ex3udgld/release/1

– https://thormagnusson.github.io/threnoscope/

– https://github.com/ivan-abreu/didacticpatternvisualizer
