Throughout my childhood, the one thing I always loved was the arcade. I remember it with great fondness: the bright lights, the frenetic energy, and the overall atmosphere always seemed to bring out the excitement in everyone, child or adult. I sought solace in this space and its positive aura. I would always be grinning from ear to ear as I skipped from one side of the arcade to the other, playing game after game. I was happy. I was calm. I was content.

But at some point in the night, I would always arrive at one game in particular: a game that made my blood boil, a game that was relentless. It was the bane of my existence, my Achilles' heel: the dreaded claw machine.

Growing up never winning a prize at that game has probably manifested as an all-consuming need to see others experience similar suffering. I initially planned to collect facial expressions that convey frustration by recording people at the moment the item they are trying to win slips out of the claw. However, the idea soon evolved into capturing a much more weighted reaction: the general feeling of loss. While the game is simple and lighthearted, I feel these specific, fleeting moments carry a much deeper significance.

Unexpectedly, the limitations of the toy claw machine I used (each round lasts only 60 seconds) allowed me to diversify my capture, recording not just reactions to loss but different kinds of loss.

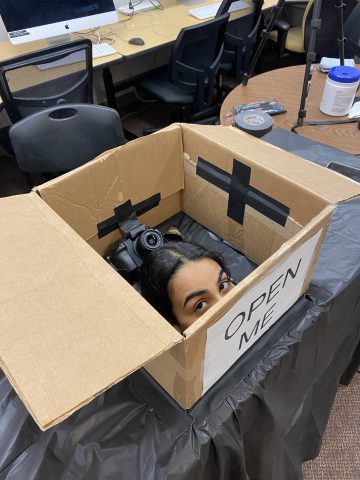

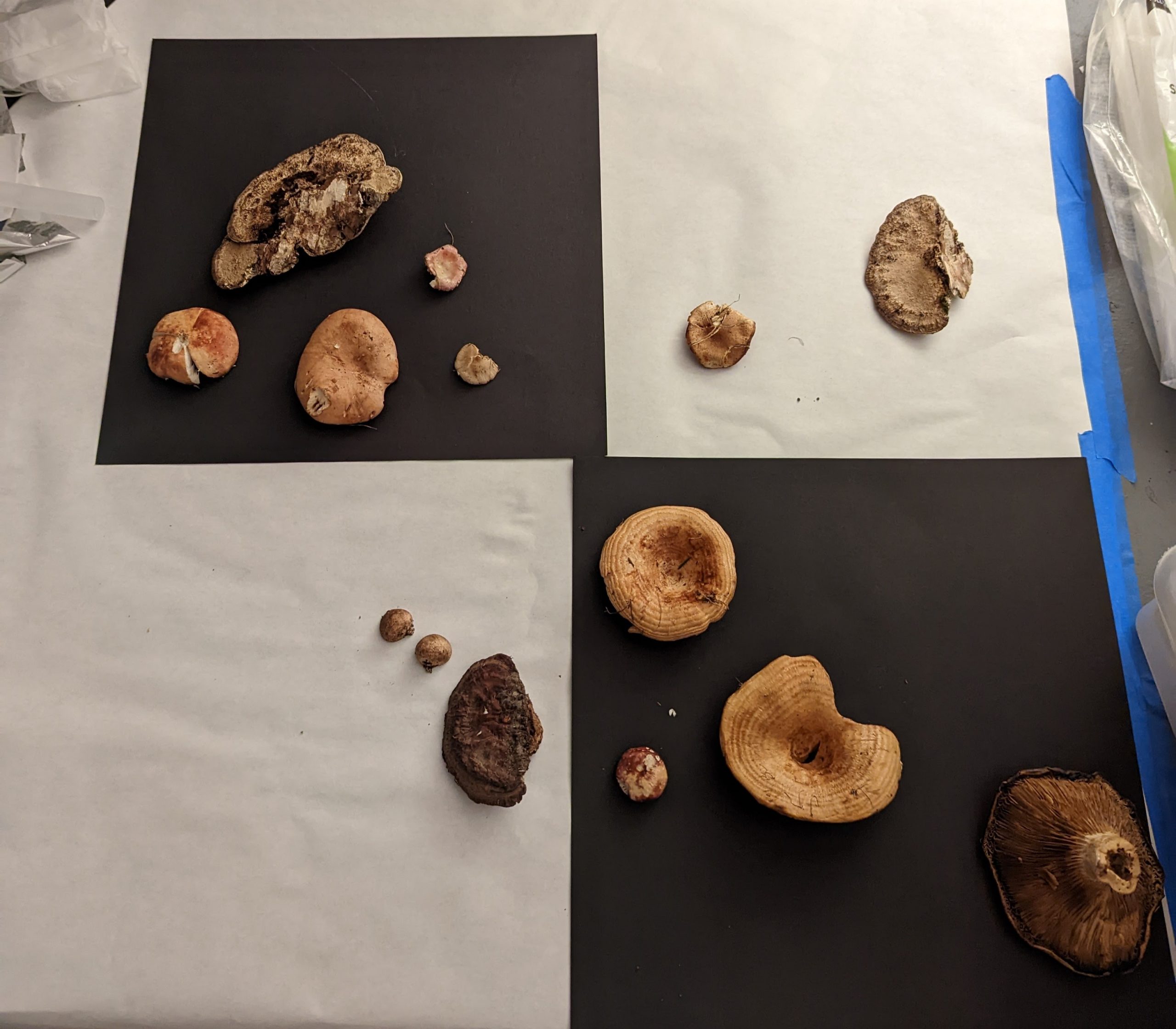

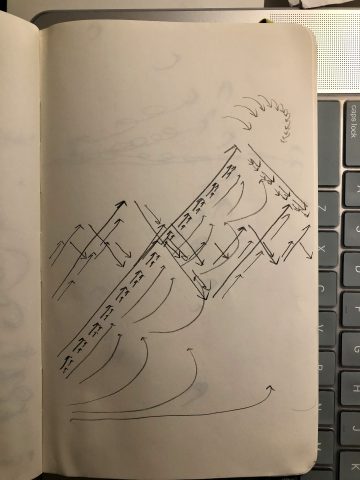

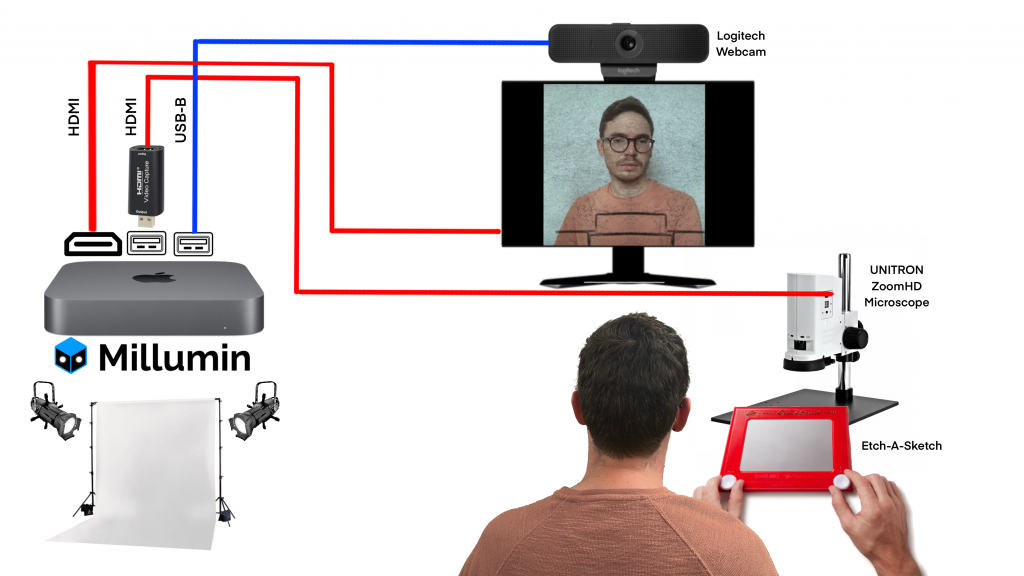

I split my data collection into two parts: reactions to the loss of time and reactions to the loss of an object (in this case, the paper balls). I used three cameras (a GoPro, a Z Cam, and a Nikon DSLR), all pointed at the subject from different angles and distances, to record each person playing. Each participant was given three coins, each granting one minute of play time. To win a prize (a mini pack of M&Ms), they had to collect three paper balls in a single round. The final video consists of four parts: the intro, the control group (footage of how people look while simply navigating the claw around), the captures of the loss of time, and the captures of the loss of an object.

(I also placed a trick ball inside the machine, which was actually a piece of tape I stuck to the floor, to make the game even more confusing for some!)

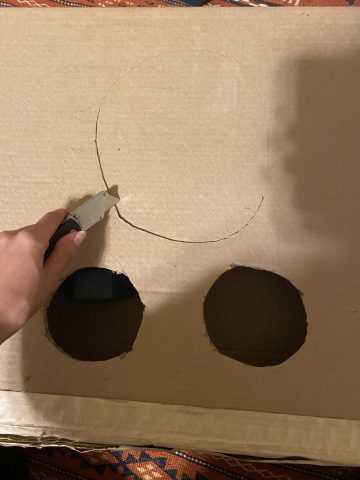

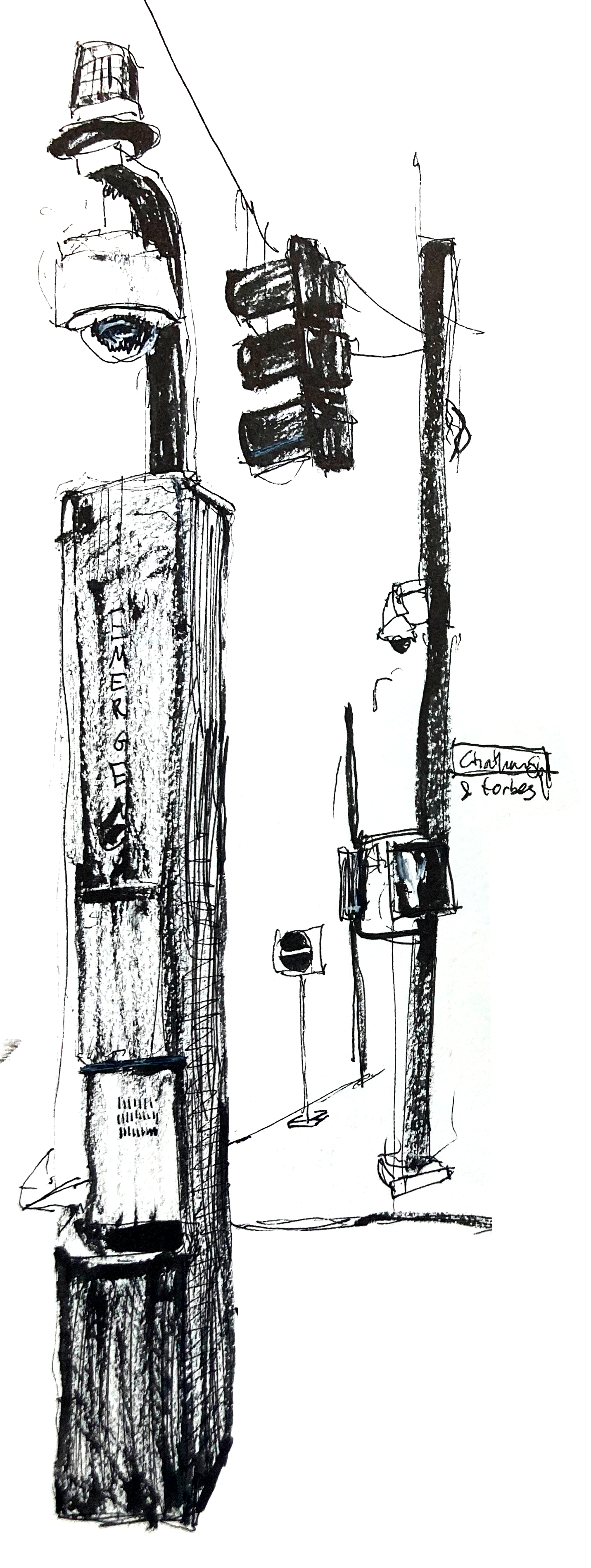

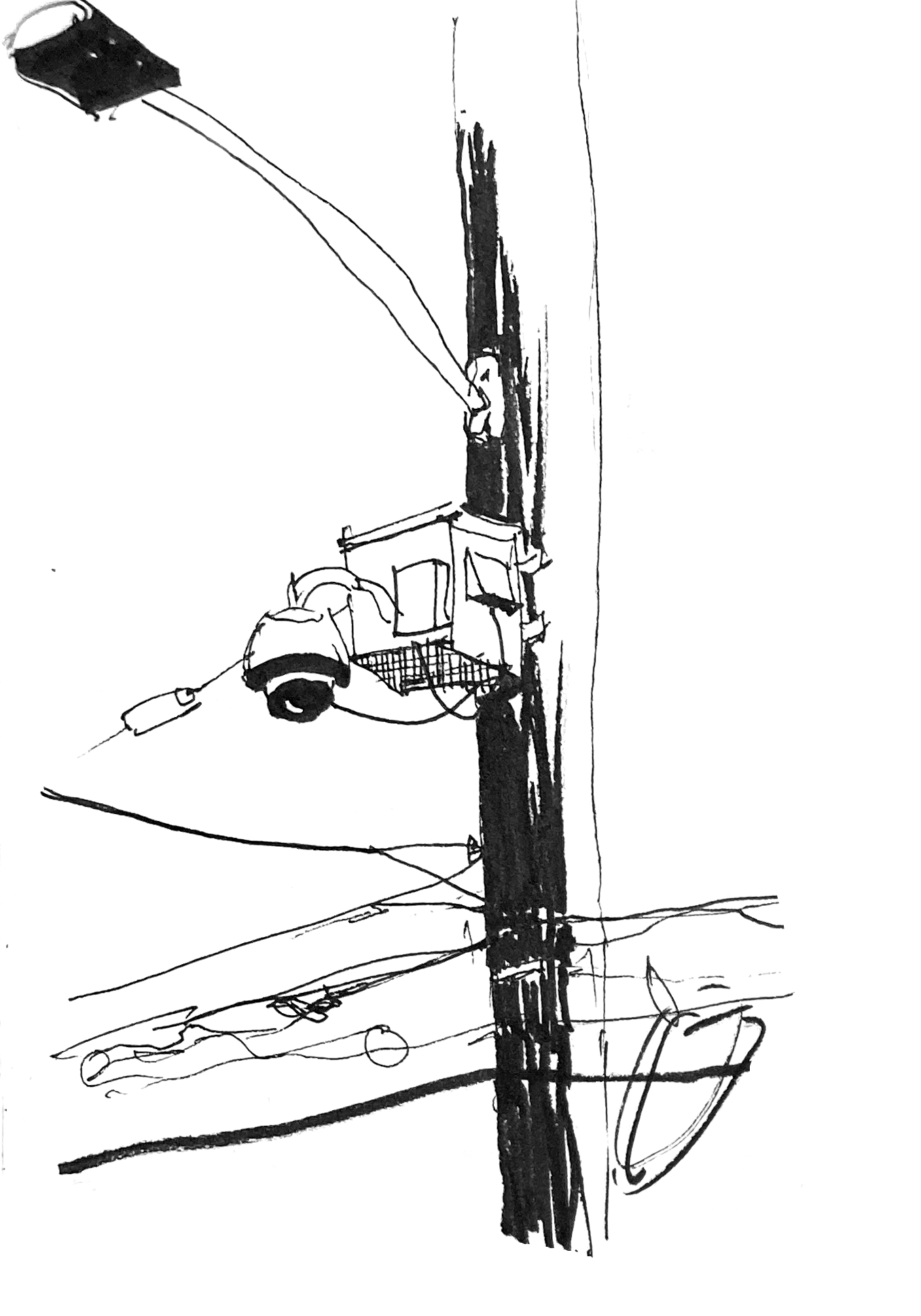

My setup looked like this:

Two works inspired me most. Conceptually, I was drawn to *Shooter* (2000) by Beate Geissler and Oliver Sann, because I find the idea of capturing the particular moment when emotion and concentration both peak very intriguing. For assembling the footage, I was inspired by how Ray and Charles Eames presented a typology through a montage film in *Tops* (1969). I used the claw machine's own audio as the backing track, and since the machine's tempo changed as players progressed, the footage had to be paced to match. The montage format made it easiest to sculpt that timing and narrative.

If I could do this over again, I would make sure every participant is seated in the same position for each take and would stabilize the lighting so there are fewer inconsistencies in the overall composition. Still, I am pretty content with the end result!

**Content Warning: Profanity/Swearing**