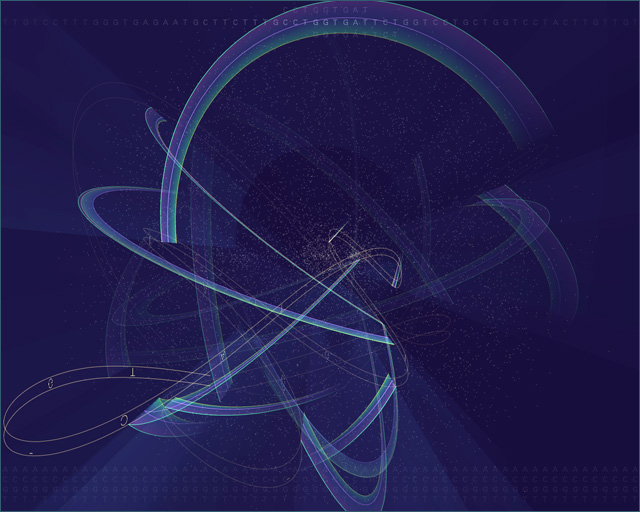

I used the rotating hexagons and their pointers to represent the second hand. The smallest circles complete a turn every minute, like a second hand; the medium circles complete a turn every hour, like a minute hand; and the largest circles complete a turn every day, like the hour hand on a 24-hour dial. The background fades from dark to white over 24 hours, representing a day. It is fun to think of various representations of time.
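The increments used in the sketch (6, 0.1, and 0.1/24 degrees per frame) follow from dividing a full turn by the number of seconds in each period. Here is a minimal sketch of that arithmetic, assuming the sketch runs at one frame per second as set by `frameRate(1)`; the helper `degreesPerTick` is a hypothetical name and is not part of the original code.

```javascript
// Hypothetical helper, not part of the original sketch: degrees to advance
// on each frame so that one full turn takes `periodSeconds`, assuming the
// sketch draws `fps` frames per second (the sketch uses frameRate(1)).
function degreesPerTick(periodSeconds, fps = 1) {
  return 360 / (periodSeconds * fps);
}

const perMinute = degreesPerTick(60);        // 6, matches angleEllipse1 += 6
const perHour = degreesPerTick(60 * 60);     // 0.1, matches angleEllipse2 += 0.1
const perDay = degreesPerTick(24 * 60 * 60); // same rate as angleEllipse3 += 0.1/24
```

The same reasoning gives the background step: 255 brightness levels spread over 24 × 3600 seconds is `255/24/3600` per frame.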

function setup() {

createCanvas(480, 480);

background(220);

text("p5.js vers 0.9.0 test.", 10, 15);

frameRate(1)

angleMode(DEGREES)

rectMode(CENTER)

}

var s = [100,20,100]

var angles = 0

var angles1 = 150

var angles2 = 150

var radius = 0

var colorBackground = 0

var angleEllipse1 = 0

var angleEllipse2 = 0

var angleEllipse3 = 0

function draw() {

background(colorBackground)

//background color changes from black to white and resets every day

if(colorBackground <= 255){

colorBackground += 255/24/3600

}

if(colorBackground >= 255){

colorBackground = 0

}

//background strings

stroke(200,200,220);

strokeWeight(.4)

for (var x = 0; x <= 50; x += .3) {

line(480, 50, 480/50 * x - 3, 0); //right upwards lines

}

for (var x = 20; x <= 80; x += .3) {

line(480, 50, 480/40 * x, 480); //right downwards lines

}

for (var x = 0; x <= 30; x += .3) {

line(0, 430, 480/60 * x, 0); //left upwards lines

}

for (var x = 0; x <= 30; x += .3) {

line(0, 430, 480/30 * x, 480); //left downwards lines

}

//draw bottom hexagon and rotates clockwise

push()

translate(240,320)

rotate(angles2)

noStroke()

fill(255)

hexagon(s[2])

angles2 +=10

pop()

//draw bottom second hand, which rotates clockwise (it shares `angles` with the upper one)

push()

translate(240,320)

fill(102,91,169)

noStroke()

rotate(angles)

hexagon(s[1])

strokeWeight(7)

stroke(200,200,220)

line(0,0,0,-50)

pop()

//draw upper hexagon and rotates anticlockwise

push()

translate(240,150)

noStroke()

fill(0)

rotate(angles1)

hexagon(s[0])

angles1 -= 10

pop()

//draw second hand and rotates clockwise

push()

translate(240,150)

fill(102,91,169)

noStroke()

rotate(angles)

hexagon(s[1])

strokeWeight(7)

stroke(200,200,220)

line(0,0,0,-50)

angles += 6

pop()

//draw circles that rotate once every minute, hour, and day

push()

//rotate once every minute

translate(240,240)

fill(100,200,220)

rotate(angleEllipse1)

ellipse(0,-180,10,10)

ellipse(0,180,10,10)

ellipse(180,0,10,10)

ellipse(-180,0,10,10)

angleEllipse1 += 6

pop()

push()

//rotate once every hour

translate(240,240)

fill(50,100,110)

rotate(angleEllipse2)

ellipse(0,-200,15,15)

ellipse(0,200,15,15)

ellipse(200,0,15,15)

ellipse(-200,0,15,15)

angleEllipse2 += 0.1

pop()

push()

//rotate once every day

translate(240,240)

fill(10,50,55)

rotate(angleEllipse3)

ellipse(0,-220,20,20)

ellipse(0,220,20,20)

ellipse(220,0,20,20)

ellipse(-220,0,20,20)

angleEllipse3 += 0.1/24

pop()

print(colorBackground)

}

//set up hexagon

function hexagon(s){

beginShape()

vertex(s,0)

vertex(s/2,s*sqrt(3)/2)

vertex(-s/2,s*sqrt(3)/2)

vertex(-s,0)

vertex(-s/2,-s*sqrt(3)/2)

vertex(s/2,-s*sqrt(3)/2)

endShape(CLOSE)

}
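The six hand-written vertices in `hexagon(s)` are the points of a circle of radius `s` sampled every 60 degrees. As an illustration only, the same corners could be generated in a loop; `hexagonVertices` is a hypothetical helper, and it uses `Math.cos`/`Math.sin` in radians so that it does not depend on the sketch's `angleMode(DEGREES)` setting.

```javascript
// Hypothetical helper, not part of the original sketch: returns the six
// corners of a regular hexagon with circumradius s, centered on the origin,
// as [x, y] pairs in the same order as the hand-written vertex() calls.
function hexagonVertices(s) {
  const vertices = [];
  for (let i = 0; i < 6; i++) {
    const theta = (Math.PI / 3) * i; // 60 degrees per corner, in radians
    vertices.push([s * Math.cos(theta), s * Math.sin(theta)]);
  }
  return vertices;
}
```

Inside a p5.js sketch, each pair would be passed to `vertex(x, y)` between `beginShape()` and `endShape(CLOSE)`.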
