Problem:

For people who work at desks, it can be hard to tell when they are tired or need a break. They may be inclined to push themselves too hard and damage their health for the sake of productivity. In a state of extreme exhaustion, it is very easy to make simple mistakes that could otherwise have been avoided, or to become less productive in the long run. With a cloudy mind, it can also be difficult to make clear, thought-out decisions. In essence, knowing when to rest and when to continue can be impossible in certain work situations.

A General Solution:

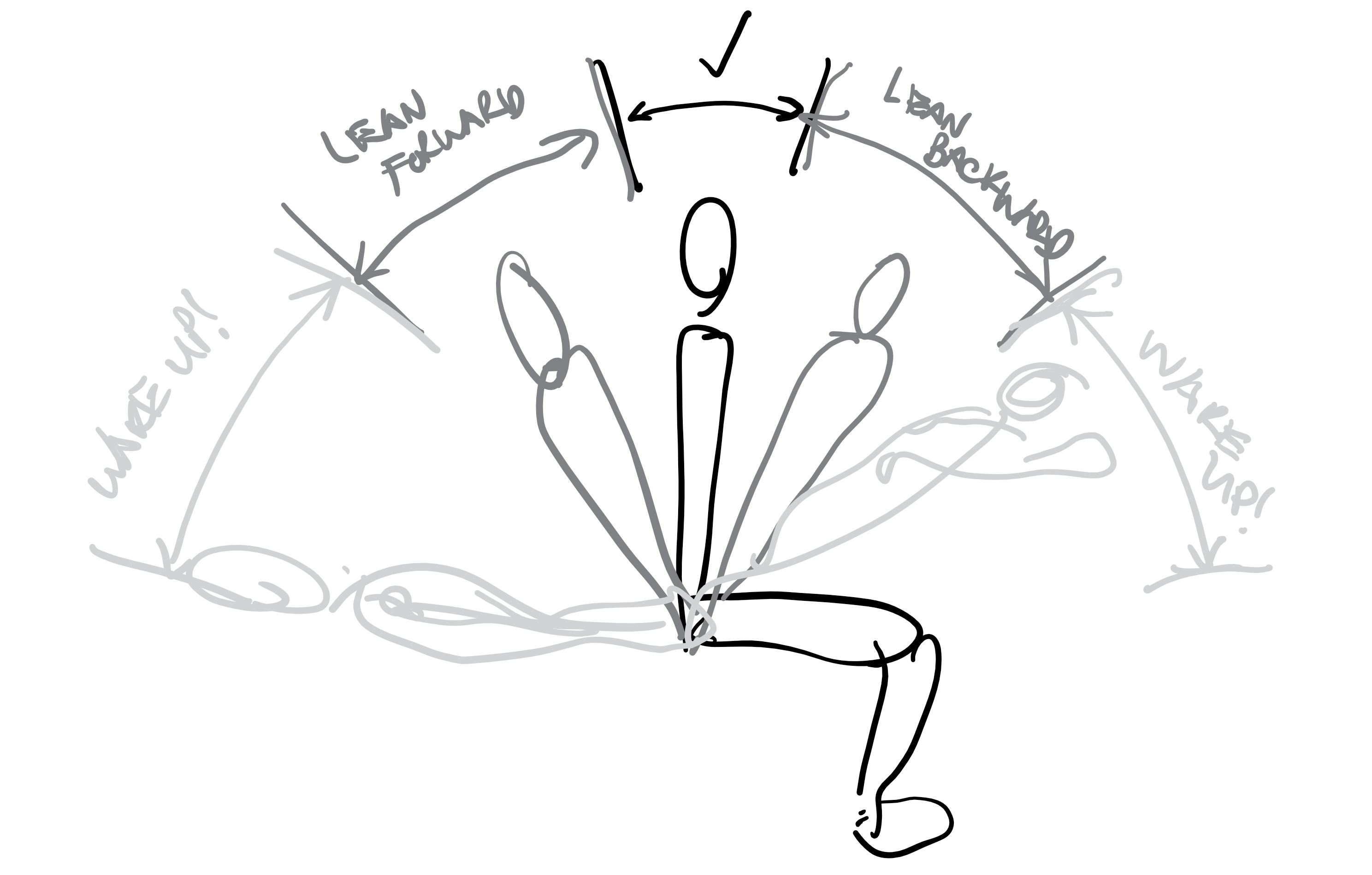

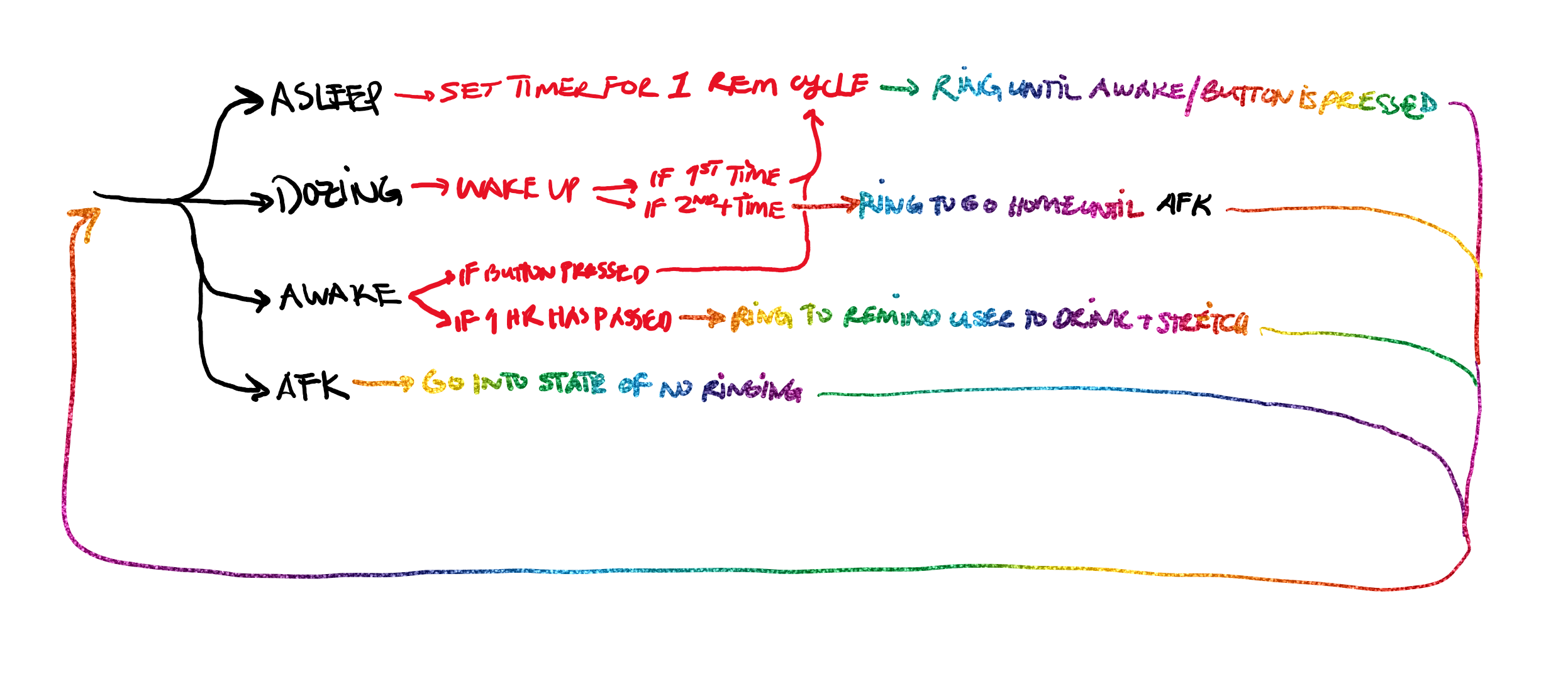

The solution is a device that can detect whether someone is awake, dozing off, asleep at their desk, or away from the desk. If the person is awake, they get a periodic reminder to get up, stretch, and hydrate. If the person refuses to take a break, the alarm escalates in intensity, but it eventually stops either at the push of a button or after 3 ignored reminders. If the person is dozing off, the alarm tries to wake them up and encourages them to take a nap. If the person is asleep, the device sets an alarm to wake them after a full REM cycle. The person can also press a button to signal that they are about to take a nap, which sets the same timer to wake them after a full REM cycle.
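As a rough illustration of the reminder rules described above, the escalation can be written as a small state-transition function. This is only a sketch in JavaScript, not the code running on the device: the function name, the event names, and the use of the reminder count as the intensity level are assumptions made for the example. Only the limit of 3 ignored reminders comes from the description.

```javascript
// Limit taken from the description: the alarm gives up after 3 ignored reminders.
const MAX_REMINDERS = 3;

// Hypothetical state shape: { sounded, intensity, active }
//   sounded   - reminders played so far in this cycle
//   intensity - 0 when silent, otherwise 1..MAX_REMINDERS (assumed scale)
//   active    - whether the alarm is currently sounding
// Hypothetical events: "reminderDue", "buttonPressed", "breakTaken"
function nextReminderState(state, event) {
  const silent = { sounded: 0, intensity: 0, active: false };

  // A button press or an actual break stops the alarm and resets the cycle.
  if (event === "buttonPressed" || event === "breakTaken") {
    return silent;
  }

  if (event === "reminderDue") {
    // All reminders were ignored: stop nagging and start over.
    if (state.sounded >= MAX_REMINDERS) {
      return silent;
    }
    // Otherwise play the next reminder, one step louder than the last.
    const sounded = state.sounded + 1;
    return { sounded, intensity: sounded, active: true };
  }

  // Unknown events leave the state unchanged.
  return state;
}
```

Starting from a silent state, three consecutive "reminderDue" events raise the intensity from 1 to 3, and a fourth returns the device to silence.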

Proof of Concept:

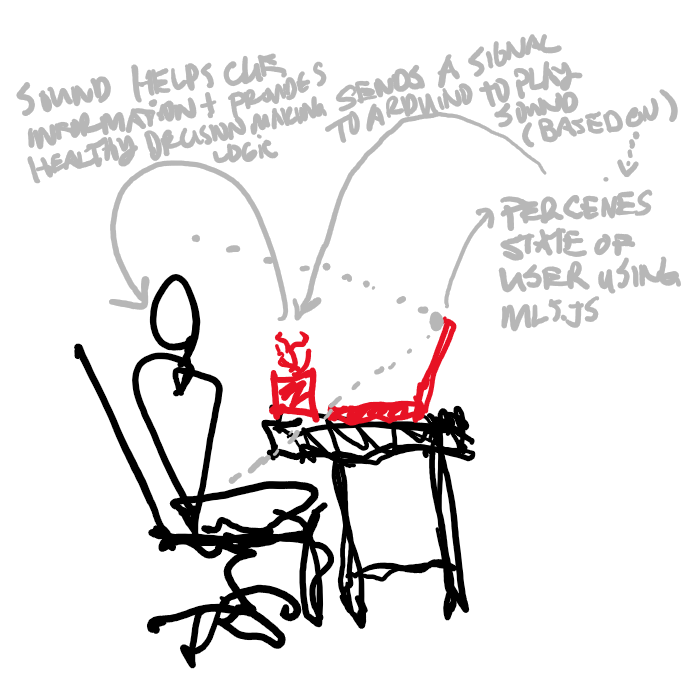

The proof of concept is an Arduino connected to a speaker as a communicative output, paired with p5.js and ml5.js code that ascertains the state of the user: awake, dozing, asleep, or away from keyboard (AFK). A machine-learning model looks through a laptop’s camera to detect these four states. The system should have two types of states: one in which the Arduino watches for whether the person is asleep or is planning to go to sleep, and another in which it acts as a timer, counting down to sound an alarm.
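The two kinds of states can be pictured as a controller that switches between a monitoring mode and a timer mode. The sketch below is written in JavaScript purely for illustration, since in the project this logic sits on the Arduino. The names `stepController`, `napButton`, and `NAP_MS` are hypothetical, and the 90-minute nap length is an assumed value, because the write-up does not give a duration.

```javascript
// Assumed nap length (90 minutes); the write-up does not specify a number.
const NAP_MS = 90 * 60 * 1000;

// ctrl:  { mode: "monitoring" | "timer", alarmAtMs: number | null }
// input: { state: "awake" | "dozing" | "asleep" | "afk", napButton: boolean }
// Returns the next controller state plus an `alarm` flag for this step.
function stepController(ctrl, input, nowMs) {
  if (ctrl.mode === "monitoring") {
    // Start the countdown when the user is asleep or announces a nap.
    if (input.state === "asleep" || input.napButton) {
      return { mode: "timer", alarmAtMs: nowMs + NAP_MS, alarm: false };
    }
    return { mode: "monitoring", alarmAtMs: null, alarm: false };
  }

  // Timer mode: ignore the camera and only watch the clock.
  if (nowMs >= ctrl.alarmAtMs) {
    return { mode: "monitoring", alarmAtMs: null, alarm: true };
  }
  return { mode: "timer", alarmAtMs: ctrl.alarmAtMs, alarm: false };
}
```

Keeping the two modes separate means the classifier output is ignored while the timer runs, so a misread frame during a nap cannot reset the alarm.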

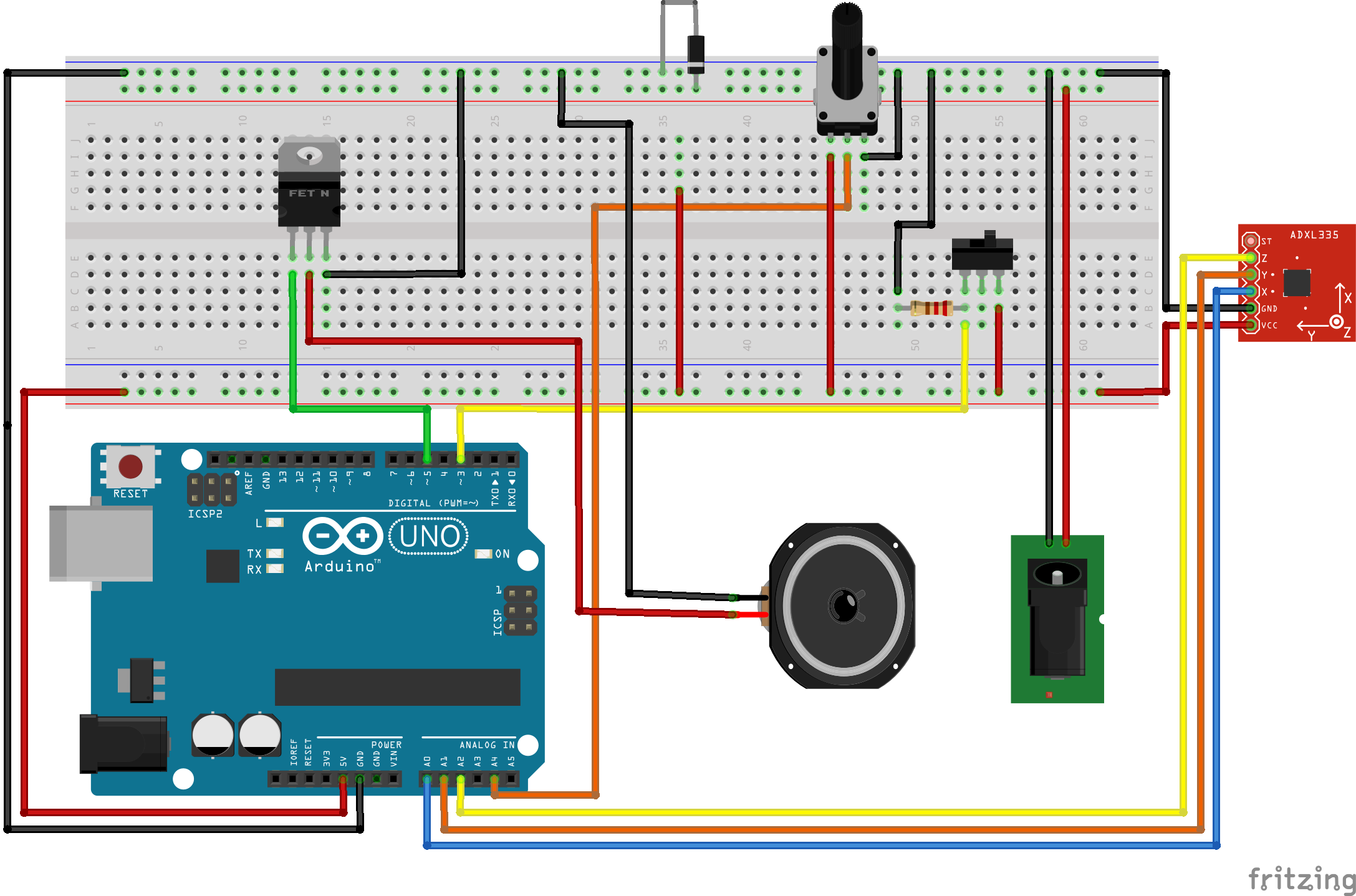

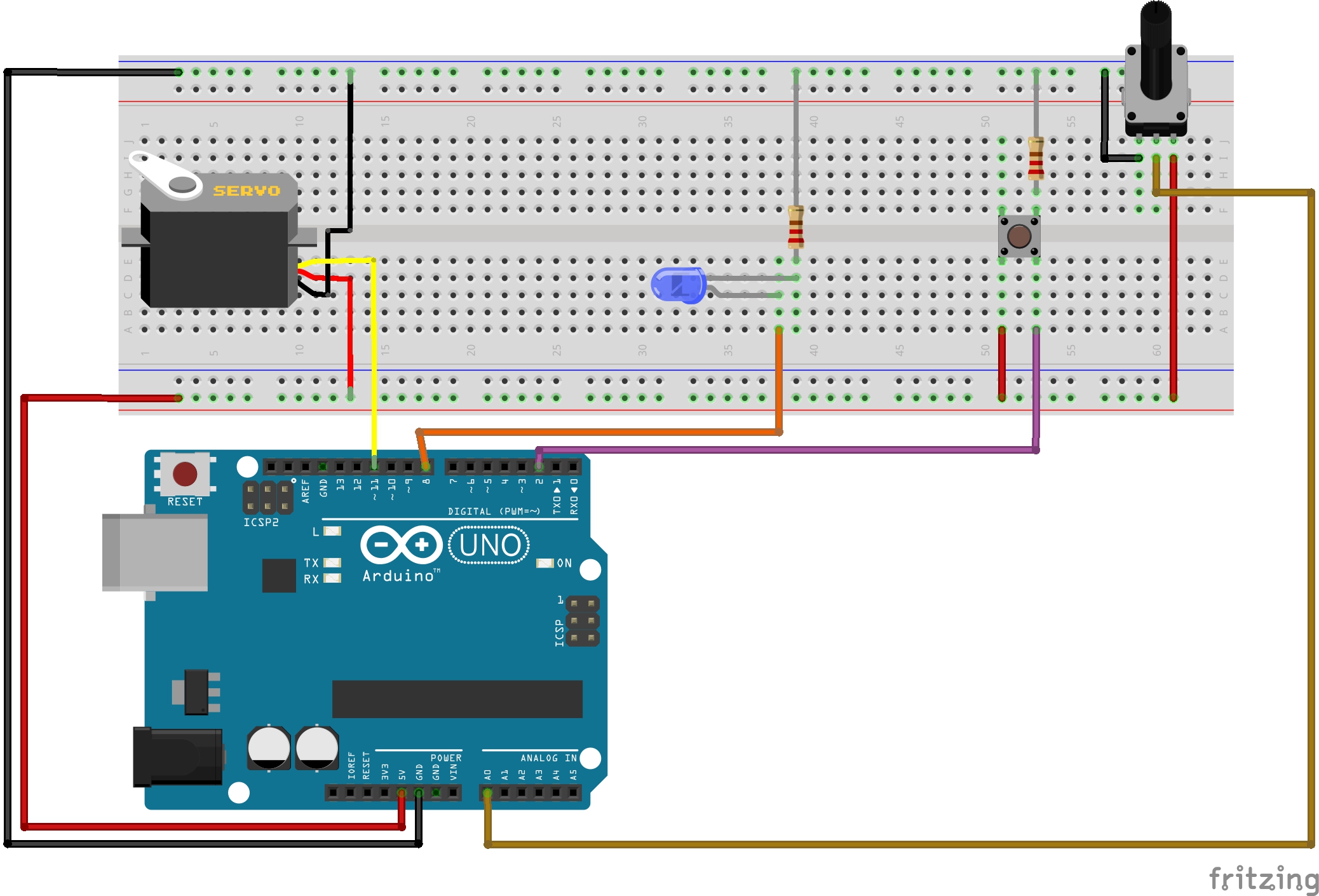

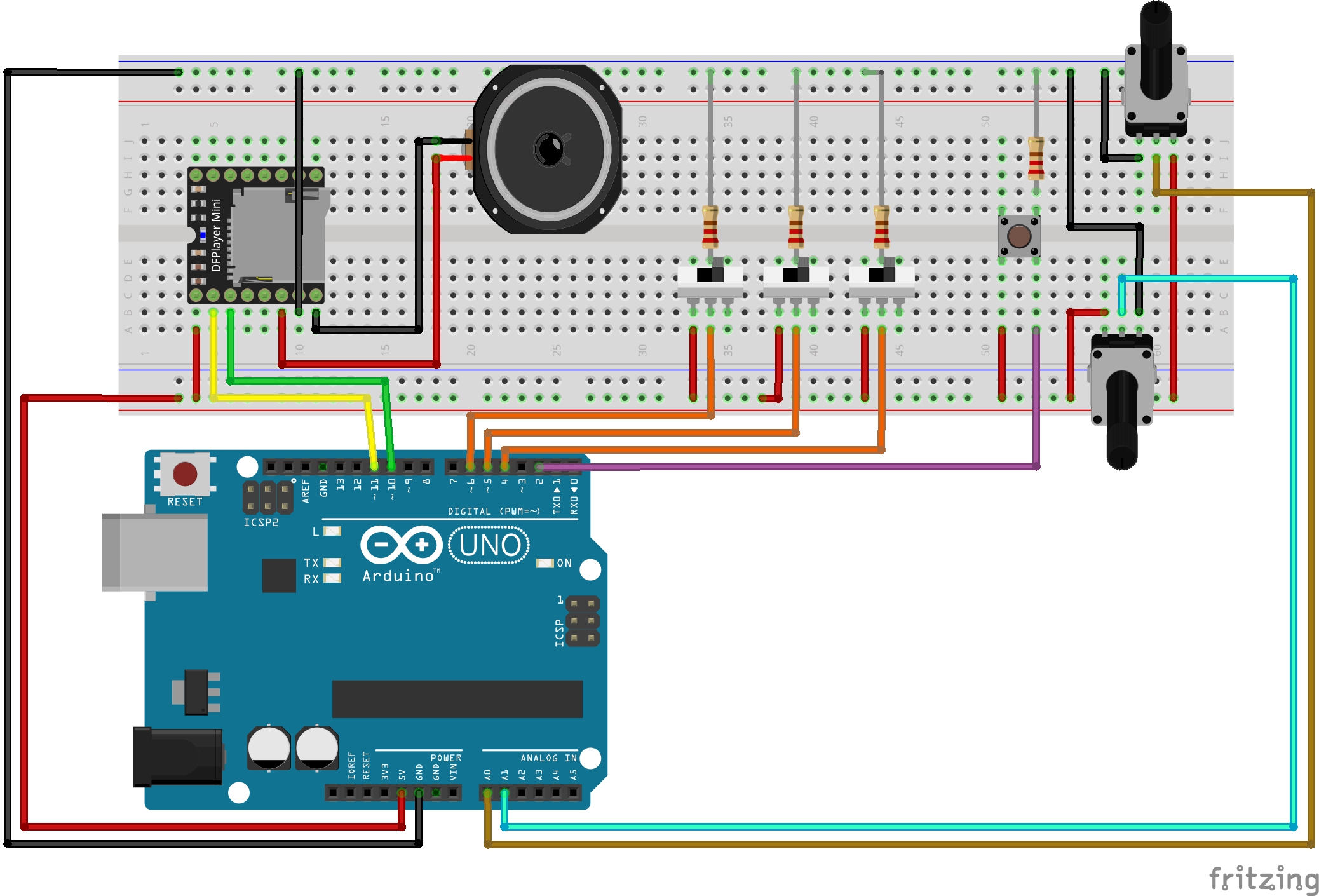

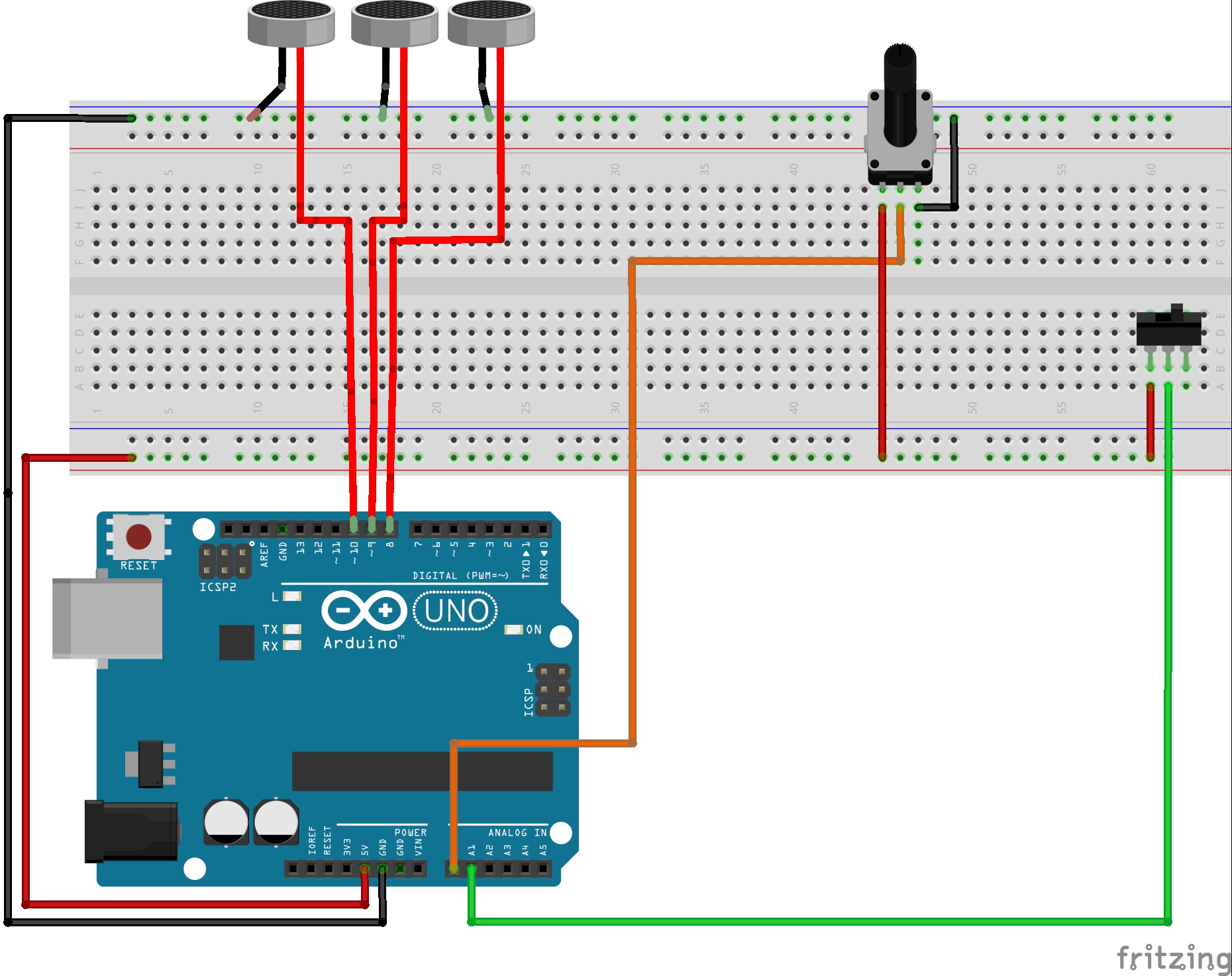

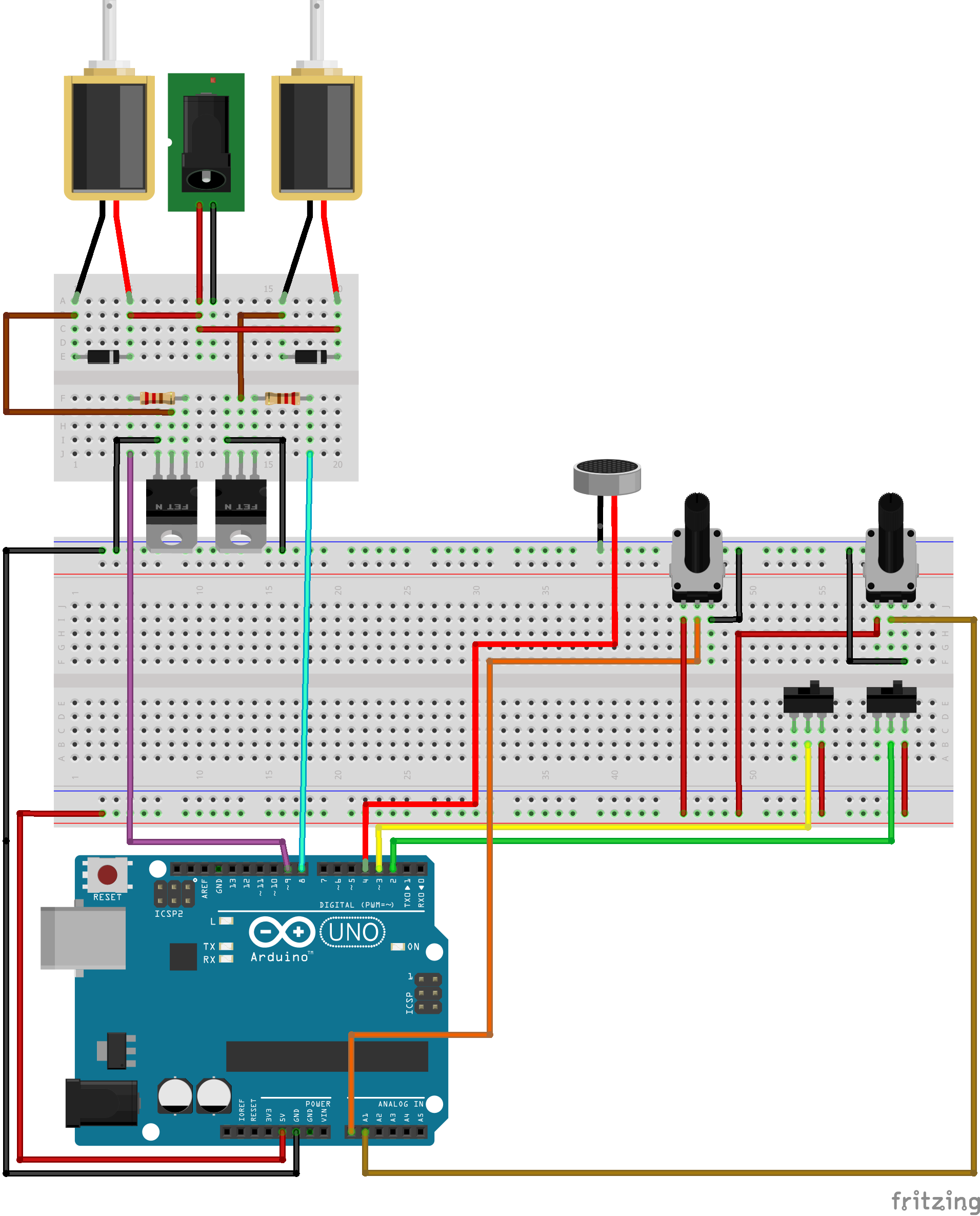

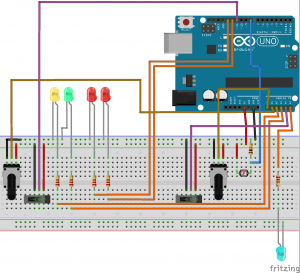

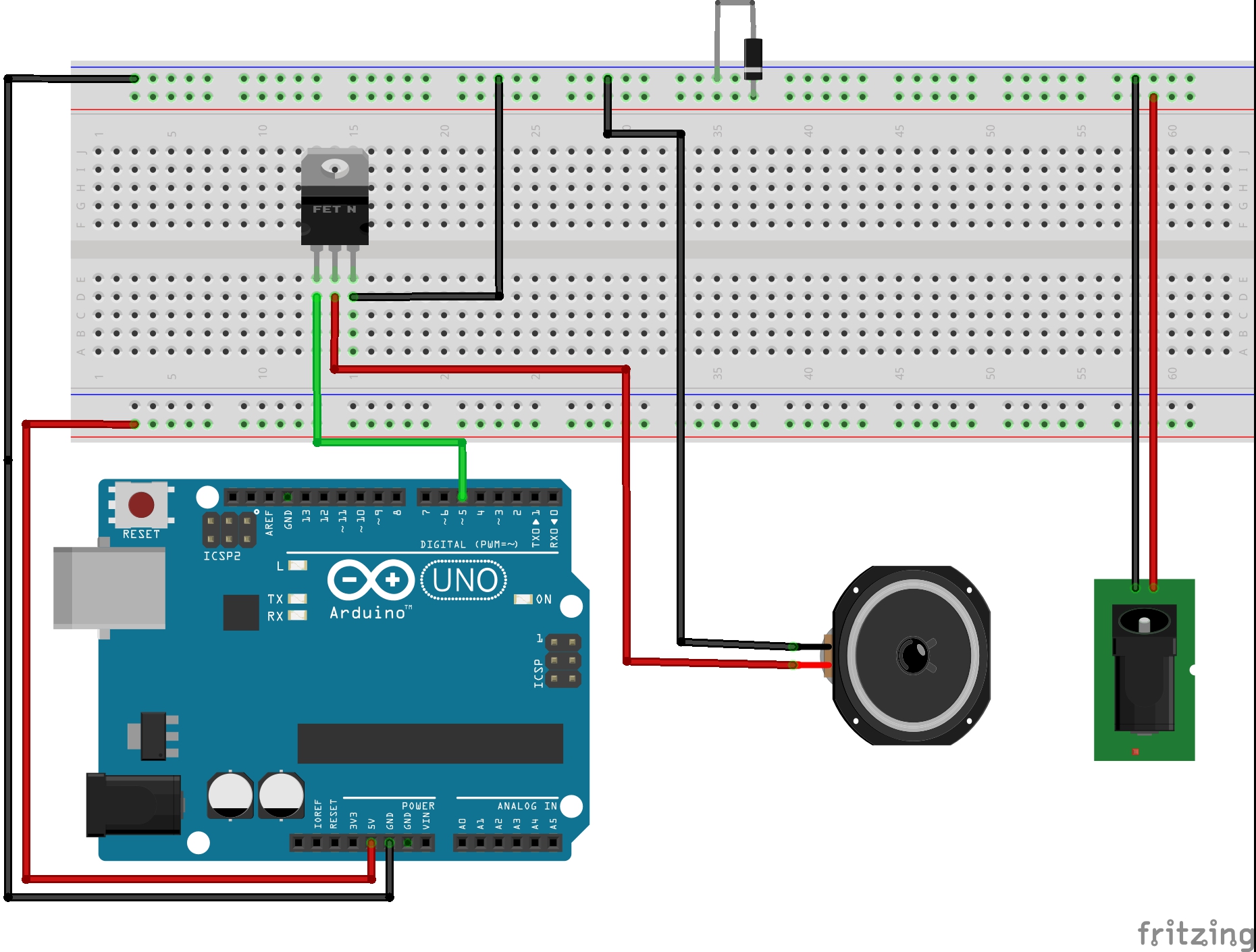

Fritzing Sketch:

The Fritzing sketch shows how the speaker is hooked up to the Arduino to receive outputs. Not pictured is the connection between the Arduino and the laptop, which houses the p5.js code and webcam. The power source pictured allows speakers of various sizes, with different power requirements, to be used.

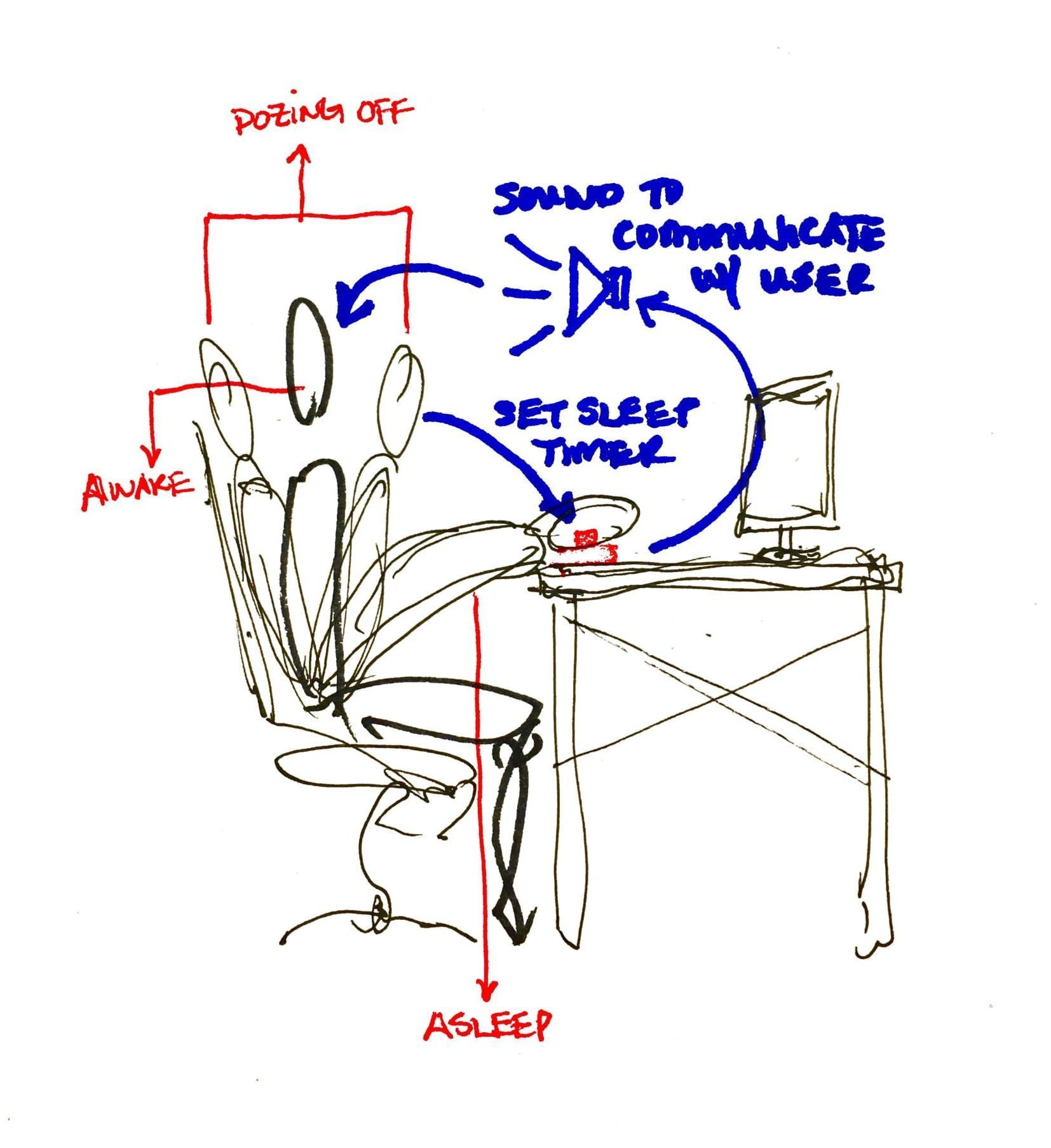

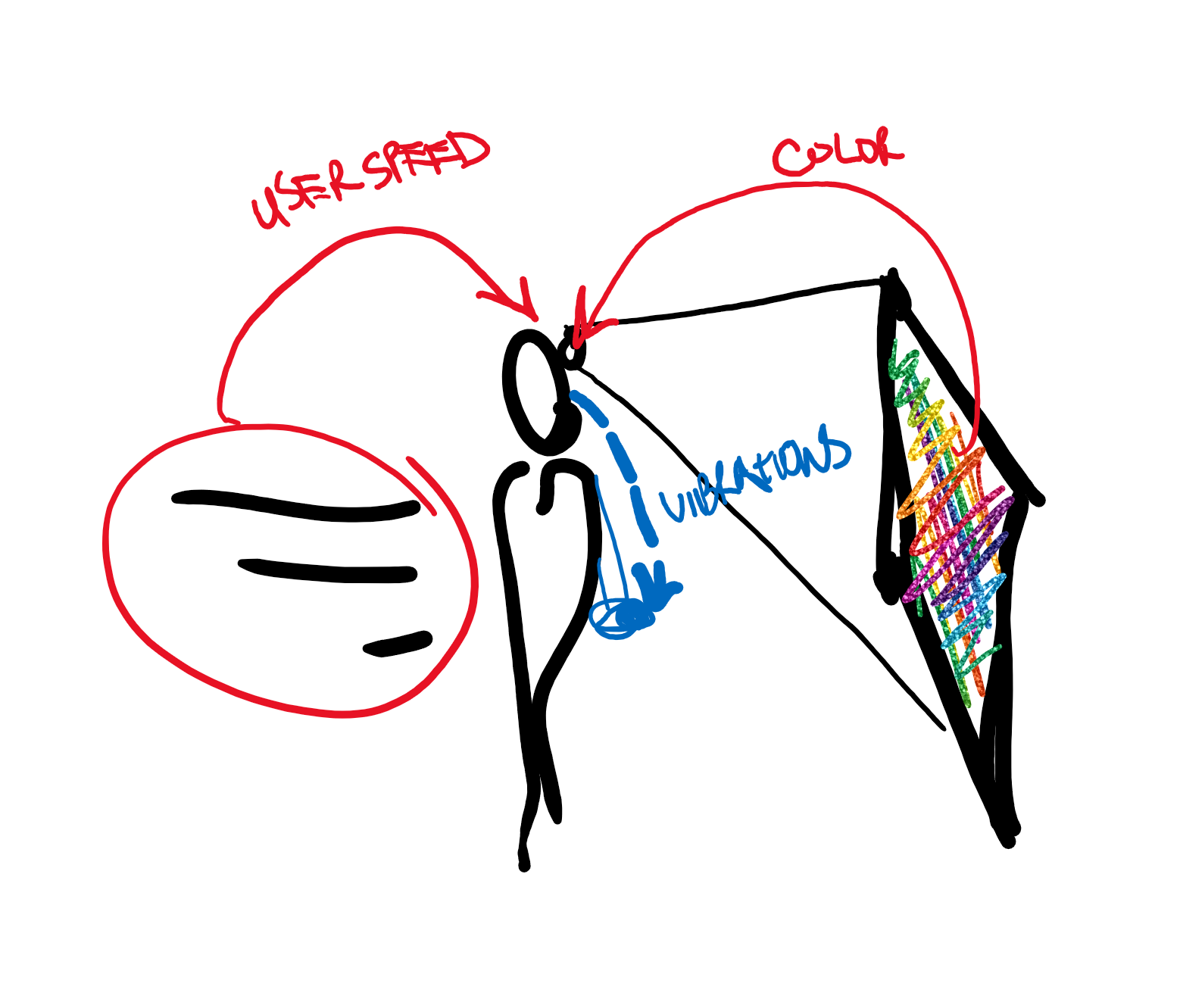

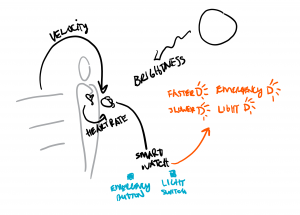

Proof of Concept Sketches:

The user’s pitch (or their perceived state) is sensed using a webcam that feeds live footage to a machine-learning model, which then tells the device whether the person is awake, dozing off, asleep, or AFK. The computer then sends a signal to the Arduino to play music that cues the user to act accordingly.
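One way to turn a classification result into a signal for the Arduino is to map the most confident label to a single character. The sketch below assumes the classifier returns an array of `{ label, confidence }` objects, which is the general shape of ml5.js image classifier results. The label names, the one-letter codes, and the 0.6 confidence threshold are hypothetical choices for this example, not values from the project.

```javascript
// Hypothetical one-character codes for the four states.
const STATE_CODES = new Map([
  ["awake", "A"],
  ["dozing", "D"],
  ["asleep", "S"],
  ["afk", "K"],
]);

// results: array of { label, confidence } (assumed classifier output shape).
// Returns a one-character code, or null when nothing should be sent.
function encodeState(results, minConfidence = 0.6) {
  if (!Array.isArray(results) || results.length === 0) return null;

  // Pick the most confident entry without relying on the array being sorted.
  const best = results.reduce((a, b) => (b.confidence > a.confidence ? b : a));

  // Skip uncertain frames rather than sending a guess.
  if (best.confidence < minConfidence) return null;

  // Unknown labels map to null so they are never sent.
  return STATE_CODES.has(best.label) ? STATE_CODES.get(best.label) : null;
}
```

Sending one character per state keeps each message short, which matters on a slow serial link, and returning `null` for low-confidence frames gives the sending code an easy way to skip them.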

Process/Challenges:

This system could be further improved to take into account one’s schedule, sleep data, and similar inputs in order to make other suggestions to the user. Additionally, it could be built into another tabletop object, for example a plant, a sculptural element, or a lamp, so that it functions as more than an alarm clock.

Throughout this project, I ran into a couple of initially unforeseen issues. In trying to connect Google Calendar data as another aspect of the project, I quickly hit a wall in terms of my p5.js coding experience and skill level. I also initially intended to use recordings to humanize “Bud” and make it more relatable and friendly, but both p5.js and the DFPlayer Mini were extremely challenging to implement. Both only worked over serial at a 9600 baud rate on my Arduino, and the DFPlayer Mini was not supported by the SparkFun RedBoard Turbo because of an incompatible library. I scaled back and used the Volume library to generate simple tunes and sound effects to represent the messages instead.

Lastly, one of the more difficult problems was the connection between the Arduino and p5.js. The machine-learning model checked the webcam for the state of the user very often (at the standard frame rate), and every result was sent to the Arduino. The Arduino became backed up with data and lagged far behind what was happening in real time. I tried a couple of interventions: making p5.js skip sending the same status twice in a row, and reducing the frame rate as much as possible. Even so, if the machine-learning model isn’t trained very carefully, the system is still susceptible to failure. Ideally, the system would constantly discard old data and use only the most recent data, but I wasn’t able to figure out how to ensure that.
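One possible approach to the backlog, on the p5.js side, is to combine the duplicate check with a minimum gap between messages and to never queue anything that is skipped. The sketch below is an assumption about how that could look, not the project’s code: `createStateSender`, `offer`, and `minIntervalMs` are hypothetical names, and `send` stands in for whatever function actually writes to the serial port.

```javascript
// Wraps a `send` function so the Arduino receives at most one message per
// `minIntervalMs`, and never the same state twice in a row.
function createStateSender(send, minIntervalMs) {
  let lastSent = null;       // last state actually written to the port
  let lastSentAt = -Infinity; // timestamp of that write

  // Call once per classified frame. Returns true if the state was sent.
  return function offer(state, nowMs) {
    // Same status as last time: nothing new for the Arduino to act on.
    if (state === lastSent) return false;

    // Too soon after the previous message: drop it instead of queueing it.
    if (nowMs - lastSentAt < minIntervalMs) return false;

    send(state);
    lastSent = state;
    lastSentAt = nowMs;
    return true;
  };
}

// Usage example with an in-memory stand-in for the serial port.
const sentLog = [];
const offerState = createStateSender((s) => sentLog.push(s), 1000);
offerState("A", 0);    // sent
offerState("A", 100);  // skipped: duplicate
offerState("D", 200);  // skipped: too soon, and not queued
offerState("S", 1200); // sent: the newest state wins
```

Because skipped states are dropped rather than stored, the next message that goes out always reflects the newest frame. This only limits what the laptop sends. Anything already waiting in the Arduino’s serial buffer would still need to be read or cleared on the Arduino side.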

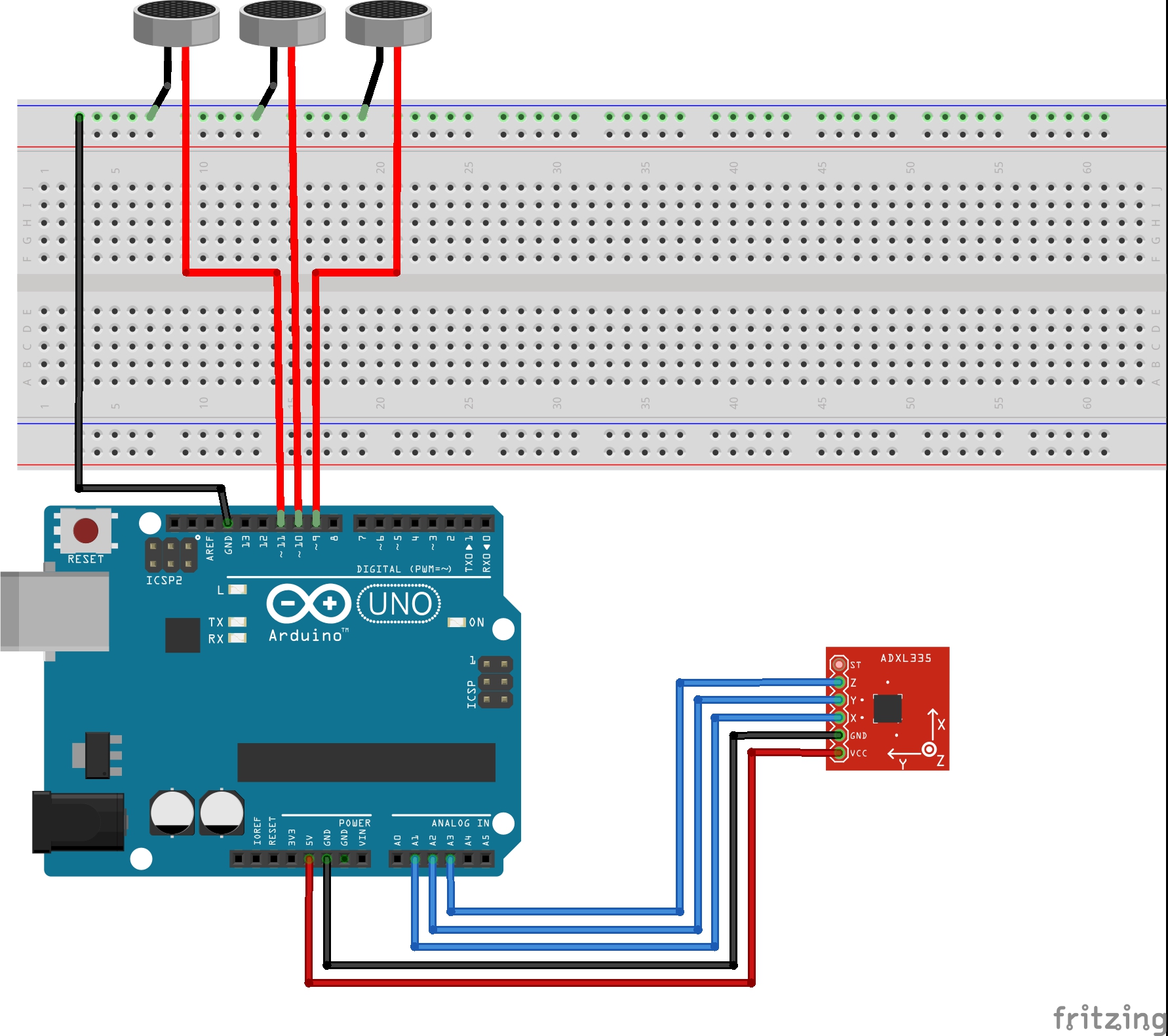

The logic of the system, however, lives on the Arduino, so it might be possible, and more practical, to use an accelerometer (or other sensors) to send a more direct and controlled flow of data to the Arduino. A later iteration might also use data from a smartwatch or phone.

Proof of Concept Video:

Files: