Realized this was stuck in my drafts since the assignment failed and was iterated into my second crit, the toaster. Putting this here for posterity, but most of it is available in my Crit #2 – Grumpy Toaster.

For this post:

Making Things Interactive, Fall 2019

I started with a few problems related to balance, especially for visually impaired people. First, moving a pot of hot soup is really dangerous because they cannot check whether it is level. Another situation is building furniture, especially shelves, where keeping things horizontally level is important for safety.

Balance also matters for sighted people in many situations, for example when taking a photo.

How might we use tactile feedback to let people feel tilt or imbalance? I thought that vibration of varying intensity would be a great way to do it.

I decided to use the iPhone for two reasons. First, it can generate a wide variety of tactile feedback using different patterns and intensities, and I could take advantage of its embedded sensors. Second, I thought that making a vibrating application would provide higher accessibility to many people.

I divided the tilt angle into four ranges to provide different intensities of tactile feedback. When a user tilts the phone 5–20 degrees, it vibrates lightly. From 21–45 degrees, it generates a medium vibration. From 46–80 degrees, it generates an intense vibration. Lastly, from 81–90 degrees, it vibrates most intensely (just like when it receives a call). I also mapped the angle to RGB values so the colors change accordingly.
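The angle buckets above can be sketched as a small lookup. This is a minimal, hypothetical Python sketch, not the app's actual code. It assumes the tilt arrives in degrees from the phone's motion sensors and treats anything under 5 degrees as level. The RGB mapping is a made-up green-to-red gradient, because the exact colors aren't specified.

```python
from typing import Tuple


def vibration_level(tilt_deg: float) -> str:
    """Map a tilt angle in degrees to one of the four vibration intensities."""
    angle = min(abs(tilt_deg), 90.0)  # tilting either way counts the same
    if angle < 5:
        return "none"       # close enough to level: no feedback
    if angle <= 20:
        return "light"
    if angle <= 45:
        return "medium"
    if angle <= 80:
        return "intense"
    return "strongest"      # 81-90 degrees, like an incoming call


def tilt_color(tilt_deg: float) -> Tuple[int, int, int]:
    """Hypothetical RGB mapping: green when level, shading to red at 90 degrees."""
    t = min(abs(tilt_deg), 90.0) / 90.0
    return (round(255 * t), round(255 * (1 - t)), 0)


for angle in (3, 12, 30, 60, 88):
    print(angle, vibration_level(angle), tilt_color(angle))
```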

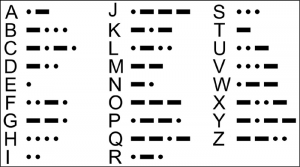

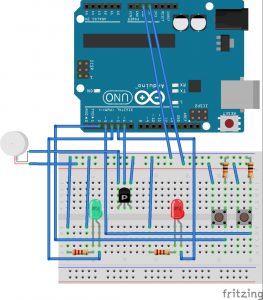

Morse code is commonly received through either visual or audible feedback; however, this can be challenging for those who are blind, deaf, or both. Additionally, I had next to no experience using hardware interrupts on Arduino, so I wanted to find a good application of interrupts for this assignment.

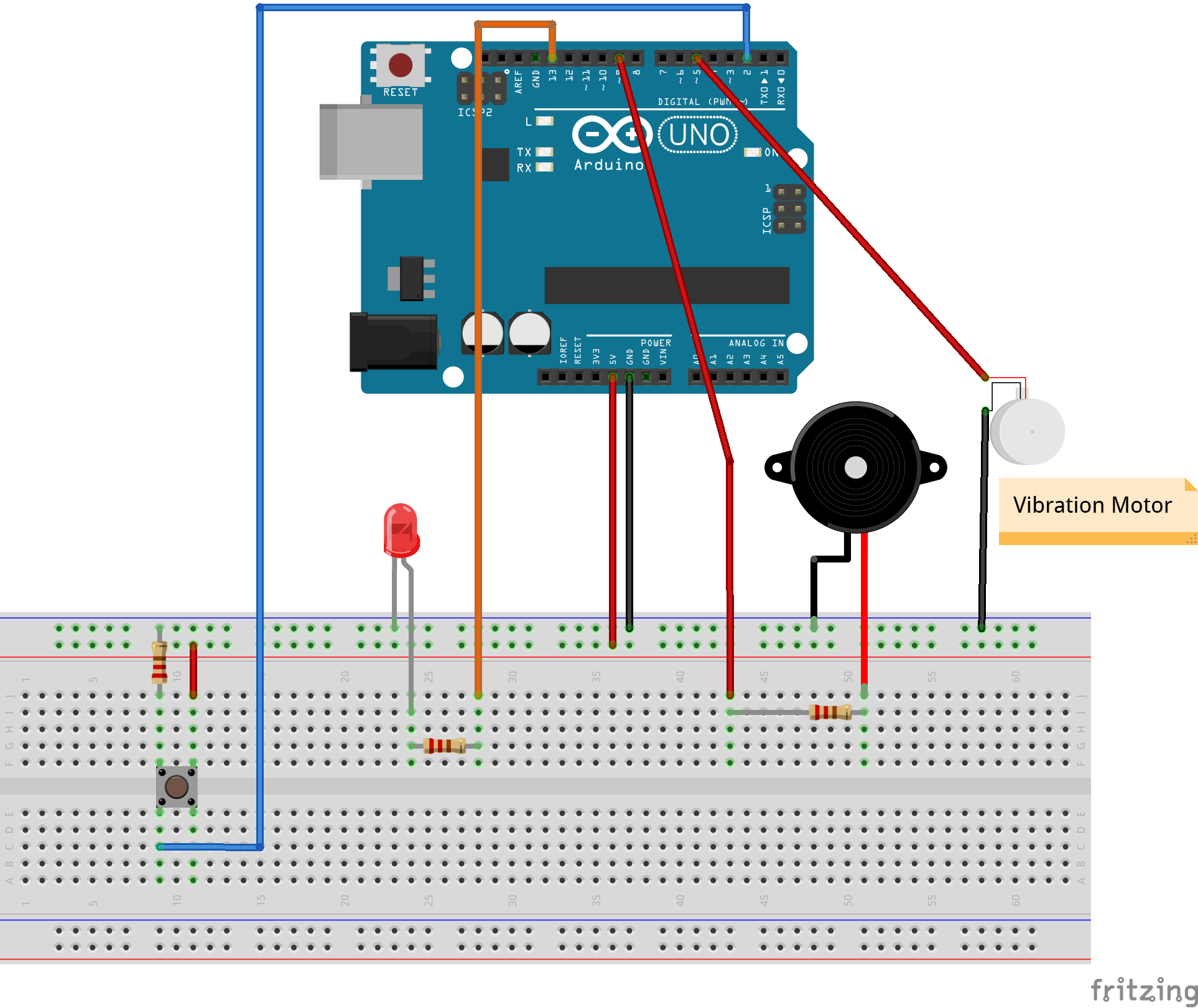

I wanted to create a system that allows Morse code senders to quickly adapt their messages into signals that people without sight or hearing can understand. To do this, I created two physical button inputs. The first button directly controls an LED (which could easily be a buzzer) used to send the Morse code signal. The second button toggles a vibrating motor that buzzes in conjunction with the LED. In this way, one can change the message being sent from purely visual to both visual and tactile at any time.
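The button logic can be modeled independently of the hardware. This is a hypothetical Python model, not the posted Arduino code. On the Arduino, `on_toggle_button` would be the interrupt handler attached to the second button. The 50 ms debounce window is an assumed value.

```python
from typing import Optional, Tuple


class MorseSender:
    """Model of the two-button sender: button 1 keys the LED, button 2 toggles the motor."""

    def __init__(self, debounce_ms: int = 50) -> None:
        self.motor_enabled = False
        self.debounce_ms = debounce_ms            # assumed debounce window
        self._last_toggle_ms: Optional[int] = None

    def on_toggle_button(self, now_ms: int) -> None:
        """Runs on each press of button 2 (an interrupt handler on the Arduino)."""
        if (self._last_toggle_ms is not None
                and now_ms - self._last_toggle_ms < self.debounce_ms):
            return  # ignore contact bounce from the same physical press
        self._last_toggle_ms = now_ms
        self.motor_enabled = not self.motor_enabled

    def outputs(self, key_pressed: bool) -> Tuple[bool, bool]:
        """Return (led_on, motor_on) depending on whether button 1 is held."""
        return key_pressed, key_pressed and self.motor_enabled
```

The motor only follows the LED while `motor_enabled` is set. This lets the sender switch between visual-only and visual-plus-tactile mid-message without interrupting the keying.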

Arduino Code and Fritzing Sketch

This project is inspired by the Ubicoustics project here at CMU in the Future Interfaces Group, and by an assignment for my Machine Learning + Sensing class where we taught a model to differentiate between various appliances using recordings made with our phones. This course is taught by Mayank Goel of Smash Lab, and is a great complement to Making Things Interactive.

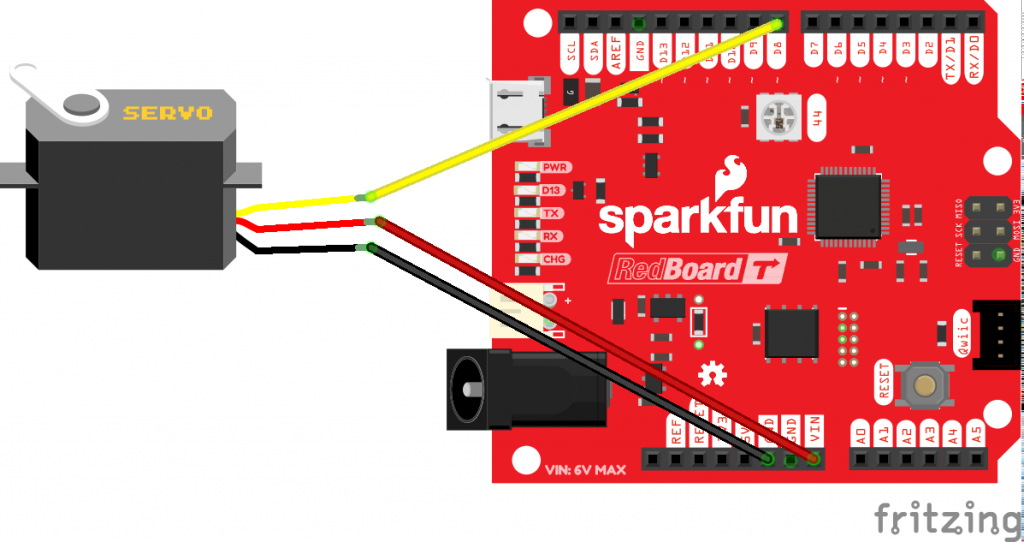

With these capabilities in mind, I created a prototype system that provides physical feedback (a tap on your wrist) when it hears specific types of sounds. In this case, it responds when the level in one audio frequency band crosses a certain threshold. This could be developed into a more sophisticated system with more tap options and a machine learning classifier to recognize specific signals. Here’s a quick peek.

On the technical side, things are pretty straightforward, but all of the key elements are there. The servo connection is standard, and the code currently just toggles the servo whenever it receives any signal from the computer doing the listening. The messages are kept short and simple to minimize potential lag.

On the Python side, audio is captured with pyaudio and transformed into a frequency spectrum with scipy signal processing. The spectrum is then scaled down to 32 frequency bins using OpenCV (a trick I learned in ML+S class). Bins 8 and 9 are watched for crossing a threshold, which amounts to toggling the motor whenever there’s a spike somewhere around 5 kHz.
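The band-watching step can be sketched without the audio libraries. This hypothetical Python version makes three assumptions:

- Plain averaging stands in for the OpenCV resize.
- The input is one frame's magnitude spectrum from the scipy step.
- A rising-edge check sends one toggle per spike rather than one per frame. The original code is not described as doing this.

```python
from typing import List, Sequence


def downsample(spectrum: Sequence[float], n_bins: int = 32) -> List[float]:
    """Average a magnitude spectrum into n_bins equal-width frequency bins.
    (Plain averaging stands in for the OpenCV resize used in the post.)"""
    size = len(spectrum)
    bins = []
    for i in range(n_bins):
        chunk = spectrum[i * size // n_bins:(i + 1) * size // n_bins]
        bins.append(sum(chunk) / len(chunk) if chunk else 0.0)
    return bins


def band_triggered(spectrum: Sequence[float], threshold: float,
                   watch=(8, 9), n_bins: int = 32) -> bool:
    """True if any watched bin crosses the threshold."""
    bins = downsample(spectrum, n_bins)
    return any(bins[i] > threshold for i in watch)


class SpikeToggler:
    """Assumed rising-edge logic: report True once per spike, not every frame."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self._was_above = False

    def update(self, spectrum: Sequence[float]) -> bool:
        above = band_triggered(spectrum, self.threshold)
        fire = above and not self._was_above
        self._was_above = above
        return fire
```

Which real frequencies bins 8 and 9 cover depends on the sample rate and FFT size. The threshold and bin indices would need tuning against recordings of the target sound.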

With a bit more time and tinkering, a classifier could be trained in scikit-learn with high accuracy to trigger the tap only for certain sounds, say a microwave beeping that it’s done, or a fire alarm.

The system could also be part of a larger sensor network, aware of both real-world and virtual events, that triggers unique taps for the events the user cares about.

Problem:

For those who have impaired vision or are blind, perceiving the quality and form of the spaces they inhabit can be quite difficult (inspired by Daniel Kish’s TED Talk that Ghalya posted in Looking Outward). This could have applications at various scales. It could help the visually impaired with wayfinding, and it could let them experience the different spaces they occupy.

A General Solution:

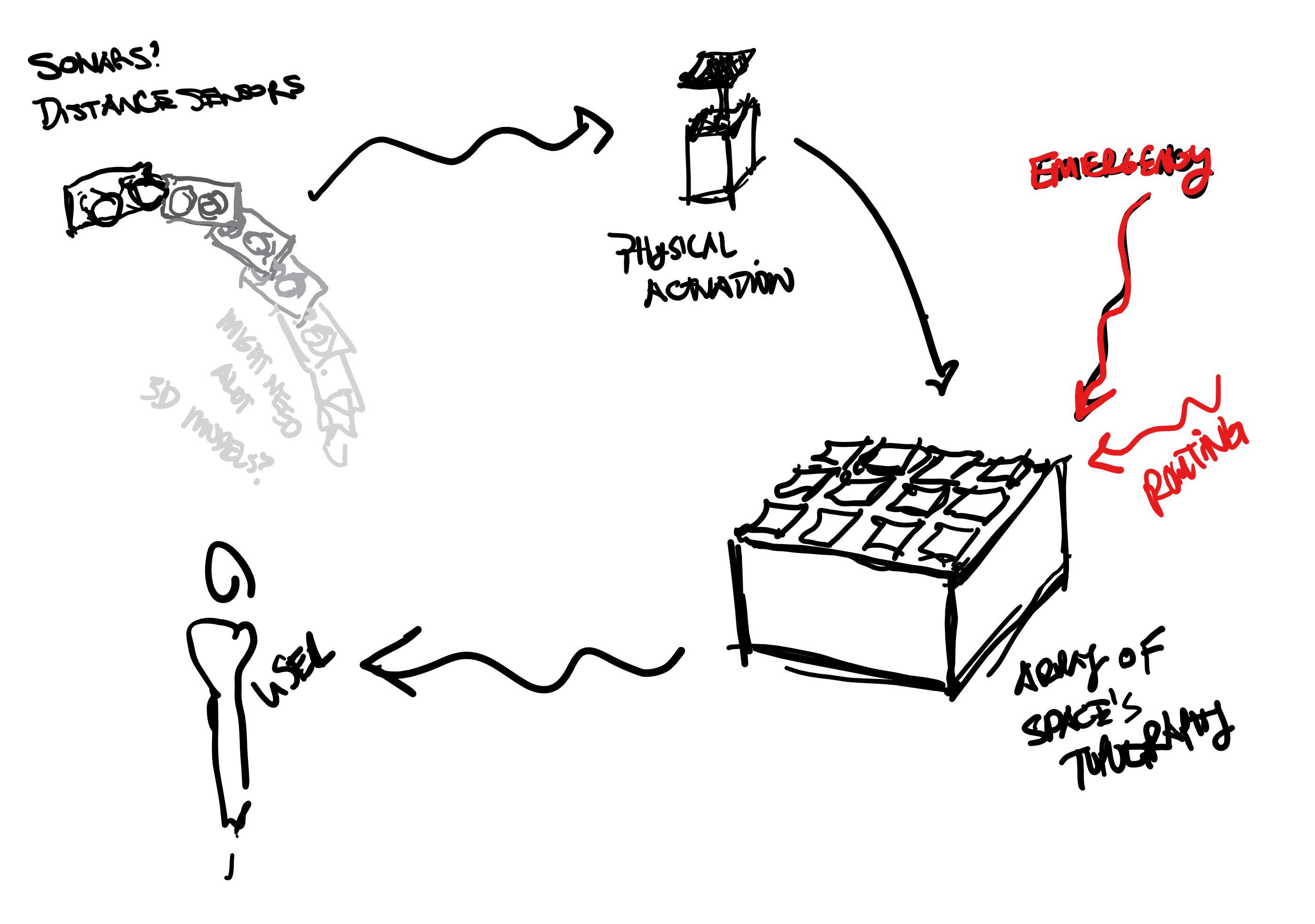

A device would scan a space using sonar, LIDAR, photography, 3D models, etc., and map the processed data onto an interactive surface that is actuated to represent that space. At a larger scale, the user would be able to understand the space they are in. At a smaller scale, they could identify potential tripping hazards as they move through an environment. The device would ideally be able to change scales to address different scenarios. Emergency scenarios would also be programmed into the model so that, in the case of fire or other danger, the user would be able to find their way out of the space.
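One way to think about "changing scales" is to downsample a depth scan into however many pins the surface has. This hypothetical Python sketch assumes the scan arrives as a grid of distances in meters and that each block of cells drives one pin. It uses the nearest reading in each block, so a small tripping hazard isn't averaged away. The 5 m range and four height levels are made-up parameters.

```python
from typing import List


def pin_heights(depth: List[List[float]], block: int,
                levels: int = 4, max_range: float = 5.0) -> List[List[int]]:
    """Downsample a distance grid (hypothetical input format) into block x block
    cells and quantize each cell to a pin height:
    0 = flat (far away), levels - 1 = fully raised (very close)."""
    rows, cols = len(depth), len(depth[0])
    surface = []
    for r in range(0, rows, block):
        row = []
        for c in range(0, cols, block):
            # Nearest surface in this cell, so small obstacles still register.
            nearest = min(depth[i][j]
                          for i in range(r, min(r + block, rows))
                          for j in range(c, min(c + block, cols)))
            closeness = 1.0 - min(nearest, max_range) / max_range
            row.append(round(closeness * (levels - 1)))
        surface.append(row)
    return surface
```

A small `block` gives a fine-grained local map, while a large `block` gives a coarse overview of the whole room.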

Proof of Concept:

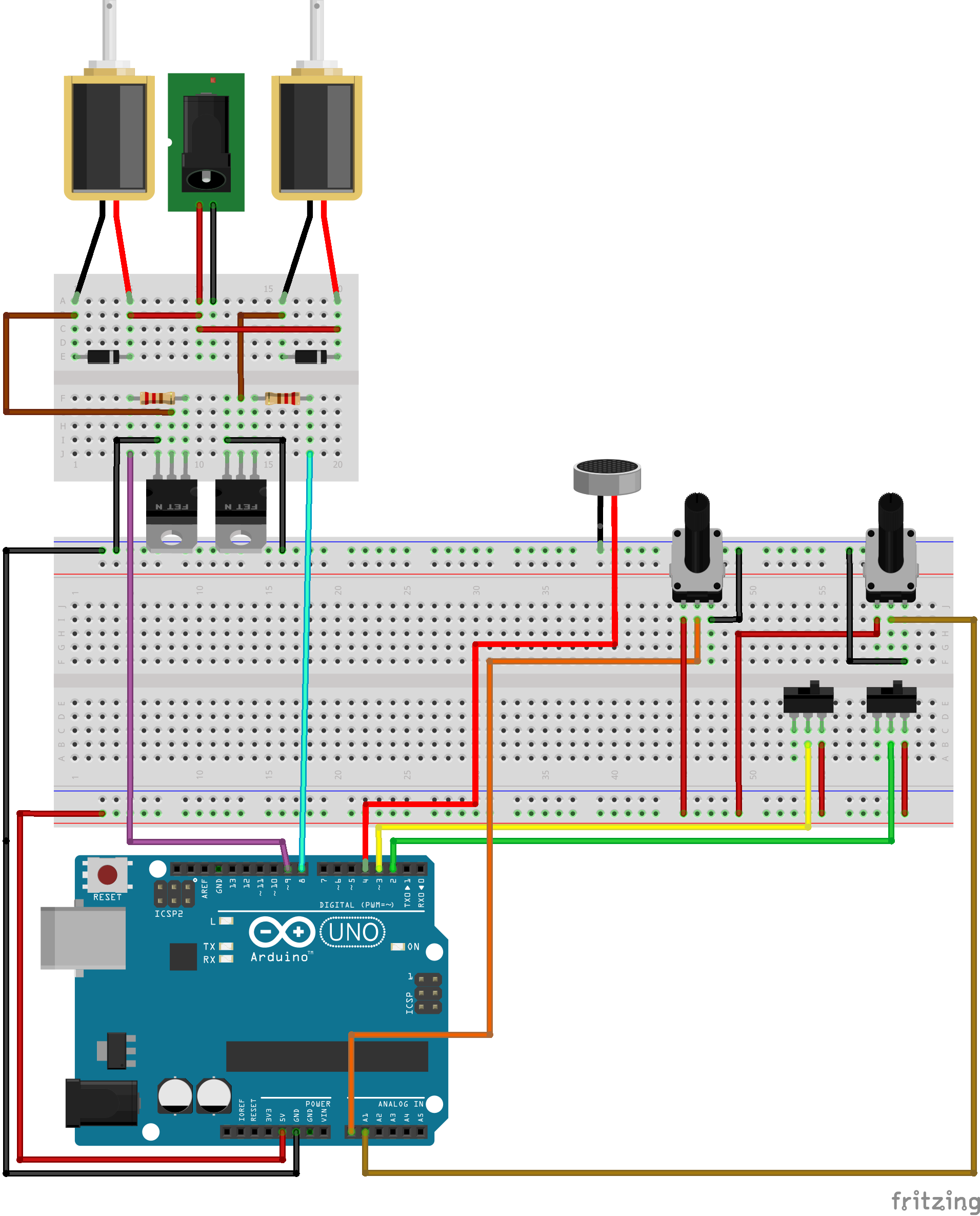

An Arduino uses potentiometers (ideally sonars or other spatial sensors) as input data to control some solenoids, which represent a more extensive network of physical actuators. When the sensors sense a closer distance, the solenoids pop out, and vice versa. The solenoids can only take digital outputs. Ideally they would be analog, so that the space could be represented more accurately.

There are also two switches:
- An emergency button, which alerts the user that there is an emergency.
- A routing button, which makes the solenoids create a path out of the space to safety. Ideally it would be connected to a network as well, but it could also be turned on by the user.
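The proof-of-concept logic fits in a few lines. None of the following details come from the original code; this hypothetical Python sketch assumes:

- 10-bit analog readings (0–1023), where a lower value means a closer object.
- An arbitrary threshold of 512.
- A priority order in which the routing switch overrides the emergency alert, which in turn overrides normal sensing.

```python
from typing import Collection, List, Sequence


def solenoid_states(readings: Sequence[int], threshold: int = 512,
                    emergency: bool = False, routing: bool = False,
                    route: Collection[int] = ()) -> List[bool]:
    """Return which solenoids should be extended (True = popped out).
    Assumes lower readings mean closer objects; priority order is hypothetical."""
    if routing:
        # Raise only the solenoids along the escape path.
        return [i in route for i in range(len(readings))]
    if emergency:
        # Alert: pop every solenoid out (pulsing this would be one option).
        return [True] * len(readings)
    # Normal mode: a closer object (lower reading) pops the solenoid out.
    return [value < threshold for value in readings]
```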

Fritzing Sketch:

The Fritzing sketch shows two things. First, the proof of concept’s solenoids are wired to a separate power source and set up to receive signals from the Arduino. Second, all of the input devices are connected to the Arduino to send in data. The transducer for emergencies is represented by a microphone, which has a similar wiring diagram. Not pictured: the Arduino and the battery jack would have to be connected to a battery source.

Proof of Concept Sketches:

The spatial sensor scans the space the user occupies. The scan is then actuated into a physical representation and arrayed to give the user more detail to touch and perceive. This system would be supplemented by an emergency system that both alerts the user that an emergency is occurring and shows them how to make their way to safety.

Proof of Concept Videos:

Files:

Rabbit Laser Cutters have dark UV-protective paneling to protect users from bright, potentially vision-damaging light. However, laser-cut pieces can begin smoking and even catch fire. This presents a problem: how can users respond to fire and smoke events?

A visibility detection system paired with a motor would alert users to an incoming smoke or fire issue by detecting drastic increases or decreases in visibility. The visibility detection system would be placed inside the laser cutter. The motor would be either attached to a wearable device or placed atop the laser cutter, where it would bump into the cutter repeatedly in different patterns. This would create different noises depending on the situation, as well as vibrations on the user’s person.

A series of light sensors would serve as the detection system. It would sense whether visibility was obstructed: too bright signifies a fire, and too dim signifies smoke. A solenoid would tap in a slow pattern to signify smoke and in a hurried, frantic pattern to signify fire. The solenoid would be either attached to a wearable device or attached atop the cutter itself, where it would tap against the machine and make noise, signaling the user to press the emergency stop.
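The detection and tap logic might look like the following hypothetical Python sketch. It makes three assumptions:

- The sensors report a light level that can be compared against a baseline recorded during normal cutting.
- A reading more than 50% away from that baseline counts as a "drastic" change.
- The tap timings for the slow and frantic patterns are invented.

```python
from typing import Dict, List, Tuple

# (solenoid_on_ms, pause_ms) pairs; timings are illustrative guesses.
TAP_PATTERNS: Dict[str, List[Tuple[int, int]]] = {
    "normal": [],
    "smoke": [(50, 950)] * 3,   # slow: about one tap per second
    "fire": [(50, 100)] * 10,   # hurried, frantic tapping
}


def classify(light: float, baseline: float, tolerance: float = 0.5) -> str:
    """Compare a light reading with the (assumed) normal level inside the cutter."""
    if light > baseline * (1 + tolerance):
        return "fire"    # much brighter than usual: flames
    if light < baseline * (1 - tolerance):
        return "smoke"   # much dimmer than usual: smoke blocking light
    return "normal"


def taps_for(light: float, baseline: float) -> List[Tuple[int, int]]:
    """Tap pattern the solenoid should play for the current reading."""
    return TAP_PATTERNS[classify(light, baseline)]
```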

How do you create a universal communication method that can work for everyone, whether they are blind, or deaf, or both? Imagine a universal translation machine that can….

To tackle this, I decided to use a tactile way to feel and translate Morse code. This is done through a combination of:

The hope is that by providing different kinds of feedback, the translator can be more accessible.
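Whatever the output (light, sound, or vibration), the translator needs the same timing sequence. This Python sketch uses standard international Morse timing:

- A dot is 1 unit and a dash is 3 units.
- Gaps are 1 unit within a letter, 3 between letters, and 7 between words.

It covers only letters and digits and silently skips anything else. The function names are hypothetical.

```python
from typing import List, Tuple

MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..", "0": "-----", "1": ".----", "2": "..---",
    "3": "...--", "4": "....-", "5": ".....", "6": "-....", "7": "--...",
    "8": "---..", "9": "----.",
}


def to_morse(message: str) -> str:
    """Dots and dashes, letters separated by spaces and words by ' / '."""
    return " / ".join(" ".join(MORSE[ch] for ch in word if ch in MORSE)
                      for word in message.upper().split())


def to_timings(message: str) -> List[Tuple[bool, int]]:
    """(signal_on, units) pairs to drive an LED, buzzer, or vibration motor."""
    timings: List[Tuple[bool, int]] = []
    for w, word in enumerate(message.upper().split()):
        if w:
            timings.append((False, 7))          # gap between words
        codes = [MORSE[ch] for ch in word if ch in MORSE]
        for c, code in enumerate(codes):
            if c:
                timings.append((False, 3))      # gap between letters
            for s, symbol in enumerate(code):
                if s:
                    timings.append((False, 1))  # gap within a letter
                timings.append((True, 1 if symbol == "." else 3))
    return timings
```

Multiplying each unit by a chosen dot length (for example, 200 ms) gives the on/off durations for whichever outputs are enabled.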

Lip reading is difficult, and a large portion of the deaf community chooses not to read lips. On the other hand, lip reading is a way for many deaf people to feel connected to a world that they often feel removed from. I have linked a powerful video in which one such lip reader talks about the difficulty of lip reading, but also the pay-off she experiences by being able to interact and connect with anyone she wants.

Lip reading relies on being able to see the lips of the person speaking. When you are interacting with one person, this is not an issue, but what if you’re in a group setting? How do you keep track of who is talking and where to look?

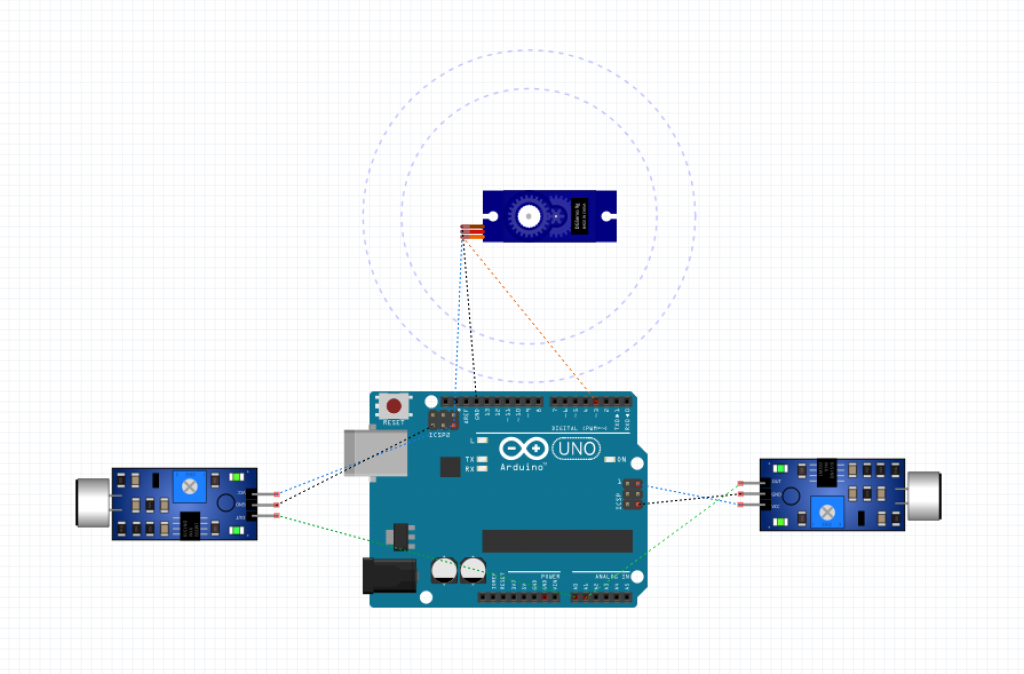

Use four sound detectors or microphones to detect the direction sound is coming from. Alert the user to this change by using a servo motor to point in that direction. This allows people who are hard of hearing to understand who is talking in a group setting and focus on the lips of the person currently speaking.

To demonstrate this idea, I used two sound detectors and a servo motor. My interrupt is a switch that can override the process if, for example, too many people are talking or the user no longer needs the device.
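With only two detectors, the demo can only tell left from right. This hypothetical Python sketch makes the following assumptions:

- The left detector sits at the servo's 180-degree end and the right at 0 degrees.
- The servo points straight ahead (90 degrees) when the levels are similar, and holds its position when everything is quiet.
- The override switch is a flag that an interrupt would set.
- The noise-floor and margin values are made up.

```python
def servo_angle(left: int, right: int, current: int, override: bool = False,
                noise_floor: int = 10, margin: int = 20) -> int:
    """Choose a servo angle (0-180) pointing toward the louder sound detector.
    Detector placement, noise_floor, and margin are illustrative assumptions."""
    if override:
        return current            # switch flipped (set by the interrupt): hold position
    if max(left, right) < noise_floor:
        return current            # nobody is talking: stay where we are
    if abs(left - right) < margin:
        return 90                 # similar levels: sound is roughly in front
    return 180 if left > right else 0
```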

Below is a breadboard view of my project.

I am having some issues after updating to Catalina, so videos will come soon!

State Machine: Sleeping

Problem: Waking up is hard. Lights don’t work. Alarms don’t work. Being yelled at doesn’t work. You have to be moved to be woken up. But what if no one is there to shake you awake?

General solution: A vibrating bed that takes in various sources of available data and inputs to get you out of bed. Everyone has different sleep habits and different life demands, so depending on why you are being woken up, the bed will shake in a certain way. How?
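Since the post frames this as a state machine, here is a hypothetical Python sketch of one. All of the following are assumptions for illustration, not the demo's actual design: the states, the wake reasons, the shake timings, and the idea that a pressure sensor reports when the bed is empty.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class State(Enum):
    SLEEPING = "sleeping"
    SHAKING = "shaking"
    AWAKE = "awake"


# Hypothetical wake reasons -> (motor_on_ms, motor_off_ms, repeats).
PATTERNS: Dict[str, Tuple[int, int, int]] = {
    "alarm": (500, 500, 10),       # steady, regular shaking
    "calendar": (200, 800, 5),     # gentle nudges for a reminder
    "emergency": (1000, 100, 30),  # long, nearly continuous shaking
}


class Bed:
    def __init__(self) -> None:
        self.state = State.SLEEPING
        self.pattern: Optional[Tuple[int, int, int]] = None

    def wake_event(self, reason: str) -> None:
        if reason not in PATTERNS:
            return                                 # unknown inputs are ignored
        if self.state is State.SLEEPING:
            self.state = State.SHAKING
            self.pattern = PATTERNS[reason]
        elif self.state is State.SHAKING and reason == "emergency":
            self.pattern = PATTERNS["emergency"]   # emergencies take priority

    def person_got_up(self) -> None:
        """Hypothetical trigger, e.g. a pressure sensor reports the bed is empty."""
        if self.state is State.SHAKING:
            self.state = State.AWAKE
            self.pattern = None

    def went_to_bed(self) -> None:
        self.state = State.SLEEPING
        self.pattern = None
```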

Proof of Concept: I connected the following pieces of hardware to create this demo:

Due Mon night, 11:59 pm

Like assignment 5, give data a visual representation, but look at accessibility for someone without hearing or vision. Use interrupts to generate / modify the information being displayed, to control the information from another source. Experiment with more than one interrupt happening at the same time.

The example we discussed in class: how would you let a person without hearing know that someone was knocking at the door or ringing the doorbell?
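A common pattern for handling more than one interrupt is to keep each interrupt handler tiny (it only sets a flag) and let the main loop decide what to display. This Python sketch models that pattern; the handler names and output signals are hypothetical. On an Arduino, the flags would be declared `volatile`. The read-and-clear in `poll` would be wrapped in `noInterrupts()`/`interrupts()` so an event arriving mid-read isn't lost.

```python
from typing import Dict, List

# Hypothetical signals for each event, visual and tactile so either sense works.
SIGNALS: Dict[str, str] = {
    "doorbell": "one long vibration + steady light",
    "knock": "two short vibrations + flashing light",
}


class DoorEvents:
    def __init__(self) -> None:
        self.knock = False
        self.doorbell = False

    def knock_isr(self) -> None:      # hypothetical: attached to a knock sensor
        self.knock = True

    def doorbell_isr(self) -> None:   # hypothetical: attached to the doorbell circuit
        self.doorbell = True

    def poll(self) -> List[str]:
        """Called from the main loop: report and clear every pending event."""
        events = []
        if self.doorbell:
            events.append("doorbell")
        if self.knock:
            events.append("knock")
        self.knock = self.doorbell = False
        return events
```

Both flags can be set between two passes of the loop. As a result, a simultaneous knock and ring are both reported instead of one overwriting the other.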