Month: October 2019

Assignment 7: sound over time, sound-by-interrupt

Due next Tuesday.

For this assignment, using an Arduino, generate sound-over-time and sound-by-interrupt that convey meaning, feeling, or specific content. You can generate sound with a speaker, a kinetic device (e.g., a door chime), or some other novel invention.

This is a good time to use push buttons or other inputs to trigger sound and another input to define the type of sound.

My example in class was how my phone *doesn’t* do this well. If I am listening to music and someone rings my doorbell at home, my phone continues to play music *and* the doorbell notification sound at the same time. What it should do is stop the music, play the notification, then give me the opportunity to talk to the person at the door, then continue playing music.
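The behavior described above can be sketched as a small state machine. This is only an illustration in Python rather than an Arduino sketch; the class name `Player`, the state names, and the method names are all hypothetical and not from any phone or doorbell API.

```python
class Player:
    """Toy model of a music player that yields to a doorbell notification."""

    def __init__(self):
        self.state = "music"  # what is currently audible
        self.log = []         # history of states entered, for inspection

    def _enter(self, state):
        self.state = state
        self.log.append(state)

    def doorbell(self):
        # Interrupt: pause the music first so the two sounds never overlap.
        self._enter("paused")
        self._enter("notification")
        self._enter("intercom")  # chance to talk to the person at the door

    def visitor_done(self):
        # Resume music only if we were actually interrupted.
        if self.state == "intercom":
            self._enter("music")


player = Player()
player.doorbell()
player.visitor_done()
print(player.log)
```

The point of the ordering is that the interrupt suspends the sound-over-time before anything else plays, and the original sound resumes only once the interrupt has been fully handled.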

Class notes: 31 October, 2019

Starting Sound

Why is sound important? We have binaural hearing and can point to the direction of a sound without any practice.

How do you “close your earlids” when you go to sleep? How do children learn to speak? Leonard Bernstein’s experiments with making sounds like a child and how common those sounds might be across cultures.

Close your eyes after following links in this section. Don’t worry about the visual details and information; this is about learning to understand sound and signals.

Classes of sounds (one view)

Signals / alerts — short sounds that transfer information

- automobile informational sounds. How many of these sounds are intentionally generated and how many are practical sound effects that let us know we’ve clicked in our seat belt?

- walk/don’t walk beep boops

- fire department / police codes on dispatch channels

- collection that includes a game tone from Starcraft

- doorbell database and an example of the ones used in the 50s and 60s in my neighborhood.

Songs and patterns that transfer information over time

We have a history of using air raid sirens from WWI and WWII as a means to notify the population of an area of an event or condition.

- air raid siren, dual pitch — my borough uses one of these at 9:45pm to signal curfew hours for children. The local volunteer fire department has a different one they use to alert volunteers to a fire.

- tornado sirens used to notify a town/area of a possible tornado

Music and entertainment

- ringing phones are so unique that we map and learn our own ringtones using songs. Golan Levin’s mobile phone concert was only possible because phones had ring tones that couldn’t be changed.

- Star Trek had one of the earliest catalogs of special effects sounds used to alert viewers of plot elements and activity.

- Professional companies that sell sound libraries.

- Orbital and Underworld are two electronic bands using dual internal tempos, one near the rate of a resting heartbeat, the other near the rate of an active (dancing) heartbeat.

Psychological effects of sound

Is it genetics that causes us to respond to the sound of a crying human baby? Can you think of an “angry” noise? A “happy” noise? A “relaxing” noise?

Sketches, Fritzing, and 3d models

Sketches from sound-class-1 (including one I DID NOT show in class) and Fritzing a speaker to a transistor.

3d printed air raid sirens.

Critique 02: Assisting Individual Body Training through Haptics

Problem

When we train our bodies and build muscle at a gym, it is really important to maintain accurate, balanced poses and movements, not only to avoid injury but also to maximize the effect and keep muscles balanced. However, when we go to the gym by ourselves, it is sometimes really difficult to judge whether we are using the equipment in the right way.

Solution

I thought about a device (a smartphone in an armband, a smartwatch, or another device attached to the arm) that provides haptic feedback so that we can keep the right pose.

For example, when we are doing push-ups, the device checks the angle of our arms and the timing as we go down. After we go up and go down again, the device provides haptic feedback (various intensities of vibration) to signal that we have to go down a bit further to reach the right angle. When we reach it, it vibrates briefly to let us know we did well.
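As a rough sketch of that feedback rule, the function below maps how far a rep falls short of a target angle to a vibration pattern and intensity. It is written in Python for illustration and is not the author's Swift code; the function name `vibration_for_depth`, the 90-degree target, the 5-degree tolerance, and the 45-degree scaling range are all assumptions.

```python
def vibration_for_depth(elbow_angle_deg, target_deg=90.0, tolerance_deg=5.0):
    """Return (pattern, intensity) for the bottom of one rep.

    elbow_angle_deg: measured elbow angle; a smaller angle means a deeper rep.
    target_deg and tolerance_deg are assumed values, not from the write-up.
    """
    shortfall = elbow_angle_deg - target_deg
    if shortfall <= tolerance_deg:
        # Deep enough: a brief, gentle pulse as positive feedback.
        return ("short_pulse", 0.3)
    # Not deep enough: vibrate harder the further the rep falls short,
    # reaching full intensity at 45 degrees short (assumed range).
    intensity = min(1.0, shortfall / 45.0)
    return ("continuous", round(intensity, 2))


print(vibration_for_depth(92))     # within tolerance of the target
print(vibration_for_depth(112.5))  # noticeably short of the target
```

On a real device the angle would come from the motion sensors, and the returned pair would be handed to whatever haptic engine the platform provides.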

Proof of Concept

I tried coding this in Swift, using the gyro sensors in the iPhone. I believe I could develop this idea much further: it could check the angles of various body parts while we exercise, or even while we stretch to increase our flexibility. Also, if it kept collecting data over time, it would learn our capability in a given exercise or muscle group, so that it could guide us to gradually increase that capability at an appropriate pace without harming our bodies.

Videos and Code

degreeChecker – code

Emotional Haptic Feedback for the Blind

Problem:

Haptic feedback as a means of delivering information to the visually impaired isn’t a new concept to this class. Both in class assignments and in products that already exist in the real world, haptics have certainly become a proven tool. However, I feel that there has not been much consideration as to the more specific sensations and interactions that haptics can provide.

Proposed Solution:

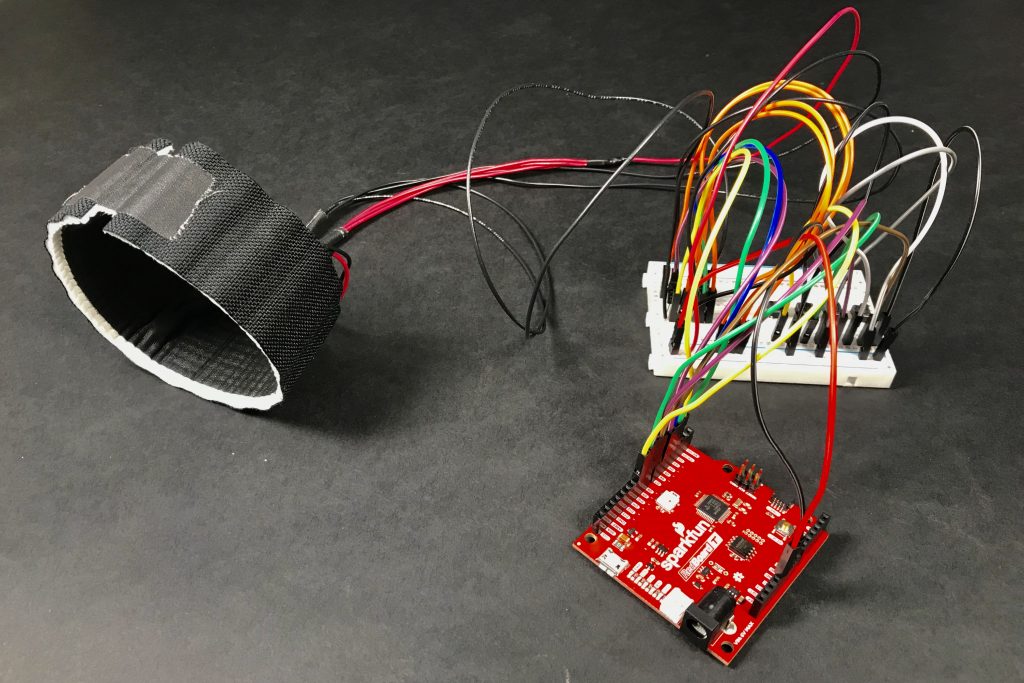

With this project, I attempted to create a haptic armband that adds another dimension of feedback: spatial. By arranging haptic motors radially around the arm, I was able to control intensity, duration, and surface area in order to create different sensations. Controlling these variables, I recreated the sensations of tap, nudge, stroke (radially), and grab.

In terms of applications, I think time keeping could be a great illustration as to how different sensations can play a role. For example, a gesture such as a tap or nudge would be appropriate for situations such as a light reminder at the top of the hour — on the other hand, a grab would be more suitable in situations such as an alarm or if a user is running late for an appointment. Other more intricate gestures such as a radial stroke could be for calming users down in stressful situations.
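One way to picture how intensity, duration, and surface area combine into the four gestures is as a table of motor frames. The sketch below is a guess at such an encoding, not the armband's actual firmware: the motor count of four, the helper `frame`, and every intensity and duration value are assumptions chosen only to show the contrast between gestures.

```python
NUM_MOTORS = 4  # assumed number of motors arranged around the arm


def frame(active, intensity, duration_ms):
    """One step of a gesture: per-motor intensity levels plus a duration."""
    levels = tuple(intensity if i in active else 0.0 for i in range(NUM_MOTORS))
    return (levels, duration_ms)


# Each gesture is a list of frames played in order (all values hypothetical).
GESTURES = {
    # tap: one motor, strong, very short
    "tap": [frame({0}, 1.0, 50)],
    # nudge: one motor, softer, held longer
    "nudge": [frame({0}, 0.6, 300)],
    # stroke: motors fire one after another around the arm
    "stroke": [frame({i}, 0.5, 120) for i in range(NUM_MOTORS)],
    # grab: every motor at once, strong and sustained
    "grab": [frame(set(range(NUM_MOTORS)), 1.0, 600)],
}

for name, frames in GESTURES.items():
    print(name, frames)
```

A light hourly reminder would then play `GESTURES["tap"]`, while an alarm would play `GESTURES["grab"]`.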

Proof of Concept:

Crit 2: Key Fob Reminder System

Problem:

The blind and memory impaired can often have issues remembering small objects. Keys, phones, and wallets are all easily misplaced items. Forgetting commonplace but important items can be especially frustrating and cause issues for people, especially if the behavior is repeated.

Solution:

A system that relies on RFID tags embedded in a keychain or fob can remind users if they have left their devices on a table as they leave the house. It can also make the device ping as the user approaches a household point of entry where a key is needed, giving users a sense of where their common household items are. Items can become “lost” or misplaced even in book bags, and this system would allow the user to feel a distinct “ping” for each device.

Proof of Concept:

A system of RFIDs that signify whether a user is exiting or entering through a common entrance would allow the reminder system to ping both the user and the device. The user could also manually ping the keys by pressing a button, which triggers a dime motor.

Your Personal Doorman

Idea

I often want to know who is at home when I’m on campus. If I’ve had a rough day – maybe I’d like to come home to chat with my roommate or maybe I’d like some alone time.

One might argue that Find My Friends has many of the features I require, but as an Android user I don’t have access to it. Another issue is that if you live in an apartment building, like me, there is some likelihood that they are not in the room but somewhere else in the building.

If I were to extend the project, I would try to use IFTTT to notify me when someone enters and exits. Additionally, I would add temporary keys so that if friends or family are visiting they can temporarily unlock my door when they need to.

Proof Of Concept

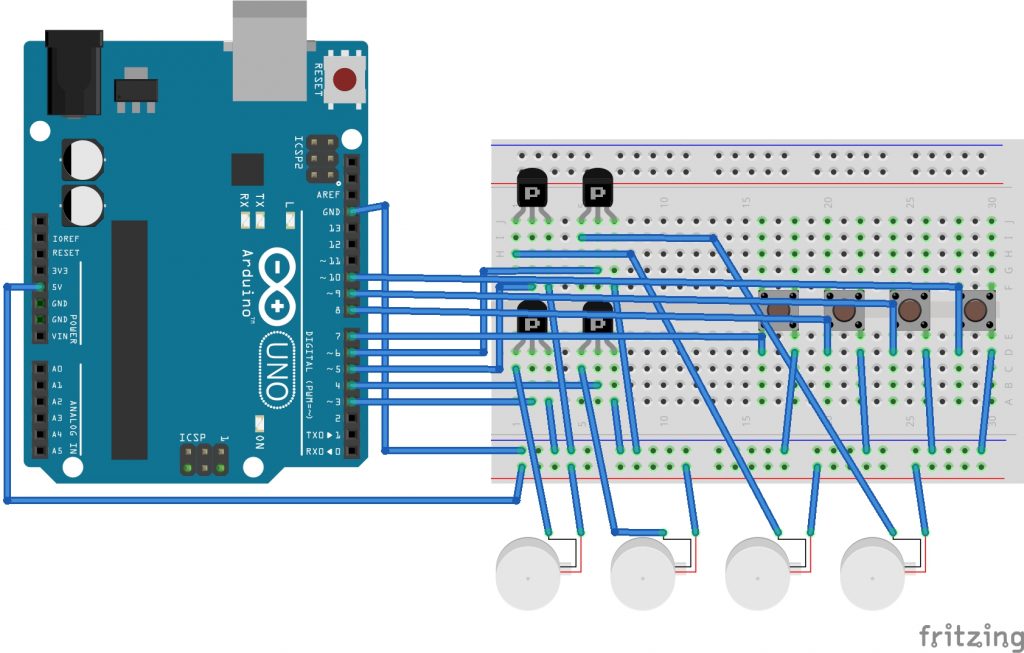

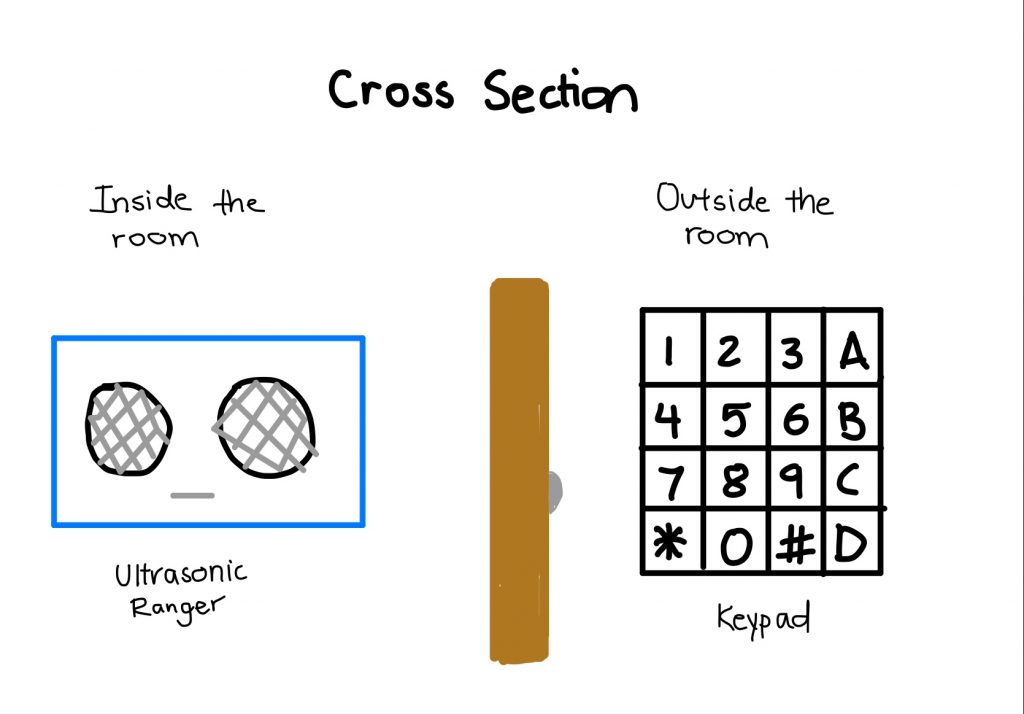

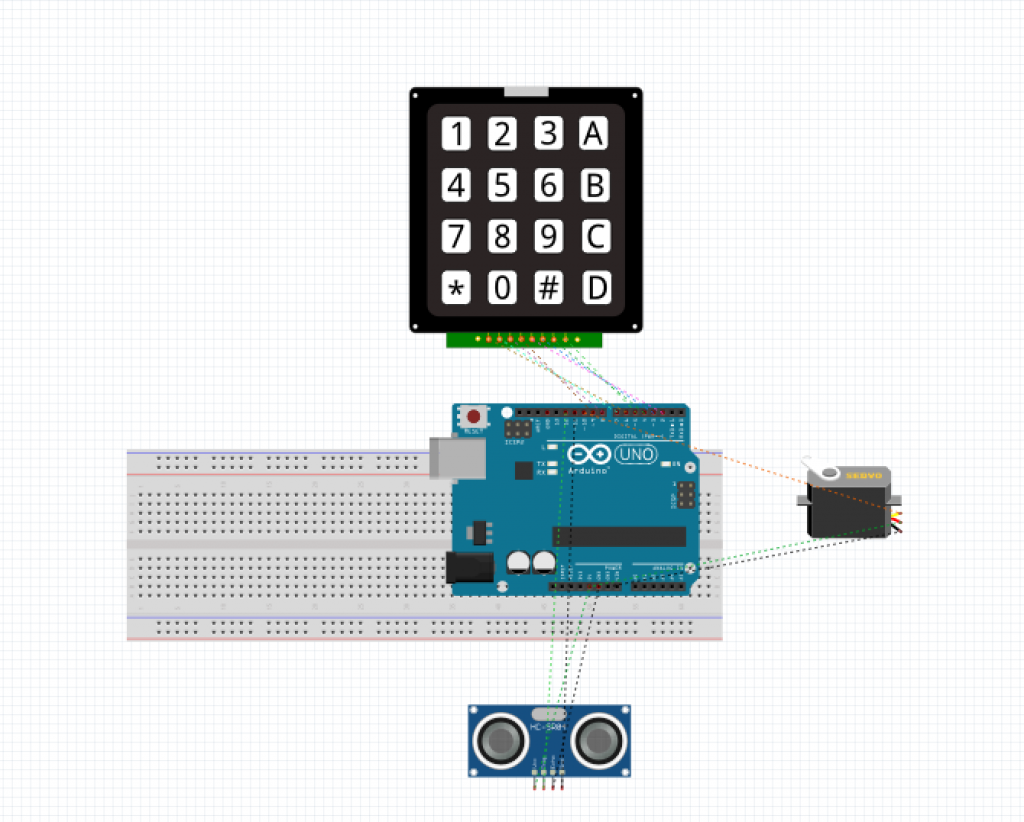

To input the password, I decided to use a keypad. Every person has an associated key, so we can track who is entering the apartment. To further incorporate the kinetic requirement of this project, I added an ultrasonic ranger which ‘unlocks the door’ when a person stands near the door from the inside. There are also modes which can disable certain keys from working if you need privacy.
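The logic described here can be sketched in a few lines. This is a Python illustration rather than the Arduino code used in the build; the class `Doorman`, the example codes and names, and the 50 cm unlock distance are all hypothetical.

```python
class Doorman:
    """Per-person keypad codes, a privacy mode, and an inside proximity unlock."""

    def __init__(self, codes, unlock_distance_cm=50):
        self.codes = dict(codes)       # keypad code -> person (made-up data)
        self.disabled = set()          # people whose keys are currently blocked
        self.entries = []              # who has entered, in order
        self.unlock_distance_cm = unlock_distance_cm  # assumed threshold

    def set_privacy(self, blocked_people):
        """Privacy mode: stop the listed people's keys from working."""
        self.disabled = set(blocked_people)

    def enter(self, code):
        """Return the person admitted by this code, or None if refused."""
        person = self.codes.get(code)
        if person is None or person in self.disabled:
            return None
        self.entries.append(person)
        return person

    def unlock_from_inside(self, distance_cm):
        """True when the ranger sees someone standing close to the door."""
        return distance_cm <= self.unlock_distance_cm


door = Doorman({"1234": "me", "5678": "roommate"})
print(door.enter("5678"))
door.set_privacy({"roommate"})
print(door.enter("5678"))
print(door.unlock_from_inside(30))
```

Because each code maps to one person, the `entries` list doubles as the record of who came home, which is the information the project set out to provide.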

Kinetic Crit: Touch Mouse

Concept

Whiteboards and other hand drawn diagrams are an integral part of day to day life for designers, engineers, and business people of all types. They bridge the gap between the capabilities of formal language and human experience, and have existed as a part of human communication for thousands of years.

However powerful they may be, drawings are dependent on the observer’s power of sight. Why does this have to be? People without sight have been shown to be fully capable of spatial understanding, and have found their own ways of navigating space with their other senses. What if we could introduce a way for them to similarly absorb diagrams and drawings by translating them into touch?

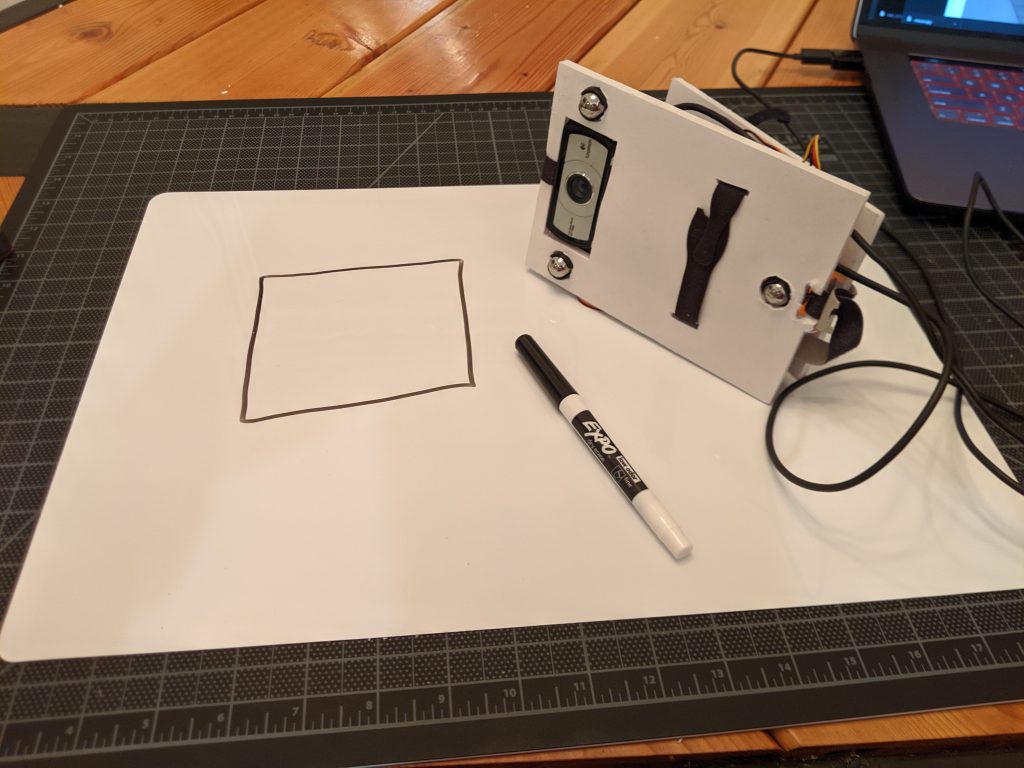

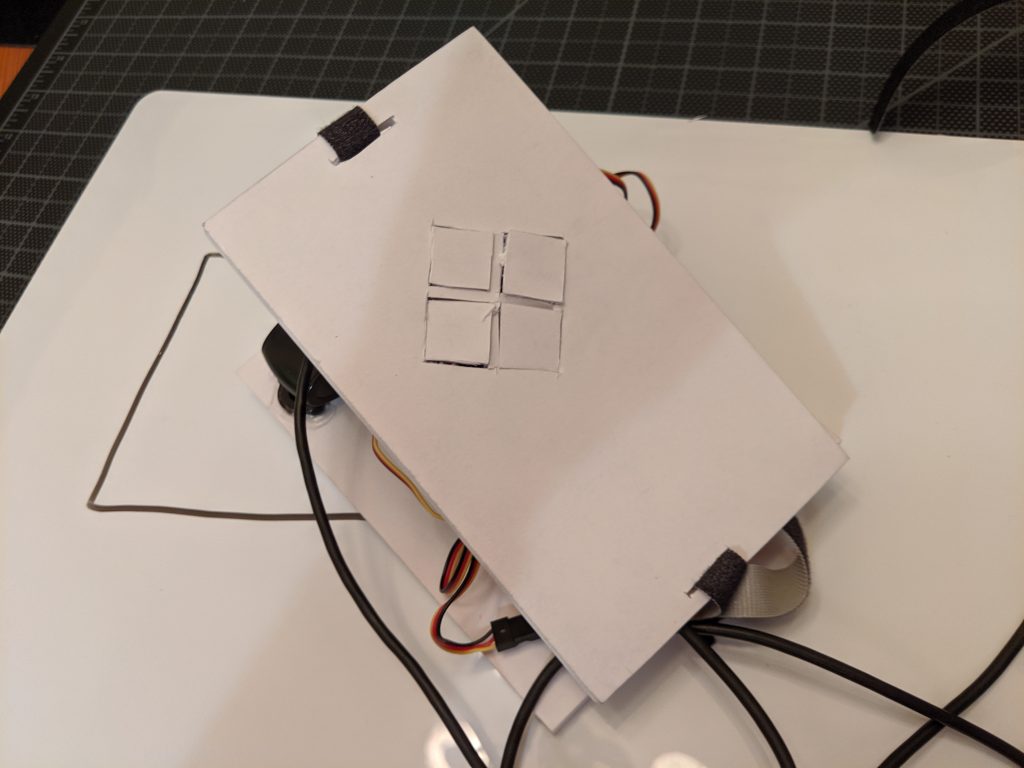

The touch mouse aims to do just that. A webcam faces the whiteboard suspended by ball casters (which minimize smearing of the image). The image collected by the camera is processed to find the thresholds between light and dark areas, and triggers servo motors to lift and drop material under the user’s fingers to indicate dark spots above, below, or to either side of their current location. Using these indicators, the user can feel where the lines begin and end, and follow the traces of the diagram in space.

Inspiration

The primary inspiration for this project was the video Jet showed in class of special paper that a sighted person can draw on to create a raised image for a blind person to feel and understand. After beginning work on the prototype, I also discovered a project at CMU that uses a robot to trace directions spatially to assist visually impaired users in way-finding.

Similarly, in the physical build, I was heartened to see Engelbart’s original mouse prototype. This served double duty as inspiration for the form factor, and as an example of a rough prototype that could be refined into a sleek tool for everyday use.

The Build and Code

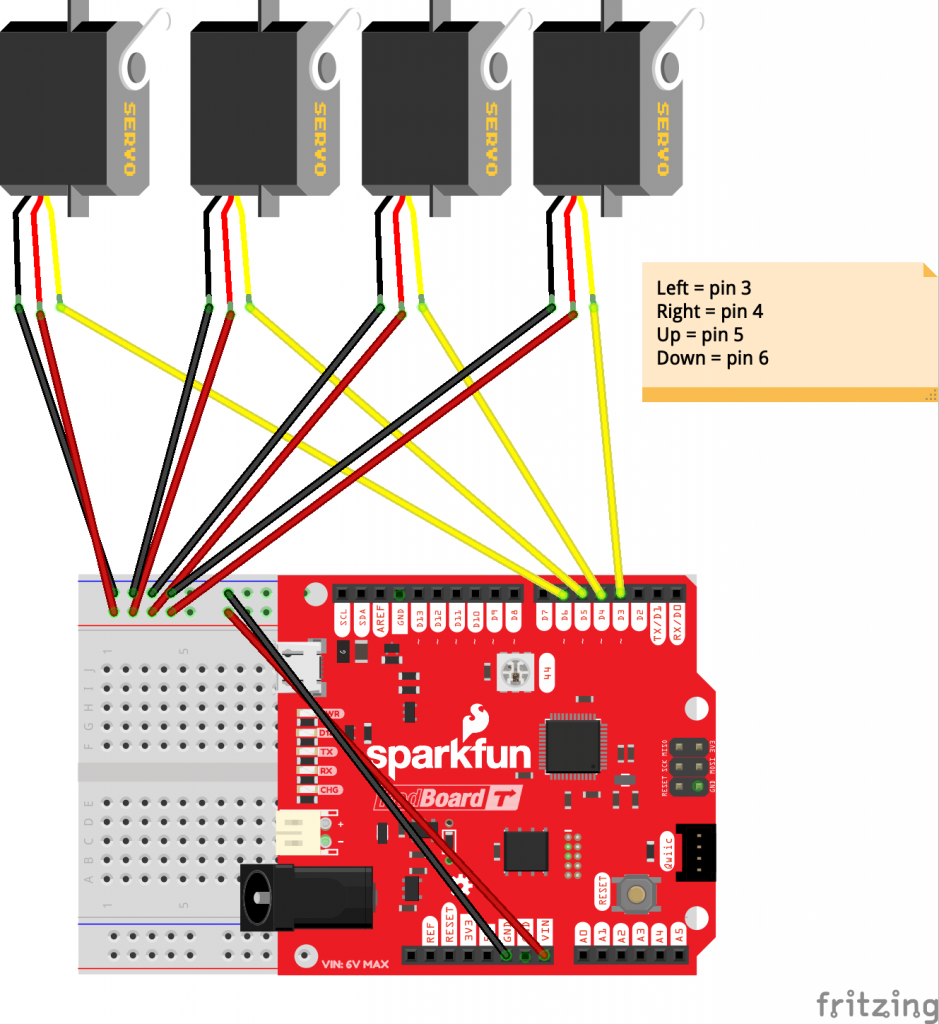

The components themselves are pretty straightforward. Four servo motors lift and drop the physical pixels for the user to feel. A short burst of 1s and 0s indicates which pixels should be in which position.

The Python code uses OpenCV to read in the video from the webcam, convert it to grayscale, measure thresholds for black and white, and then average that down into the four pixel regions for left, right, up, and down.
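The threshold-and-average step can be sketched without a camera. The example below is not the project's code: it uses plain Python lists in place of OpenCV frames, and the region layout (outer thirds of the frame), the threshold of 128, the 25% dark cutoff, and the left, right, up, down bit order are all assumptions.

```python
def dark_fraction(gray, rows, cols, threshold=128):
    """Fraction of cells in the given rows and columns darker than threshold."""
    cells = [gray[r][c] for r in rows for c in cols]
    return sum(1 for v in cells if v < threshold) / len(cells)


def pixel_bits(gray, threshold=128, min_dark=0.25):
    """Reduce a grayscale frame (2D list, 0-255) to four bits.

    Bit order is left, right, up, down (assumed). A bit is "1" when enough
    of that band is dark, meaning the matching physical pixel should lift.
    """
    h, w = len(gray), len(gray[0])
    band_h, band_w = max(1, h // 3), max(1, w // 3)
    regions = {
        "left": (range(h), range(0, band_w)),
        "right": (range(h), range(w - band_w, w)),
        "up": (range(0, band_h), range(w)),
        "down": (range(h - band_h, h), range(w)),
    }
    return "".join(
        "1" if dark_fraction(gray, rows, cols, threshold) >= min_dark else "0"
        for rows, cols in regions.values()
    )


# A 6x6 white frame with a dark vertical line down the left edge.
frame = [[0 if c == 0 else 255 for c in range(6)] for _ in range(6)]
print(pixel_bits(frame))
```

The resulting four-character string is the kind of short burst of 1s and 0s that could be sent over serial to tell each servo whether to lift or drop.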

I hope to have the opportunity in the future to refine the processing pipeline, and the physical design, and perhaps even add handwriting recognition to allow for easier reading of labels, but until then this design can be tested for the general viability of the concept.

Python and Arduino code:

Crit #2 – Grumpy Toaster

Problem: Toasters currently only use their *pop* and occasionally a beep to communicate that they are finished toasting whatever is inside them. It is also difficult to tell the state of various enclosed elements of the toaster, such as whether the crumb tray needs to be emptied or whether any heating elements need to be wiped off. I believe the toaster could communicate a lot more with its “done” state in ways that would be inclusive to a variety of different user types.

Solution: More or less, a toaster that gets grumpy if it is left in a state of disrepair. Toasters are almost always associated with an energetic (and occasionally annoying) burst of energy to start mornings off, but what if the toaster’s enthusiasm was dampened? Because users are generally at least half paying attention to their toaster, a noticeably different *pop* and kinetic output could alert them that certain parts of the toaster needed attention. For example, if the toaster badly needed cleaning, it would slowly push the bread out, instead of happily popping it up. Both the visual and audio differences generated by modifying this kinetic output would be noticeable.

Proof of Concept: I constructed a model toaster (sans heating elements) using a small servo and a raising platform. Because a variety of sensing methods for crumbs did not work, “dirtiness” is represented by a potentiometer. I’ve substituted a common lever for a light push switch to accommodate a broader range of possible physical actions.

The servo drives the emotion of the toaster. It can sharply or lethargically push its contents out, conveying its current state to the user. Once the contents are removed, the weight of the next item to be put inside then lowers the platform back onto the servo.
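To make the sharp versus lethargic motion concrete, the sketch below turns a potentiometer reading into a list of servo angles and delays. It is a Python illustration, not the Arduino sketch from the build; the function name `pop_profile`, the 90 degrees of travel, the 0 to 1023 reading range, and the step and delay limits are all assumptions.

```python
def pop_profile(pot_reading, pot_max=1023, travel_deg=90):
    """Return a list of (servo_angle_deg, delay_ms) steps for one pop.

    pot_reading stands in for "dirtiness": 0 is clean, pot_max is filthy.
    A clean toaster jumps to full travel in one step; a dirty one creeps
    up in as many as 30 small steps with a pause after each (assumed values).
    """
    dirtiness = max(0, min(pot_reading, pot_max)) / pot_max
    steps = 1 + round(dirtiness * 29)
    delay_ms = round(dirtiness * 50)
    return [(round(travel_deg * (i + 1) / steps), delay_ms) for i in range(steps)]


print(pop_profile(0))           # happy pop: one jump to full height
print(len(pop_profile(1023)))   # grumpy pop: many slow steps
```

On the Arduino side, each pair would become a servo write followed by a delay, so the same loop produces either a snap or a slow shove depending on the reading.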

Files + Video: Drive link

Discussion: This model is ripe for extension. I originally designed this around the idea of overstuffing your toaster, something I do frequently that not only doesn’t toast the bread well but surely dumps more crumbs than necessary into the bottom tray. Unfortunately, I couldn’t figure out a way to test for stuffedness, and went with straight cleanliness instead. But, the overall idea behind designing emotionally (grumpy toaster, fearful car back-up sensor) has helped me understand this class a lot better, and I hope to continue working on that line of thinking with more physical builds like this.

Feeling Color

Problem:

For people who are either color blind or blind, seeing and comprehending color, which is embedded in many aspects of our lives as an encoder of information, can be very challenging, if not impossible. In addition, color adds another dimension to our experiences and enhances them as well.

A General Solution:

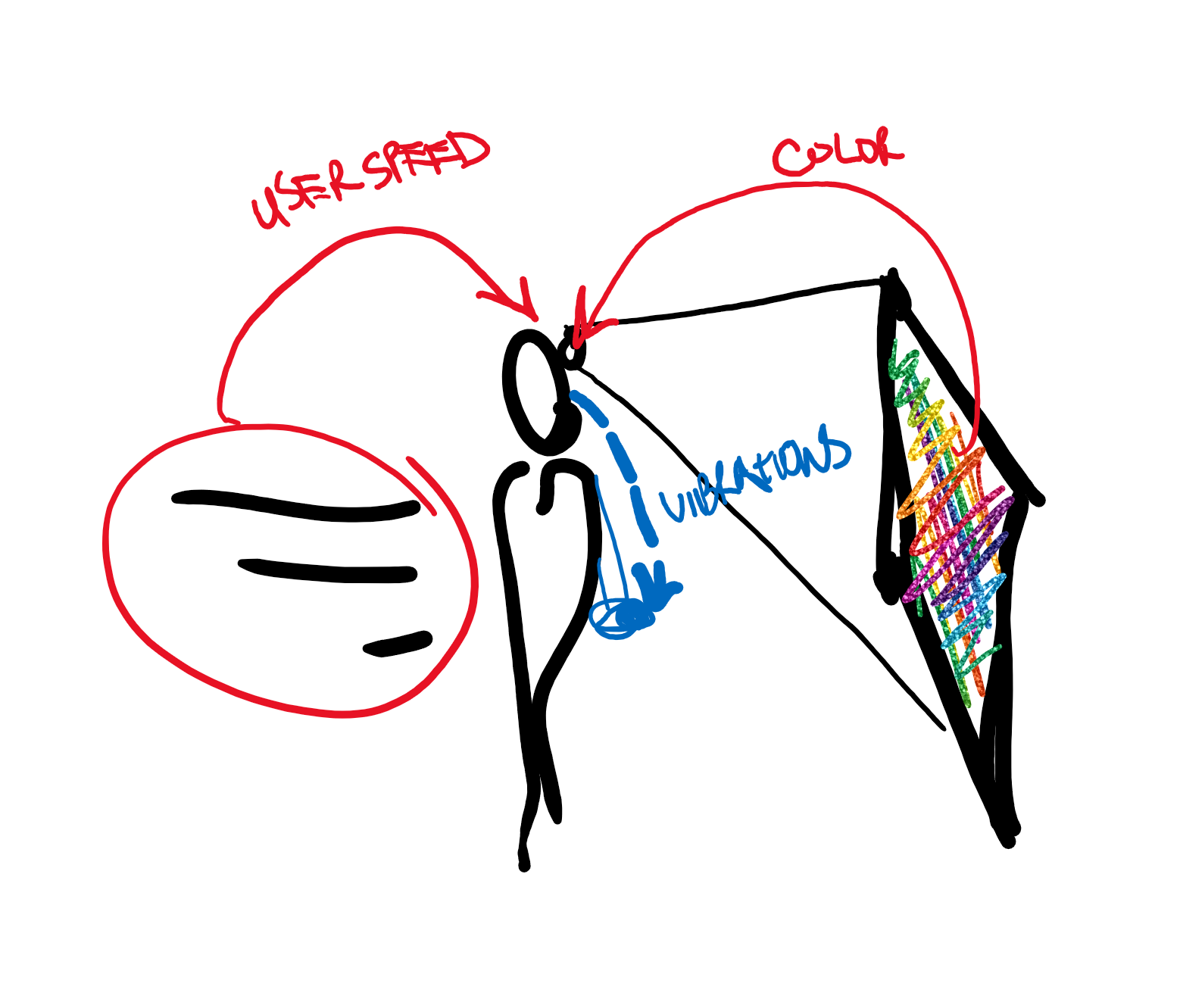

A device that would be able to detect color and send the information to actuators to display the information through vibration. Ideally, the system would be able to vary the specificity and accuracy of the detection (in terms of frame rate, but also in regards to the sample area used for color detection) based on the velocity of the user using it or other input variables.

Proof of Concept:

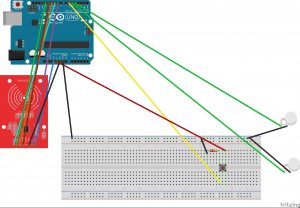

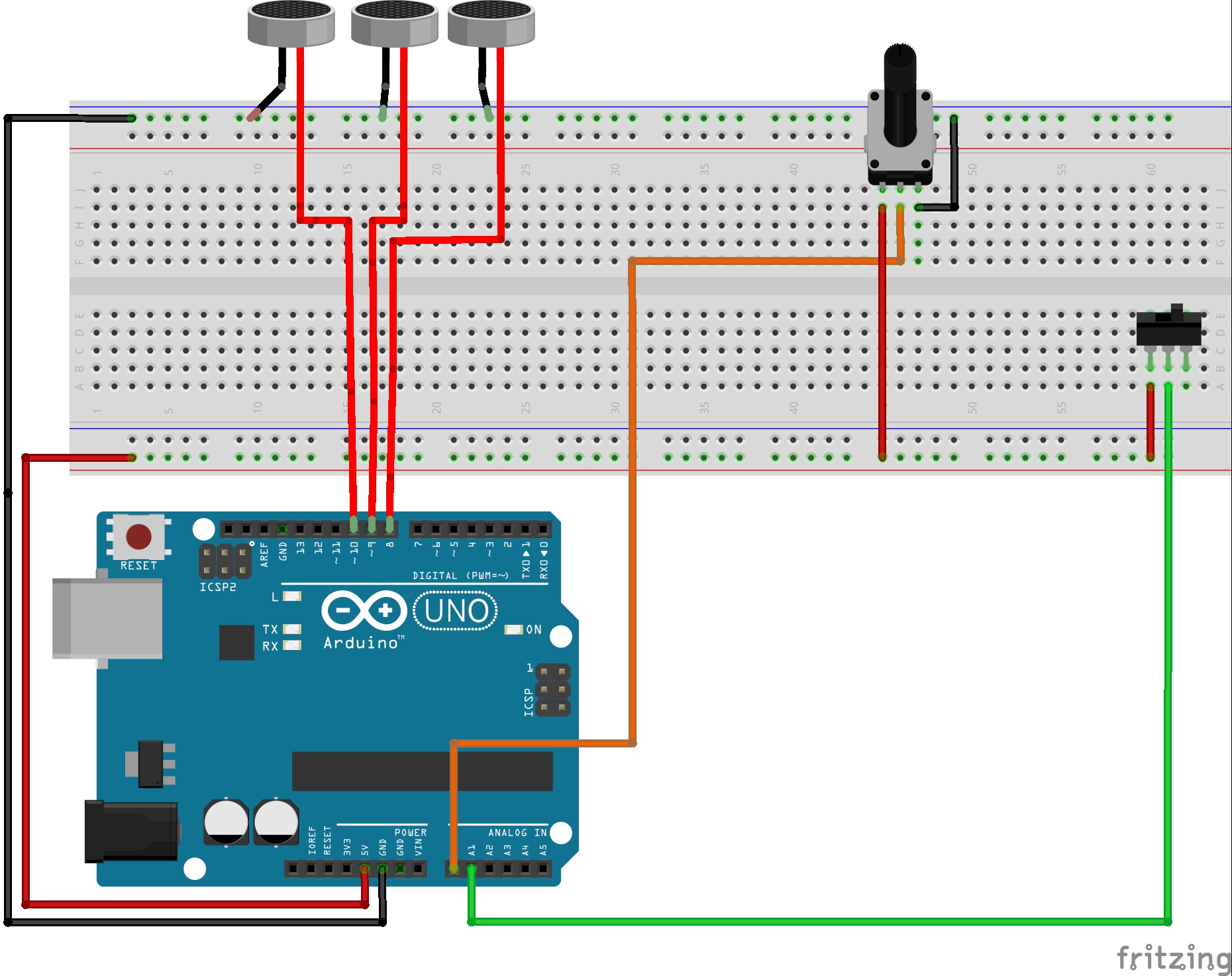

The prototype is an Arduino with a potentiometer to represent the velocity of the user and three vibration motors (tactors) to represent the color data. These physical sensors and actuators are connected to p5.js code which uses a video camera and mouse cursor to select the point of a live video feed to extract color from. The color is then sent to the Arduino where it is processed and sent as output signals to the tactors. A switch is also available for when the user doesn’t want to have vibrations clouding their mind.

Fritzing Sketch:

The Fritzing sketch shows how the potentiometer and switch are set up to feed information into the Arduino as well as how the tactors are connected to the Arduino to receive outputs. Not pictured: the Arduino would also have to be connected to the laptop that runs the p5.js code.

Proof of Concept Sketches:

The user’s velocity is sensed which alters the rate at which color is processed and sent back to the user through vibrations. The user data could also be extended to change the range of color sensing that is applied to the live feed to feel the colors of a general range.
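A minimal sketch of that relationship follows, in Python rather than the p5.js and Arduino code used in the prototype. The write-up does not say which direction the scaling runs, so this assumes a faster user gets more frequent samples over a wider area; the function names, the 100 to 500 ms interval range, and the 2 to 20 pixel radius range are all hypothetical.

```python
def sampling_plan(pot_reading, pot_max=1023):
    """Map the velocity potentiometer to (interval_ms, radius_px).

    Assumed direction: faster movement means sampling more often and
    averaging over a larger patch of the video frame.
    """
    speed = max(0, min(pot_reading, pot_max)) / pot_max
    interval_ms = round(500 - speed * 400)  # 500 ms at rest, 100 ms at full speed
    radius_px = round(2 + speed * 18)       # 2 px at rest, 20 px at full speed
    return interval_ms, radius_px


def average_color(pixels):
    """Average a list of (r, g, b) tuples; one channel per tactor."""
    n = len(pixels)
    return tuple(round(sum(p[i] for p in pixels) / n) for i in range(3))


print(sampling_plan(0))
print(sampling_plan(1023))
print(average_color([(200, 0, 0), (100, 0, 50)]))
```

The three averaged channel values (0 to 255) could then be written directly as PWM levels to the three tactors.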

Proof of Concept Video:

Files: