Problem

“Until now, we have always had to adapt to the limits of technology and conform the way we work with computers to a set of arbitrary conventions and procedures. With NUI(Natural User Interface), computing devices will adapt to our needs and preferences for the first time and humans will begin to use technology in whatever way is most comfortable and natural for us.”

—Bill Gates, co-founder of the multinational technology company Microsoft

I think that gesture control interface could have great potential to help people interact with computing devices naturally because gestures are inherently natural. Gestures are a huge part of communication and they contain a great amount of information, especially the conscious or unconscious intentions of the gesture-doers. They sometimes communicate more, faster, and stronger than other communication methods.

General Solution

In this perspective, I want to design a gesture user interface for a computer. When people are sitting on a chair in front of a computer, their body gesture (including posture or movement) shows their intentions very well. I did some research and I could find a few interesting gestures that people commonly use in front of a computer.

When people are interested in something or when they want to see something more closely, they lean forward to see something in detail. Reversely, when people lean backward on a chair with two hands on their heads staring at somewhere, it is easy to guess that they are contemplating or thinking on something. When they swing a chair repeatedly or shake legs, it means that they are losing interests and become distracted.

The same gestures could have different meanings. For example, leaning forward means the intention to see closer when people are looking at images, but it could mean the intention to see the previous frame again when they are watching a video.

I am going to build a gesture interaction system that could be installed on computers, desks, or chairs to recognize the gestures and movements of a user. According to a person’s gestures and surrounding contexts (what kind of contents he/she is watching, what time is it, etc), the computer will interpret the gestures differently and extract implicit intentions from them. This natural gesture user interface system could leverage user experience(UX) of using computing devices.

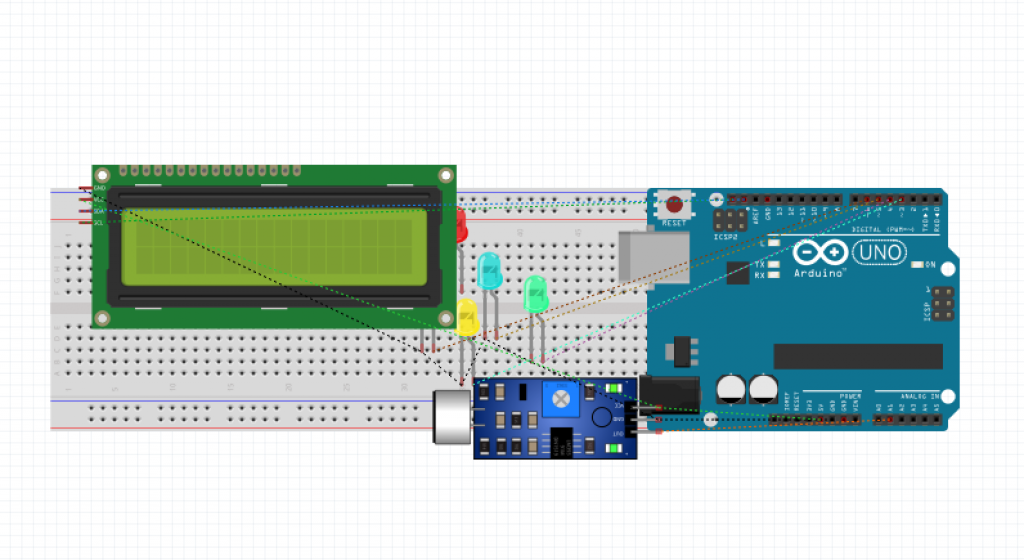

I am also considering to add haptic or visual feedback to show whether the computer understood the intention of the gestures of the user as input.

Proof of Concept

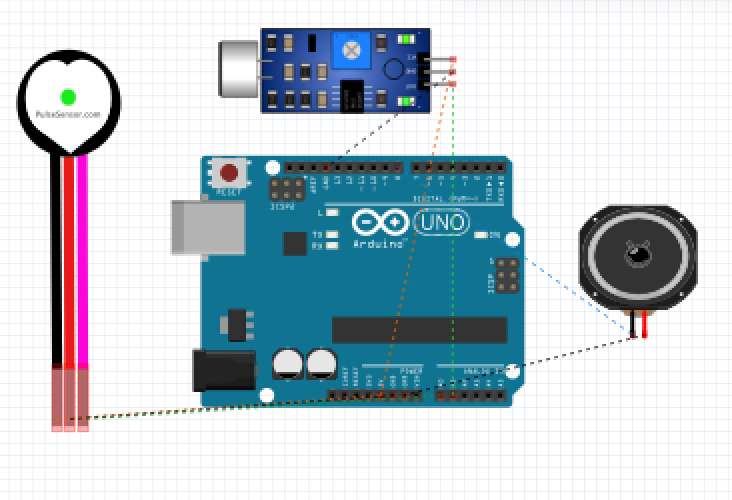

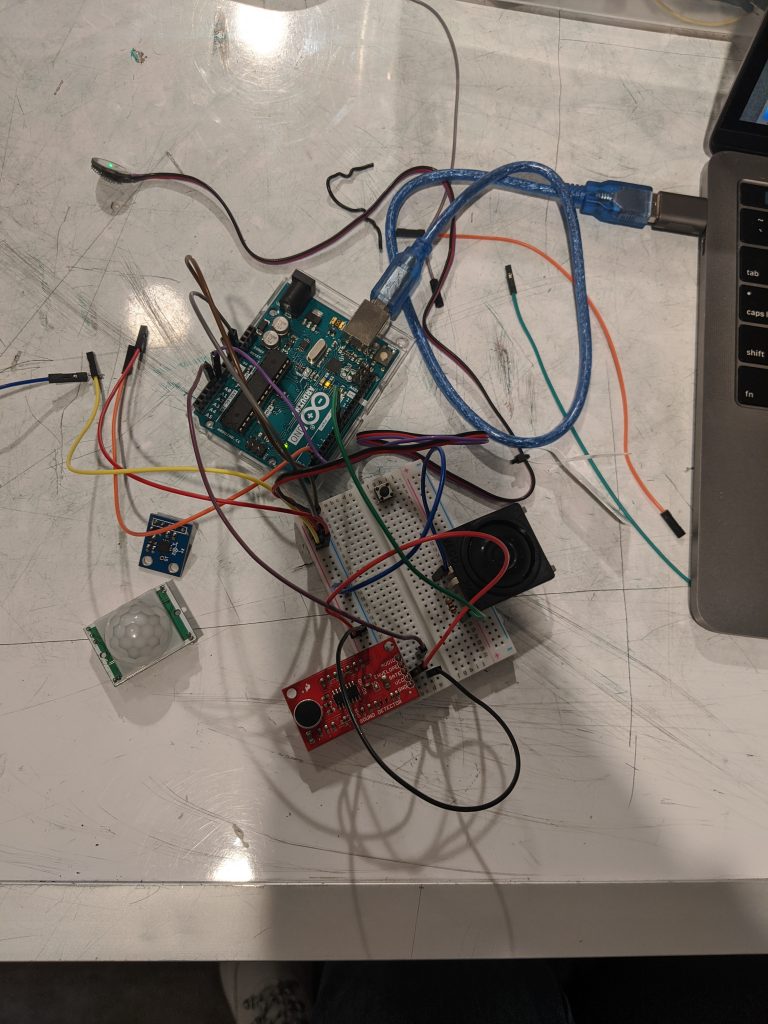

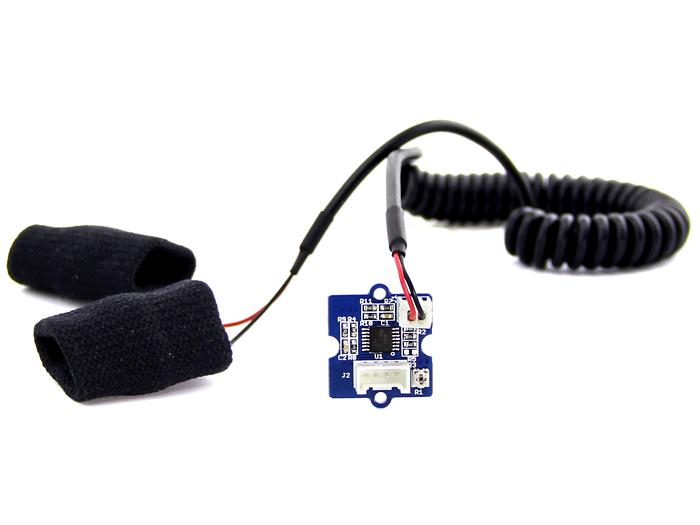

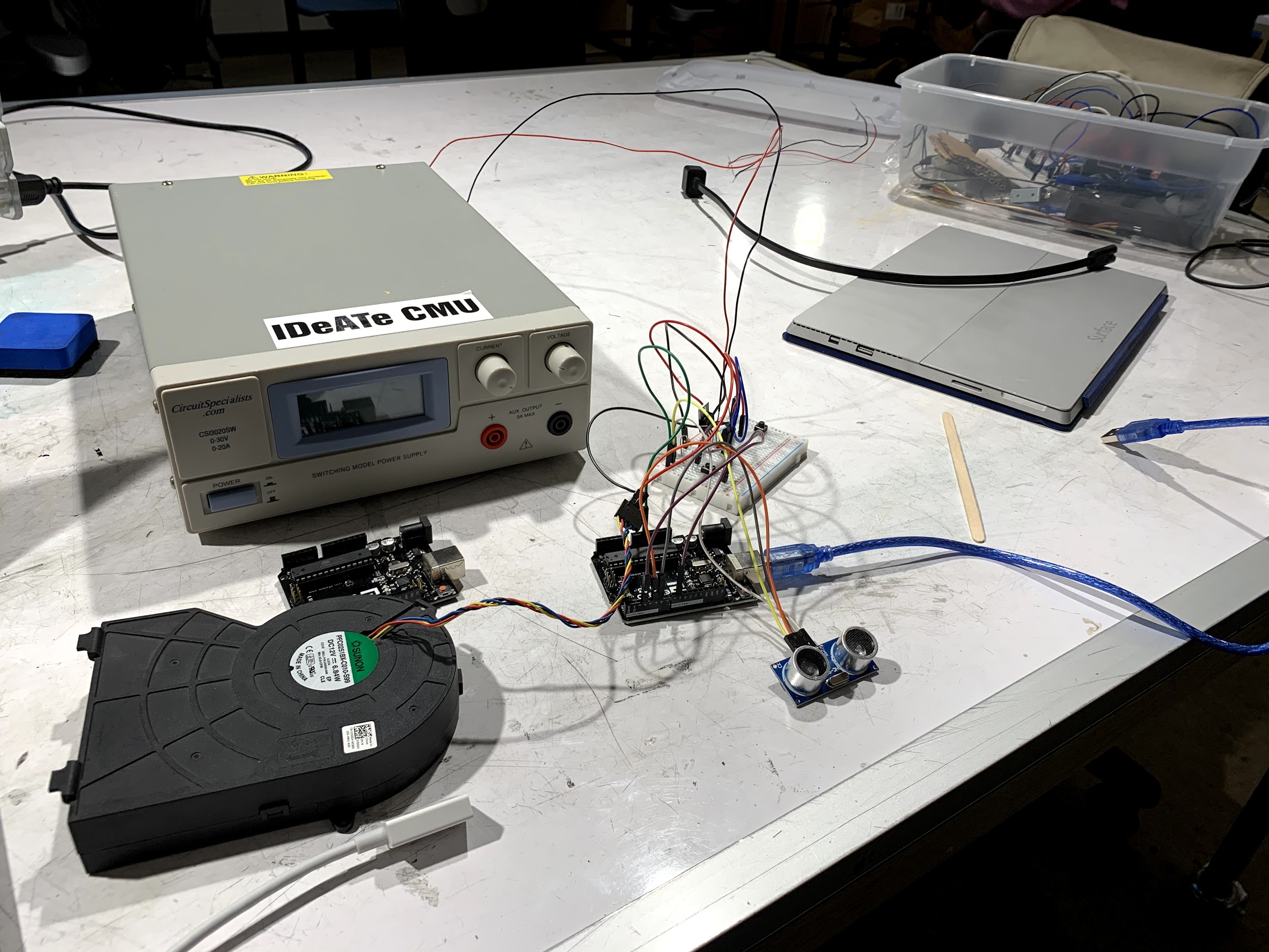

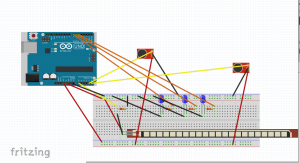

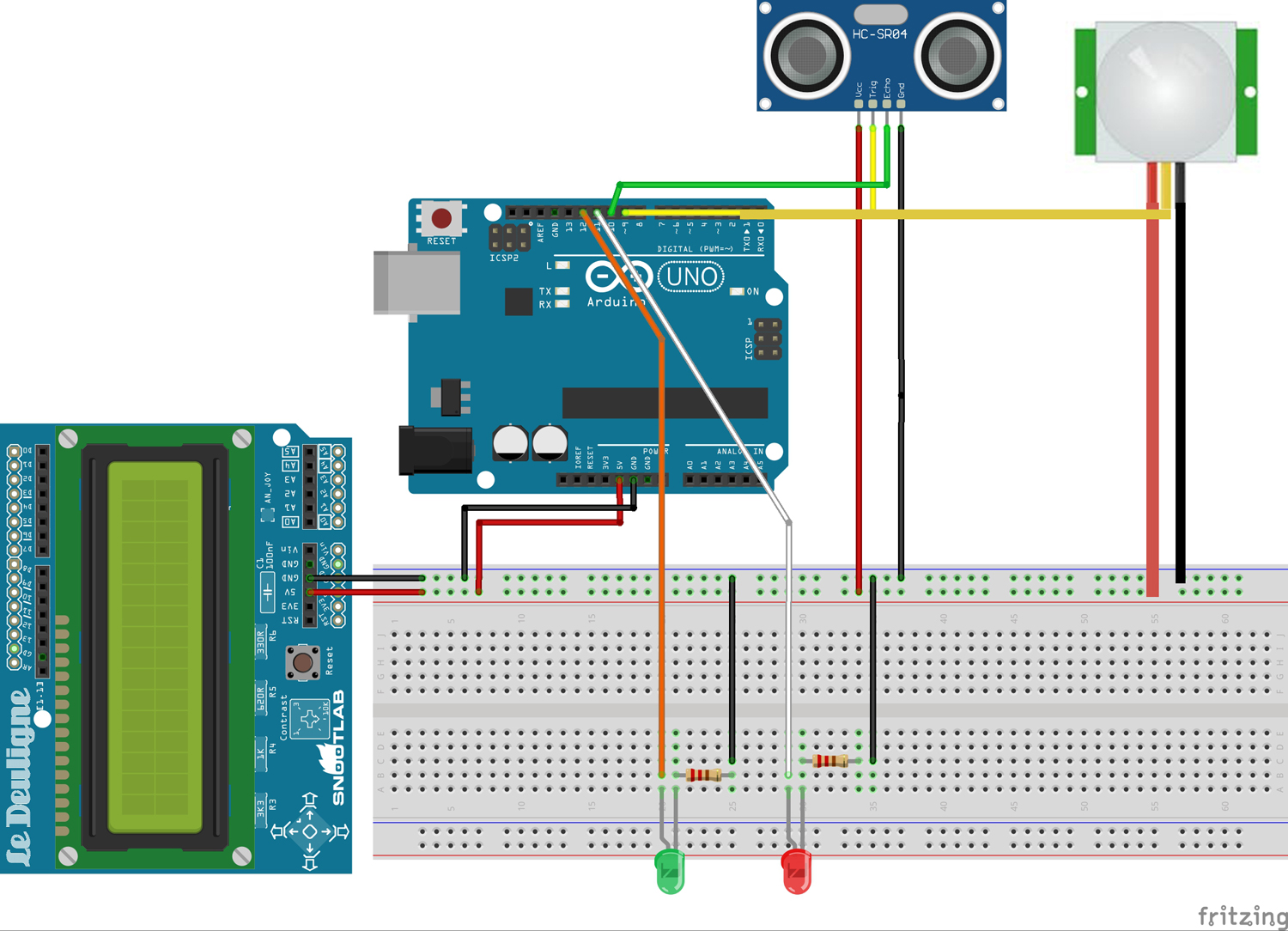

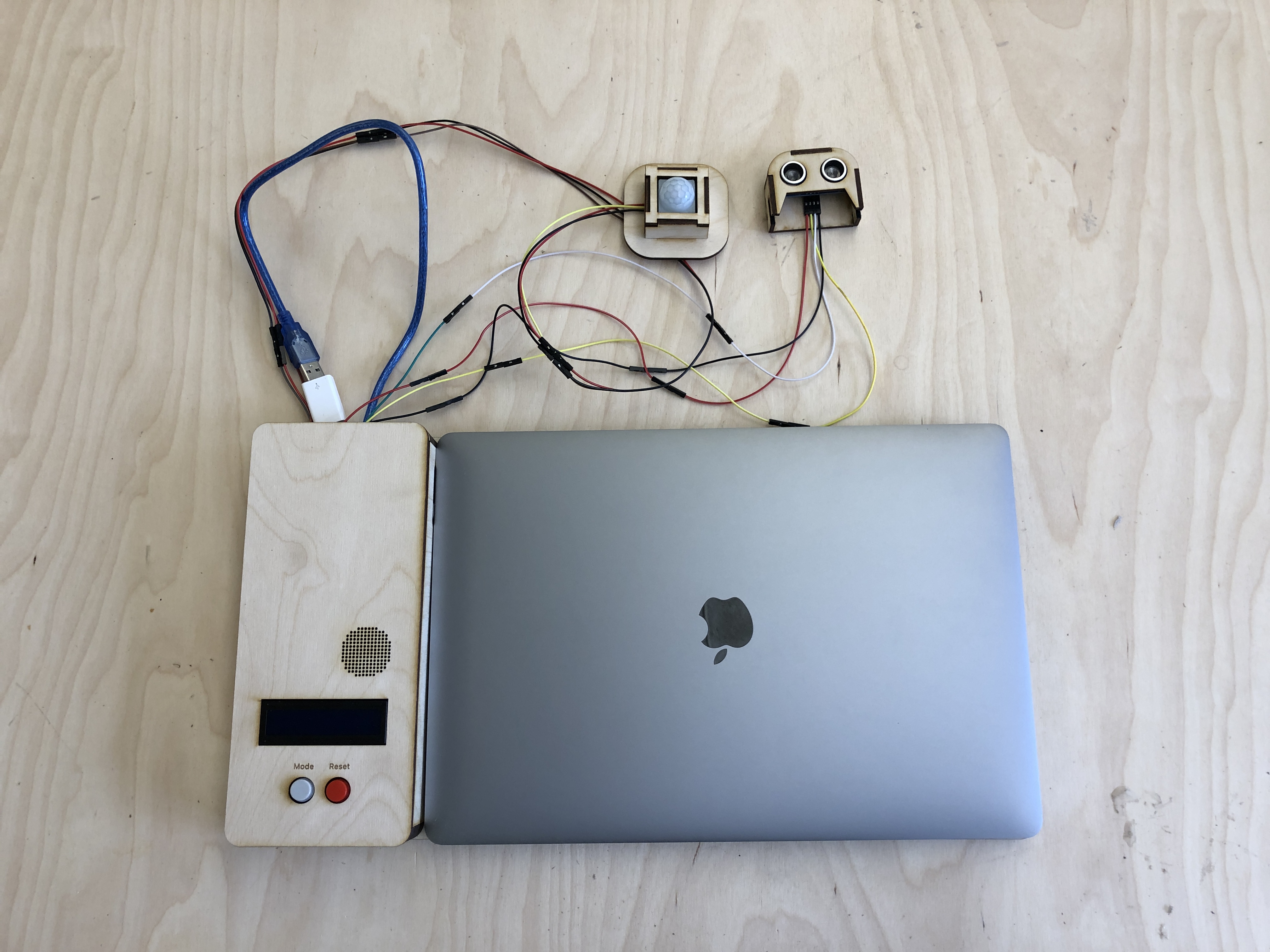

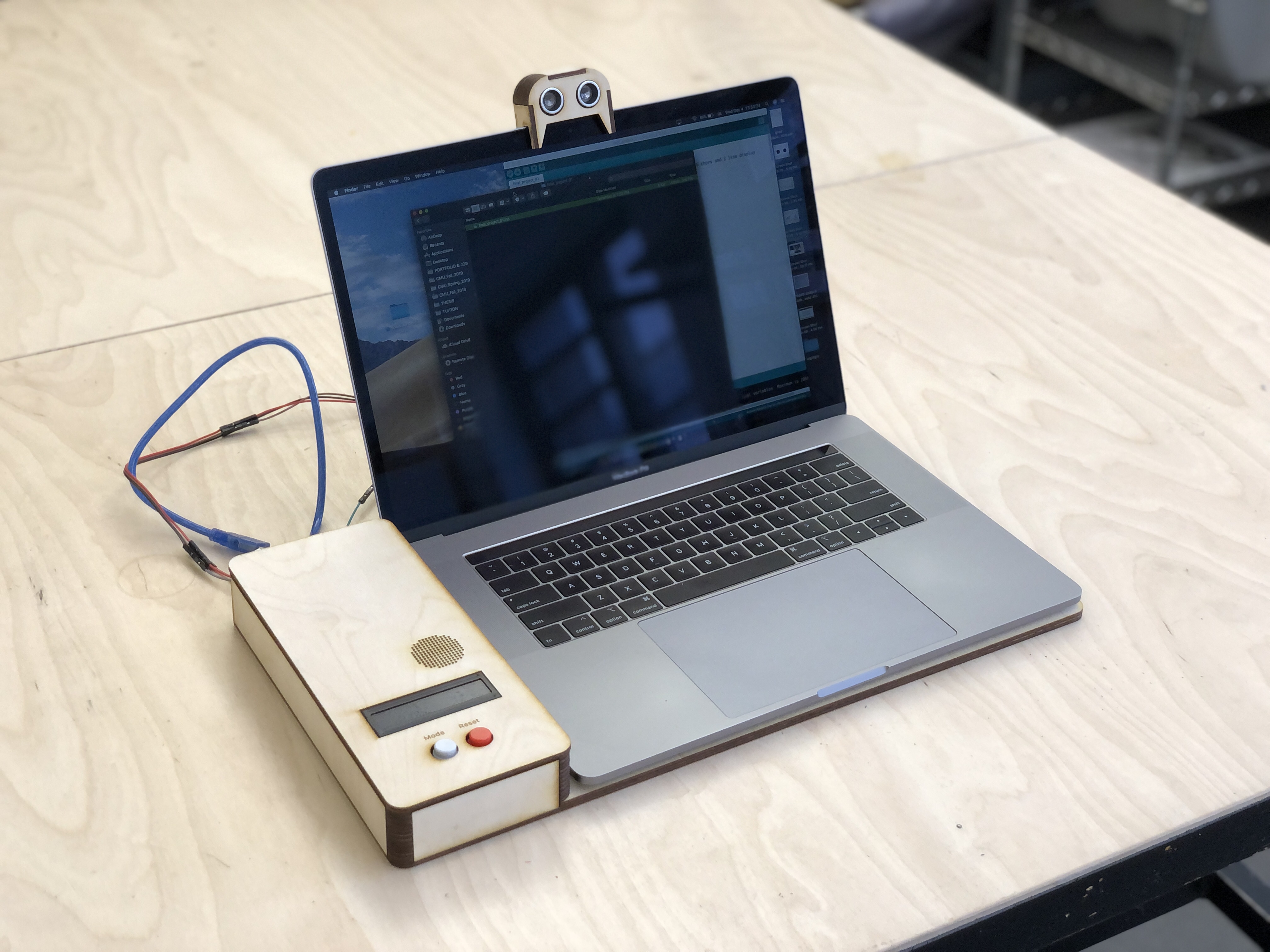

The system is composed of two main sensors. The motion sensor is attached under a desk so that it could detect the movements of legs. The ultrasonic sensor is attached to the monitor of a laptop so that it could detect the posture of a user, like an image below.

The lean forward gesture could be interpreted differently based on the contexts and the contents that a user is watching at that time. I conducted research and found correlations;

- When a user is seeing an image or reading a document, they lean forward with the intention to look something closer or in detail.

- When a user is watching a video, they lean forward with a surprise or an interest, with the intention to look at the recent scene again.

- When a user is working on multiple windows, they lean backward for thinking for a while or see the overview of all windows.

Based on this intention and the contexts, the system I designed responds differently. For the first case, it zooms in the screen. For the second case, it rewinds the scene. For the last situation, it shows all windows.

Also, the system could detect the distraction level of a user. The common gestures when people lose interest or become boring are shaking legs or staring at other places for a while. The motion sensor attached below the desk could detect the motion and when the motion keeps being detected more than a certain amount of time, the computer turns on music that could help a user focus.

Video & Codes

(PW: cmu123)