Realized this was stuck in my drafts since the assignment failed and was iterated into my second crit, the toaster. Putting this here for posterity, but most of it is available in my Crit #2 – Grumpy Toaster.

For this post:

Making Things Interactive, Fall 2019

“Until now, we have always had to adapt to the limits of technology and conform the way we work with computers to a set of arbitrary conventions and procedures. With NUI (Natural User Interface), computing devices will adapt to our needs and preferences for the first time and humans will begin to use technology in whatever way is most comfortable and natural for us.”

—Bill Gates, co-founder of the multinational technology company Microsoft

I think that gesture control interfaces have great potential to help people interact with computing devices naturally, because gestures are inherently natural. Gestures are a huge part of communication, and they carry a great deal of information, especially the conscious or unconscious intentions of the person gesturing. They sometimes communicate more, faster, and more strongly than other methods of communication.

From this perspective, I want to design a gesture user interface for a computer. When people sit in a chair in front of a computer, their body gestures (including posture and movement) reveal their intentions very well. I did some research and found a few interesting gestures that people commonly make in front of a computer.

When people are interested in something or want to see it more closely, they lean forward to see it in detail. Conversely, when people lean back in a chair with both hands on their heads, staring into space, it is easy to guess that they are contemplating or thinking about something. When they swing a chair repeatedly or shake their legs, it usually means that they are losing interest and becoming distracted.

The same gesture can have different meanings. For example, leaning forward signals the intention to look more closely when people are viewing images, but it could signal the intention to see the previous frame again when they are watching a video.

I am going to build a gesture interaction system that could be installed on computers, desks, or chairs to recognize the gestures and movements of a user. Depending on a person’s gestures and the surrounding context (what kind of content he or she is watching, what time it is, etc.), the computer will interpret the gestures differently and extract implicit intentions from them. This natural gesture user interface could improve the user experience (UX) of computing devices.

I am also considering adding haptic or visual feedback to show whether the computer has understood the intention behind the user’s gestures.

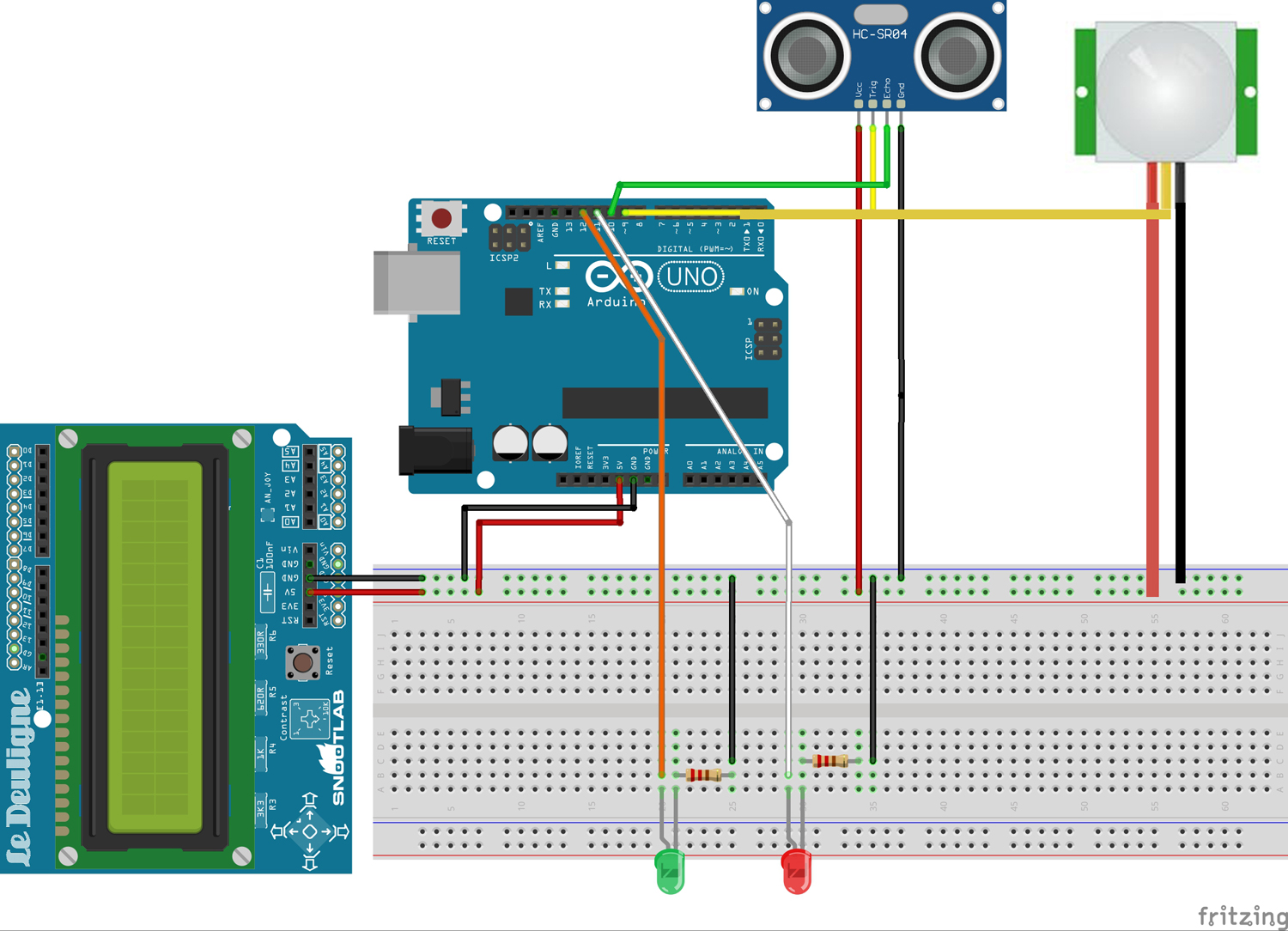

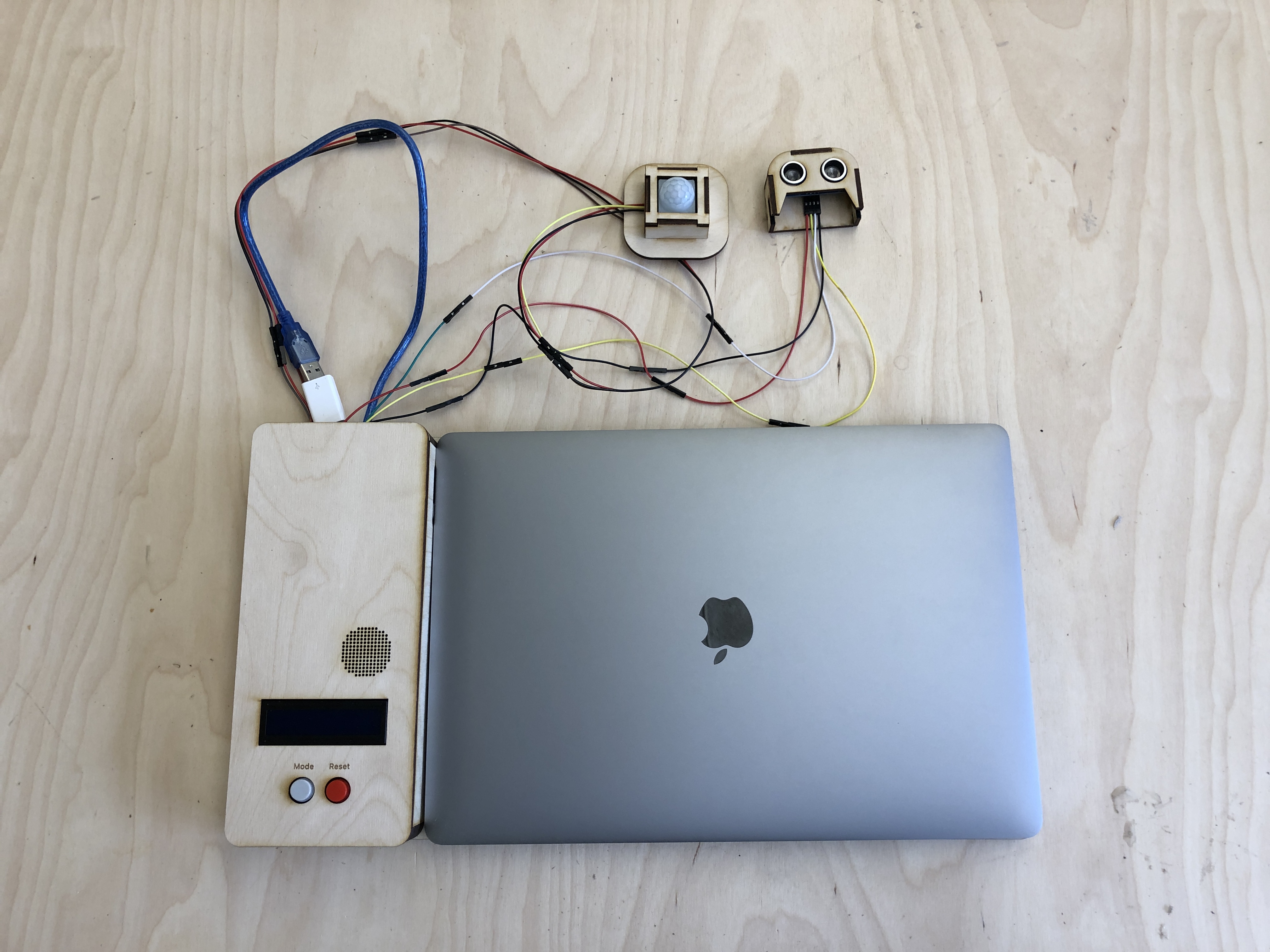

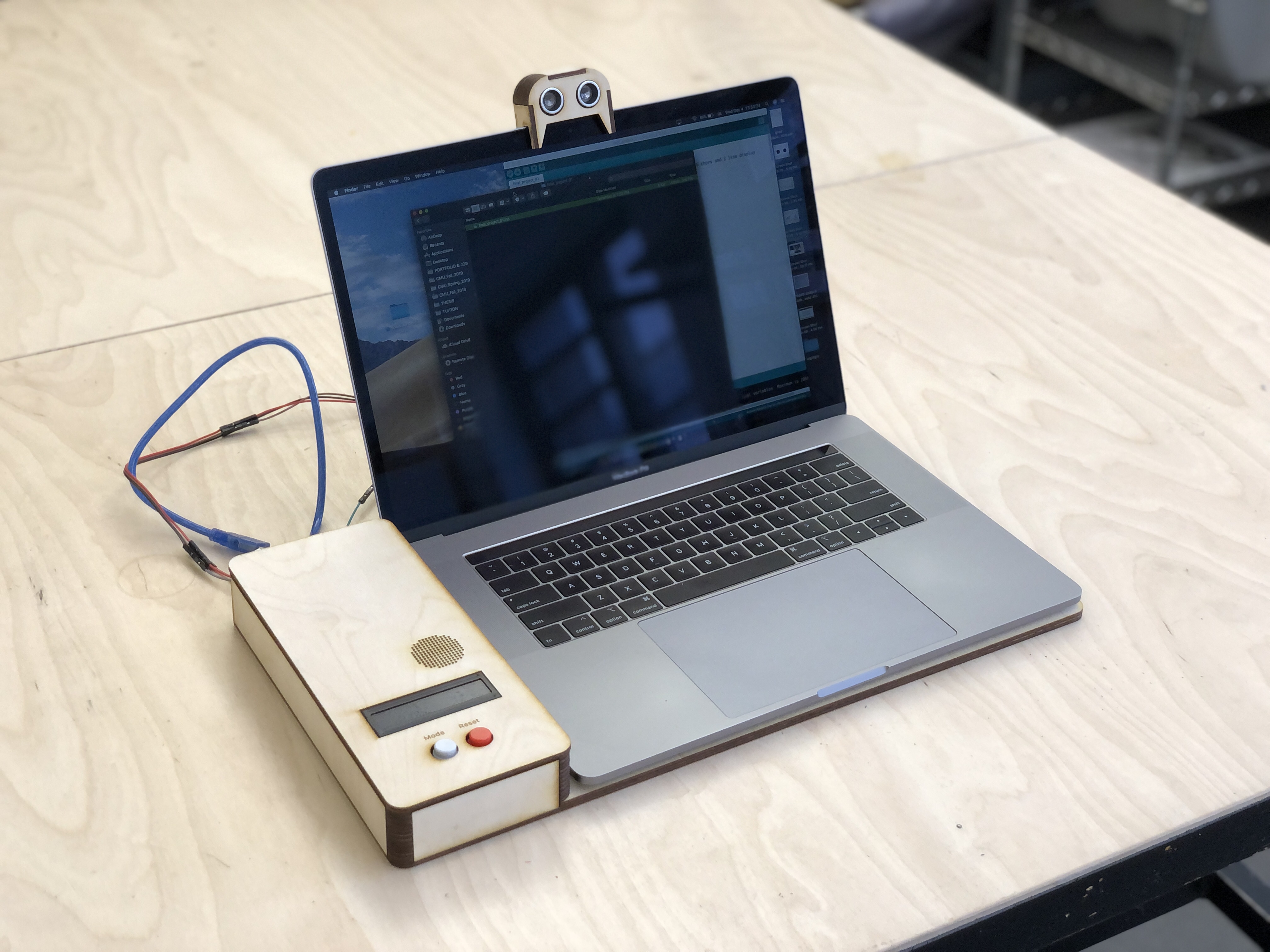

The system is composed of two main sensors. The motion sensor is attached under a desk so that it can detect leg movements. The ultrasonic sensor is attached to the laptop’s monitor so that it can detect the user’s posture, as illustrated in the images.

The lean-forward gesture could be interpreted differently depending on the context and the content the user is watching at the time. I conducted research and found correlations between these contexts and the intention behind the gesture.

Based on the inferred intention and context, the system I designed responds differently. In the first case, it zooms in on the screen. In the second case, it rewinds the scene. In the last case, it shows all windows.
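Here is a minimal sketch of how the lean-forward logic might look in code. It assumes an HC-SR04-style ultrasonic sensor and a lean threshold of 40 cm, and both of those are guesses rather than values from the post. The post only names image viewing and video playback as contexts, so the third context is a hypothetical placeholder (`Context::Other`).

```cpp
// Hypothetical content contexts. The post names image viewing and video
// playback; it does not say what the third context is, so "Other" is a placeholder.
enum class Context { Image, Video, Other };

enum class Action { None, ZoomIn, Rewind, ShowAllWindows };

// Assumed threshold: a user closer to the screen than this (in cm) is leaning forward.
constexpr double kLeanForwardCm = 40.0;

// Convert an HC-SR04-style echo pulse width (microseconds) to distance in cm.
// Sound travels about 0.0343 cm/us, and the pulse covers the round trip.
double echoMicrosToCm(unsigned long echoMicros) {
    return echoMicros * 0.0343 / 2.0;
}

bool isLeaningForward(double distanceCm) {
    // Arduino's pulseIn() returns 0 on timeout, so 0 is treated as "no reading".
    return distanceCm > 0.0 && distanceCm < kLeanForwardCm;
}

// Map the lean-forward gesture to an action that depends on the current context.
Action respondToLean(double distanceCm, Context context) {
    if (!isLeaningForward(distanceCm)) {
        return Action::None;
    }
    switch (context) {
        case Context::Image: return Action::ZoomIn;  // look more closely
        case Context::Video: return Action::Rewind;  // see the scene again
        case Context::Other: return Action::ShowAllWindows;
    }
    return Action::None;
}
```

Keeping the sensing step (`isLeaningForward`) separate from the context step (`respondToLean`) means new contexts can be added without touching the sensor code.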

The system can also detect a user’s level of distraction. Common signs that people have lost interest or become bored are shaking their legs or staring elsewhere for a while. The motion sensor attached below the desk detects this motion, and when motion is detected continuously for longer than a set amount of time, the computer turns on music that could help the user focus.
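The "motion for longer than a certain amount of time" rule could be sketched as a small timer. The post does not give the time limit, so the threshold is passed in as a parameter. The timestamps are assumed to come from something like Arduino's `millis()`.

```cpp
#include <cstdint>

// Tracks how long leg motion has been detected without interruption.
// The threshold is a hypothetical value chosen by the caller.
class DistractionDetector {
public:
    explicit DistractionDetector(uint32_t thresholdMs) : thresholdMs_(thresholdMs) {}

    // Call on every sensor poll. Returns true while the current uninterrupted
    // run of motion has lasted at least thresholdMs (i.e., music should play).
    bool update(uint32_t nowMs, bool motionDetected) {
        if (!motionDetected) {
            inMotion_ = false;  // any pause resets the run
            return false;
        }
        if (!inMotion_) {
            inMotion_ = true;
            motionStartMs_ = nowMs;  // a new run of motion starts now
        }
        // Unsigned subtraction stays correct across millis() wraparound.
        return nowMs - motionStartMs_ >= thresholdMs_;
    }

private:
    uint32_t thresholdMs_;
    uint32_t motionStartMs_ = 0;
    bool inMotion_ = false;
};
```

Resetting on any pause is a design choice. A more forgiving version might tolerate short gaps, because a PIR-style sensor can drop out briefly even while legs keep moving.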


Have you ever been at home and not wanted to interact with anyone, or wanted to scare off unwanted guests? What if you had a security system that works by scaring people away at the door?

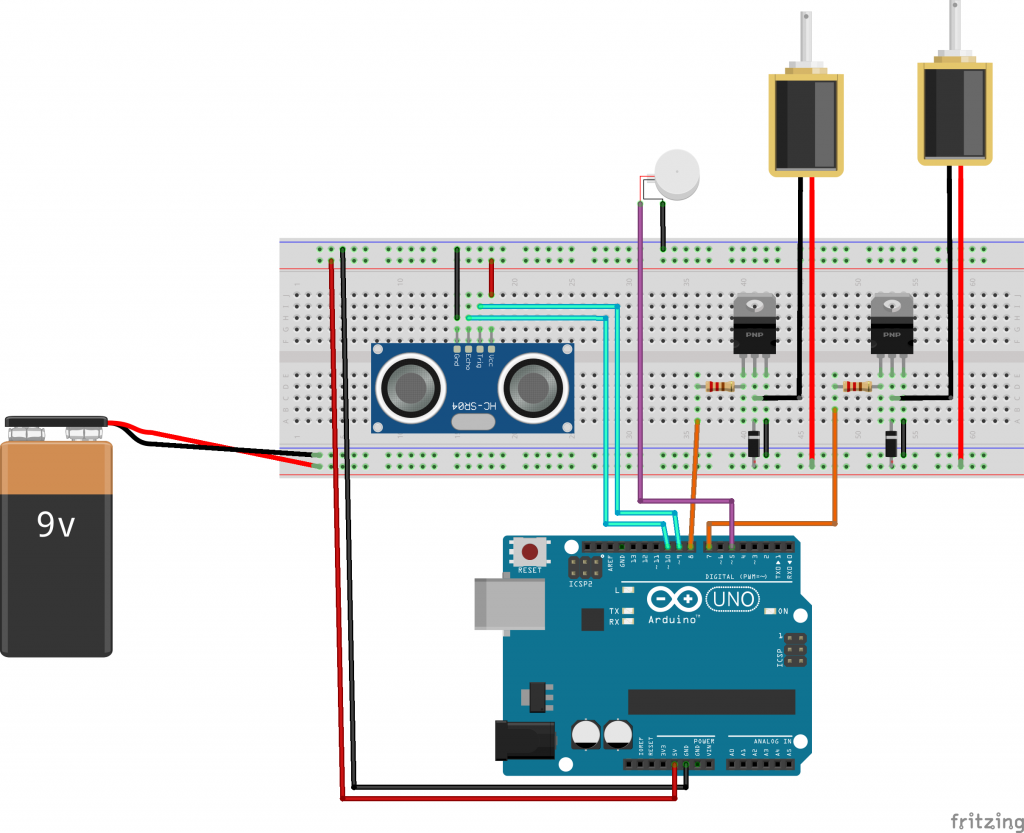

This is a system that scares people away using tapping and buzzing. The closer you get, the faster the tapping. If you get really close, the doorknob vibrates. The idea is that it only scares people who don’t see it coming (i.e., uninvited people who won’t leave you alone). If you know about the system, it won’t scare you, and the only way you would know about it is if you are the person who set it up or an invited guest who has been told about it.
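The distance-to-tapping behavior could be sketched as a simple mapping. The post gives no numbers, so the following values are hypothetical: a 300 cm range, a 30 cm "really close" band, and tap intervals between 100 and 1000 ms. The distance would presumably come from an ultrasonic or similar range sensor.

```cpp
// Hypothetical distance bands (cm) and timings; the post gives no numbers.
constexpr double kMaxRangeCm = 300.0;  // beyond this, stay silent
constexpr double kVibrateCm = 30.0;    // "really close": vibrate the doorknob
constexpr long kSlowestTapMs = 1000;   // tap interval at the edge of the range
constexpr long kFastestTapMs = 100;    // tap interval when very close

struct DoorResponse {
    long tapIntervalMs;  // 0 means no tapping
    bool vibrateKnob;
};

DoorResponse respondToDistance(double distanceCm) {
    if (distanceCm <= 0.0 || distanceCm > kMaxRangeCm) {
        return {0, false};  // nobody in range (or no valid reading)
    }
    // Linear interpolation: far away -> slow taps, close -> fast taps.
    double t = (distanceCm - kVibrateCm) / (kMaxRangeCm - kVibrateCm);
    if (t < 0.0) {
        t = 0.0;  // inside the vibrate band, keep tapping at the fastest rate
    }
    long interval = kFastestTapMs +
        static_cast<long>(t * (kSlowestTapMs - kFastestTapMs));
    return {interval, distanceCm <= kVibrateCm};
}
```

A linear ramp is only one option. An exponential ramp would make the last few steps toward the door feel more urgent.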

People are often unprepared for the day’s weather, and when getting ready in the morning, they rarely check the weather until after they are already dressed. How can this process be made more efficient?

An emotional notification system that informs users of the weather using sound, vibration, and light would grab sleep-weary users’ attention while communicating the types of clothing and gear they will need for the day.

A solenoid, a tactor, and a servo would serve as indicators for weather and temperature. Each is paired with an LED to further communicate the weather state emotionally. The solenoid’s LED pulses twice, and then the solenoid strikes twice, simulating lightning and thunder. The tactor and its LED “shiver,” indicating cold weather. The servo slowly rocks back and forth, indicating warm weather.
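The choice of indicator could be sketched as a small decision function. Three things here are assumptions, not details from the post: the temperature thresholds (10 °C and 20 °C), the rule that a storm forecast overrides temperature, and the "no indicator" result for mild weather. The storm pattern follows the description (two LED pulses, then two solenoid strikes).

```cpp
#include <string>
#include <vector>

enum class Indicator { Solenoid, Tactor, Servo, None };

// Hypothetical thresholds: the post does not say where "cold" and "warm" begin.
constexpr double kColdBelowC = 10.0;
constexpr double kWarmAboveC = 20.0;

// Assumed priority: a storm forecast wins over temperature.
Indicator chooseIndicator(bool stormForecast, double tempC) {
    if (stormForecast) return Indicator::Solenoid;      // lightning and thunder
    if (tempC < kColdBelowC) return Indicator::Tactor;  // "shivering"
    if (tempC > kWarmAboveC) return Indicator::Servo;   // slow rocking
    return Indicator::None;                             // mild weather
}

// Storm pattern: the LED pulses twice ("lightning"), then the solenoid strikes twice ("thunder").
std::vector<std::string> stormSequence() {
    return {"led", "led", "solenoid", "solenoid"};
}
```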

For gym enthusiasts lifting heavy weights, bad form can result in weeks away from the gym. However, injuries are not always instant; even a slightly odd angle in a squat, repeated over time, can result in debilitating pain.

A smart weight that “talks” to the user using sound would be ideal for helping correct lifting form. Gyroscopic sensors that detect movement, angle, and altitude can be used across a variety of exercises to determine proper form. If the user’s form is off, the weight will speak to them, making a different sound for each aspect of form that is incorrect.

Proof of Concept

A gyroscope and microphone simulate a barbell weight. When the bar is tilted left, the pitch of the sound goes up; when it is tilted right, the pitch goes down. A button simulates grip, telling the system to begin recording accelerometer data.
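The tilt-to-pitch behavior could be sketched as a linear mapping. These values are hypothetical: a 440 Hz base tone, 10 Hz per degree, and a ±30° clamp. The sign convention (positive roll means tilted left) is also an assumption. The grip button gates the output, which mirrors its role of starting the recording.

```cpp
#include <algorithm>

// Hypothetical constants: a base tone and how many Hz each degree of tilt adds.
constexpr double kBaseHz = 440.0;
constexpr double kHzPerDegree = 10.0;
constexpr double kMaxTiltDeg = 30.0;

// rollDeg > 0 means the bar is tilted left (assumed sign convention).
// Returns 0 (silence) while the grip button is not pressed.
double tiltToPitchHz(double rollDeg, bool gripPressed) {
    if (!gripPressed) {
        return 0.0;
    }
    // Clamp so an extreme reading cannot produce an unusable tone.
    double clamped = std::max(-kMaxTiltDeg, std::min(rollDeg, kMaxTiltDeg));
    return kBaseHz + clamped * kHzPerDegree;  // left -> higher, right -> lower
}
```

On an Arduino, the returned frequency could be passed to `tone()` after rounding to an integer.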

I kept working on the Leap Motion sensor this week, too. The problem I focused on was recognizing hand signals for traffic. When road reconstruction is going on or a traffic accident happens, ordinary people or police officers often direct traffic temporarily. However, this can be dangerous and cause additional accidents, because hand signals are sometimes difficult to notice from a distance.

I came up with multi-sensory feedback (for this assignment, auditory feedback) for hand signals on the road. A device attached to the front of a car could read the gestures of people on the road and make different sounds according to the signals. A driver could then notice the gestures more precisely, easily, and quickly. I believe this system could help prevent accidents. It could also be installed in autonomous cars, which could then read and react to the signals automatically.

Because of the limited options in the SDK, I could only implement pinch and fist gestures to make sounds. For the fist gesture, I used two different sounds depending on timing: the first and second fist gestures sound different. I thought that hand signals could be a series of movements, and this feature could read such sequences and make different sounds.
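The "first fist and second fist sound different" idea could be sketched as a small state machine that sits on top of whatever gesture events the SDK reports. The sound names are hypothetical placeholders. Alternating between two fist sounds is one reading of the description, not necessarily how the original code works.

```cpp
#include <string>

enum class Gesture { Pinch, Fist };

// Hypothetical sound names; the post does not name the actual sound files.
class GestureSoundMapper {
public:
    std::string soundFor(Gesture g) {
        if (g == Gesture::Pinch) {
            return "pinch_tone";
        }
        // Alternate between two sounds so consecutive fists are distinguishable.
        ++fistCount_;
        return (fistCount_ % 2 == 1) ? "fist_tone_1" : "fist_tone_2";
    }

private:
    int fistCount_ = 0;
};
```

To read longer signal sequences, the counter could be reset after a period with no gestures, so that each new sequence starts from the first sound again.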

Video-Jay_Assignment_08_gesture_sound

Codes-Jay_Assignment_08_gesture_sound

This project was very challenging for me, so I decided to take a different approach to the problem. As an engineer, I find it very difficult to think about physical structures. With this in mind, I designed the structure’s form first, before deciding how I would use it, and then modified it to fit exactly what I needed.

Problem

A concept that is difficult for kids, and adults, to grasp is how close they are to their goal. Visualization is often a good technique for understanding where one stands. I decided to use visualization paired with sound effects to help users understand their progress toward their goals.

Solution

To accomplish this, I used an Arduino Uno, a push button, a servo motor, some marbles, and a glass bottle. The idea is that whenever users make progress toward their goal, they can press the button and a marble will be added to the bottle. This gives the user the satisfaction of pushing the button and hearing the clink of the marble in the bottle. Additionally, the user can always glance at the bottle to see their progress.
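For one push to drop exactly one marble, the button needs debouncing and edge detection. Here is a minimal sketch of that logic, assuming timestamps from something like `millis()` and a caller-chosen debounce window. Driving the servo on a `true` result is left to the Arduino sketch and is not shown.

```cpp
#include <cstdint>

// Detects a debounced released -> pressed transition so one push drops one marble.
class MarbleButton {
public:
    explicit MarbleButton(uint32_t debounceMs) : debounceMs_(debounceMs) {}

    // Call every loop with the raw button reading.
    // Returns true exactly once per debounced press.
    bool update(uint32_t nowMs, bool rawPressed) {
        if (rawPressed != lastRaw_) {
            lastRaw_ = rawPressed;
            lastChangeMs_ = nowMs;  // restart the settling timer on any bounce
        }
        if (nowMs - lastChangeMs_ >= debounceMs_ && rawPressed != stable_) {
            stable_ = rawPressed;  // reading has been steady long enough
            if (stable_) {
                ++marbles_;
                return true;  // new press: time to release a marble
            }
        }
        return false;
    }

    int marblesDropped() const { return marbles_; }

private:
    uint32_t debounceMs_;
    uint32_t lastChangeMs_ = 0;
    bool lastRaw_ = false;
    bool stable_ = false;
    int marbles_ = 0;
};
```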

Possible Improvements

In today’s world, there are many carefully designed alarm tones meant to be played from mobile phones or other speakers to wake users up slowly and gradually. However, some people are such deep sleepers that these alarms have no effect, and something much stronger and more visceral is required.

With this project, I sought to create a visceral sound using kinetic output that is loud and jarring enough to wake even the deepest of sleepers. To do this, I drew on my own experiences with balloons, and how, when one pops, everyone in the room is stunned into silence. I used a servo motor with an attached pin as the actuator, and I added a timer and a start button using a simple potentiometer and a push button. With these, users can set a timer that ends with the loud pop of a balloon.
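The potentiometer-and-button timer could be sketched as follows. It assumes a 10-bit `analogRead()` value (0 to 1023) and a hypothetical range of 1 to 60 minutes, since the post does not state one. `shouldPop` fires once, at the moment the servo should swing the pin into the balloon.

```cpp
#include <cstdint>

// Hypothetical range: the post does not state the timer's minimum or maximum.
constexpr uint32_t kMinTimerMs = 60UL * 1000UL;         // 1 minute
constexpr uint32_t kMaxTimerMs = 60UL * 60UL * 1000UL;  // 60 minutes

// Map a 10-bit potentiometer reading (0-1023) linearly onto the timer range.
uint32_t potToDurationMs(int potReading) {
    if (potReading < 0) potReading = 0;
    if (potReading > 1023) potReading = 1023;
    // 64-bit intermediate avoids overflow in the multiplication.
    return kMinTimerMs + static_cast<uint32_t>(
        static_cast<uint64_t>(potReading) * (kMaxTimerMs - kMinTimerMs) / 1023);
}

class BalloonTimer {
public:
    // Called when the start button is pressed.
    void start(uint32_t nowMs, uint32_t durationMs) {
        startMs_ = nowMs;
        durationMs_ = durationMs;
        running_ = true;
    }

    // Returns true once, when the time is up and the servo should pop the balloon.
    bool shouldPop(uint32_t nowMs) {
        if (running_ && nowMs - startMs_ >= durationMs_) {
            running_ = false;
            return true;
        }
        return false;
    }

private:
    uint32_t startMs_ = 0;
    uint32_t durationMs_ = 0;
    bool running_ = false;
};
```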

Problem: I’ve driven off after my friends have taken stuff out of my car trunk but before they’ve closed it. Thinking about it, the problem is baked into my usual wait period when dropping someone off, the weirdness of hearing the trunk thunk when you’re not the one opening or closing it, and the fact that it all happens directly behind you. Audio feedback would be a good way to help the driver tell the trunk’s state when they are not the one operating it.

Solution: More or less, different audio cues based on trunk status. Traditionally, someone slaps the side of the car a few times (slap, slap, slap) to mean “you’re good to drive off now,” but this could be communicated better. It is important that this does not confuse the driver, though; it should be noticeably distinct from any relevant “trunk open” or “door ajar” sounds they may also be hearing during normal trunk procedures. So the system uses kinetic sound when the trunk is closed, and more electronic audio when the trunk is ajar or being fiddled with. The former is accomplished with a solenoid; the latter, with a normal speaker and, ideally, a weight sensor.
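The mapping from trunk state to cue could be sketched like this. Several details are assumptions rather than part of the post: the three states, the 0.5 kg weight-change threshold for "being handled," and the choice to play each cue only once when the state changes.

```cpp
#include <cmath>

enum class TrunkState { Closed, Ajar, BeingHandled };
enum class Cue { None, SolenoidTaps, SpeakerTone };

// Assumed threshold: a weight change above this (kg) means someone is loading or unloading.
constexpr double kHandlingKg = 0.5;

TrunkState classifyTrunk(bool latchClosed, double weightChangeKg) {
    if (latchClosed) {
        return TrunkState::Closed;
    }
    return std::fabs(weightChangeKg) > kHandlingKg ? TrunkState::BeingHandled
                                                   : TrunkState::Ajar;
}

// Emits a cue only when the state changes, so each transition is heard once.
class TrunkCuer {
public:
    Cue update(TrunkState s) {
        if (s == last_) {
            return Cue::None;
        }
        last_ = s;
        // Kinetic "slap slap" for closed; electronic tone for ajar or handled.
        return s == TrunkState::Closed ? Cue::SolenoidTaps : Cue::SpeakerTone;
    }

private:
    TrunkState last_ = TrunkState::Closed;
};
```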

Proof of Concept:

Problem:

In both private and public settings, physically actuated faucets can be confusing when users also encounter automatic, motion-actuated faucets in their daily lives (is this just me?). People often forget to shut off the faucet, especially completely, and this can waste a significant amount of water depending on how long it runs. Because the sound of water is easily tuned out given how often we hear it, it is important to communicate this information to users, whether for the environment’s or the bill payer’s sake. In addition, most people use (I hope) soap when they wash their hands, but they don’t need the water running while they do, so ideally the water should be shut off briefly during that time to save water as well.

A General Solution:

A device that represents the flow of water using percussion to signal when it is still running. The tempo of the percussion should convey whether water is running. Ideally, it should be a sound that is distinct from the sound of water running from a faucet. It should also take other events into account, such as soap being dispensed. Potential augmentations could include sensing when people are standing in front of the sink vs. leaning to dispense soap, or other tracking of the user’s position to understand when the user does or doesn’t need water.

Proof of Concept:

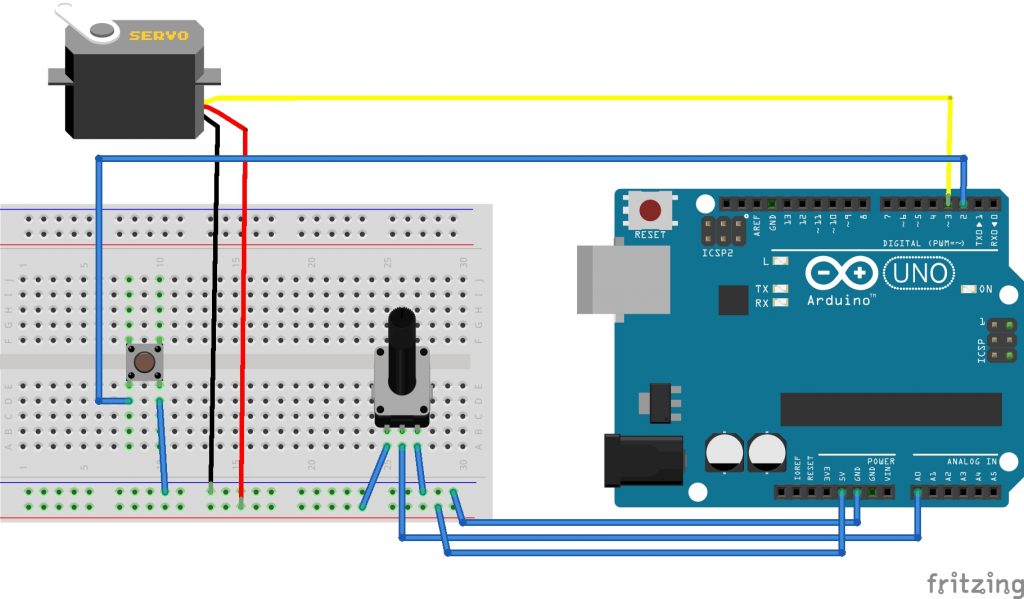

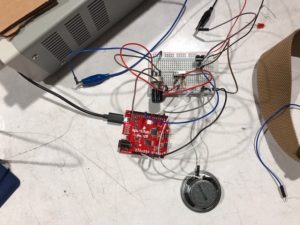

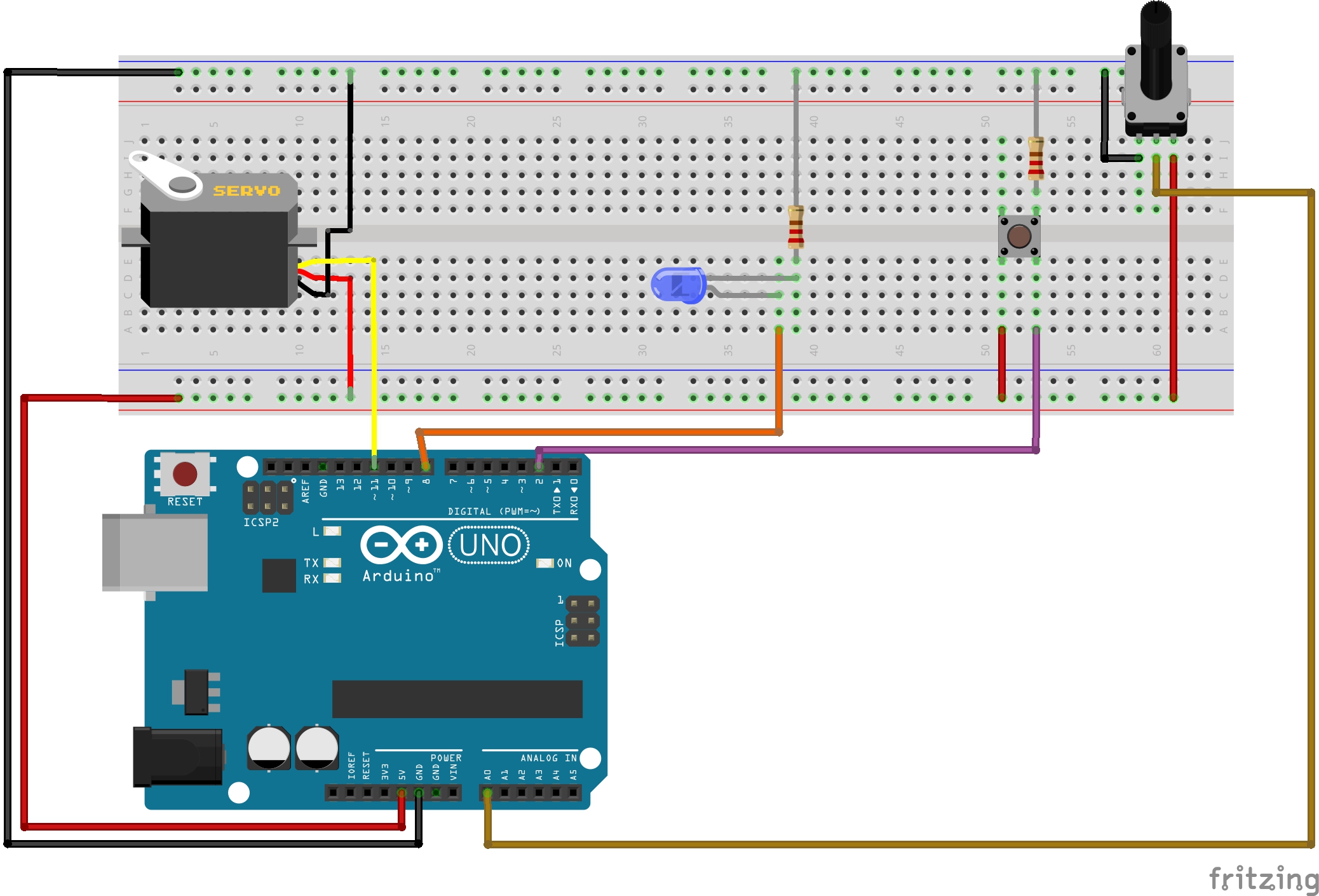

The proof of concept is an Arduino with a potentiometer to represent the faucet being turned on and off, an LED to represent the water (whether it is running or not), and a switch/button to represent soap being dispensed. Turning the potentiometer up causes a servo to sweep faster, hitting straws to create a beat. The button switches the LED on and off regardless of the potentiometer; when the button is not pressed, the LED shows the potentiometer’s reading of how much water the user wants.
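Here is a sketch of the input-to-output logic, assuming a 10-bit potentiometer reading and hypothetical values for the sweep timing and the "closed" threshold, since the post gives none. Pressing the soap button turns the LED (the "water") off. The servo keeps following the knob, which is one reading of the description above.

```cpp
// Hypothetical values; the post gives no timings or thresholds.
constexpr long kSlowestSweepMs = 1500;  // barely open: slow beat
constexpr long kFastestSweepMs = 200;   // fully open: fast beat
constexpr int kOffThreshold = 20;       // readings below this count as "faucet closed"

struct FaucetOutput {
    bool ledOn;          // stands in for water running
    int ledBrightness;   // 0-255, suitable for analogWrite()
    long sweepPeriodMs;  // time per servo sweep; 0 means the drum is silent
};

// potReading is a 10-bit analogRead() value (0-1023); soapPressed is the button.
FaucetOutput faucetOutput(int potReading, bool soapPressed) {
    if (potReading < kOffThreshold) {
        return {false, 0, 0};  // faucet closed: no water, no drumming
    }
    if (potReading > 1023) {
        potReading = 1023;
    }
    // Opening wider gives a shorter sweep period, so the beat on the straws gets faster.
    long period = kSlowestSweepMs -
        static_cast<long>(potReading) * (kSlowestSweepMs - kFastestSweepMs) / 1023;
    if (soapPressed) {
        // Water pauses while soap is dispensed; the servo still follows the knob.
        return {false, 0, period};
    }
    return {true, potReading / 4, period};  // 0-1023 scaled to 0-255
}
```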

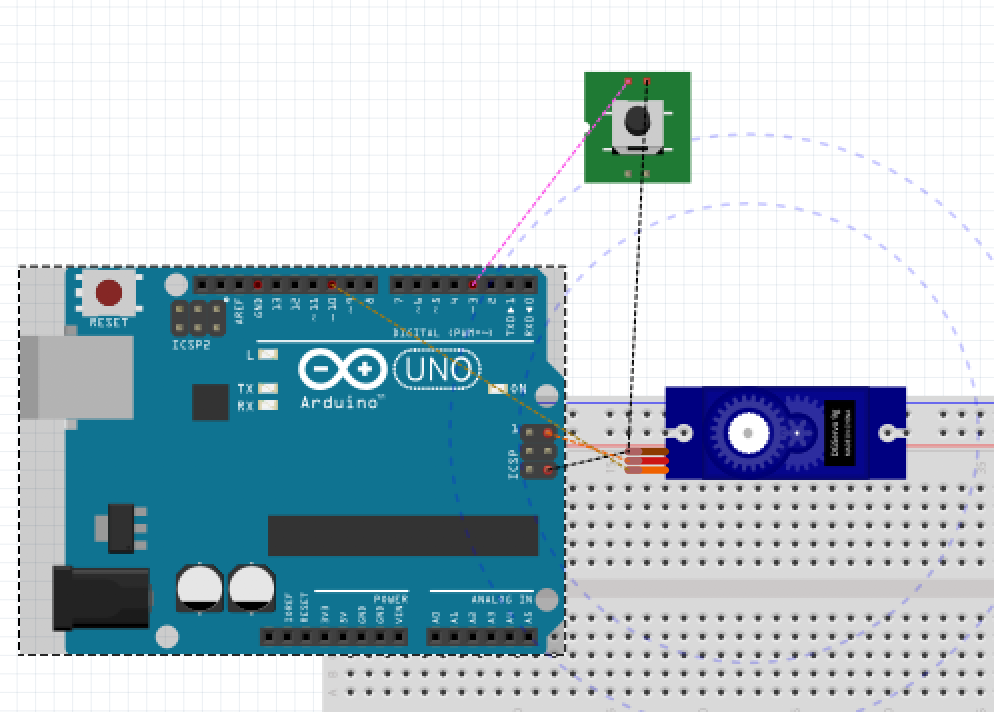

Fritzing Sketch:

The Fritzing sketch shows how the potentiometer and button are set up to feed information into the Arduino, and how the LED and servo are connected to the Arduino to receive outputs. Not pictured: the Arduino must also be connected to a power source.

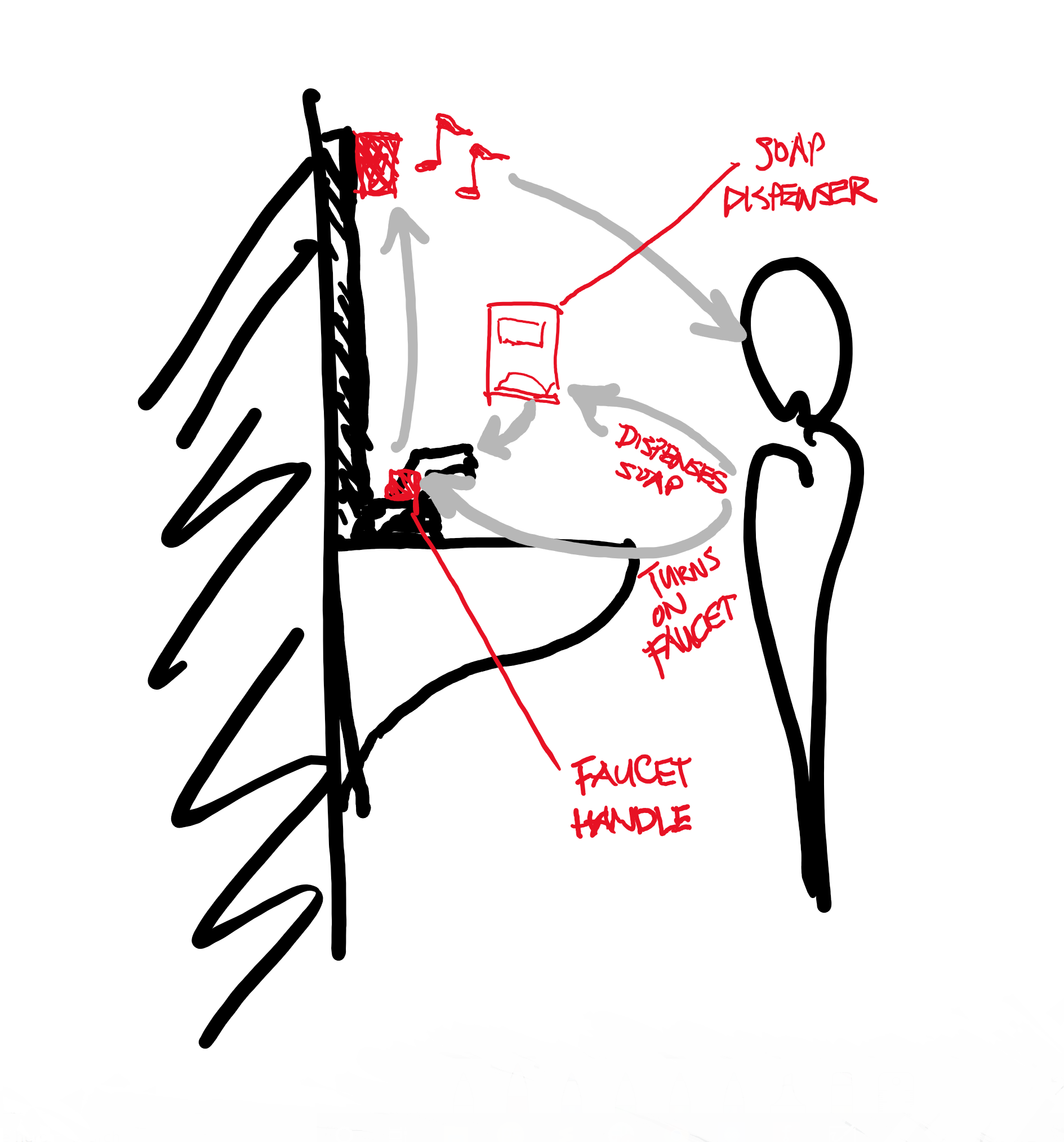

Proof of Concept Sketches:

The user turns on the faucet and is met with a drumming that conveys the flow of the water. If they are sensed to be dispensing soap, the water stops briefly. The system is meant to remind users to make sure the faucet is completely closed when they leave. There are, however, many additional features that could be added as the scale of the intervention increases; for example, the drumming could be active only when someone is sensed to be present in the space.

Proof of Concept Video:

Files: