This project is inspired by the Ubicoustics project from the Future Interfaces Group here at CMU, and by an assignment for my Machine Learning + Sensing class where we taught a model to differentiate between various appliances using recordings made with our phones. That class is taught by Mayank Goel of Smash Lab, and is a great complement to Making Things Interactive.

With these capabilities in mind, I created a prototype of a system that gives physical feedback (a tap on your wrist) when it hears specific types of sounds, in this case when the level in a particular audio frequency band crosses a threshold. This could be developed into a more sophisticated system with more tap patterns and a machine learning classifier to pick out specific sounds. Here’s a quick peek.

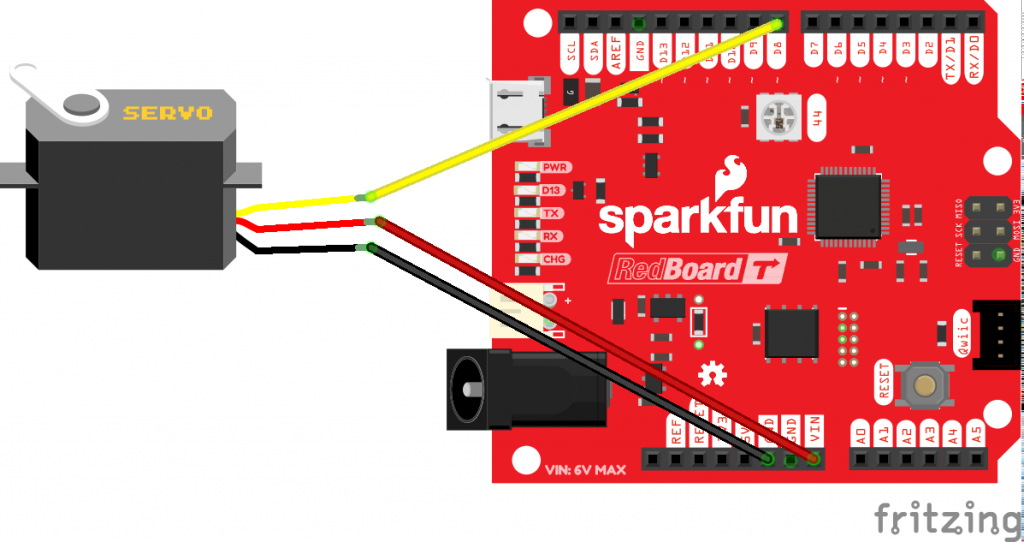

On the technical side, things are pretty straightforward, but all of the key elements are there. The servo connection is standard, and for now the servo code simply toggles whenever it receives any signal from the computer doing the listening. The messages are kept short and simple to minimize any potential lag.
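As a rough illustration of that messaging, here is a minimal Python sketch of the sending side, plus a small model of the "toggle on any byte" behavior. It is not the project's actual code: the one-byte payload `TAP_MESSAGE` and the function names are hypothetical, and it assumes the link is something with a `write()` method, such as a `serial.Serial` port from pyserial.

```python
import io

# Hypothetical one-byte payload. The receiver described above reacts to any
# byte, so the value itself is arbitrary; one byte keeps the message short.
TAP_MESSAGE = b"T"


def send_tap(port):
    """Write the tap message to any object with write(), e.g. a pyserial port."""
    written = port.write(TAP_MESSAGE)
    # Flush if the port supports it so the byte is not left sitting in a buffer.
    if hasattr(port, "flush"):
        port.flush()
    return written


def apply_messages(servo_up, received):
    """Model of the receiving side: every byte received flips the servo state."""
    for _ in received:
        servo_up = not servo_up
    return servo_up


if __name__ == "__main__":
    fake_port = io.BytesIO()  # stands in for real hardware
    send_tap(fake_port)
    print(fake_port.getvalue(), apply_messages(False, fake_port.getvalue()))
```

Because the receiver only toggles, two messages in a row return the servo to where it started, which is worth keeping in mind if a sound stays above the threshold for several frames.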

On the Python side, audio is captured with PyAudio, transformed into a frequency spectrum with SciPy signal processing, and then scaled down to 32 frequency bins using OpenCV (a trick I learned in ML+S class). Bins 8 and 9 are then watched for crossing a threshold, which amounts to saying: when there’s a spike somewhere around 5 kHz, toggle the motor.
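The pipeline above can be sketched roughly as follows. This is a simplified stand-in rather than the original code: it uses NumPy's FFT and plain averaging in place of SciPy and the OpenCV resize, and it works on a synthetic frame instead of live PyAudio input. The sample rate, frame size, threshold value, and zero-based bin indexing are all assumptions.

```python
import numpy as np

SAMPLE_RATE = 44100     # assumed; the actual rate is not stated
FRAME_SIZE = 1024       # assumed number of samples per frame
NUM_BINS = 32           # number of coarse frequency bins
WATCHED_BINS = (8, 9)   # bins to watch, treated here as zero-based indices
THRESHOLD = 5.0         # hypothetical level; would be tuned by ear in practice


def band_levels(frame, num_bins=NUM_BINS):
    """Average the magnitude spectrum of one frame down to num_bins bands."""
    frame = np.asarray(frame, dtype=np.float64)
    windowed = frame * np.hanning(len(frame))   # reduce spectral leakage
    magnitudes = np.abs(np.fft.rfft(windowed))
    # Drop trailing bins so the spectrum divides evenly, then mean-pool.
    usable = (len(magnitudes) // num_bins) * num_bins
    return magnitudes[:usable].reshape(num_bins, -1).mean(axis=1)


def should_tap(levels, threshold=THRESHOLD, watched=WATCHED_BINS):
    """True when any watched band rises above the threshold."""
    return any(levels[i] > threshold for i in watched)


def tone(freq_hz):
    """Synthetic test frame: a pure sine wave at freq_hz."""
    t = np.arange(FRAME_SIZE) / SAMPLE_RATE
    return np.sin(2 * np.pi * freq_hz * t)


if __name__ == "__main__":
    for freq in (1000, 6000):
        print(freq, "Hz ->", should_tap(band_levels(tone(freq))))
```

Under these assumed settings each band is about 689 Hz wide, so bands 8 and 9 together span roughly 5.5 to 6.9 kHz. A different sample rate or a one-based bin count would shift that range.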

With a bit more time and tinkering, a classifier could be trained in scikit-learn with high accuracy to trigger the tap only for certain sounds, say a microwave beeping that it’s done, or a fire alarm.
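To give a feel for what that might look like, here is a small sketch that trains a scikit-learn `RandomForestClassifier` on 32-band spectral features. Everything about the data is a placeholder: the class labels, the arbitrary stand-in tone frequencies, and the noisy sine waves are synthetic substitutes for real labeled recordings, and the choice of a random forest is an assumption rather than a recommendation.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

SAMPLE_RATE = 44100   # assumed
FRAME_SIZE = 1024     # assumed


def band_features(frame, num_bins=32):
    """Magnitude spectrum of one frame averaged into num_bins bands."""
    windowed = np.asarray(frame, dtype=np.float64) * np.hanning(len(frame))
    magnitudes = np.abs(np.fft.rfft(windowed))
    usable = (len(magnitudes) // num_bins) * num_bins
    return magnitudes[:usable].reshape(num_bins, -1).mean(axis=1)


def synthetic_frame(freq_hz, rng):
    """Placeholder for a real recording: a sine tone plus a little noise."""
    t = np.arange(FRAME_SIZE) / SAMPLE_RATE
    return np.sin(2 * np.pi * freq_hz * t) + 0.1 * rng.standard_normal(FRAME_SIZE)


rng = np.random.default_rng(0)

# Hypothetical labels mapped to arbitrary stand-in frequencies (not measured).
CLASSES = {"microwave_beep": 2000.0, "fire_alarm": 3200.0}

features, labels = [], []
for label, freq in CLASSES.items():
    for _ in range(40):
        jitter = rng.uniform(-50.0, 50.0)   # vary the pitch slightly
        features.append(band_features(synthetic_frame(freq + jitter, rng)))
        labels.append(label)

clf = RandomForestClassifier(n_estimators=50, random_state=0)
clf.fit(np.array(features), labels)


def classify(frame):
    """Predict the label for a single audio frame."""
    return clf.predict(band_features(frame).reshape(1, -1))[0]


if __name__ == "__main__":
    print(classify(synthetic_frame(3200.0, rng)))
```

With real recordings, the synthetic frames would be replaced by labeled clips of each appliance, and a held-out test set would be needed before trusting the classifier to drive the tap.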

The system could also become part of a larger sensor network, aware of both real-world and virtual events, that triggers a distinct tap for each event the user chooses.